Study of Autonomous Capture and Detumble of Non-Cooperative Target by a Free-Flying Space Manipulator Using an Air-Bearing Platform

Recommended publications

-

Russia's Posture in Space

Russia’s Posture in Space: Prospects for Europe. Executive Summary. Prepared by the European Space Policy Institute. Marco ALIBERTI, Ksenia LISITSYNA. May 2018. Table of Contents: Background and Research Objectives; Domestic Developments in Russia’s Space Programme; Russia’s International Space Posture; Prospects for Europe. Background and Research Objectives: For the 50th anniversary of the launch of Sputnik-1, in 2007, the rebirth of Russian space activities appeared well on its way. After the decade-long crisis of the 1990s, the country’s political leadership guided by President Putin gave new impetus to the development of national space activities and put the sector back among the top priorities of Moscow’s domestic and foreign policy agenda. Supported by the progressive recovery of Russia’s economy, renewed political stability, and an improving external environment, Russia re-asserted strong ambitions and the resolve to regain its original position on the international scene. Towards this, several major space programmes were adopted, including the Federal Space Programme 2006-2015, the Federal Target Programme on the development of Russian cosmodromes, and the Federal Target Programme on the redeployment of GLONASS. This renewed commitment to the development of space activities was duly reflected in a sharp increase in the country’s launch rate and space budget throughout the decade. Thanks to the funds made available by flourishing energy exports, Russia’s space expenditure continued to grow even in the midst of the global financial crisis. Besides new programmes and increased funding, the spectrum of activities was also widened to encompass a new focus on space applications and commercial products. -

Back to the Future? Probing the Kuiper Belt

SpaceFlight. A British Interplanetary Society publication. Volume 62 No.7, July 2020, £5.25. SPACE PLANES: back to the future? Probing the Kuiper Belt. The man behind the ISS. Remembering Dr Fred Singer. CONTENTS. Features. Multiple stations pledge: We look at a critical assessment of the way science is conducted at the International Space Station and finds it wanting. The man behind the ISS: The Editor reflects on the life of recently deceased Jim Beggs, the NASA Administrator for whom the building of the ISS was his supreme achievement. Why don’t we just wing it? Nick Spall FBIS examines the balance between winged lifting vehicles and semi-ballistic capsules, arguing that the former have been grossly overlooked. Parallels with Apollo: David Baker looks beyond the initial return to the Moon by astronauts and examines the plan for a sustained presence on the lunar surface. Probing further in the Kuiper Belt: Alan Stern provides another update on the pioneering work of New Horizons. Letter from the Editor: We are particularly pleased this month to have two features which cover the spectrum of astronautical activities. Nick Spall gives us his critical assessment of both winged and blunt-body re-entry vehicles for human space flight and Alan Stern reports on his research at the very edge of the connected solar system – the Kuiper Belt. We think of the internet and how it helps us communicate and stay in touch, especially in these times of difficulty. But the fact that in less than a lifetime we have gone from a tiny bleeping ball in -

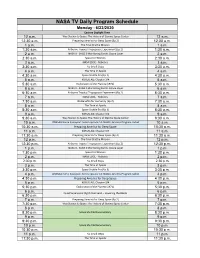

NASA TV Schedule for Web (Week of 6-22-2020).Xlsx

NASA TV Daily Program Schedule Monday - 6/22/2020 Eastern Daylight Time 12 a.m. Way Station to Space: The History of Stennis Space Center 12 a.m. 12:30 a.m. Preparing America for Deep Space (Ep.2) 12:30 a.m. 1 a.m. The Final Shuttle Mission 1 a.m. 1:30 a.m. Airborne Tropical Tropopause Experiment (Ep.2) 1:30 a.m. 2 a.m. NASA X - SAGE 3 Monitoring Earths Ozone Layer 2 a.m. 2:30 a.m. Space for Women 2:30 a.m. 3 a.m. NASA EDGE - Robotics 3 a.m. 3:30 a.m. No Small Steps 3:30 a.m. 4 a.m. The Time of Apollo 4 a.m. 4:30 a.m. Space Shuttle Era (Ep.3) 4:30 a.m. 5 a.m. KORUS-AQ: Chapter 3/4 5 a.m. 5:30 a.m. Exploration of the Planets (1971) 5:30 a.m. 6 a.m. NASA X - SAGE 3 Monitoring Earths Ozone Layer 6 a.m. 6:30 a.m. Airborne Tropical Tropopause Experiment (Ep.2) 6:30 a.m. 7 a.m. NASA EDGE - Robotics 7 a.m. 7:30 a.m. ISS Benefits for Humanity (Ep.2) 7:30 a.m. 8 a.m. The Time of Apollo 8 a.m. 8:30 a.m. Space Shuttle Era (Ep.3) 8:30 a.m. 9 a.m. KORUS-AQ: Chapter 3/4 9 a.m. 9:30 a.m. Way Station to Space: The History of Stennis Space Center 9:30 a.m. -

Robotic Arm.Indd

Mission X: Train Like an Astronaut. A Robotic Arm. Ages: 8-12. Topic: Engineering design and teamwork. Standards: This activity is aligned to national standards in science, technology, health and mathematics. Next Generation: 3-5-ETS1-2. Generate and compare multiple possible solutions to a problem based on how well each is likely to meet the criteria and constraints of the problem. 3-5-ETS1-3. Plan and carry out fair tests in which variables are controlled and failure points are considered to identify aspects of a model or prototype that can be improved. EDUCATOR SECTION (PAGES 1-7). STUDENT SECTION (PAGES 8-15). Background: Why do we need robotic arms when working in space? As an example, try holding a book in your hands straight out in front of you and not moving them for one or two minutes. After a while, do your hands start to shake or move around? Imagine how hard it would be to hold your hands steady for many days in a row, or to lift something really heavy. Wouldn’t it be nice to have a really long arm that never gets tired? Well, to help out in space, scientists have designed and used robotic arms for years. On Earth, scientists have designed robotic arms for everything from moving heavy equipment to performing delicate surgery. Robotic arms are important machines that help people work on Earth as well as in space. [Image: Astronaut attached to a robotic arm on the ISS.] Look at your arms once again. Your arms are covered in skin for protection. -

The International Space Station Partners: Background and Current Status

The Space Congress® Proceedings 1998 (35th) Horizons Unlimited Apr 28th, 2:00 PM Paper Session I-B - The International Space Station Partners: Background and Current Status Daniel V. Jacobs Manager, Russian Integration, International Partners Office, International Space Station Program, NASA, JSC Follow this and additional works at: https://commons.erau.edu/space-congress-proceedings Scholarly Commons Citation Jacobs, Daniel V., "Paper Session I-B - The International Space Station Partners: Background and Current Status" (1998). The Space Congress® Proceedings. 18. https://commons.erau.edu/space-congress-proceedings/proceedings-1998-35th/april-28-1998/18 This Event is brought to you for free and open access by the Conferences at Scholarly Commons. It has been accepted for inclusion in The Space Congress® Proceedings by an authorized administrator of Scholarly Commons. For more information, please contact [email protected]. THE INTERNATIONAL SPACE STATION: BACKGROUND AND CURRENT STATUS Daniel V. Jacobs Manager, Russian Integration, International Partners Office International Space Station Program, NASA Johnson Space Center Introduction The International Space Station, as the largest international civil program in history, features unprecedented technical, managerial, and international complexity. Seven international partners and participants encompassing fifteen countries are involved in the ISS. Each partner is designing, developing and will be operating separate pieces of hardware, to be integrated on-orbit into a single orbital station. Mission control centers, launch vehicles, astronauts/cosmonauts, and support services will be provided by multiple partners, but functioning in a coordinated, integrated fashion. A number of major milestones have been accomplished to date, including the construction of major elements of flight hardware, the development of operations and sustaining engineering centers, astronaut training, and seven Space Shuttle/Mir docking missions. -

ESPInsights: The Global Space Activity Monitor

ESPInsights: The Global Space Activity Monitor. Issue 6, April-June 2020. CONTENTS. FOCUS: The Crew Dragon mission to the ISS and the Commercial Crew Program. SPACE POLICY AND PROGRAMMES. EUROPE: COVID-19 and the European space sector; Space technologies for European defence; ESA Earth Observation Missions; Thales Alenia Space among HLS competitors; Advancements for the European Service Module; Airbus for the Martian Sample Fetch Rover; New appointments in ESA, GSA and Eurospace; Italy introduces Platino, regions launch Mirror Copernicus; DLR new research observatory -

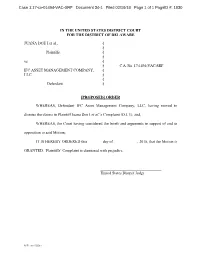

IFC-AMC Motion to Dismiss

Case 1:17-cv-01494-VAC-SRF Document 34-1 Filed 02/16/18 Page 1 of 1 PageID #: 1030 IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE JUANA DOE I et al., § § Plaintiffs, § § vs. § § C.A. No. 17-1494-VAC-SRF IFC ASSET MANAGEMENT COMPANY, § LLC, § § Defendant. § [PROPOSED] ORDER WHEREAS, Defendant IFC Asset Management Company, LLC, having moved to dismiss the claims in Plaintiff Juana Doe I et al.’s Complaint (D.I. 1); and, WHEREAS, the Court having considered the briefs and arguments in support of and in opposition to said Motion; IT IS HEREBY ORDERED this _______ day of ____________, 2018, that the Motion is GRANTED. Plaintiffs’ Complaint is dismissed with prejudice. _______________________________ United States District Judge RLF1 18887565v.1 Case 1:17-cv-01494-VAC-SRF Document 35 Filed 02/16/18 Page 1 of 51 PageID #: 1031 IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE JUANA DOE I et al., § § Plaintiffs, § § vs. -

Expedition 63

National Aeronautics and Space Administration. 20 Years on the International Space Station. INTERNATIONAL SPACE STATION EXPEDITION 63. Soyuz MS-16. Launch: April 9, 2020. Landing: October 2020. CHRIS CASSIDY (NASA), Commander. Born: Salem, Massachusetts. Interests: Traveling, biking, camping, snow skiing, weight lifting, running. Spaceflights: STS-127, Exp 35/36. Bio: https://go.nasa.gov/2NsLd0s Twitter: @Astro_SEAL. ANATOLY IVANISHIN (Roscosmos), Flight Engineer. Born: Irkutsk, Soviet Union. Spaceflights: Exp 29/30, Exp 48/49. Bio: https://go.nasa.gov/2uy7DqK IVAN VAGNER (Roscosmos), Flight Engineer. Born: Severoonezhsk, Russia. Spaceflights: First flight. Bio: https://go.nasa.gov/2CgZD1h Twitter: @ivan_mks63. Expedition 63 began in April 2020 and ends in October 2020. This expedition will include research investigations and technology demonstrations not possible on Earth to advance scientific knowledge of Earth, space, physical and biological sciences. Stay up to date with the mission at the following web page: https://www.nasa.gov/mission_pages/station/expeditions/expedition63/index.html During Expedition 63, scientists will collect standardized data from crew members to continue expanding our understanding of how human physiology responds to long-duration life in microgravity, and will test life support technologies that will be vital to our continued exploration of deep space. Follow the latest ISS Research and Technology news at: www.nasa.gov/stationresearchnews s-Flame: The Advanced Combustion via Microgravity Experiments (ACME) project is a series of independent studies of flames produced by burning gas. Capillary Driven Microfluidics: On long space missions such as flights to Mars, crew members need to be able to diagnose and treat anyone who gets sick. Many medical diagnostic devices function by moving liquids -

A Call for a New Human Missions Cost Model

A Call For A New Human Missions Cost Model. NASA 2019 Cost and Schedule Analysis Symposium, NASA Johnson Space Center, August 13-15, 2019. Joseph Hamaker, PhD; Christian Smart, PhD. Galorath. Human Missions Cost Model Advocates. Dr. Joseph Hamaker, Director, NASA and DoD Programs: • Founding Director of the Cost Analysis Division at NASA Headquarters • Originator of NASA’s NAFCOM cost model, the NASA QuickCost Model, the NASA Cost Analysis Data Requirement and the NASA ONCE database • Recognized expert on parametrics. Dr. Christian Smart, Chief Scientist: • Former Director for Cost Analytics and Parametric Estimating for the U.S. Missile Defense Agency • Oversaw development of the NASA/Air Force Cost Model (NAFCOM) • Provides subject matter expertise to NASA Headquarters, DARPA, and Space Development Agency. Agenda: Historical human space projects; Why consider a new Human Missions Cost Model; Database for a Human Missions Cost Model. • NASA has over 50 years of Human Space Missions experience • NASA’s International Partners have accomplished additional projects • There are around 70 projects that can provide cost and schedule data • This talk will explore how that data might be assembled to form the basis for a Human Missions Cost Model. WHY A NEW HUMAN MISSIONS COST MODEL? NASA’s Artemis Program plans to land humans on the moon by 2024. Artemis needs cost and schedule estimates. Lots of projects: Lunar Gateway, Orion, landers, SLS, commercially provided elements (which we may want to independently estimate). Existing tools have some applicability but it seems obvious (to us) that a dedicated HMCM is needed. Some of these elements have ongoing cost trajectories (e.g. [...] And this can be done: all we [...] -

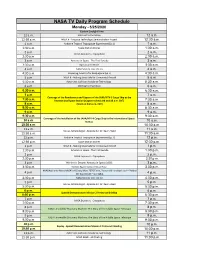

NASA TV Schedule for Web (Week of 5-25-2020)(1).Xlsx

NASA TV Daily Program Schedule Monday - 5/25/2020 Eastern Daylight Time 12 a.m. 300 Feet to the Moon 12 a.m. 12:30 a.m. NASA X - Airspace Technology Demonstration Project 12:30 a.m. 1 a.m. Airborne Tropical Tropopause Experiment (Ep.1) 1 a.m. 1:30 a.m. Space Station Stories 1:30 a.m. 2 a.m. 2 a.m. NASA Explorers - Cryosphere 2:30 a.m. 2:30 a.m. 3 a.m. America in Space - The First Decade 3 a.m. 3:30 a.m. Operation IceBridge 3:30 a.m. 4 a.m. NASA Science Live: On Ice 4 a.m. 4:30 a.m. Preparing America for Deep Space (Ep.1) 4:30 a.m. 5 a.m. NASA X - Making Skies Safe for Unmanned Aircraft 5 a.m. 5:30 a.m. Automatic Collision Avoidance Technology 5:30 a.m. 6 a.m. 300 Feet to the Moon 6 a.m. 6:30 a.m. 6:30 a.m. 7 a.m. 7 a.m. Coverage of the Rendezvous and Capture of the JAXA/HTV-9 Cargo Ship at the 7:30 a.m. International Space Station (Capture scheduled at 8:15 a.m. EDT) 7:30 a.m. 8 a.m. (Starts at 6:45 a.m. EDT) 8 a.m. 8:30 a.m. 8:30 a.m. 9 a.m. 9 a.m. 9:30 a.m. 9:30 a.m. Coverage of the Installation of the JAXA/HTV-9 Cargo Ship to the International Space 10 a.m. -

Expedition 63

National Aeronautics and Space Administration. International Space Station Mission Summary. EXPEDITION 63 began in April 2020 and ends in October 2020. This expedition will include research investigations focused on biology, Earth science, human research, physical sciences and technology development, providing the foundation for continuing human spaceflight beyond low-Earth orbit to the Moon and Mars. THE CREW: Chris Cassidy (NASA), Commander. Born: Salem, Massachusetts. Spaceflights: STS-127, Exp. 35/36. Bio: https://go.nasa.gov/2NsLd0s Instagram: @Astro_SEAL. Anatoly Ivanishin (Roscosmos), Flight Engineer. Born: Irkutsk, Russia. Spaceflights: Exp. 29/30, Exp. 48/49. Bio: https://go.nasa.gov/2uy7DqK Ivan Vagner (Roscosmos), Flight Engineer. Born: Severoonezhsk, Russia. Spaceflights: First flight. Bio: https://go.nasa.gov/3e8efhq Instagram: @ivan_mks63. THE SCIENCE: What are some investigations the crew is operating? During Expedition 63, scientists will collect standardized data from crew members to continue expanding our understanding of how human physiology responds to long-duration life in microgravity, and will test life support technologies that will be vital to our continued exploration of deep space. ■ ACE-T-Ellipsoids: This investigation creates three-dimensional colloids, small particles suspended within a fluid medium, and uses temperature to control the density and behavior of the particles. Colloids can organize into various structures, called self-assembled colloidal structures, which could enable 3D printing of replacement parts and repair of facilities on future long- [...] across the duration of the International Space Station Program that helps characterize the risks of living in space and how humans adapt to those risks. Scientists can use the data to monitor the effectiveness of countermeasures and interpret astronaut health and performance outcomes, as well as to support future human research on planetary missions. -

NASA Television Schedule (Week of April 6Th) Updated.Xlsx

NASA TV Daily Program Schedule Monday All Times Eastern Time 12 a.m. 300 Feet to the Moon 12:30 a.m. NASA X - Airspace Technology Demonstration Project 1 a.m. Airborne Tropical Tropopause Experiment (Ep.1) 1:30 a.m. Space Station Stories 2 a.m. ISS Benefits for Humanity (Ep.1) 2:30 a.m. NASA Explorers - Cryosphere 3 a.m. 3:30 a.m. Operation IceBridge 4 a.m. KORUS-AQ: Chapter 1/2 4:30 a.m. Preparing America for Deep Space (Ep.1) 5 a.m. Space Shuttle Era (Ep.1) 5:30 a.m. Automatic Collision Avoidance Technology 6 a.m. Building Curiosity 6:30 a.m. NASA EDGE - 3D Printing 7 a.m. STEM in 30 - Fly Girls: Women in Aerospace 7:30 a.m. 300 Feet to the Moon 8 a.m. NASA X - Airspace Technology Demonstration Project 8:30 a.m. Airborne Tropical Tropopause Experiment (Ep.1) 9 a.m. Space Station Stories 9:30 a.m. ISS Benefits for Humanity (Ep.1) 10 a.m. NASA Explorers - Cryosphere 10:30 a.m. 11 a.m. Space Shuttle Era (Ep.4) 11:30 a.m. Astrobiology in the Field 12 p.m. NASA EDGE - 3D Printing 12:30 p.m. STEM in 30 - Fly Girls: Women in Aerospace 1 p.m. Operation IceBridge 1:30 p.m. KORUS-AQ: Chapter 1/2 2 p.m. Preparing America for Deep Space (Ep.1) 2:30 p.m. Space Shuttle Era (Ep.1) 3 p.m. Automatic Collision Avoidance Technology 3:30 p.m.