Ncsccss 2K20)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Village Lanad To

Hospital's obstetrics Village lanad to section to stay open close in October Cass City is “going out of Although village officials ternational Surplus Lines, for extending Ale Street Thanks to a last minute the landfill business” fol- expect eventually to see a will not be renewed by the south through the property. replacement, Ken Jensen, lowing approval by the Vil- savings by closing the land- company. The council indicated it administrator of Hills and lage Council Monday night fill, there will be ongoing The policy, which costs will send a letter of appreci- Dales General Hospital, not to reapply for a license costs (about $3,600 per about $1,200 per year, is set ation to Matt Prieskorn for Cass City, has announced to operate the village’s 40- year) to monitor the site for to expire Aug. 4, LaPonsie his organization of a project the facility’s obstetrical acre Type III landfill. the next 5 years. said. in which new lights were unit will remain open. Also approved during the Councilmen approved a recently purchased and in- 65-minute monthly meeting ZONING VIOLATION resolution to seek a quote stalled at the basketball According to Jensen, an was authorization for the for the policy from the court. Prieskorn raised announcement about the village attorney to file a suit Turning to the zoning vio- Michigan Municipal some $800 through clubs unit’s closing had been an- seeking an end to a zoning lation, Trustee Joanne Hop- League. and private donations, ticipated when Dr. Sang ordinance violation by 2 per, who chairs the coun- Also Monday, the council which covered the entire Park, obstetrics and area businessmen. -

Designing Design Tools: Overview

Designing Design Tools: Overview. A good, attractive game can be built with advanced, usable game design tools. The most important of these is the LEVEL EDITOR, which can be thought of as the development or level environment. Level editors are an interface used by 3D and 2D modelers and by programmers. Whereas first-generation games generally consisted of a single level, today there are games with hundreds of levels. 1. UNREAL EDITOR / UDK Game Engine: Unreal Engine 3 (UDK) Games: Unreal Tournament 3, Bioshock 1/2, Bioshock Infinite, Gears of War Series, Borderlands 1/2, Dishonored Functionality: The most important feature expected of a level editor is usability. It should offer shortcuts and hotkeys so that work goes quickly. Many features should be configurable and able to be switched on and off. It should run stably. 2. HAMMER SOURCE Game Engine: Source Engine Games: L4D2/L4D1, CS: GO, CS:S, Day of Defeat: Source, Half-Life 2 and its Episodes, Portal 1 and 2, Team Fortress 2. Visualization - Changes should be visible at the same time both from the player's point of view and from outside. The author sums this up as “What you see is what you get”. - Camera movement should be easy to change, and there should be a function called “Flight Mode” that lets the designer move quickly from one place in the level to another without colliding with other game objects, even passing through them. - What the editor shows and what the player sees should match; otherwise playability will suffer and the game will not look good. - The editor may need multiple view options. In some cases the top view, the front view, and the camera view should all be visible at the same time. 3. SANDBOX EDITOR / CRYENGINE 3 SDK Game Engine: CryEngine 3 Games: Crysis 1, 2 and 3, Warface, Homefront 2 The Big Picture: Level editors must also give the designer any additional information that makes the work easier. -

Expectations of Electronic Entertainment Fans Regarding Graphics in Computer Games

Studia Informatica Pomerania no. 2/2016 (40) | www.wnus.edu.pl/si | DOI: 10.18276/si.2016.40-07 | 71–85 EXPECTATIONS OF ELECTRONIC ENTERTAINMENT FANS REGARDING GRAPHICS IN COMPUTER GAMES Agnieszka Szewczyk1 Uniwersytet Szczeciński 1 e-mail: [email protected] Keywords: computer games, graphics engines, electronic entertainment Abstract: The aim of the article is to present information about the graphics engines used in modern computer games. It also identifies the engines that are currently most popular and sets out their main advantages and disadvantages. The graphics engines are compared on the basis of surveys conducted on internet forums that bring together fans of electronic entertainment. Introduction: When the first personal computer appeared, an entirely new field opened up for many creative and inventive people. Who could have assumed, however, that this industry would grow to such a size and come to be taken seriously from the point of view of business and profit? Yet that is what happened, and today computer games, and electronic entertainment as a whole, should be treated as a driver of the development of digital technologies, in both software and hardware. From the point of view of graphics, this is reflected not only in the ever better quality and realism of games, but also in all forms of multimedia. It is games that largely dictate the pace at which digital technology develops. Today the companies that make games, which at their beginnings most often consisted of a handful of enthusiasts, are giants, such as Microsoft or Electronic Arts. Producers most often do not limit themselves to single projects, developing several titles simultaneously in studios in different corners of the world. -

A Web-Based Collaborative Multimedia Presentation Document System

ABSTRACT With the distributed and rapidly increasing volume of data and expeditious development of modern web browsers, web browsers have become a possible legitimate vehicle for remote interactive multimedia presentation and collaboration, especially for geographically dispersed teams. To our knowledge, although there are a large number of applications developed for these purposes, there are some drawbacks in prior work including the lack of interactive controls of presentation flows, general-purpose collaboration support on multimedia, and efficient and precise replay of presentations. To fill the research gaps in prior work, in this dissertation, we propose a web-based multimedia collaborative presentation document system, which models a presentation as media resources together with a stream of media events, attached to associated media objects. It represents presentation flows and collaboration actions in events, implements temporal and spatial scheduling on multimedia objects, and supports real-time interactive control of the predefined schedules. As all events are represented by simple messages with an object-prioritized approach, our platform can also support fine-grained precise replay of presentations. Hundreds of kilobytes could be enough to store the events in a collaborative presentation session for accurate replays, compared with hundreds of megabytes in screen recording tools with a pixel-based replay mechanism. A WEB-BASED COLLABORATIVE MULTIMEDIA PRESENTATION DOCUMENT SYSTEM by Chunxu Tang B.S., Xiamen University, 2013 M.S., Syracuse University, 2015 Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical and Computer Engineering. Syracuse University June 2019 Copyright © Chunxu Tang 2019 All Rights Reserved To my parents. -
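The event-based model in the abstract above can be illustrated with a minimal sketch. Everything named here (`MediaEvent`, the `action` strings, the `replay` function, the JSON encoding) is a hypothetical illustration and not the dissertation's actual design; in particular, the "object-prioritized approach" is not modeled. The sketch only shows why a log of small event messages is enough to rebuild the presentation state at any point in time.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass(frozen=True)
class MediaEvent:
    """One message in the event stream (all field names are assumptions)."""
    timestamp_ms: int          # when the event happened in the session
    object_id: str             # media object the event is attached to
    action: str                # e.g. "add", "update", "remove"
    payload: dict = field(default_factory=dict)


def replay(events, until_ms):
    """Rebuild the state of every media object as of `until_ms`."""
    state = {}
    for ev in sorted(events, key=lambda e: e.timestamp_ms):
        if ev.timestamp_ms > until_ms:
            break
        if ev.action == "remove":
            state.pop(ev.object_id, None)
        else:
            # "add" and "update" both merge the payload into the object.
            state.setdefault(ev.object_id, {}).update(ev.payload)
    return state


def log_size_bytes(events):
    """Size of the event log when serialized as JSON text."""
    return len(json.dumps([asdict(e) for e in events]).encode("utf-8"))


events = [
    MediaEvent(0, "img1", "add", {"x": 0, "y": 0}),
    MediaEvent(1000, "img1", "update", {"x": 50}),
    MediaEvent(2000, "img1", "remove"),
]
```

Calling `replay(events, 1500)` rebuilds the image at its moved position, and `log_size_bytes(events)` shows that each event costs only tens of bytes, which is the intuition behind the kilobytes-versus-megabytes comparison with pixel-based recording.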

'Cracks' Ground 24 More DC-10S

mily quarrel; father held Darnel Suotun » beuf o*U u B* •dqiur •K and the e eiperted to have Mtt, a police additional liornution on the uKidwl by own today Mirad several etflk fn m Oir none! had . made a determiiiauon of t complei before respoMkim BetidM Sft Beih irly tlui morni |, e»cept to say •ctt, Patraimw Uat Use** aad Chart* Davit reapooaad ilensmCare totfcecaSi Dataetiwc Sergeant Thomas Itmufcim it investigating The Daily Register VOL. 101 NO. 293 SHREWSBURY, N.J. TUESDAY, JUNE 5, 1979 15 CENTS 'Cracks' ground 24 more DC-10s WASHINGTON (AP) - Federal officials, concerned that The intensive inspections of the jets were triggered by last removed the engine and pylon in one section, instead of two than 20 of the nation's 137 DC-10s would be affected improper maintenance procedures may have produced a crack month's crash of an American Airlines DC-10 as it was taking steps. Flight 191 had undergone the same "engine removal and Under the order, airlines are urged to immediately and led to the nation's worst air crash, have grounded nearly off from Chicago's O'Hare International Airport. The crash, reinstallation procedure" on March 30, the FAA order said all planes which had their engines and pylons removed In 0 > two dozen DC-10s. which occurred after one of the plane's three engines fell off, McDonnell Douglas, maker of the DC-10, specifies in a section. The grounding was ordered after similar cracks were claimed 274 lives. service bulletin that the engine and pylon should be removed American's method of removing and reinstalling the p] discovered in two jets that had gone through the same pro- The Federal Aviation Administration ordered the latest separately involved using a forklift. -

The Design of a Rocket Propulsion Labview Data Acquisition And

University of Texas at El Paso DigitalCommons@UTEP Open Access Theses & Dissertations 2018-01-01 The Design Of A Rocket Propulsion Labview Data Acquisition And Control System Dylan Allan Ott University of Texas at El Paso, [email protected] Follow this and additional works at: https://digitalcommons.utep.edu/open_etd Part of the Aerospace Engineering Commons Recommended Citation Ott, Dylan Allan, "The Design Of A Rocket Propulsion Labview Data Acquisition And Control System" (2018). Open Access Theses & Dissertations. 1508. https://digitalcommons.utep.edu/open_etd/1508 This is brought to you for free and open access by DigitalCommons@UTEP. It has been accepted for inclusion in Open Access Theses & Dissertations by an authorized administrator of DigitalCommons@UTEP. For more information, please contact [email protected]. THE DESIGN OF A ROCKET PROPULSION LABVIEW DATA ACQUISITION AND CONTROL SYSTEM DYLAN ALLAN OTT Master’s Program in Mechanical Engineering APPROVED: Ahsan Choudhuri, Ph.D., Chair Norman Love, Ph.D. Jack Chessa, Ph.D. Luis Rene Contreras, Ph.D. Charles Ambler, Ph.D. Dean of the Graduate School Copyright © by Dylan Allan Ott 2018 Dedication I would like to dedicate this thesis to my friends and family. Without their love and support, I would not have made it as far as I did. THE DESIGN OF A ROCKET PROPULSION LABVIEW DATA ACQUISITION AND CONTROL SYSTEM by DYLAN ALLAN OTT, BSME THESIS Presented to the Faculty of the Graduate School of The University of Texas at El Paso in Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE Department of Mechanical Engineering THE UNIVERSITY OF TEXAS AT EL PASO May 2018 Acknowledgements I would like to start off by thanking cSETR director Dr. -

ALL ABO Ardt::~:~WARE Morris · NEWARK, DELAWARE

Newarker in Hall of Fame/19a 25¢ CHS's Phi Kappa Kicka/lb October 8, 1986 Newark, Del. ALL ABOARD Morris · NEWARK, DELAWARE ... the Wilmington & Western for a Library scenic trip through Red Clay Valley event Saturday Librarian of Congress Dr. Daniel J. Boorstin will be the featured speaker when the University of Delaware rededicates its Hugh M. Morris Library on Saturday morning, Oct. 11. The rededication is being held to mark completion of the $15 million renovation and expansion of the library, a project which has provided increased space for students and book collections and which has given the University the region's first computerized card catalogue system. That system, known as DELCAT, has made Morris Library a "book oriented high technology library, and that's a wonderful combination," according to Susan Brynteson, U. of D. director of libraries. DELCAT, which began operating last Thursday, features an easy to read, full color screen. It enables users to quickly search the library's collection, using authors, titles or subjects as by author, title or subject. Currently, 22 DELCAT terminals are placed in the card catalogue area just inside the library's new Mall entrance and Photo/Butch Comegys additional terminals are scattered Engineer Donald Fenstermacher with the Wilmington & Western steam engine as it prepares to throughout the facility. pull out of Greenbank Station. Brynteson said officials hope to have 100 terminals in place by Of approximately 125 steam railroads from Greenbank through Mount Cuba to winter, with some at University left in the United States, one of the most Hockessin, families and friends have satellite campuses. -

Tom Clancy's Rainbow Six Siege Aretha C

JULY 2019 ISSUE 19 ® TITI MAGAZINE Titimag.com EDITOR Dickson Max Prince ® @dicksonprincemax CONTRIBUTORS Anita .W. Dickson Efenudu Ejiro Michael TABLE OF CONTENTS Bekesu Anthony Dickson Max Prince Dying Light: The Following Ernest .O. Nicole Carlin Tom Clancy's Rainbow Six Siege Aretha C. Smith Warface Doom Eternal PHOTOGRAPHER Esegine Bright Kelvin Call of Duty: Black Ops 4 @bright_kevin MODEL Infinix S4 Queeneth @Queen.mia_ng Oppo F11 Pro Oppo F7 PUBLISHERS Oppo F5 Pucutiti.Inc® Vivo V9 Koenigsegg BMW M2 COUPÉ Movies The Best Skin Products for Black Skin How to Get Naturally Clear & Glowing Skin for Black titimag.com Woman For more info [email protected] +2348134428331 +2348089216836 Titi Magazine® and all Titi related Sub sections are trademark of Pucutiti.inc® The Pucutiti® logo, Titi Magazine® logo, Titi Store® logo, Titi Comics® logo, Titi Games® logo, Titi Animation® logo, TitiWeb Developers® logo, Titi Studios® logo, Titi Messenger® logo are all trade mark of Pucutiti.inc. Only Pucutiti.Inc® reserve the rights to all Titi Magazine® and all Titi® related Copyright © titimag July 2019 Sub sections. Dying Light: The Following Dying Light: The Following is an expansion pack for the open-world first-person survival horror video game Dying Light. The game was developed by Techland, published by Warner Bros. Interactive Entertainment, and released for Microsoft Windows, Linux, PlayStation 4, and Xbox One on February 9, 2016. The expansion adds characters, a story campaign, weapons, and gameplay mechanics. Dying Light: The Following – Enhanced Edition includes Dying Light, Dying Light: The Following, and downloadable content released for the original game, except for three DLCs: Harran Ranger Bundle, Gun Psycho Bundle and Volatile Hunter Bundle. -

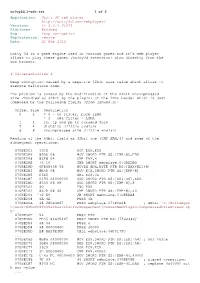

Application: Unity 3D Web Player

unity3d_1-adv.txt

Application:  Unity 3D web player
              http://unity3d.com/webplayer/
Versions:     <= 3.2.0.61061
Platforms:    Windows
Bug:          heap corruption
Exploitation: remote
Date:         21 Feb 2012

Unity 3D is a game engine used in various games, and its web player lets users play these games (unity3d extension) directly in the web browser.

# Vulnerabilities #

Heap corruption caused by a negative 32-bit size value, which allows execution of malicious code. The problem is caused by modifying the 64-bit uncompressed size (handled as 32-bit by the plugin) in the LZMA header, which consists of just the following fields (from lzma86.h):

    Offset Size Description
      0     1   = 0 - no filter, pure LZMA
                = 1 - x86 filter + LZMA
      1     1   lc, lp and pb in encoded form
      2     4   dictSize (little endian)
      6     8   uncompressed size (little endian)

Reading of the 64-bit field as a 32-bit one (CMP EAX,4) and some of the subsequent operations:

    070BEDA3   33C0             XOR EAX,EAX
    070BEDA5   895D 08          MOV DWORD PTR SS:[EBP+8],EBX
    070BEDA8   83F8 04          CMP EAX,4
    070BEDAB   73 10            JNB SHORT webplaye.070BEDBD
    070BEDAD   0FB65438 05      MOVZX EDX,BYTE PTR DS:[EAX+EDI+5]
    070BEDB2   8B4D 08          MOV ECX,DWORD PTR SS:[EBP+8]
    070BEDB5   D3E2             SHL EDX,CL
    070BEDB7   0196 A4000000    ADD DWORD PTR DS:[ESI+A4],EDX
    070BEDBD   8345 08 08       ADD DWORD PTR SS:[EBP+8],8
    070BEDC1   40               INC EAX
    070BEDC2   837D 08 40       CMP DWORD PTR SS:[EBP+8],40
    070BEDC6  ^72 E0            JB SHORT webplaye.070BEDA8
    070BEDC8   6A 4A            PUSH 4A
    070BEDCA   68 280A4B07      PUSH webplaye.074B0A28  ; ASCII "C:/BuildAgent/work/b0bcff80449a48aa/PlatformDependent/CommonWebPlugin/CompressedFileStream.cpp"
    070BEDCF   53               PUSH EBX
    070BEDD0   FF35 84635407    PUSH DWORD PTR DS:[7546384]
    070BEDD6   6A 04            PUSH 4
    070BEDD8   68 00000400      PUSH 40000
    070BEDDD   E8 BA29E4FF      CALL webplaye.06F0179C
    ..
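The header layout listed in the advisory can be sketched in Python to show how a crafted 64-bit size turns negative when only its low 32 bits are kept. This is an illustration under stated assumptions, not the plugin's code: the function names and the `max_size` limit are hypothetical, and only the 14-byte field layout comes from the advisory.

```python
import struct

# 1 + 1 + 4 + 8 bytes, following the field table quoted from lzma86.h
LZMA86_HEADER_SIZE = 14


def parse_lzma86_header(data: bytes, max_size: int = 1 << 31) -> dict:
    """Parse the header and keep the size as a full 64-bit value.

    `max_size` is a hypothetical policy limit chosen for this sketch.
    """
    if len(data) < LZMA86_HEADER_SIZE:
        raise ValueError("truncated header")
    # "<" = little endian without padding; B = 1, I = 4, Q = 8 bytes (unsigned)
    filter_id, props, dict_size, unpacked = struct.unpack_from("<BBIQ", data, 0)
    if filter_id not in (0, 1):
        raise ValueError("unknown filter id")
    if unpacked >= max_size:
        raise ValueError("uncompressed size exceeds limit")
    return {
        "filter": filter_id,
        "props": props,
        "dict_size": dict_size,
        "uncompressed_size": unpacked,
    }


def low32_signed(value: int) -> int:
    """Keep only the low 32 bits and reinterpret them as a signed integer."""
    low = value & 0xFFFFFFFF
    return low - (1 << 32) if low & 0x80000000 else low


# A header whose size field has the top bit of the low dword set.
crafted = struct.pack("<BBIQ", 1, 0x5D, 1 << 16, 0x00000000FFFFFFF0)
```

Here `low32_signed(0xFFFFFFF0)` evaluates to -16, the kind of negative size the advisory describes, while `parse_lzma86_header(crafted)` raises `ValueError` because the full value is range-checked before any allocation would happen.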

Club Veedub Sydney. March 2008

Club Veedub. Aus Liebe zum Automobil Klub. Rolf Blaschke has rallied this 1966 1500 since 1972. March 2008 IN THIS ISSUE: Deutschland reiße Pt 1 All the latest VW news The VW cornerstone Driving around Australia Pt 1 Polo Bluemotion The Toy Department Hartees hamburgers Plus lots more... Club Veedub Sydney. www.clubvw.org.au A member of the NSW Council of Motor Clubs. ZEITSCHRIFT - March 2008 - Page 1 Club Veedub Sydney. Das Auto Klub. Club Veedub membership. Club Veedub Sydney Membership of Club Veedub Sydney is open to all Committee 2007-08. Volkswagen owners. The cost is $40 for 12 months. President: David Birchall (02) 9534 4825 [email protected] Monthly meetings. Monthly Club VeeDub meetings are held at Vice President: Bill Daws 0419 431 531 Greyhound Social Club Ltd., 140 Rookwood Rd, Yagoona, on [email protected] the third Thursday of each month from 7:30 pm. All our members, and visitors, are most welcome. Secretary and: Bob Hickman (02) 4655 5566 Public Officer: [email protected] Correspondence. Club Veedub Sydney Treasurer: Martin Fox 0411 331 121 PO Box 1135 [email protected] Parramatta NSW 2124 [email protected] Editor: Phil Matthews (02) 9773 3970 [email protected] Our magazine. Webmaster: Steve Carter 0439 133 354 Zeitschrift is published monthly by Club Veedub [email protected] Sydney. We welcome all letters and contributions of general VW interest. These may be edited for reasons of space, clarity, spelling or grammar. Deadline for all contributions Trivia Pro: John Weston (02) 9520 9343 is the first Thursday of each month. -

Detecting and Tracking Criminals in the Real World Through an Iot-Based System

sensors Article Detecting and Tracking Criminals in the Real World through an IoT-Based System Andrea Tundis 1,*, Humayun Kaleem 2 and Max Mühlhäuser 1 1 Department of Computer Science, Technische Universität Darmstadt, 64289 Darmstadt, Germany; [email protected] 2 Fraunhofer SIT, Institute for Secure Information Technology, 64295 Darmstadt, Germany; [email protected] * Correspondence: [email protected]; Tel.: +49-6151-16-23199 Received: 8 May 2020; Accepted: 3 July 2020; Published: 7 July 2020 Abstract: Criminals and related illegal activities represent problems that are neither trivial to predict nor easy to handle once they are identified. The Police Forces (PFs) typically base their strategies solely on their intra-communication, by neglecting the involvement of third parties, such as the citizens, in the investigation chain which results in a lack of timeliness among the occurrence of the criminal event, its identification, and intervention. In this regard, a system based on IoT social devices, for supporting the detection and tracking of criminals in the real world, is proposed. It aims to enable the communication and collaboration between citizens and PFs in the criminal investigation process by combining app-based technologies and embracing the advantages of an Edge-based architecture in terms of responsiveness, energy saving, local data computation, and distribution, along with information sharing. The proposed model as well as the algorithms, defined on the top of it, have been evaluated through a simulator for showing the logic of the system functioning, whereas the functionality of the app was assessed through a user study conducted upon a group of 30 users. -

City of Elgin, Illinois Council Agenda City Council Chambers

CITY OF ELGIN, ILLINOIS COUNCIL AGENDA CITY COUNCIL CHAMBERS Regular Meeting 7:00 P.M. February 28, 2018 Call to Order Invocation – Reverend Janie McCutchen, Second Baptist Church Pledge of Allegiance Roll Call Minutes of Previous Meetings – February 14, 2018 Recognize Persons Present Bids 1. 18-001 Community Development Block Grant Park Projects ($668,076) (This item must be removed from the Table) 2. 18-002 Medium Voltage Starter and Source Transfer Controls ($440,130) 3. Joint Purchasing Cooperative – Utilities Division Vehicle Purchases ($426,711) 4. Joint Purchasing Agreement - Microsoft Enterprise Software License Subscription Renewal ($131,295 annually for three year term) 5. Joint Purchasing Contract - Wheeled Coach Fire Ambulance ($250,362) Other Business (O) 1. Resolution Authorizing Execution of a Purchase of Services Agreement with the Downtown Neighborhood Association of Elgin for Economic Development Services 2. Resolution Authorizing Execution of an Owner Impact Fee Agreement with Watermark Apartments LLC for the Watermark at the Grove Development (Vantage Drive within the Grove at Randall) City Council Agenda – February 28, 2018 Page 2 3. Resolution Authorizing Execution of a Sponsor Impact Fee, Excess Cost, and Construction Agreement with Watermark IP IL LLC for the Watermark at the Grove Development (Vantage Drive within the Grove at Randall) 4. Ordinance Amending Chapter 6.06 of the Elgin Municipal Code, 1976, as Amended, Entitled "Alcoholic Liquor Dealers" to Amend the Number of Certain Available Liquor License Classifications **Consent Agenda (C) 1. Resolution Authorizing the Execution of a Proposal From TKB Associates, Inc. for the Purchase of Laserfiche Enterprise Content Management System Upgrade 2. Resolution Authorizing Execution of an Agreement with Elgin Men's Baseball League, Inc.