PHYS 391 – Lab 2: Measurement of the Speed of Light

Recommended publications

-

TEE Internal Core API Specification V1.1.2.50

GlobalPlatform Technology TEE Internal Core API Specification Version 1.1.2.50 (Target v1.2) Public Review June 2018 Document Reference: GPD_SPE_010 Copyright 2011-2018 GlobalPlatform, Inc. All Rights Reserved. Recipients of this document are invited to submit, with their comments, notification of any relevant patents or other intellectual property rights (collectively, “IPR”) of which they may be aware which might be necessarily infringed by the implementation of the specification or other work product set forth in this document, and to provide supporting documentation. The technology provided or described herein is subject to updates, revisions, and extensions by GlobalPlatform. This documentation is currently in draft form and is being reviewed and enhanced by the Committees and Working Groups of GlobalPlatform. Use of this information is governed by the GlobalPlatform license agreement and any use inconsistent with that agreement is strictly prohibited. TEE Internal Core API Specification – Public Review v1.1.2.50 (Target v1.2) THIS SPECIFICATION OR OTHER WORK PRODUCT IS BEING OFFERED WITHOUT ANY WARRANTY WHATSOEVER, AND IN PARTICULAR, ANY WARRANTY OF NON-INFRINGEMENT IS EXPRESSLY DISCLAIMED. ANY IMPLEMENTATION OF THIS SPECIFICATION OR OTHER WORK PRODUCT SHALL BE MADE ENTIRELY AT THE IMPLEMENTER’S OWN RISK, AND NEITHER THE COMPANY, NOR ANY OF ITS MEMBERS OR SUBMITTERS, SHALL HAVE ANY LIABILITY WHATSOEVER TO ANY IMPLEMENTER OR THIRD PARTY FOR ANY DAMAGES OF ANY NATURE WHATSOEVER DIRECTLY OR INDIRECTLY ARISING FROM THE IMPLEMENTATION OF THIS SPECIFICATION OR OTHER WORK PRODUCT. Copyright 2011-2018 GlobalPlatform, Inc. All Rights Reserved. The technology provided or described herein is subject to updates, revisions, and extensions by GlobalPlatform. -

Domain Tips and Tricks Lab

Installing a Domain Service for Windows: Domain Tips and Tricks Lab Novell Training Services www.novell.com OES10 ATT LIVE 2012 LAS VEGAS Novell, Inc. Copyright 2012-ATT LIVE-1-HARDCOPY PERMITTED. NO OTHER PRINTING, COPYING, OR DISTRIBUTION ALLOWED. Legal Notices Novell, Inc., makes no representations or warranties with respect to the contents or use of this documentation, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to revise this publication and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes. Further, Novell, Inc., makes no representations or warranties with respect to any software, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to make changes to any and all parts of Novell software, at any time, without any obligation to notify any person or entity of such changes. Any products or technical information provided under this Agreement may be subject to U.S. export controls and the trade laws of other countries. You agree to comply with all export control regulations and to obtain any required licenses or classification to export, re-export or import deliverables. You agree not to export or re-export to entities on the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export laws. You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses. -

Show Command Output Redirection

show Command Output Redirection The show Command Output Redirection feature provides the capability to redirect output from Cisco IOS command-line interface (CLI) show commands and more commands to a file. • Finding Feature Information, page 1 • Information About show Command Output Redirection, page 1 • How to Use the show Command Enhancement, page 2 • Additional References, page 2 • Feature Information for show Command Output Redirection, page 3 Finding Feature Information Your software release may not support all the features documented in this module. For the latest caveats and feature information, see Bug Search Tool and the release notes for your platform and software release. To find information about the features documented in this module, and to see a list of the releases in which each feature is supported, see the feature information table at the end of this module. Use Cisco Feature Navigator to find information about platform support and Cisco software image support. To access Cisco Feature Navigator, go to www.cisco.com/go/cfn. An account on Cisco.com is not required. Information About show Command Output Redirection This feature enhances the show commands in the Cisco IOS CLI to allow large amounts of data output to be written directly to a file for later reference. This file can be saved on local or remote storage devices such as Flash, a SAN Disk, or an external memory device. For each show command issued, a new file can be created, or the output can be appended to an existing file. Command output can optionally be displayed on-screen while being redirected to a file by using the tee keyword. -

APPENDIX a Aegis and Unix Commands

APPENDIX A Aegis and Unix Commands FUNCTION AEGIS BSD4.2 SYSS ACCESS CONTROL AND SECURITY change file protection modes edacl chmod chmod change group edacl chgrp chgrp change owner edacl chown chown change password chpass passwd passwd print user + group ids pst, lusr groups id +names set file-creation mode mask edacl, umask umask umask show current permissions acl -all Is -I Is -I DIRECTORY CONTROL create a directory crd mkdir mkdir compare two directories cmt diff dircmp delete a directory (empty) dlt rmdir rmdir delete a directory (not empty) dlt rm -r rm -r list contents of a directory ld Is -I Is -I move up one directory wd \ cd .. cd .. or wd .. move up two directories wd \\ cd . ./ .. cd . ./ .. print working directory wd pwd pwd set to network root wd II cd II cd II set working directory wd cd cd set working directory home wd- cd cd show naming directory nd printenv echo $HOME $HOME FILE CONTROL change format of text file chpat newform compare two files emf cmp cmp concatenate a file catf cat cat copy a file cpf cp cp Using and Administering an Apollo Network 265 copy std input to std output tee tee tee + files create a (symbolic) link crl In -s In -s delete a file dlf rm rm maintain an archive a ref ar ar move a file mvf mv mv dump a file dmpf od od print checksum and block- salvol -a sum sum -count of file rename a file chn mv mv search a file for a pattern fpat grep grep search or reject lines cmsrf comm comm common to 2 sorted files translate characters tic tr tr SHELL SCRIPT TOOLS condition evaluation tools existf test test -

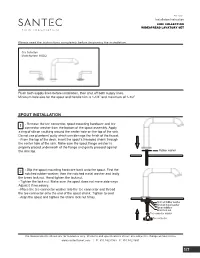

Installation Instruction

Rev 1/2020 Installation Instruction Please read the instructions completely before beginning the installation. Circ Collection Model Number: 3920CI Flush both supply lines before installation, then shut off both supply lines. Minimum hole size for the spout and handle trim is 1-1/4” and maximum of 1-1/2” SPOUT INSTALLATION 1 - Remove the tee connector, spout mounting hardware and tee connector washer from the bottom of the spout assembly. Apply a ring of silicon caulking around the center hole on the top of the sink. Do not use plumbers' putty which can damage the finish of the faucet. - From the top of the deck, insert the spout's threaded shank through the center hole of the sink. Make sure the spout flange washer is properly placed underneath of the flange and gently pressed against the sink top. Rubber washer 2 - Slip the spout mounting hardware back onto the spout. First the notched rubber washer, then the notched metal washer and lastly the brass lock nut. Hand tighten the lock nut. - Tighten the lock nut. Make sure the spout does not move side ways Adjust it if necessary. - Place the tee connector washer into the tee connector and thread the tee connector onto the end of the spout shank. Tighten to seal. - Align the spout and tighten the shank lock nut firmly. Notched rubber washer Notched metal washer Spout stabilizer Brass lock nut Tee connector washer Tee connector The measurements shown are for reference only. Products and specifications shown are subject to change without notice. www.santecfaucet.com | P: 310.542.0063 F: 310.542.5681 1/7 HANDLE TRIM INSTALLATION DO NOT DISASSEMBLE HANDLE ASSEMBLY - DROP-IN VALVES 1 - Both hot and cold side handle trims are pre-assmbled for faster installation. -

Keep TEE Probe Care Top-Of-Mind

Keep TEE Probe Care Top-Of-Mind Three Ways to Prevent Catastrophic TEE Probe Failures 1. Perform Frequent Quality Visual Inspections • When removed from storage • During removal • Before soaking in disinfectant • When set-up and connected to the scanner • After removal • After soaking in disinfectant • Before insertion • During pre-cleaning • Before storing 2. Perform Frequent and Time-based Leakage Testing Frequent • After EVERY patient exam, following a thorough visual inspection, under magnification, and prior to lengthy soak in disinfectant Time-based • Test once at the beginning of the soak cycle and once again at the end in order to reveal intermittent, slow leaks (the beginning of hard, catastrophic failures) 3. Establish a TEE Probe Preventative Maintenance Program • Address wearable items before they can contribute to larger, costly failures • Restore optimal performance and maintain uptime • Extend TEE probe lifecycyle • Suggested Intervals: • Bending rubber replacement - every 6-12 months • Articulation adjustment - every 6-12 months • Re-coating/re-labeling - every 12-18 months Industry Best-Practices for TEE Probe Care and Handling • Always use a protective tip cover when the probe is outside of the patient • Always transport TEE probes in covered bins • Don't coil the insertion tube in less than a 12-inch diameter • Use a TEE bite guard on every patient • Anytime a probe changes hands, perform a quick visual inspection of the tip, bending section and insertion tube. See below • Thoroughly inspect the tip and bending section, -

CS2043 - Unix Tools & Scripting Cornell University, Spring 20141

CS2043 - Unix Tools & Scripting Cornell University, Spring 20141 Instructor: Bruno Abrahao January 31, 2014 1 Slides evolved from previous versions by Hussam Abu-Libdeh and David Slater Instructor: Bruno Abrahao CS2043 - Unix Tools & Scripting Vim: Tip of the day! Line numbers Displays line number in Vim: :set nu Hides line number in Vim: :set nonu Goes to line number: :line number Instructor: Bruno Abrahao CS2043 - Unix Tools & Scripting Counting wc How many lines of code are in my new awesome program? How many words are in this document? Good for bragging rights Word, Character, Line, and Byte count with wc wc -l : count the number of lines wc -w : count the number of words wc -m : count the number of characters wc -c : count the number of bytes Instructor: Bruno Abrahao CS2043 - Unix Tools & Scripting Sorting sort Sorts the lines of a text file alphabetically. sort -ru file sorts the file in reverse order and deletes duplicate lines. sort -n -k 2 -t : file sorts the file numerically by using the second column, separated by a colon Example Consider a file (numbers.txt) with the numbers 1, 5, 8, 11, 62 each on a separate line, then: $ sort numbers.txt $ sort numbers.txt -n 1 1 11 5 5 8 62 11 8 62 Instructor: Bruno Abrahao CS2043 - Unix Tools & Scripting uniq uniq uniq file - Discards all but one of successive identical lines uniq -c file - Prints the number of successive identical lines next to each line Instructor: Bruno Abrahao CS2043 - Unix Tools & Scripting Character manipulation! The Translate Command tr [options] <char_list1> [char_list2] Translate or delete characters char lists are strings of characters By default, searches for characters in char list1 and replaces them with the ones that occupy the same position in char list2 Example: tr 'AEIOU' 'aeiou' - changes all capital vowels to lower case vowels Instructor: Bruno Abrahao CS2043 - Unix Tools & Scripting Pipes and redirection tr only receives input from standard input (stdin) i.e. -

TEE Management Framework V1.0

GlobalPlatform Device Technology TEE Management Framework Version 1.0 Public Release November 2016 Document Reference: GPD_SPE_120 Copyright 2012-2016 GlobalPlatform, Inc. All Rights Reserved. Recipients of this document are invited to submit, with their comments, notification of any relevant patents or other intellectual property rights (collectively, “IPR”) of which they may be aware which might be necessarily infringed by the implementation of the specification or other work product set forth in this document, and to provide supporting documentation. The technology provided or described herein is subject to updates, revisions, and extensions by GlobalPlatform. Use of this information is governed by the GlobalPlatform license agreement and any use inconsistent with that agreement is strictly prohibited. TEE Management Framework – Public Release v1.0 THIS SPECIFICATION OR OTHER WORK PRODUCT IS BEING OFFERED WITHOUT ANY WARRANTY WHATSOEVER, AND IN PARTICULAR, ANY WARRANTY OF NON-INFRINGEMENT IS EXPRESSLY DISCLAIMED. ANY IMPLEMENTATION OF THIS SPECIFICATION OR OTHER WORK PRODUCT SHALL BE MADE ENTIRELY AT THE IMPLEMENTER’S OWN RISK, AND NEITHER THE COMPANY, NOR ANY OF ITS MEMBERS OR SUBMITTERS, SHALL HAVE ANY LIABILITY WHATSOEVER TO ANY IMPLEMENTER OR THIRD PARTY FOR ANY DAMAGES OF ANY NATURE WHATSOEVER DIRECTLY OR INDIRECTLY ARISING FROM THE IMPLEMENTATION OF THIS SPECIFICATION OR OTHER WORK PRODUCT. Copyright 2012-2016 GlobalPlatform, Inc. All Rights Reserved. The technology provided or described herein is subject to updates, revisions, and extensions by GlobalPlatform. Use of this information is governed by the GlobalPlatform license agreement and any use inconsistent with that agreement is strictly prohibited. TEE Management Framework – Public Release v1.0 3 / 228 Contents 1 Introduction ......................................................................................................................... -

Scripting in Axis Network Cameras and Video Servers

Scripting in Axis Network Cameras and Video Servers Table of Contents 1 INTRODUCTION .............................................................................................................5 2 EMBEDDED SCRIPTS ....................................................................................................6 2.1 PHP .....................................................................................................................................6 2.2 SHELL ..................................................................................................................................7 3 USING SCRIPTS IN AXIS CAMERA/VIDEO PRODUCTS ......................................8 3.1 UPLOADING SCRIPTS TO THE CAMERA/VIDEO SERVER:...................................................8 3.2 RUNNING SCRIPTS WITH THE TASK SCHEDULER...............................................................8 3.2.1 Syntax for /etc/task.list.....................................................................................................9 3.3 RUNNING SCRIPTS VIA A WEB SERVER..............................................................................11 3.3.1 To enable Telnet support ...............................................................................................12 3.4 INCLUDED HELPER APPLICATIONS ..................................................................................13 3.4.1 The image buffer - bufferd........................................................................................13 3.4.2 sftpclient.........................................................................................................................16 -

07 07 Unixintropart2 Lucio Week 3

Unix Basics Command line tools Daniel Lucio Overview • Where to use it? • Command syntax • What are commands? • Where to get help? • Standard streams(stdin, stdout, stderr) • Pipelines (Power of combining commands) • Redirection • More Information Introduction to Unix Where to use it? • Login to a Unix system like ’kraken’ or any other NICS/ UT/XSEDE resource. • Download and boot from a Linux LiveCD either from a CD/DVD or USB drive. • http://www.puppylinux.com/ • http://www.knopper.net/knoppix/index-en.html • http://www.ubuntu.com/ Introduction to Unix Where to use it? • Install Cygwin: a collection of tools which provide a Linux look and feel environment for Windows. • http://cygwin.com/index.html • https://newton.utk.edu/bin/view/Main/Workshop0InstallingCygwin • Online terminal emulator • http://bellard.org/jslinux/ • http://cb.vu/ • http://simpleshell.com/ Introduction to Unix Command syntax $ command [<options>] [<file> | <argument> ...] Example: cp [-R [-H | -L | -P]] [-fi | -n] [-apvX] source_file target_file Introduction to Unix What are commands? • An executable program (date) • A command built into the shell itself (cd) • A shell program/function • An alias Introduction to Unix Bash commands (Linux) alias! crontab! false! if! mknod! ram! strace! unshar! apropos! csplit! fdformat! ifconfig! more! rcp! su! until! apt-get! cut! fdisk! ifdown! mount! read! sudo! uptime! aptitude! date! fg! ifup! mtools! readarray! sum! useradd! aspell! dc! fgrep! import! mtr! readonly! suspend! userdel! awk! dd! file! install! mv! reboot! symlink! -

Building Trust by Maximizing Plant Uptime While Minimizing Costs

CASE STORY Building trust by maximizing plant uptime while minimizing costs Major petrochemical company, Saudi Arabia A major Saudi Arabia-based petro- chemical company asked Alfa Laval to optimize its large heat exchangers used for cooling. Using the Alfa Laval 360° Service Portfolio resulted in more uptime, higher productivity and reduced maintenance costs. The company then placed its trust in Alfa Laval to optimize critical processes throughout the plant. CASE STORY We consider Alfa Laval a trusted partner, not just a service provider. We rely on their expertise to help us optimize our processes and raise plant productivity. Representative of a world-leading Saudi Arabia petrochemicals company Non-stop performance Business Manager, Saudi Arabia. “A comprehensive, Shortly after the plant was commissioned, Alfa Laval long-term maintenance plan goes a long way in prevent- recommended that the company consider condi- ing failures and catching them before the occur.” tion-based cleaning for 45 of its large Alfa Laval plate heat exchangers used for cooling. By conducting a By maximizing cooling uptime and minimizing main- Performance Audit, Alfa Laval engineers determined the tenance costs, Alfa Laval gained the petrochemicals optimal Cleaning-in-Place schedule and predicted the company’s trust. time for gasket replacement based on the actual oper- ating conditions of the heat exchangers. The plate heat Increasing olefin process uptime by 85% exchangers have been in operation for years and will not When faced with challenges at their olefin plant, the need to be opened until reconditioning is required. company once again turned to Alfa Laval. The challenge: to boost productivity and cut downtime due to quarterly “Being proactive is always in the customer’s best in- cleaning of large Alfa Laval plate heat exchangers in terest,” says Mr. -

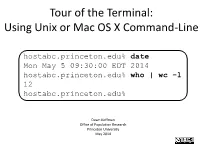

Tour of the Terminal: Using Unix Or Mac OS X Command-Line

Tour of the Terminal: Using Unix or Mac OS X Command-Line hostabc.princeton.edu% date Mon May 5 09:30:00 EDT 2014 hostabc.princeton.edu% who | wc –l 12 hostabc.princeton.edu% Dawn Koffman Office of Population Research Princeton University May 2014 Tour of the Terminal: Using Unix or Mac OS X Command Line • Introduction • Files • Directories • Commands • Shell Programs • Stream Editor: sed 2 Introduction • Operating Systems • Command-Line Interface • Shell • Unix Philosophy • Command Execution Cycle • Command History 3 Command-Line Interface user operating system computer (human ) (software) (hardware) command- programs kernel line (text (manages interface editors, computing compilers, resources: commands - memory for working - hard-drive cpu with file - time) memory system, point-and- hard-drive many other click (gui) utilites) interface 4 Comparison command-line interface point-and-click interface - may have steeper learning curve, - may be more intuitive, BUT provides constructs that can BUT can also be much more make many tasks very easy human-manual-labor intensive - scales up very well when - often does not scale up well when have lots of: have lots of: data data programs programs tasks to accomplish tasks to accomplish 5 Shell Command-line interface provided by Unix and Mac OS X is called a shell a shell: - prompts user for commands - interprets user commands - passes them onto the rest of the operating system which is hidden from the user How do you access a shell ? - if you have an account on a machine running Unix or Linux , just log in. A default shell will be running. - if you are using a Mac, run the Terminal app.