A Citation Analysis of the Quantitative/Qualitative Methods Debate's Reflection in Sociology Research: Implications for Library Collection Development Amanda J

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Citation Analysis of the Quantitative/Qualitative Methods Debate's Reflection in Sociology Research: Implications for Library Collection Development

Georgia State University ScholarWorks @ Georgia State University University Library Faculty Publications Georgia State University Library January 2004 A Citation Analysis of the Quantitative/Qualitative Methods Debate's Reflection in Sociology Research: Implications for Library Collection Development Amanda J. Swygart-Hobaugh M.L.S., Ph.D. Georgia State University, [email protected] Follow this and additional works at: https://scholarworks.gsu.edu/univ_lib_facpub Part of the Library and Information Science Commons, and the Sociology Commons Recommended Citation Swygart-Hobaugh, A. J. (2004). A citation analysis of the quantitative/qualitative methods debate's reflection in sociology research: Implications for library collection development. Library Collections, Acquisitions, and Technical Services, 28, 180-95. This Article is brought to you for free and open access by the Georgia State University Library at ScholarWorks @ Georgia State University. It has been accepted for inclusion in University Library Faculty Publications by an authorized administrator of ScholarWorks @ Georgia State University. For more information, please contact [email protected]. A Citation Analysis of the Quantitative/Qualitative Methods Debate’s Reflection in Sociology Research: Implications for Library Collection Development Amanda J. Swygart-Hobaugh Consulting Librarian for the Social Sciences Russell D. Cole Library Cornell College 600 First Street West Mt. Vernon, IA 52314-1098 [email protected] NOTICE: This is the author’s version of a work that was accepted for publication in Library Collections, Acquisitions, and Technical Services. Changes resulting from the publishing process, such as peer review, editing, corrections, structural formatting, and other quality control mechanisms may not be reflected in this document. Changes may have been made to this work since it was submitted for publication. -

How Frequently Are Articles in Predatory Open Access Journals Cited

publications Article How Frequently Are Articles in Predatory Open Access Journals Cited Bo-Christer Björk 1,*, Sari Kanto-Karvonen 2 and J. Tuomas Harviainen 2 1 Hanken School of Economics, P.O. Box 479, FI-00101 Helsinki, Finland 2 Department of Information Studies and Interactive Media, Tampere University, FI-33014 Tampere, Finland; Sari.Kanto@ilmarinen.fi (S.K.-K.); tuomas.harviainen@tuni.fi (J.T.H.) * Correspondence: bo-christer.bjork@hanken.fi Received: 19 February 2020; Accepted: 24 March 2020; Published: 26 March 2020 Abstract: Predatory journals are Open Access journals of highly questionable scientific quality. Such journals pretend to use peer review for quality assurance, and spam academics with requests for submissions, in order to collect author payments. In recent years predatory journals have received a lot of negative media. While much has been said about the harm that such journals cause to academic publishing in general, an overlooked aspect is how much articles in such journals are actually read and in particular cited, that is if they have any significant impact on the research in their fields. Other studies have already demonstrated that only some of the articles in predatory journals contain faulty and directly harmful results, while a lot of the articles present mediocre and poorly reported studies. We studied citation statistics over a five-year period in Google Scholar for 250 random articles published in such journals in 2014 and found an average of 2.6 citations per article, and that 56% of the articles had no citations at all. For comparison, a random sample of articles published in the approximately 25,000 peer reviewed journals included in the Scopus index had an average of 18.1 citations in the same period with only 9% receiving no citations. -
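The two summary figures quoted in this abstract (average citations per article and the share of articles with no citations) can be computed as in the minimal sketch below. The function name `citation_summary` and the sample counts are hypothetical illustrations, not data or code from the study.

```python
from statistics import mean


def citation_summary(citation_counts):
    """Return (mean citations per article, fraction of articles with zero citations)."""
    if not citation_counts:
        raise ValueError("citation_counts must not be empty")
    uncited = sum(1 for count in citation_counts if count == 0)
    return mean(citation_counts), uncited / len(citation_counts)


# Hypothetical citation counts for five articles (not the study's data).
sample = [0, 0, 3, 5, 2]
average, share_uncited = citation_summary(sample)
print(f"mean citations: {average:.1f}, uncited share: {share_uncited:.0%}")
```

Reporting both numbers together matters because citation counts are skewed: a few highly cited articles can raise the mean even when most articles are never cited.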

The Journal Impact Factor Denominator Defining Citable (Counted) Items

COMMENTARIES The Journal Impact Factor Denominator: Defining Citable (Counted) Items Marie E. McVeigh, MS; Stephen J. Mann Over its 30-year history, the journal impact factor has been the subject of much discussion and debate.1 From its first release in 1975, bibliometricians and library scientists discussed its value and its vagaries. In the last decade, discussion has shifted to the way in which impact factor data are used. In an environment eager for objective measures of productivity, relevance, and research value, the impact factor has been applied broadly and indiscriminately.2,3 The impact factor has gone from being a measure of a journal’s citation influence in the broader literature to a surrogate that assesses the scholarly value of work published in that journal. The items counted in the denominator of the impact factor are identifiable in the Web of Science database by having the index field document type set as “Article,” “Review,” or “Proceedings Paper” (a specialized subset of the article document type). These document types identify the scholarly contribution of the journal to the literature and are counted as “citable items” in the denominator of the impact factor. A journal accepted for coverage in the Thomson Reuters citation database6 is reviewed by experts who consider the bibliographic and bibliometric characteristics of all article types published by that journal (eg, items), which are covered by that journal in the context of other materials in the journal, the subject, and the database as a whole. This journal-specific analysis identifies the journal sections, subsections, or both that contain materials likely to -

Citation Analysis: Web of Science, Scopus

Citation analysis: Web of Science, Scopus Golestan University of Medical Sciences Information Management and Research Network Citation Analysis • Citation analysis is the study of the impact and assumed quality of an article, an author, or an institution based on the number of times works and/or authors have been cited by others • Citation analysis is the examination of the frequency, patterns, and graphs of citations in documents. It uses the pattern of citations, links from one document to another document, to reveal properties of the documents. A typical aim would be to identify the most important documents in a collection. A classic example is that of the citations between academic articles and books.[1][2] The judgements produced by judges of law to support their decisions refer back to judgements made in earlier cases, so citation analysis in a legal context is important. Another example is provided by patents, which contain prior art: citations of earlier patents relevant to the current claim. Citation Databases • Citation databases are databases that have been developed for evaluating publications. The citation databases enable you to count citations and check, for example, which articles or journals are the most cited ones • In a citation database you get information about who has cited an article and how many times an author has been cited. You can also list all articles citing the same source. • The most important citation databases are • “Web of Science”, • “Scopus” • “Google Scholar” Web of Science • Web of Science is owned and produced by Thomson Reuters. WoS is composed of three databases containing citations from international scientific journals: • Arts & Humanities Citation Index - AHCI • Social Sciences Citation Index - SSCI • Science Citation Index - SCI • Journal Coverage: • Aims to include the best journals of all fields. -
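The idea of using citation links to find the most important documents in a collection can be sketched as a simple count of incoming citations. This is a minimal illustration that treats "importance" as raw citation count, which is only one possible reading; the function name `most_cited` and the document labels are hypothetical.

```python
from collections import Counter


def most_cited(citation_links, top_n=3):
    """Rank documents by how many times they are cited.

    citation_links is an iterable of (citing_document, cited_document) pairs.
    """
    counts = Counter(cited for _citing, cited in citation_links)
    return counts.most_common(top_n)


# Hypothetical citation links between documents A to E.
links = [
    ("A", "C"), ("B", "C"), ("D", "C"),
    ("A", "B"), ("D", "B"),
    ("C", "E"),
]
print(most_cited(links, top_n=2))
```

Citation databases such as those named above perform this kind of counting at scale, along with the reverse lookup of which documents cite a given source.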

National Citation Patterns of NEJM, the Lancet, JAMA and the BMJ in the Lay Press: a Quantitative Content Analysis

Open Access Research BMJ Open: first published as 10.1136/bmjopen-2017-018705 on 12 November 2017. Downloaded from National citation patterns of NEJM, The Lancet, JAMA and The BMJ in the lay press: a quantitative content analysis Gonzalo Casino,1 Roser Rius,2 Erik Cobo2 To cite: Casino G, Rius R, Cobo E. National citation patterns of NEJM, The Lancet, JAMA and The BMJ in the lay press: a quantitative content analysis. BMJ Open 2017;0:e018705. doi:10.1136/bmjopen-2017-018705 ► Prepublication history for this paper is available online. To view these files, please visit the journal online (http://dx.doi.org/10.1136/bmjopen-2017-018705). ABSTRACT Objectives To analyse the total number of newspaper articles citing the four leading general medical journals and to describe national citation patterns. Design Quantitative content analysis. Setting/sample Full text of 22 general newspapers in 14 countries over the period 2008–2015, collected from LexisNexis. The 14 countries have been categorised into four regions: the USA, the UK, Western World (European countries other than the UK, and Australia, New Zealand and Canada) and Rest of the World (other countries). Main outcome measure Press citations of four medical journals (two American: NEJM and JAMA; and two British: Strengths and limitations of this study ► This is the first study that analyses the citations of the four leading general medical journals in the lay press. ► This study shows the existence of a national factor in science communication that needs to be further monitored and analysed. ► Citation and correspondence analyses offer a new way to quantify and monitor the media impact of scientific journals. ► This study is observational and descriptive; thus, it -

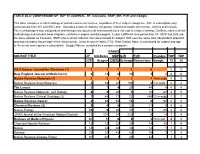

TABLE 46.4: COMPARISON OF TOP 10 JOURNAL JIF, CiteScore, SNIP, SJR, FCR and Google

TABLE 46.4: COMPARISON OF TOP 10 JOURNAL JIF, CiteScore, SNIP, SJR, FCR and Google This table compares current rankings of journals across six sources, regardless of their subject categories. JCR is subscription only, uses journals from SCI and SSCI, and calculates a ratio of citations to citable documents (articles and reviews). The methodology is size independent: having more documents and citations does not lead to a higher ranking. CiteScore uses a similar methodology and also includes book chapters, conference papers and data papers. It uses a different time period than JIF. SNIP and SJR use the same dataset as CiteScore. SNIP uses a similar ratio but normalizes based on subject. SJR uses the same size-independent approach and also considers the prestige of the citing journal. It has its own H-Index. FCR, Field Citation Ratio, is normalized for subject and age of the article and requires a subscription. Google Metrics are compiled by a computer program.

| Source title | JIF (JCR) | CiteScore (Scopus) | SNIP (CWTS) | SJR (Scimago) | FCR (Dimensions) | h5-index (Google) | Ranks in top 10 | Ranks in top 20 |
|---|---|---|---|---|---|---|---|---|
| CA: A Cancer Journal for Clinicians (1) | 1 | 1 | 1 | 1 | 6 | | 5 | 5 |
| New England Journal of Medicine (2) | 2 | 13 | 8 | 19 | 1 | 2 | 4 | 6 |
| Nature Reviews Materials (1) | 3 | 3 | 5 | 3 | 4 | 16 in subc | 5 | 5 |
| Nature Reviews Drug Discovery | 4 | 53 | 22 | 156 | 5 | 1 in subc | 2 | 2 |
| The Lancet | 5 | 7 | 4 | 29 | 44 | 4 | 4 | 4 |
| Nature Reviews Molecular Cell Biology | 6 | 8 | 20 | 5 | 15 | 67 | 3 | 5 |
| Nature Reviews Clinical Oncology (3) | 7 | 44 | 71 | 72 | 249 | 13 in subc | 1 | 1 |
| Nature Reviews Cancer | 8 | 10 | 18 | 10 | 9 | 90 | 4 | 5 |
| Chemical Reviews (2) | 9 | 4 | 9 | 12 | 11 | 9 | 4 | 6 |

Nature Energy -
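The "TOP 10" and "TOP 20" figures in Table 46.4 appear to count how many of the six sources rank a journal within the top 10 or top 20. That reading is an assumption inferred from the rows, not something the excerpt states. Under that assumption, the counts can be reproduced with the sketch below; the helper name `count_top` is hypothetical, and the rank lists are copied from the table.

```python
def count_top(ranks, cutoff):
    """Count how many ranks fall at or above the cutoff position.

    Entries of None stand for sources with no overall rank and are skipped.
    """
    return sum(1 for rank in ranks if rank is not None and rank <= cutoff)


# Ranks in the order JIF, CiteScore, SNIP, SJR, FCR, h5-index (from Table 46.4).
nejm = [2, 13, 8, 19, 1, 2]
lancet = [5, 7, 4, 29, 44, 4]

print(count_top(nejm, 10), count_top(nejm, 20))      # 4 6
print(count_top(lancet, 10), count_top(lancet, 20))  # 4 4
```

Both results match the last two values listed for those journals in the table, which supports the assumed interpretation without confirming it.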

JOURNAL CITATION REPORTS: the HEART of RESEARCH Systematic

JOURNAL CITATION REPORTS: THE HEART OF RESEARCH Systematic. Objective. Current. Journal Citation Reports® adds a unique vitality to the research ecosystem with quantifiable, statistical information based on citation data from the world's leading journals. Critically evaluate the world’s leading journals faster than ever, keep your finger on the pulse of leading research trends and findings, and more. 2015 JOURNAL CITATION REPORTS: 11,149 INDEXED JOURNALS • 272 NEW JOURNALS ADDED • 82 COUNTRIES • FOR A 9% INCREASE IN CONTENT • 1,721,170 ARTICLES • 79,218 REVIEWS • 493,588 OTHER ITEMS. THE JCR PROCESS: CURATED CONTENT PROCESS • JOURNAL EVALUATION • METRICS CALCULATION • DATA UPLOADING • CITED AND CITING DATA UNIFICATION. CONTENT CURATED YEAR-ROUND BY OUR FULL-TIME EDITORIAL TEAM. OUR LIST OF COVERED TITLES IS CONTINUOUSLY CURATED. ADDITION OF THREE NEW INDICATORS: • Journal Impact Factor Percentile • Normalized Eigenfactor • Percent Articles in Citable Items. DATA IS REFRESHED TO CAPTURE THE MOST COMPLETE PICTURE OF JOURNAL ANALYTICS. EVALUATE the world’s leading journals to measure influence and impact at the journal and category level. CLARIFY a journal’s true place in the world of scholarly literature with Transparent indicators trusted by the world’s leading research organizations. DRILL DOWN to the article-level source information with clickable metrics that reveal underlying calculation, and easy-to-download data and graphs. INVESTIGATE journal profiles, complete with Open Access details. For more information about Journal Citation Reports, please visit http://about.jcr.incites.thomsonreuters.com © 2015 Thomson Reuters S022241/6-15 Thomson Reuters and the Kinesis logo are trademarks of Thomson Reuters. -

Evaluating the Importance of Journals in Your Subject Area

Evaluating the importance of journals in your subject area Contents Part 1: Introduction • Key journal metrics: Journal Impact Factor; SCImago Journal Rank (SJR); Scopus Source Normalised Impact per Paper (Scopus SNIP) • Issues to consider when using journal metrics Part 2: Finding journal metrics • Journal Impact Factor: Accessing Journal Citation Reports; View a group of journals by subject; Search for a specific journal -

Handout-Choosing a Journal to Publish In.Pdf

Choosing a Journal to Publish In WORKSHOP BROUGHT TO YOU BY NIU LIBRARIES AND THE GRADUATE SCHOOL Introduction Choosing a journal to submit your article to is a highly subjective process with many factors to consider. This toolkit outlines a two-step process to help you choose a journal to submit your publication to. In step 1, you will create a list of potential journals. In step 2, you will assess each journal on your list for fit, reputation, impact, and visibility. Step 1: Methods to Identify Potential Journals The purpose of this section is to create a list of potential journals you could submit to. Use any of the methods below to identify potential journals. Talk to your supervisor • As they know the field, ask them where they would recommend publishing your work. Use your bibliography or reference list • Are you citing the same journal over and over again in your bibliography? If so, it’s an indication that your research fits the scope of that journal. Use academic databases • Search for research on your topic in an academic database for your field. Then use the filters to look at the journals that are most frequently publishing on your topic. Database journal lists • Did you know that you can often find/download the complete list of journals that are indexed in an academic database for your field? The list will be long, but you could identify potential journals based on the titles by skimming the list. High impact journals by field • What are the core journals in your field? Use the Web of Science’s Journal Citation Reportsi or Scopus’ Sources Previewii to view high impact journals in predefined fields. -

Journal Citation Reports Journal Citation Reports: a Primer on the JCR and Journal Impact Factor

Journal Citation Reports: A Primer on the JCR and Journal Impact Factor Web of Science The Journal Citation Reports, first published in 1975, is an annual compendium of citation data that provides a systematic and objective means to assess influence and impact at the journal and category levels. It contains reports on citation performance for science and social science journals and the relationships between citing and cited journals. To receive a Journal Impact Factor a journal must be covered in the Science Citation Index Expanded or Social Sciences Citation Index of the Web of Science. Only journals covered in these citation indexes are eligible to appear in Journal Citation Reports (JCR) and receive a Journal Impact Factor (JIF). How is the JCR used? The Journal Impact Factor was originally developed by Drs. Eugene Garfield and Irving H. Sher as a metric to aid in selection of additional journals for the newly created Science Citation Index. Today librarians continue to use the JCR as a tool in building and managing their journal collections. Publishers use the JCR to gauge journal performance and assess their competitors. Journal Impact Factor Numerator The numerator of the JIF consists of any citation to the journal as defined by the title of the journal, irrespective of what item in the journal might be cited. Each cited reference in a scholarly publication is an acknowledgement of influence. JCR therefore aggregates all citations to a given journal in the -
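The primer describes the JIF numerator as all citations to the journal. Dividing that by a count of citable items gives the ratio, as in the minimal sketch below. The denominator and the two-year window mentioned in the comments are not stated in this excerpt and are included here as assumptions; the function name and the figures are hypothetical.

```python
def journal_impact_factor(citations_to_journal, citable_items):
    """Ratio of citations to citable items.

    Assumption (not in this excerpt): both counts refer to the same window,
    conventionally citations received in one year to items the journal
    published in the two preceding years.
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_to_journal / citable_items


# Hypothetical journal: 600 citations to 200 citable items.
print(journal_impact_factor(600, 200))  # 3.0
```

Because the numerator counts citations to any item in the journal while the denominator counts only citable items, the ratio can shift depending on which document types are classed as citable.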

2018 Journal Citation Reports Journals in the 2018 Release of JCR 2 Journals in the 2018 Release of JCR

2018 Journal Citation Reports Journals in the 2018 release of JCR

| Abbreviated Title | Full Title | Country/Region | SCIE / SSCI |
|---|---|---|---|
| 2D MATER | 2D MATERIALS | England | ✓ |
| 3 BIOTECH | 3 BIOTECH | Germany | ✓ |
| 3D PRINT ADDIT MANUF | 3D PRINTING AND ADDITIVE MANUFACTURING | United States | ✓ |
| 4OR-Q J OPER RES | 4OR-A QUARTERLY JOURNAL OF OPERATIONS RESEARCH | Germany | ✓ |
| AAPG BULL | AAPG BULLETIN | United States | ✓ |
| AAPS J | AAPS JOURNAL | United States | ✓ |
| AAPS PHARMSCITECH | AAPS PHARMSCITECH | United States | ✓ |
| AATCC J RES | AATCC JOURNAL OF RESEARCH | United States | ✓ |
| AATCC REV | AATCC REVIEW | United States | ✓ |
| ABACUS | ABACUS-A JOURNAL OF ACCOUNTING FINANCE AND BUSINESS STUDIES | Australia | ✓ |
| ABDOM IMAGING | ABDOMINAL IMAGING | United States | ✓ |
| ABDOM RADIOL | ABDOMINAL RADIOLOGY | United States | ✓ |
| ABH MATH SEM HAMBURG | ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER UNIVERSITAT HAMBURG | Germany | ✓ |
| ACAD-REV LATINOAM AD | ACADEMIA-REVISTA LATINOAMERICANA DE ADMINISTRACION | Colombia | ✓ |
| ACAD EMERG MED | ACADEMIC EMERGENCY MEDICINE | United States | ✓ |
| ACAD MED | ACADEMIC MEDICINE | United States | ✓ |
| ACAD PEDIATR | ACADEMIC PEDIATRICS | United States | ✓ |
| ACAD PSYCHIATR | ACADEMIC PSYCHIATRY | United States | ✓ |
| ACAD RADIOL | ACADEMIC RADIOLOGY | United States | ✓ |
| ACAD MANAG ANN | ACADEMY OF MANAGEMENT ANNALS | United States | ✓ |
| ACAD MANAGE J | ACADEMY OF MANAGEMENT JOURNAL | United States | ✓ |
| ACAD MANAG LEARN EDU | ACADEMY OF MANAGEMENT LEARNING & EDUCATION | United States | ✓ |
| ACAD MANAGE PERSPECT | ACADEMY OF MANAGEMENT PERSPECTIVES | United States | ✓ |
| ACAD MANAGE REV | ACADEMY OF MANAGEMENT REVIEW | United States | ✓ |
| ACAROLOGIA | ACAROLOGIA | France | ✓ |

-

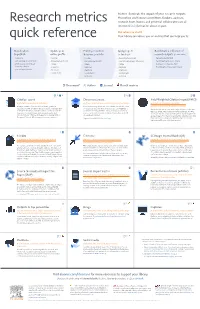

Research Metrics Quick Reference

Research metrics quick reference

Metrics illuminate the impact of your research outputs. Promotion and tenure committees, funders, advisors, research team leaders and potential collaborators are all interested in information about impact. But where to start? Your library can advise you on metrics that can help you to:

Decide where to publish • CiteScore • SJR: SCImago Journal Rank • SNIP: Source Normalized Impact per Paper • Journal Impact Factor

Update your online profile • h-index • Percentile benchmark • Usage • Captures • Mentions • Social media • Citations

Enrich promotion & tenure portfolio • h-index • Percentile benchmark • Usage • Captures • Mentions • Social media • Citations

Apply/report to funders1 • Percentile benchmark • Journal metrics (e.g., CiteScore) • Usage • Captures • Mentions • Social media

Benchmark a collection of research outputs (for team leaders) • Percentile benchmark • Field-Weighted Citation Impact • h-index (if in the same field) • Field-Weighted Download Impact 2

Document* Author Journal PlumX metrics

Citation count: # of citations accrued since publication. A simple measure of attention for an article, journal or researcher. As with all citation-based measures, it is important to be aware of citation practices. Citation counts can include measures of societal impact, such as patent, policy and clinical citations.

Document count: # of items published by an individual or group of individuals. A researcher using document count should also provide a list of document titles with links. If authors use an ORCID iD, a persistent scholarly identifier, they can draw on numerous sources for document count including Scopus, ResearcherID,

Field-Weighted Citation Impact (FWCI): # of citations received by a document / expected # of citations for similar documents. Similar documents are ones in the same discipline, of the same type (e.g., article, letter, review) and of the same age. An FWCI of 1 means that the output performs just as expected against the
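The Field-Weighted Citation Impact described in this quick reference is a ratio of citations received to the citations expected for similar documents. A minimal sketch of that ratio follows; how the expected value is estimated is not covered in the excerpt, so here it is simply passed in, and the function name and figures are hypothetical.

```python
def field_weighted_citation_impact(citations_received, expected_citations):
    """Citations received by a document divided by the expected citations
    for similar documents (same discipline, type and age)."""
    if expected_citations <= 0:
        raise ValueError("expected_citations must be positive")
    return citations_received / expected_citations


# Hypothetical document: 12 citations where similar documents average 8.
print(field_weighted_citation_impact(12, 8))  # 1.5
# A document cited exactly as often as expected has an FWCI of 1.
print(field_weighted_citation_impact(8, 8))   # 1.0
```

A value above 1 indicates more citations than expected for comparable documents, and a value below 1 indicates fewer.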