Artificial Intelligence for Interstellar Travel

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Last and First Men

LAST AND FIRST MEN: A STORY OF THE NEAR AND FAR FUTURE, by W. Olaf Stapledon. Project Gutenberg.

PREFACE

THIS is a work of fiction. I have tried to invent a story which may seem a possible, or at least not wholly impossible, account of the future of man; and I have tried to make that story relevant to the change that is taking place today in man's outlook. To romance of the future may seem to be indulgence in ungoverned speculation for the sake of the marvellous. Yet controlled imagination in this sphere can be a very valuable exercise for minds bewildered about the present and its potentialities. Today we should welcome, and even study, every serious attempt to envisage the future of our race; not merely in order to grasp the very diverse and often tragic possibilities that confront us, but also that we may familiarize ourselves with the certainty that many of our most cherished ideals would seem puerile to more developed minds. To romance of the far future, then, is to attempt to see the human race in its cosmic setting, and to mould our hearts to entertain new values. But if such imaginative construction of possible futures is to be at all potent, our imagination must be strictly disciplined. We must endeavour not to go beyond the bounds of possibility set by the particular state of culture within which we live. The merely fantastic has only minor power. Not that we should seek actually to prophesy what will as a matter of fact occur; for in our present state such prophecy is certainly futile, save in the simplest matters.

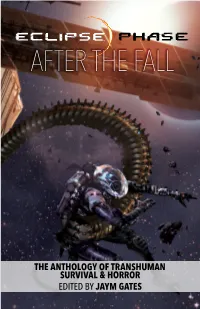

Eclipse Phase: After the Fall

AFTER THE FALL: The Anthology of Transhuman Survival & Horror. Eclipse Phase 21950. Advance Reading Copy.

In a world of transhuman survival and horror, technology allows the re-shaping of bodies and minds, but also creates opportunities for oppression and puts the capability for mass destruction in the hands of everyone. Other threats lurk in the devastated habitats of the Fall, dangers both familiar and alien.

Featuring: Madeline Ashby, Rob Boyle, Davidson Cole, Nathaniel Dean, Jack Graham, Georgina Kamsika, Ken Liu, Karin Lowachee, Kim May, Steven Mohan, Jr., Andrew Penn Romine, F. Wesley Schneider, Tiffany Trent, Fran Wilde.

Eclipse Phase created by Posthuman Studios. Edited by Jaym Gates. Eclipse Phase is a trademark of Posthuman Studios LLC. Some content licensed under a Creative Commons License (BY-NC-SA); Some Rights Reserved. © 2016

SESSION: Archaeology of the Future (Time Keeps on Slipping…)

SESSION: Archaeology of the future (time keeps on slipping…) North American Theoretical Archaeology Group (TAG) New York University May 22-24, 2015 Organizer: Karen Holmberg (NYU, Environmental Studies) Recent interest in deep time from historical perspectives is intellectually welcome (e.g., Guldi and Armitage, 2014), though the deep past has long been the remit of archaeology so is not novel in archaeological vantages. Rather, a major shift of interest in time scale for archaeology came from historical archaeology and its relatively recent focus and adamant refusal to be the handmaiden of history. Foci on the archaeology of ‘now’ (e.g., Harrison, 2010) further refreshed and expanded the temporal playing field for archaeological thought, challenging and extending the preeminence of the deep past as the sole focus of archaeological practice. If there is a novel or intellectually challenging time frame to consider in the early 21st century, it is that of the deep future (Schellenberg, 2014). Marilyn Strathern’s (1992: 190) statement in After Nature is accurate, certainly: ‘An epoch is experienced as a now that gathers perceptions of the past into itself. An epoch will thus always will be what I have called post-eventual, that is, on the brink of collapse.’ What change of tone comes from envisioning archaeology’s role and place in a very deep future? This session invites papers that imaginatively consider the movement of time, the movement of archaeological ideas, the movement of objects, the movement of data, or any other form of movement the author would like to consider in relation to a future that only a few years ago might have been seen as a realm of science and cyborgs (per Haraway, 1991) but is increasingly fraught with dystopic environmental anxiety regarding the advent of the Anthropocene (e.g., Scranton, 2013). -

Abstracts of the 50th DDA Meeting (Boulder, CO)

Abstracts of the 50th DDA Meeting (Boulder, CO). American Astronomical Society, June 2019.

100: Dynamics on Asteroids

100.01: Simulations of a Synthetic Eurybates Collisional Family

Timothy Holt1; David Nesvorny2; Jonathan Horner1; Rachel King1; Brad Carter1; Leigh Brookshaw1

1 Centre for Astrophysics, University of Southern Queensland (Longmont, Colorado, United States)
2 Southwest Research Institute (Boulder, Connecticut, United States)

Of the six recognized collisional families in the Jovian Trojan swarms, the Eurybates family is the largest, with over 200 recognized members. Located around the Jovian L4 Lagrange point, librations of the members make this family an interesting study in orbital dynamics. The Jovian Trojans are thought to have been captured during an early period of instability in the Solar system. The parent body of the family, 3548 Eurybates is one of the targets for the LUCY spacecraft, and our work will provide a dy… break-up event around a Lagrange point.

100.02: High-Fidelity Testing of Binary Asteroid Formation with Applications to 1999 KW4

Alex B. Davis1; Daniel Scheeres1

1 Aerospace Engineering Sciences, University of Colorado Boulder (Boulder, Colorado, United States)

The commonly accepted formation process for asymmetric binary asteroids is the spin up and eventual fission of rubble pile asteroids as proposed by Walsh, Richardson and Michel (Walsh et al., Nature 2008) and Scheeres (Scheeres, Icarus 2007). In this theory a rubble pile asteroid is spun up by YORP until it reaches a critical spin rate and experiences a mass shedding event forming a close, low-eccentricity satellite. Further work by Jacobson and Scheeres used a planar, two-ellipsoid model to analyze the evolutionary pathways of such a formation event from the moment the bodies initially fission (Jacobson and Scheeres, Icarus 2011).

Catalogue 147: Science Fiction

And God said: DELETE lines One to Aleph. LOAD. RUN. And the Universe ceased to exist. Then he pondered for a few aeons, sighed, and added: ERASE. It never had existed.

For David

Catalogue 147: Science Fiction. Bromer Booksellers, 607 Boylston Street, at Copley Square, Boston, MA 02116. P: 617-247-2818 F: 617-247-2975 E: [email protected] Visit our website at www.bromer.com

In the Introduction to Catalogue 123, which contained the bulk of a science fiction collection he had assembled, David Bromer noted that “science fiction is a robust genre of literature, not allowing one to ever complete a collection.” The progressive nature of science and the social fabric that it impacts means that the genre itself has to be fluid, never quite getting pinned down like a specimen under glass.

In this regard, it is entirely fitting that David has been drawn to science fiction as a reader, and as a collector. He is a scientist by training, having earned a PhD in Metallurgy from MIT and worked in research fields …

In his fifty years as a bookman, David naturally recognized the significance of the early rarities, the books that laid the groundwork for the authors of the modern era. He was pleased to discover, when cataloguing Cyrano de Bergerac’s The Comical History of the States and Empires of the Worlds of the Moon and the Sun, that its author described a personal music player–anticipating in the year 1687 the creation of the Walkman and iPod three centuries later.

Ultimately, science fiction primed the human imagination to accomplish what is perhaps its greatest achievement: the exploration of space and the mission to the moon in 1969.

General Interstellar Issue

Journal of the British Interplanetary Society, VOLUME 72 NO.4 APRIL 2019. General interstellar issue.

POSITRON PROPULSION for Interplanetary and Interstellar Travel, Ryan Weed et al.
SPACECRAFT WITH INTERSTELLAR MEDIUM MOMENTUM EXCHANGE REACTIONS: the Potential and Limitations of Propellantless Interstellar Travel, Drew Brisbin
ARTIFICIAL INTELLIGENCE for Interstellar Travel, Andreas M. Hein & Stephen Baxter

www.bis-space.com ISSN 0007-084X PUBLICATION DATE: 30 MAY 2019

Submitting papers to JBIS

JBIS welcomes the submission of technical papers for publication dealing with technical reviews, research, technology and engineering in astronautics and related fields.

Text should be:
■ As concise as the content allows – typically 5,000 to 6,000 words. Shorter papers (Technical Notes) will also be considered; longer papers will only be considered in exceptional circumstances – for example, in the case of a major subject review.
■ Source references should be inserted in the text in square brackets – [1] – and then listed at the end of the paper.
■ Illustration references should be cited in numerical order in the text; those not cited in the text risk omission.

International Advisory Board

Rachel Armstrong, Newcastle University, UK
Peter Bainum, Howard University, USA
Stephen Baxter, Science & Science Fiction Writer, UK
James Benford, Microwave Sciences, California, USA
James Biggs, The University of Strathclyde, UK
Anu Bowman, Foundation for Enterprise Development, California, USA
Gerald Cleaver, Baylor University, USA
Charles Cockell, University of Edinburgh, UK
Ian A. Crawford, Birkbeck College London, UK
Adam Crowl, Icarus Interstellar, Australia
Eric W. Davis, Institute for Advanced Studies at Austin, USA
Kathryn Denning, York University, Toronto, Canada
Martyn Fogg, Probability Research Group, UK

Scratch Pad 23 May 1997

Scratch Pad 23, May 1997. Graphic by Ditmar.

Scratch Pad 23. Based on The Great Cosmic Donut of Life No. 11, a magazine written and published by Bruce Gillespie, 59 Keele Street, Victoria 3066, Australia (phone (03) 9419-4797; email: [email protected]) for the May 1997 mailing of Acnestis. Cover graphic by Ditmar.

Contents
2 ‘IF YOU DO NOT LOVE WORDS’: THE PLEASURE OF READING R. A. LAFFERTY by Elaine Cochrane
7 DISCOVERING OLAF STAPLEDON by Bruce Gillespie

‘IF YOU DO NOT LOVE WORDS’: THE PLEASURE OF READING R. A. LAFFERTY by Elaine Cochrane

(Author’s note: The following was written for presentation to the Nova Mob (Melbourne’s SF discussion group), 2 October 1996, and was not intended for publication. I don’t even get around to listing my favourite Lafferty stories.)

Because I make no attempt to keep up with what’s new, my subject for this talk had to be someone who has been around for long enough for me to have read some of his or her work, and to know that he or she was someone I liked …

… but many of the early short stories appeared in the Orbit collections and Galaxy, If and F&SF; the anthology Nine Hundred Grandmothers was published by Ace and Strange Doings and Does Anyone Else Have Something Further to Add? by Scribners, and the novels were published by Avon, Ace, Scribners and Berkley. The anthologies and novels since then have been small press publications, and many of the short stories are still to be collected into anthologies, suggesting that a declining output has coincided with a declining market.

NASA Finds Neptune Moons Locked in 'Dance of Avoidance' 15 November 2019, by Gretchen McCartney

NASA finds Neptune moons locked in 'dance of avoidance'. 15 November 2019, by Gretchen McCartney.

Neptune Moon Dance: This animation illustrates how the odd orbits of Neptune's inner moons Naiad and Thalassa enable them to avoid each other as they race around the planet. Credit: NASA

Even by the wild standards of the outer solar system, the strange orbits that carry Neptune's two innermost moons are unprecedented, according to newly published research.

Orbital dynamics experts are calling it a "dance of avoidance" performed by the tiny moons Naiad …

Although the dance may appear odd, it keeps the orbits stable, researchers said. "We refer to this repeating pattern as a resonance," said Marina Brozović, an expert in solar system dynamics at NASA's Jet Propulsion Laboratory in Pasadena, California, and the lead author of the new paper, which was published Nov. 13 in Icarus. "There are many different types of 'dances' that planets, moons and asteroids can follow, but this one has never been seen before."

Far from the pull of the Sun, the giant planets of the outer solar system are the dominant sources of gravity, and collectively, they boast dozens upon dozens of moons. Some of those moons formed alongside their planets and never went anywhere; others were captured later, then locked into orbits dictated by their planets. Some orbit in the opposite direction their planets rotate; others swap orbits with each other as if to avoid collision.

Neptune has 14 confirmed moons. Neso, the farthest-flung of them, orbits in a wildly elliptical loop that carries it nearly 46 million miles (74 million kilometers) away from the planet and takes 27 years to complete.

Filipiak Solar System Book

Our Solar System. Mrs. Filipiak’s Class.

Contents
Sun: Michael
Mercury: Denzel
Mercury: Austin
Mercury: Madison B
Venus: Shyla
Venus: Kenny
Earth: Kaleb
Earth

Formation of Regular Satellites from Ancient Massive Rings in the Solar System


Stapledon Chapter

“Stapledon and Cosmic Evolution” Draft of Chapter Seven for the Forthcoming Book Science Fiction: The Evolutionary Mythology of the Future Volume II: The Time Machine to Star Maker © Thomas Lombardo Center for Future Consciousness “Beside his [Stapledon’s] stupendous panorama, his vision of worlds and galaxies, of cosmos piled upon cosmos, the glimpses of the future that Mr. Wells and others have provided for us are no more than penny peepshows.” Christian Science Monitor Olaf Stapledon (1886-1950) earned a Ph.D. in philosophy in 1925 from the University of Liverpool, and his first published book was in philosophy, titled A Modern Theory of Ethics (1929). From very early in his life, he showed an intense passion for writing and the thoughtful exploration of the deepest questions of life, which generated both a set of “modern psalms,” as well as a study of Joan of Arc, in which he argued that her inspirational visions were neither hallucinations nor a product of psychological hysteria, but due to “natural causes.” Indeed, also from his early years, Stapledon was fascinated with mystical and psychic topics, searching for some new doorway into the “way of the spirit,” having come to the conclusion that contemporary religion, which is based on a hope in personal immortality, was neither credible nor inspiring. Of relevance to his later science fiction writings, during these early formative years Stapledon appears to have explored the unorthodox idea of an “evolving God.” See Robert Crossleyʼs Olaf Stapledon: Speaking for the Future (1994) for an in-depth biography of Stapledonʼs early life and education. -

Cosmic Search Issue 02

North American AstroPhysical Observatory (NAAPO)

Cosmic Search: Issue 2 (Volume 1 Number 2; March 1979) [All Articles & Miscellaneous Items]

Table of Contents

Codes Used Below: P: Starting page of article in magazine; A: Author(s); T: Title of article

P: 2; A: Carl Sagan; T: The Quest for Extraterrestrial Intelligence
P: 9; A: Editors; T: Letters; SETI Courses
P: 10; A: Don Lago; T: Circles of Stone and Circles of Steel
P: 15; A: Bernard M. Oliver; T: Editorial: Let's Get SETI Through Congress
P: 16; A: Gerard K. O'Neill & John Kraus; T: Gerard K. O'Neill on "Space Colonization and SETI"
P: 24; A: Editors; T: A Single Vote (An Historical Fable by Sedgewick Seti)
P: 25; A: Richard Berendzen; T: The Case for SETI
P: 30; A: Editors; T: The SEnTInel (SETI News)
P: 35; A: Jerome Rothstein; T: Generalized Life
P: 39; A: John Kraus; T: ABCs of SETI
P: 47; A: Editors; T: In Review:
P: 48; A: R. N. Bracewell; T: Man's Role in the Galaxy
P: 52; A: Mirjana Gearhart; T: Off the Shelf
P: various; A: Editors; T: Miscellaneous: Information from the Editors, Quotes & Graphics
● Information About the Publication (Editorial Board, Editors, Table of Contents)
● Coming in COSMIC SEARCH
● Cosmic Calendar
● Distance Table
● Glossary
● SEARCH AWARDS
● SEARCH PUZZLE; Solution to Jan. 1979 Puzzle
● Miscellaneous Quotes
● Miscellaneous Photos
● Miscellaneous Graphics

The Quest for Extraterrestrial Intelligence

By: Carl Sagan [Article in magazine started on page 2]

A masterful overview of SETI and its meaning to humanity; a classic. Eds.