HP ISS Technology Update The Newsletter for HP Certified Professionals

ISS Headlines

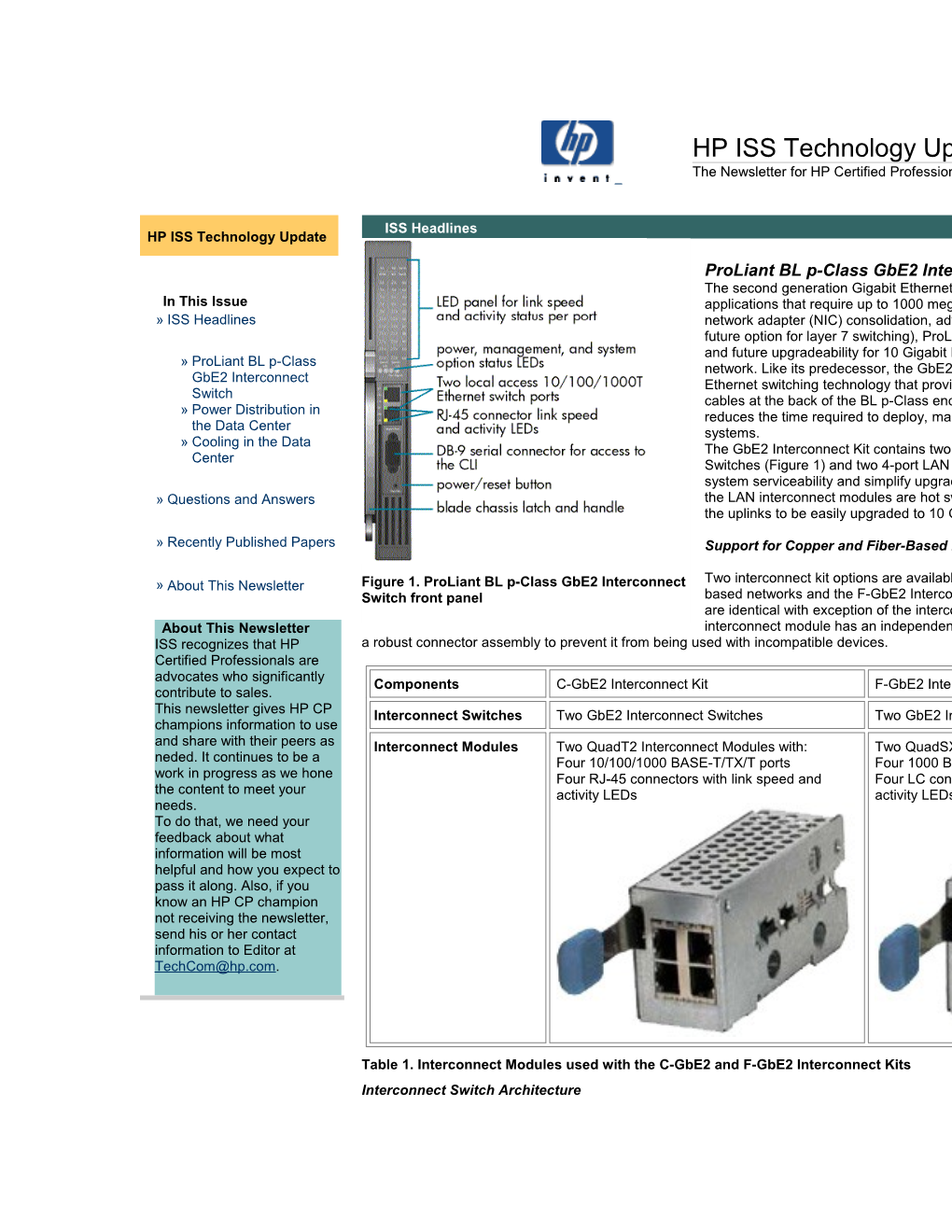

In This Issue

» ISS Headlines
» ProLiant BL p-Class GbE2 Interconnect Switch
» Power Distribution in the Data Center
» Cooling in the Data Center
» Questions and Answers
» Recently Published Papers
» About This Newsletter

About This Newsletter

ISS recognizes that HP Certified Professionals are advocates who significantly contribute to sales. This newsletter gives HP CP champions information to use and share with their peers as needed. It continues to be a work in progress as we hone the content to meet your needs. To do that, we need your feedback about what information will be most helpful and how you expect to pass it along. Also, if you know an HP CP champion not receiving the newsletter, send his or her contact information to Editor at [email protected].

ProLiant BL p-Class GbE2 Interconnect Switch

The second generation Gigabit Ethernet (GbE2) Interconnect Switch is intended for applications that require up to 1000 megabits per second (Mb/s) Gigabit Ethernet network adapter (NIC) consolidation, advanced network feature support (including a future option for layer 7 switching), ProLiant BL20p G2 Fibre Channel pass-through, and future upgradeability for 10 Gigabit Ethernet bandwidth connectivity to the network. Like its predecessor, the GbE2 Interconnect Switch includes managed Ethernet switching technology that provides up to a 32-to-1 reduction in network cables at the back of the BL p-Class enclosure. This cable reduction significantly reduces the time required to deploy, manage, and service ProLiant BL p-Class systems.

The GbE2 Interconnect Kit contains two redundant, hot-swappable GbE2 Interconnect Switches (Figure 1) and two 4-port LAN interconnect modules. To enhance system serviceability and simplify upgrades, both the GbE2 Interconnect Switch and the LAN interconnect modules are hot swappable. This modular design also allows the uplinks to be easily upgraded to 10 Gigabit Ethernet.

Figure 1. ProLiant BL p-Class GbE2 Interconnect Switch front panel

Support for Copper and Fiber-Based Networks

Two interconnect kit options are available: the C-GbE2 Interconnect Kit for copper-based networks and the F-GbE2 Interconnect Kit for fiber-based networks. These kits are identical with the exception of the interconnect modules (see Table 1). Each interconnect module has an independent guide pin to ensure insertion alignment and a robust connector assembly to prevent it from being used with incompatible devices.

| Components | C-GbE2 Interconnect Kit | F-GbE2 Interconnect Kit |
| --- | --- | --- |
| Interconnect Switches | Two GbE2 Interconnect Switches | Two GbE2 Interconnect Switches |
| Interconnect Modules | Two QuadT2 Interconnect Modules with: four 10/100/1000 BASE-T/TX/T ports; four RJ-45 connectors with link speed and activity LEDs | Two QuadSX Interconnect Modules with: four 1000 BASE-SX ports; four LC connectors with link speed and activity LEDs |

Table 1. Interconnect Modules used with the C-GbE2 and F-GbE2 Interconnect Kits

Interconnect Switch Architecture

Each GbE2 Interconnect Kit provides an end-to-end, fully redundant architecture that maximizes network availability (Figure 2). Redundant network adapters are routed from each server blade bay to each hot-swappable interconnect switch (four NICs total per server bay), creating a fully meshed topology to the external Ethernet network. The interconnect switches are cooled by on-board redundant fans, and they are powered by two independent feeds via the redundant, N+N hot-plug ProLiant BL p-Class power enclosure. Users can independently disable or enable the switch ports as desired. Auto-MDI/MDIX with auto-negotiation of speed and duplex mode is supported.

Each interconnect switch includes the following Ethernet ports:

- Sixteen dedicated internal 10/100/1000 Mb/s Ethernet downlink ports connecting the server NICs to the switch
- Six external Ethernet ports for data, interconnect switch A and B management, Integrated Lights-Out (iLO) management, and/or pre-boot execution environment (PXE) remote configuration:
  - Four 10/100/1000T uplink ports (C-GbE2) or four 1000SX uplink ports (F-GbE2) on the rear-mounted LAN interconnect module
  - Two 10/100/1000T ports on the switch front panel
- A 10-gigabit (10-Gb) internal fabric that supports future planned GbE2 switch upgrades, including layer 3-7 switching and 10 Gigabit Ethernet uplinks
- Two dedicated internal 10/100/1000 Mb/s Ethernet crosslink ports bundled as a multi-port trunk for switch-to-switch communication and failover, if desired
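As a rough illustration of how these port counts fit together, the Python sketch below tallies the ports on one switch and the NIC signals in one enclosure. It assumes eight server blade bays per enclosure, a figure inferred from the sixteen downlinks per switch rather than stated in this section, and all variable names are illustrative only.

```python
# Port tally for one GbE2 Interconnect Kit, using the counts given in the text.
# SERVER_BAYS = 8 is an assumption inferred from 16 downlinks per switch.
SERVER_BAYS = 8
NICS_PER_BAY = 4        # "four NICs total per server bay"
SWITCHES_PER_KIT = 2

nics_per_enclosure = SERVER_BAYS * NICS_PER_BAY
downlinks_per_switch = nics_per_enclosure // SWITCHES_PER_KIT

ports_per_switch = {
    "internal downlinks": downlinks_per_switch,
    "rear uplinks": 4,
    "front panel": 2,
    "internal crosslinks": 2,
}
total_ports_per_switch = sum(ports_per_switch.values())

# Best case for cable reduction: all NIC signals leave on a single uplink cable.
min_uplink_cables = 1
cable_reduction = nics_per_enclosure // min_uplink_cables

print(f"NIC signals per enclosure: {nics_per_enclosure}")
print(f"Downlinks per switch:      {downlinks_per_switch}")
print(f"Ports per switch:          {total_ports_per_switch}")
print(f"Cable reduction:           {cable_reduction}-to-1")
```

Under these assumptions, the 32 NIC signals in the enclosure line up with the "up to 32-to-1" cable reduction quoted earlier.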

Figure 2. ProLiant BL p-Class GbE2 Interconnect Kit architecture

Fibre Channel Pass-Through

The GbE2 Interconnect Kits can pass through BL20p G2 Fibre Channel signals using the optional GbE2 Storage Connectivity Kit. Therefore, both Ethernet LAN signal consolidation and Fibre Channel SAN signal pass-through are now possible with a single interconnect. The GbE2 Interconnect Switch chassis is simply used as a carrier to pass through the Fibre Channel signals. The Ethernet LAN and the Fibre Channel SAN signals are completely isolated from each other even though they share the common GbE2 chassis.

The GbE2 Storage Connectivity Kit provides components for one p-Class server blade enclosure. This kit contains two GbE2 Fibre Channel Mezzanine Cards (one per switch), each consisting of a retimer to recondition the Fibre Channel signals, and two 8-port SAN (OctalFC) interconnect modules (Figure 3). Customers plug the GbE2 Fibre Channel Mezzanine Card into the connectors provided in each GbE2 Interconnect Switch prior to loading the switches into the interconnect bays. An OctalFC interconnect module is installed into each interconnect bay from the back of the server blade enclosure. Each OctalFC interconnect module contains eight slots for connection to the SAN. Customers plug the small-form-factor pluggable (SFP) transceivers (included with the BL20p G2 Dual Port Fibre Channel Mezzanine Card) into the OctalFC interconnect module SFP slots.

To provide redundant, independent path access to the SAN, one Fibre Channel signal from each ProLiant BL20p G2 server blade bay is routed through the blade enclosure backplane to the chassis of each GbE2 Interconnect Switch. The Fibre Channel signals travel through the GbE2 Fibre Channel Mezzanine Card (for signal conditioning) and then directly to the OctalFC interconnect module (Figure 4).

The GbE2 Storage Connectivity Kit supports Fibre Channel signal pass-through from eight BL20p G2 servers for a total of 16 Fibre Channel signals per server blade enclosure (8 signals routed through each GbE2 Interconnect Switch chassis). The ProLiant BL40p server blade does not require Fibre Channel signals to be routed to the interconnect bays.

Figure 3. BL20p G2 Fibre Channel signal routing with the GbE2 Interconnect Switch

For More Information

For more information, see the ProLiant BL p-Class GbE2 Interconnect Switch home page at http://h18004.www1.hp.com/products/servers/proliant-bl/p-class/20p/bl-p-interconnect-switch2.html

Power Distribution in the Data Center

The Rack and Power Development Engineering Group develops best practices for data centers by focusing on the three chief issues that face data centers today: increasing heat, server density, and power demands. This article discusses the last two issues. The biggest problem facing data centers today is not a lack of power. It’s the lack of efficiency in distributing power. Three factors influence this trend.

Factor #1: Servers Per Rack

Two to three years ago, the number of servers in a rack was four to six with an average load per rack of 1500-3000 watts. Today, on average each rack has eight to twelve servers with an average load per rack of 5000-6000 watts. It’s not unusual nowadays to see 7000-8000 watt implementations. HP has built environments capable of supplying up to 18000 watts in a cabinet.

Factor #2: Power Supply Capacity

Power supply capacity, on the other hand, has not grown significantly in the last three years. The average is 200-1200 watts per power supply. The physical size of power supplies, however, is decreasing. In addition, high voltage operation at 208 VAC is becoming a requirement. To facilitate this transition, many servers today have dual-rated power supplies. At low-line they can support a limited capacity of 500-600 watts. Plugged into a 208 VAC outlet, however, the same power supplies’ capacity doubles to 1100-1200 watts.

Factor #3: Processor Trends

Today, each processor requires on average 90 watts of power. In the next two to three years, generation-to-generation density growth will cause large increases in processor power demands. Even today, replacing a full ProLiant 6400 with a DL580 G2 triggers a 140 percent increase in power density.

Overall Power Density

There’s one way to look at these trends in server density, power supplies and processors to understand what’s causing data centers to become so hot: watts per U. A “U” is a unit of rack height equal to 1.75 inches. By measuring the amount of power consumed per unit of rack height, a “watts per U” evaluation facilitates server-to-server density comparisons. On the chart below, the vertical axis shows the power in watts that the product consumes per U. The horizontal axis displays platforms arranged from the coolest to hottest based on density, not platform or form factor.

Chart 1. Server density in watts per U (vertical axis: watts per U, 0 to 350; horizontal axis: ProLiant and storage platforms ordered from lowest to highest density)

For the older ProLiant servers and for some of the storage products and servers from the ML line, the density is reasonable: 50-150 watts per U, maximum. HP’s highest density storage products still fall into this cooler category. The MSA1000 and the ProLiant cluster storage box are only about 125 watts per U. The power densities of the BL and DL class of products, on the other hand, lie anywhere between 100 and 350 watts per U. Blades fall in this range of the chart. There’s a power jump between the generation 1 and generation 2 Blades due to processor power needs alone. Power needs are becoming quite dense.
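The watts-per-U figure is simple division, and a short Python sketch makes the comparison concrete. The power loads below are the fully configured rack figures that appear in Table 4 later in this newsletter; treating each of those racks as occupying all 42U is an assumption made here for illustration, and the function name is hypothetical.

```python
def watts_per_u(total_watts: float, rack_units: int) -> float:
    """Power density: watts consumed per unit (1.75 inches) of rack height."""
    if rack_units <= 0:
        raise ValueError("rack_units must be positive")
    return total_watts / rack_units


# (power load in watts, rack units occupied). Loads come from Table 4;
# the 42U occupancy is an assumption about fully populated racks.
racks = {
    "42 x DL360 G3": (14_700, 42),
    "21 x DL380 G3": (8_490, 42),
}

for name, (watts, units) in racks.items():
    print(f"{name}: {watts_per_u(watts, units):.0f} watts per U")
```

With these inputs the DL360 G3 rack comes out at the top of the 100 to 350 watts per U range quoted above.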

Delivering More Power

On the electrical side, why is deploying a full rack such a problem? It’s not the total load on the rack. It all depends on how power is distributed in the data center. Originally, a 120 volt, 10 amp power cord provided sufficient power to any component in the data center. This is no longer true. The chart below illustrates the power available using different distribution methods.

| Branch Voltage | Branch Circuit Size | Maximum Continuous Load | Maximum Available Power |
| --- | --- | --- | --- |
| 120V | 20A | 16A | 1920 VA |
| 120V | 30A | 24A | 2880 VA |
| 208V | 20A | 16A | 3328 VA |
| 208V | 30A | 24A | 4992 VA |
| 208V | 20A 3-phase | 27A | 5760 VA |
| 208V | 30A 3-phase | 42A | 8650 VA |

Table 2. Available power from each distribution method

From a power density perspective, it’s clear why 120V is not a good solution anymore. A 120V circuit can only provide a little less than 3000 VA or 3000 watts of power. The same size wire run at 208V 30A can provide almost 5000 VA, or 5000 watts. Nonetheless, even 5000 VA can fall short when the rack contains a very large power load. Consequently, 30 amp branches are becoming standard, and 3-phase branches to the rack are becoming more popular as this density trend continues.
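The figures in Table 2 can be approximated with a few lines of Python. This is a sketch under two assumptions that the table implies but does not state: the continuous load is 80 percent of the breaker rating (20A gives 16A, 30A gives 24A), and a 3-phase branch is modeled as three 120V line-to-neutral legs. The function name is illustrative.

```python
def available_va(volts: float, breaker_amps: float, phases: int = 1) -> float:
    """Approximate usable volt-amperes from one branch circuit."""
    # Assumption: continuous load is 80% of the breaker rating, as Table 2 implies.
    continuous_amps = breaker_amps * 80 / 100
    if phases == 1:
        return volts * continuous_amps
    if phases == 3:
        # Assumption: three 120 V line-to-neutral legs on a 208 V feed.
        return 3 * 120 * continuous_amps
    raise ValueError("phases must be 1 or 3")


for volts, amps, phases in [(120, 20, 1), (120, 30, 1), (208, 20, 1),
                            (208, 30, 1), (208, 20, 3), (208, 30, 3)]:
    label = f"{volts}V {amps}A" + (" 3-phase" if phases == 3 else "")
    print(f"{label}: {available_va(volts, amps, phases):.0f} VA")
```

Under these assumptions the single-phase rows match the table exactly. The 30A 3-phase case yields 8640 VA, slightly below the table's 8650 VA, which appears to be a rounded figure.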

Distribution Panel Efficiency

Again, the way power is distributed from the panel determines how efficiently it is used. In most cases, there is plenty of unused power left at the panel due to inefficient distribution methods. For example, a cabinet with 21 DL380 G2s requires 8560 VA to operate. Consequently, at least two 24A PDUs are needed to power that load. To be redundant, four PDUs are necessary. Each cabinet therefore requires four 2-pole breakers, or 8 poles. With today’s average distribution method, 10 cabinets and 210 servers can be powered from an 84-pole panel.

Why not install a larger current-density PDU? In North America, there are no cost-effective single-phase plug standards above 30A, as anything above 30A makes plug-and-play power distribution more difficult. The only likely solution is moving to 3-phase power distribution. Because electrical power is generated using 3-phase, this is the most efficient way of distributing it as well. 3-phase is roughly 150% more efficient than single phase. In 3-phase, fewer overall panel positions are used for each rack. The chart below illustrates the differences in distribution efficiency among 1-phase, 2-phase, and 3-phase:

| PDU Model | 24A 1-phase PDU, 120V | 24A 2-phase PDU, 208V |
| --- | --- | --- |
| VA Per PDU | 2880 VA | 4992 VA |
| PDUs Per Rack of 21 DL380 G2s | 8 PDU | 4 PDU |
| Panel to Rack Cable Type | 8 cables, 3-conductor, 10-gauge | 4 cables, 3-conductor, 10-gauge |
| Breaker Poles Required Per Rack | 8 poles (1 pole per PDU) | 8 poles (2 poles per PDU) |
| Racks Per 84-Pole Distribution Panel | 10 | 10 |
| VA Per 84-Pole Distribution Panel | 89,460 VA | 86,730 VA |

Table 3. Distribution efficiency from 1-phase, 2-phase, and 3-phase

In summary, 3-phase distribution enables more efficient use of the panel by powering another 4 racks (for a total of 84 additional servers), reduces the number of in-rack PDUs, and minimizes the number of cable runs from the panel to the rack. As a result, the overall cost of the power distribution infrastructure is significantly reduced.
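The panel arithmetic behind this summary can be sketched in Python. The 1-phase and 2-phase layouts are taken from Table 3. The 3-phase layout (two redundant PDUs per rack, three breaker poles each) is not given in the table as reproduced here; it is an assumption chosen because it is consistent with the "another 4 racks" and "84 additional servers" figures in the summary.

```python
PANEL_POLES = 84
SERVERS_PER_RACK = 21  # rack of 21 DL380 G2s


def racks_per_panel(pdus_per_rack: int, poles_per_pdu: int) -> int:
    """Whole racks that fit on the panel, limited by breaker poles."""
    poles_per_rack = pdus_per_rack * poles_per_pdu
    return PANEL_POLES // poles_per_rack


layouts = {
    "120V 1-phase": (8, 1),   # from Table 3
    "208V 2-phase": (4, 2),   # from Table 3
    "208V 3-phase": (2, 3),   # assumed: two redundant 3-pole PDUs per rack
}

results = {name: racks_per_panel(*layout) for name, layout in layouts.items()}
for name, racks in results.items():
    print(f"{name}: {racks} racks, {racks * SERVERS_PER_RACK} servers")

extra_racks = results["208V 3-phase"] - results["208V 2-phase"]
extra_servers = extra_racks * SERVERS_PER_RACK
print(f"3-phase gain: {extra_racks} racks, {extra_servers} servers")
```

The design point is that breaker poles, not total power, limit how many racks a panel can feed, so fewer poles per rack translates directly into more racks per panel.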

Power Planning Methods

Larger power demands require better ways to determine those potential loads. In the past, power planning followed the name plate rating on the servers. Due to the way name plate ratings are established, however, this usually resulted in a lot of infrastructure being built out that was never used. The most accurate method to determine power needs is actually measuring power usage. If you don’t have a product there to measure, however, it’s hard to plan a power infrastructure. Consequently, the best practice today is using the ProLiant Power Calculator. The numbers given by the ProLiant Power Calculator are more realistic than name plate ratings, and they provide a little room for growth in the power calculation.

Figure 4. Screen shot of DL560 tool

This is a screen shot of the DL560 tool. The user selects the input voltage, the number of processors, the amount of memory, the number of PCI cards, the number of hard drives, the size of the hard drives, and other factors, and the calculator determines power consumption based on actual measurements of the system. The site preparation utility rolls all of the individual ProLiant Power Calculators into one workbook and assesses total rack power needs on the front page. The utility recommends the number of PDUs and UPSs. Because the Blade architecture is so different, the Blade has its own calculator. The site preparation utility can configure each Blade Server with its memory, processors, drives, and so forth.

Figure 5. Rack power summary

This screen typifies a power summary: complete facilities impact, heat load, heat produced per hour, power consumption, and power-on phases. It provides the total system weight, including the rack weight, as well. Most companies don’t consider the load factor of a one-ton rack sitting on their floor. In some of the older buildings that exist today, that can be a very serious issue.

The power calculators are based on actual measurements. In a laboratory, systems running an NT-based Windows operating system run power exercising utilities to exercise the system’s processors, drives, and memory at a 100 percent duty cycle, or 100 percent utilization. The ProLiant Power Calculators can be found at: http://h30099.www3.hp.com/configurator/calc/Power Calculator Catalog.xls

Redundant Power Planning

Planning the number of redundant power supplies and what loads to place on those supplies is a very important part of data center management. The designations 1+1, N+1, and N+N reveal the number of power supplies needed. The first term is the number of power supplies required to support the full configuration of the server. The second term is the number of available online spares.

A 1+1 environment includes most of the ProLiant servers that come with two power supplies. The ML570 falls into the N+1 category. It comes with three power supplies. At low voltage operation, it requires a minimum of two power supplies to support the full configuration. It has one online spare. Only one product today supports the N+N configuration: the p-Class Blade. Up to four power supplies (two on each side) can be placed in a single phase power enclosure. Two power supplies support the full capacity of the system, and two online spares provide redundancy. Any combination of two power supplies in an N+N system can fail, and full capacity power will still be available.

Redundant power supplies share the load. In every system with multiple power supplies, the power supplies equally share the power load.

How much load is safe? In a 1+1 environment, at the server level each power supply supports 50 percent of the load. At the rack level, each PDU supports 50 percent of the rack load. In the event of a failure, one of the feeds drops out. All of the loads transfer to the other side, and the system still runs with no problems. Unfortunately, some users tend to overload their PDUs. After realizing an electrical panel is drawing less than its capacity, they add more products in that rack and push the power load beyond the recommended 50 percent. It is very dangerous to load PDUs in this way. If a PDU fails, all the loads shift to the other side and suddenly the whole rack is down. No more than 50 percent of a PDU’s rated capacity should be utilized in a normal operating environment.
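The 50 percent rule can be checked with a small Python sketch. It assumes a rack fed by two PDUs of equal rating and uses the 4992 VA figure for a 208V, 24A PDU from Table 3; the per-side loads are hypothetical, as are the function names.

```python
PDU_RATING_VA = 4992  # 208V, 24A PDU (Table 3)


def pdu_utilization(load_va: float, rating_va: float) -> float:
    """Fraction of a PDU's rated capacity in use during normal operation."""
    return load_va / rating_va


def survives_feed_failure(side_a_va: float, side_b_va: float,
                          rating_va: float) -> bool:
    """True if one PDU can carry the whole rack after the other feed drops."""
    return side_a_va + side_b_va <= rating_va


# Hypothetical racks: per-side load in VA under normal, load-shared operation.
for per_side in (2400, 3500):
    used = pdu_utilization(per_side, PDU_RATING_VA)
    ok = survives_feed_failure(per_side, per_side, PDU_RATING_VA)
    print(f"{per_side} VA per side: {used:.0%} utilized, "
          f"{'survives' if ok else 'goes down on'} a feed failure")
```

A rack at 2400 VA per side stays under 50 percent and rides through a failure, while 3500 VA per side looks comfortable on each PDU but exceeds the surviving PDU's rating once the loads shift.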

In Conclusion

In summary, HP’s Rack and Power Development Engineering Group helps customers tackle the complicated issue of power planning through examining each customer’s needs and applying proven methods in power distribution. HP’s expertise leads to efficiency in data center power distribution and confidence in facing future power planning issues.

For More Information

For white papers on this subject, go to: http://h18004.www1.hp.com/products/servers/technology/whitepapers/index.html

Cooling in the Data Center

As data center power consumption grows, so do thermal demands. The largest issue is not finding enough cooling, but the way the cooling is distributed in the data center. HP is driving new methods and technologies to deliver cooler air.

| ProLiant Server Model | DL360 G3 | DL380 G3 | DL580 G2 | BL20p G2 |
| --- | --- | --- | --- | --- |
| Enclosures Per 42U Rack | 42 | 21 | 10 | 6 (48 servers) |
| Number of CPU Per Rack | 84P | 42P | 40P | 96P |
| Power Load | 14.7 kW | 8.49 kW | 7.9 kW | 14.75 kW |
| Heat Load | 50,170 BTU/hour | 30,461 BTU/hour | 26,940 BTU/hour | 50,374 BTU/hour |
| Cooling Requirement | 4.2 tons | 2.5 tons | 2.2 tons | 4.2 tons |

Table 4. Cooling requirements for fully configured racks

This chart shows cooling requirements for some fully configured racks. A rack loaded with 21 DL380 G3s, for instance, requires 30,000 BTU, or 2.5 tons of air conditioning. The racks in the chart average about 3.2 tons of AC. Two years ago, on the other hand, the average rack required 15,000 BTU, or just over one ton of air conditioning. The average size of a product at that time was five to seven U. Today, the average rack produces 28,000 BTU, and the average size of a server is down to three U.

Airflow is critical. Unfortunately, very little effort is generally allocated to data center airflow design. HP, on the other hand, devotes considerable effort to this increasingly important area of data center design.
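The cooling requirement row in Table 4 follows from the heat load row by a standard unit conversion: one ton of air conditioning equals 12,000 BTU per hour. The Python sketch below applies it, along with the usual factor of about 3.412 BTU per hour per watt. Both constants are general engineering values rather than figures from this newsletter, and the watt-based results land near, but not exactly on, the table's heat-load figures.

```python
BTU_PER_HOUR_PER_TON = 12_000   # standard definition of a ton of cooling
BTU_PER_HOUR_PER_WATT = 3.412   # approximate conversion factor


def tons_of_cooling(btu_per_hour: float) -> float:
    """Convert a heat load in BTU/hour to tons of air conditioning."""
    return btu_per_hour / BTU_PER_HOUR_PER_TON


def heat_load_btu(watts: float) -> float:
    """Approximate heat load in BTU/hour for an electrical load in watts."""
    return watts * BTU_PER_HOUR_PER_WATT


# Heat loads in BTU/hour, taken from Table 4.
table4_heat_loads = {
    "DL360 G3": 50_170,
    "DL380 G3": 30_461,
    "DL580 G2": 26_940,
    "BL20p G2": 50_374,
}

for model, btu in table4_heat_loads.items():
    print(f"{model}: {tons_of_cooling(btu):.1f} tons")

print(f"14.7 kW is roughly {heat_load_btu(14_700):,.0f} BTU/hour")
```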

Common Data Center Air Distribution Problems

There are several ways improper data center design can hamper airflow. Poor Computer Room Air Conditioning (CRAC) unit placement is one. The high velocity air flowing from the air conditioner must disperse and become turbulent to pressurize the floor. That way, air can flow up through the vents. A rack placed too close to the air handler can miss beneficial airflow. The CRAC’s high velocity air can create a vacuum close to the underside of the floor and pull air down through the floor. This is the exact opposite of the desired airflow.

Figure 6. Proper and improper rack placement

Other poor data center designs include low ceilings or low return air volume. If the ceiling height is not sufficient to support the load in the data center, and the dropped ceiling does not serve as a return plenum, heat can build up in the upper air space and be pulled back through the servers. The arrows show recirculation over the tops of the racks.

Figure 7. A ceiling that is too low

Cutting big holes or leaving panels out of the floor can cause air leaks, lower the plenum pressure, decrease desired airflow through the perforated tiles and waste valuable air conditioning capacity. Likewise, cables stretched under the floor can cause airflow restrictions. Shoddy building construction methods can decrease airflow efficiency as well. Often construction companies won’t install sheetrock all the way to the concrete floor inside data center columns. Instead, the sheetrock only reaches the level of the raised floor. Consequently, air conditioning pressure goes up the hollow column and exits the room.

Data Center Cooling Best Practices

The best way to lay out a data center today is placing servers so that their fronts face the fronts of opposing rows, and backs face the backs. This optimizes natural convection currents. Cold air sinks, while hot air rises. Air is delivered to cold aisles through perforated tiles in the floor. The server fronts pull the cold air through the servers. After cooling the server, the air exits into the hot aisle. The hot air rises to the ceiling and returns to the air conditioning unit to be cooled. Then it is delivered through the floor again.

Figure 8. Hot aisles and cold aisles

Air conditioners should be placed perpendicular to rows of racks. This arrangement provides the shortest possible path for hot air to return to the air conditioner. If one particular area has a very high density heat load compared to the rest of the data center, a barrier can be built under the floor to direct cool air from a particular air handler to that area alone.

Blanking panels keep air flowing in the right direction. Air should flow in through the rack’s front and out through its back. A server, however, will get its air via the path of least resistance. The rack’s front and rear doors are 65 percent perforated. That’s a 35 percent restriction of airflow. Without blanking panels, air will recirculate through an empty rack space, causing the servers to overheat. Missing blanking panels can also cause air from the hot aisle to blow into the cold aisle or vice-versa, thereby limiting cooling efficiency. Blanking panels prevent this scenario and maintain correct airflow.

Another method for optimizing air flow involves splitting the ceiling plenum into a supply plenum and a return plenum. In this setup, cold air is driven down into the cold aisles from the top and the bottom simultaneously. Air conditioning systems can provide up to 100-125 watts per square foot of cooling using under-floor plenums alone. Watts per square foot is the equipment’s gross load over the raised floor’s gross area. Splitting the air flow between two plenums adds another 50 watts per square foot of cooling power.
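As a minimal sketch of the watts-per-square-foot check described above, the Python below divides gross equipment load by gross raised-floor area and compares the result with the quoted limits. The room dimensions and load are hypothetical, the upper end of the 100-125 range is used as the under-floor limit, and the function names are illustrative.

```python
UNDER_FLOOR_MAX_W_PER_SQFT = 125     # upper end of the 100-125 range in the text
SPLIT_PLENUM_EXTRA_W_PER_SQFT = 50   # additional capacity from a split plenum


def watts_per_square_foot(gross_load_watts: float,
                          gross_floor_sqft: float) -> float:
    """Equipment gross load divided by the raised floor's gross area."""
    if gross_floor_sqft <= 0:
        raise ValueError("gross_floor_sqft must be positive")
    return gross_load_watts / gross_floor_sqft


def within_cooling_capacity(density: float, split_plenum: bool = False) -> bool:
    """Compare a load density against the limits quoted in the text."""
    limit = UNDER_FLOOR_MAX_W_PER_SQFT
    if split_plenum:
        limit += SPLIT_PLENUM_EXTRA_W_PER_SQFT
    return density <= limit


# Hypothetical room: 800 kW of equipment on 5,000 square feet of raised floor.
density = watts_per_square_foot(800_000, 5_000)
print(f"{density:.0f} W/sq ft")
print("under-floor only:", within_cooling_capacity(density))
print("split plenum:    ", within_cooling_capacity(density, split_plenum=True))
```

In this hypothetical room the load exceeds what under-floor delivery alone can handle but fits within the combined limit once the plenum is split.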

Figure 9. Split plenum cooling

If the data center air handling and delivery system is optimized, no rack fan systems should be necessary. In fact, in certain cases, such systems are counter-productive. Fans in the top of a rack can create air curtains that prevent hot air from returning to the air conditioner. Rack fans also add more electrical load to the data center and contribute to lower overall availability. If a rack of servers is dependent on rack fans for adequate cooling, the failure of one or more fans in the rack can place all the servers in jeopardy.

Air conditioning loads should be based on sensible capacity. The name plate on an air conditioner does not describe actual capacity. A 30-ton air conditioner does not deliver 30 tons of cooling capacity. Sensible air conditioning capacity is based on how the air conditioning system is set up in the data center. Every air conditioner comes with installation information describing how to calculate the air conditioner’s sensible cooling capacity.

Balancing data center thermal loads is also very important. One common mistake is putting all servers in cabinets together, and, in another part of the data center, putting all storage products together. This creates thermal differences among different spots in the data center. Mixing loads distributes the load across the floor more evenly and helps control the problem.

In addition, each facility has its own maximum cooling capacity. This maximum load capacity provides a power and cooling “budget” for the data center. Going beyond that maximum load can lead to serious heat issues.

Other Alternatives

HP is exploring alternatives to forced-air cooling as well. In-cabinet heat exchangers and supplemental direct expansion (DX) air conditioners are two options. DX air conditioners use Freon-based air conditioning to provide 3 to 5 tons of cooled air. DX units are rack-sized. In-cabinet heat exchangers are closed-circulation chilled water systems. The hot air from the back of the rack is pulled into a heat exchanger at the bottom of the rack, cooled off, and returned to the front of the rack.

Cool Labs

HP continues to develop best practices for data center cooling at the HP Cool Labs. The Cool Labs build model data centers and analyze them. A process used there, called Computational Fluid Dynamics (CFD), models the way air flows in the data center using flow simulation software. After an actual data center was built based on CFD models, the Cool Labs’ model was found to be within seven percent of the actual data center thermal readings. Consequently, it’s a very accurate way to analyze data centers.

The Cool Labs provide a service called “Static Smart Cooling” that analyzes heat flow problems using CFD. If a customer believes they have an airflow problem, HP data center services go to the data center in question, collect information, and determine if the data center is a good candidate for the process. If it is, the HP personnel go on-site, examine the physical layout, air conditioning resources, and enterprise equipment, and note where the data center loads are positioned. After running all of the information through the CFD software, they return to the data center and perform a variety of corrective measures, including reprovisioning the CRAC unit settings, converting drop ceilings into return air plenums, moving floor tiles around, adding blanking panels where they are absent, or other measures to optimize airflow using available resources.

No other major product provider offers this service. Through proactive data center thermal planning, HP leads its customers towards the most efficient use of available cooling resources.

For More Information

For more information on data center cooling, visit: http://www.hpl.hp.com/research/dca/smart_cooling/

Questions and Answers

Question 1: SuSE Certification of BL 20p and BL 20p g2

What are our plans to get official SuSE certification for the BL 20p and BL 20p g2 with SuSE 8 (UL 1.0)? We only have support for SLES7 for the BL 20p (not g2) in the HP OS Support Matrix, and SLES8 has been out for some time now. I am working with a large global customer who is considering deploying multiple 2p blades. We are competing with the IBM Bladecentre, which is certified by SuSE.

Response: The Linux ProLiant Support Matrix, located at http://h18000.www1.hp.com/products/servers/linux/hpLinuxcert.html, provides updates to our Linux Certification program, as well as all servers we are currently supporting running Linux.

If you are looking for SLES 8 certification information, it is part of UnitedLinux. HP recommends that you click on the server in our support matrix at http://h18000.www1.hp.com/products/servers/linux/hpLinuxcert.html. This brings up a web page with product documentation, driver downloads, and certification information. Scroll to the bottom for certification information. The certification link will take you to the Linux Vendors Certification database. The UnitedLinux Certification Database is located at this URL: http://wdb1.sco.com/chwp/owa/hch_search_form?f_skin_id=6.

Question 2: Disk Capacity on the SA-5300

The SA-5300 supports 14 drives per channel. Is there a limit on the total disk capacity it will support? Will the SA-5300 support more than 2TB per channel when larger drives become available? As well, is there an upper limit on the total disk capacity that is recognized by Windows 2000?

Response: The SA-5302 supports up to 28 drives (2 buses with 14 drives on each). The SA-5304 supports up to 56 drives (4 buses with 14 drives each). The maximum size of a single volume on the SA-5300 controllers is 2TB of user capacity. The controllers support multiple logical volumes of that size. Therefore, the SA-5300 controllers support the full capacity of the largest disk drives in the largest configuration with multiple 2TB volumes. Today, Windows has the same capacity limit per volume (2TB). This assumes the use of the 512-byte block size used by Smart Array controllers.
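The response ties the 2TB limit to the 512-byte block size without saying why. One common explanation, assumed here rather than taken from the response, is a 32-bit block address: 2^32 blocks of 512 bytes each is exactly 2TB (in binary units). The Python sketch below shows that arithmetic and how many 2TB volumes a hypothetical configuration would need; the drive size is invented for illustration.

```python
import math

BLOCK_SIZE_BYTES = 512   # block size stated in the response
ADDRESS_BITS = 32        # assumption: 32-bit block addressing

max_volume_bytes = (2 ** ADDRESS_BITS) * BLOCK_SIZE_BYTES
max_volume_tib = max_volume_bytes / 2 ** 40


def volumes_needed(drive_count: int, drive_bytes: int) -> int:
    """Number of maximum-size volumes needed to expose the raw capacity."""
    return math.ceil(drive_count * drive_bytes / max_volume_bytes)


# Hypothetical example: a fully populated SA-5304 (56 drives) with
# drives of 146 * 10**9 bytes each. The drive size is illustrative only.
print(f"Maximum volume size: {max_volume_tib:.0f} TiB")
print(f"Volumes needed: {volumes_needed(56, 146 * 10**9)}")
```

This ignores RAID overhead, so it is an upper bound on the number of volumes rather than a sizing recommendation.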

Question 3: Creating a Delay Before a Page or E-mail is Triggered in Insight Manager 7

In Insight Manager 7 sp2, can a paging or e-mail task be created to notify someone only when a server has been down for longer than 4 minutes? Can I create a group based on the SNMP value “Location”? When I add a newly discovered server I want it to be automatically added to the query group.

Response: The answer to your first question is “yes.” By default, the SNMP Status Polling for Servers task is set to run every 5 minutes. When a server goes down, its status changes to Critical and an event is created in Insight Manager 7 (a Critical Device Status Change event). Create an email or paging task and tie it to the default query called “Important Uncleared Events.” This will notify you when traps arrive and when a device goes critical.

As for your second question, you cannot create a query based on SNMP Location, but you can create a query based on the Server Role that is part of the web agent. The server role is also group configurable so that you can change it on multiple servers simultaneously. That’s how I would create a dynamic query based on your own data.

Question 4: Support for Insight Manager 7

Will HP have an out-of-band forum where customers can submit questions and then check back later for the response from HP?

Response: Yes, customers can now post questions about Insight Manager, RDP, RILOE/iLO, and SmartStart on the ITRC forums on HP.COM, located at http://forums.itrc.hp.com. Most of the time the answers here are provided by the forum community. The forums are a great resource.

Recently Published Papers

| Title | URL |
| --- | --- |
| HP Advanced Memory Protection Technologies Technology Brief | ftp://ftp.compaq.com/pub/supportinformation/papers/tc030804tb_rev1_us.pdf |
| HP ProLiant BL p-Class SAN Storage Connectivity Technology Brief | ftp://ftp.compaq.com/pub/supportinformation/papers/tc030803tb_rev1_us.pdf |
| Implementing Microsoft Windows 2000 with Service Pack 4 on ProLiant Servers Integration Note (6th Edition) | http://h20000.www2.hp.com/bc/docs/support/UCR/SupportManual/TPM_tc030911in_rev5_us/TPM_tc030911in_rev5_us.pdf |
| Implementing Novell NetWare 6.5 on ProLiant Servers Integration Note (2nd Edition) | http://h20000.www2.hp.com/bc/docs/support/UCR/SupportManual/TPM_tc030805in_rev1_us/TPM_tc030805in_rev1_us.pdf |
| Linux Hot Plug Support for SCSI Tape Drives How-To | ftp://ftp.compaq.com/pub/supportinformation/papers/tc030902ht_rev0_us.pdf |
| ProLiant BL p-Class System Technology Tech Brief | ftp://ftp.compaq.com/pub/supportinformation/papers/tc030901tb_rev0_us.pdf |
| ProLiant DL560 Server Technologies Tech Brief | ftp://ftp.compaq.com/pub/supportinformation/papers/tc030807tb_rev0_us.pdf |
| Setting Up a Linux PXE Server and Integrating Clients How-To | http://h20000.www2.hp.com/bc/docs/support/UCR/SupportManual/TPM_tc030904ht_rev1_us/TPM_tc030904ht_rev1_us.pdf |
| Upgrading to Novell NetWare 6.5 on ProLiant Servers Tech Brief | ftp://ftp.compaq.com/pub/supportinformation/papers/tc031002in_rev0_us.pdf |
| Using ProLiant Essentials Rapid Deployment Pack for Scripted Blade-Based Switch Configuration How To | ftp://ftp.compaq.com/pub/supportinformation/papers/tc030905ht_rev0_us.pdf |

© 2003 Hewlett-Packard Company. HP, Compaq, the HP logo, and ProLiant are trademarks of the Hewlett-Packard Company in the U.S. and/or other countries.