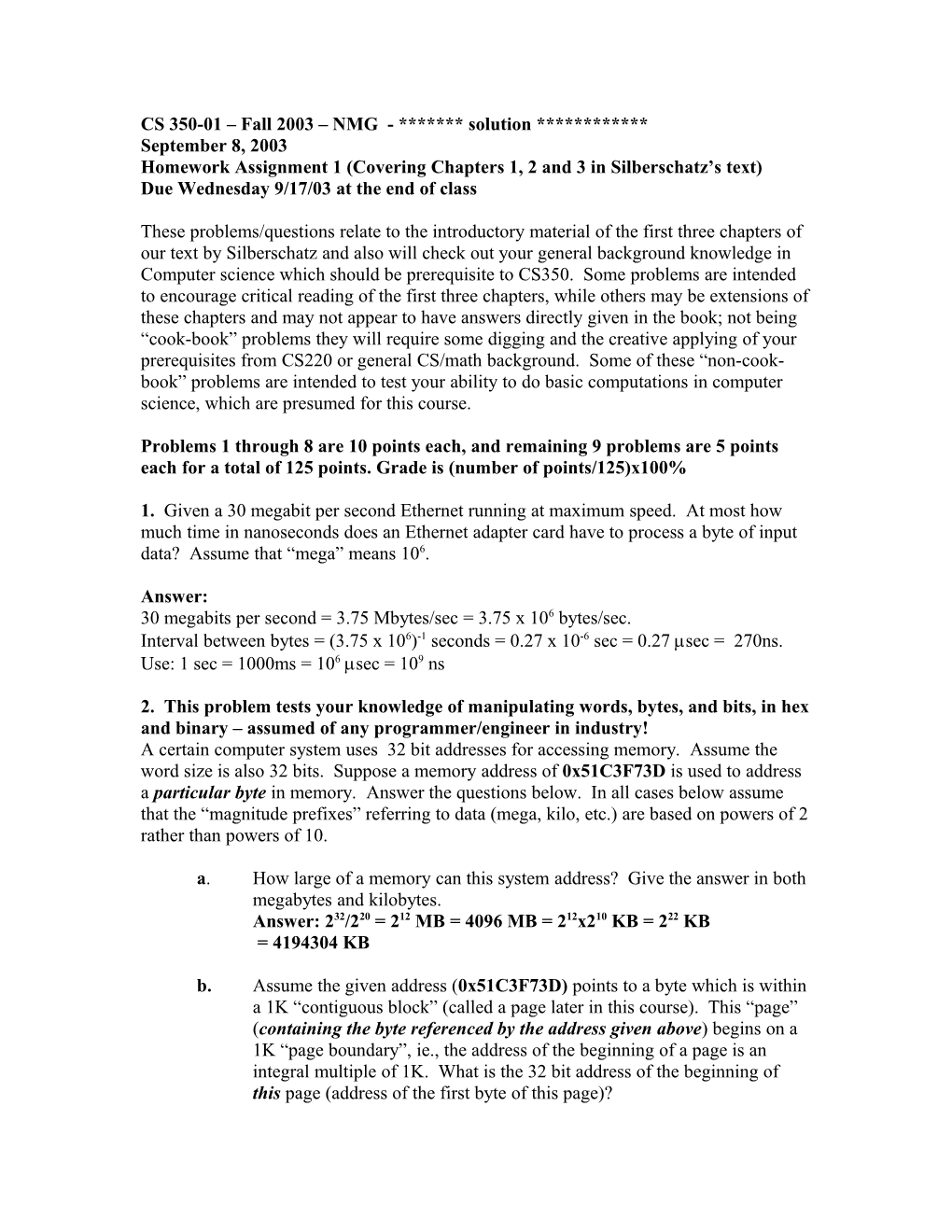

CS 350-01 – Fall 2003 – NMG – Solution

September 8, 2003

Homework Assignment 1 (Covering Chapters 1, 2 and 3 in Silberschatz’s text)

Due Wednesday 9/17/03 at the end of class

These problems and questions relate to the introductory material in the first three chapters of our text by Silberschatz, and they also check the general computer science background that is prerequisite to CS350. Some problems are intended to encourage critical reading of the first three chapters. Others extend these chapters and may not have answers given directly in the book; because they are not “cookbook” problems, they will require some digging and creative application of your prerequisites from CS220 or your general CS/math background. Some of these “non-cookbook” problems are intended to test your ability to do the basic computations in computer science that are presumed for this course.

Problems 1 through 8 are worth 10 points each, and the remaining 9 problems are worth 5 points each, for a total of 125 points. Grade = (number of points / 125) × 100%.

1. Consider a 30 megabit per second Ethernet running at maximum speed. At most how much time, in nanoseconds, does an Ethernet adapter card have to process a byte of input data? Assume that “mega” means $10^6$.

Answer: 30 megabits per second = 3.75 Mbytes/sec = $3.75 \times 10^6$ bytes/sec. Interval between bytes = $(3.75 \times 10^6)^{-1}$ sec ≈ $0.27 \times 10^{-6}$ sec = 0.27 µs = 270 ns. Use: 1 sec = 1000 ms = $10^6$ µs = $10^9$ ns.
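The arithmetic for Problem 1 can be checked with a short script. This is only a sketch: the function name `byte_interval_ns` is made up for illustration, and "mega" is taken as 10^6 as the problem states. Without the intermediate rounding to 0.27 µs, the interval comes out slightly under 270 ns.

```python
# Problem 1 check. Assumption: "mega" means 10**6, as stated in the problem.
BITS_PER_BYTE = 8

def byte_interval_ns(bits_per_second):
    """Return the time available to process one byte, in nanoseconds."""
    bytes_per_second = bits_per_second / BITS_PER_BYTE
    return 1e9 / bytes_per_second

interval = byte_interval_ns(30 * 10**6)
print(f"{interval:.1f} ns per byte")  # about 266.7 ns, which the answer rounds to 270 ns
```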

2. This problem tests your knowledge of manipulating words, bytes, and bits, in hex and binary – assumed of any programmer/engineer in industry! A certain computer system uses 32 bit addresses for accessing memory. Assume the word size is also 32 bits. Suppose a memory address of 0x51C3F73D is used to address a particular byte in memory. Answer the questions below. In all cases below assume that the “magnitude prefixes” referring to data (mega, kilo, etc.) are based on powers of 2 rather than powers of 10.

a. How large a memory can this system address? Give the answer in both megabytes and kilobytes. Answer: $2^{32}/2^{20} = 2^{12}$ MB = 4096 MB = $2^{12} \times 2^{10}$ KB = $2^{22}$ KB = 4194304 KB.

b. Assume the given address (0x51C3F73D) points to a byte which is within a 1K “contiguous block” (called a page later in this course). This “page” (containing the byte referenced by the address given above) begins on a 1K “page boundary”, i.e., the address of the beginning of a page is an integral multiple of 1K. What is the 32 bit address of the beginning of this page (the address of the first byte of this page)? Answer: A 1K page needs 10 bits for the offset within the page: 1K = $2^{10}$ = 1024 ==> 10 bits. Set the low 10 bits to 0: set the low 2 hex digits to 0 and then change the 3rd hex digit from the right from a 7 (0111) to a 4 (0100), giving 0x51C3F400.

c. If the system reads a word out of memory containing the desired byte, what is the 32 bit address of this word? Answer: A 32 bit word holds 4 bytes, so a word address has its low 2 bits equal to 0. A hex digit has 4 bits ==> set the low 2 bits of the least significant hex digit to 0: change the low digit from D to C ==> 0x51C3F73C.

d. Which byte in this word is the desired byte (number the bytes starting with 0 from low to high address)? Answer: The low digit is 0xD or 1101 binary ==> byte #1 (01 binary) in the word.

e. Do you think the largest addressable memory would be implemented directly in semiconductor devices in a typical “PC” type of machine? If not, how could it be implemented? Give a short “high level” answer. Answer: The address space is $2^{32}$ bytes = 4 GB, which may be too large. Implement it as a virtual memory or memory hierarchy.
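The address manipulations in parts a through d can be reproduced with bit masks. The sketch below is one way to do it, assuming power-of-2 prefixes as the problem specifies; the variable names are illustrative only.

```python
# Problem 2 check using bit masks. Names here are illustrative, not from the text.
ADDRESS = 0x51C3F73D
PAGE_SIZE = 1024   # 1K page, so the low 10 bits are the page offset
WORD_BYTES = 4     # 32-bit word, so the low 2 bits select the byte in the word

address_space_bytes = 2**32
size_mb = address_space_bytes // 2**20          # part a, in megabytes
size_kb = address_space_bytes // 2**10          # part a, in kilobytes

page_base = ADDRESS & ~(PAGE_SIZE - 1)          # part b: clear the low 10 bits
word_address = ADDRESS & ~(WORD_BYTES - 1)      # part c: clear the low 2 bits
byte_in_word = ADDRESS & (WORD_BYTES - 1)       # part d: keep the low 2 bits

print(size_mb, size_kb)        # 4096 4194304
print(hex(page_base))          # 0x51c3f400
print(hex(word_address))       # 0x51c3f73c
print(byte_in_word)            # 1
```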

3. Consider a hypothetical 32 bit microprocessor having 32 bit instructions composed of two fields: the first 4 bits contain the opcode and the remainder contains an immediate operand, an operand address, or register references.

a. What is the maximum directly addressable memory capacity in bytes?

b. What is the impact on system speed if the microprocessor bus has: (1) a 32 bit local address bus and a 16 bit local data bus; (2) a 16 bit local address bus and a 16 bit local data bus?

c. Is this machine more likely to be a RISC or CISC architecture? Why?

Hint: Directly addressable may be interpreted as the address space spanned by the address field in the instruction for a fixed segment register in a segmented memory system.

Answer:

a. $2^{28}$ bytes = 256 Mbytes, since 28 of the 32 instruction bits remain for the address field.

b. (1) If the local address bus is 32 bits, the whole address can be transferred at once and decoded in memory. However, since the data bus is only 16 bits, it will require 2 cycles to fetch a 32-bit instruction or operand.

(2) The 16 bits of the address placed on the address bus can't access the whole memory. Thus a more complex memory interface control is needed to latch the first part of the address and then the second part (since the microprocessor will send the address in two steps). For a 32-bit address, one may assume the first half will decode to access a "row" in memory, while the second half is sent later to access a "column" in memory. In addition to the two-step address operation, the microprocessor will need 2 cycles to fetch the 32 bit instruction/operand.

c. A RISC architecture. There are only $2^4 = 16$ different opcodes.
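To make the field widths in Problem 3 concrete, the following sketch splits a 32 bit instruction word into its 4 bit opcode and 28 bit operand field. The `decode` helper and the sample word `0xA1234567` are hypothetical, chosen only for illustration.

```python
# Problem 3 sketch: split a 32-bit instruction into opcode and operand fields.
OPCODE_BITS = 4
OPERAND_BITS = 32 - OPCODE_BITS   # 28 bits left for the operand/address field

def decode(instruction):
    """Return (opcode, operand) for a 32-bit instruction word."""
    opcode = instruction >> OPERAND_BITS
    operand = instruction & ((1 << OPERAND_BITS) - 1)
    return opcode, operand

directly_addressable_bytes = 2**OPERAND_BITS    # part a
opcode_count = 2**OPCODE_BITS                   # part c

opcode, operand = decode(0xA1234567)            # hypothetical instruction word
print(hex(opcode), hex(operand))                # 0xa 0x1234567
print(directly_addressable_bytes // 2**20, "MB,", opcode_count, "opcodes")
```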

4. A 32 bit microprocessor has a 16 bit external data bus driven by a 20-MHz input clock. Assume that this microprocessor has a bus cycle whose minimum duration equals four input clock cycles. What is the maximum data transfer rate that this microprocessor can sustain? To increase its performance, would it be better to make its external data bus 32 bits or to double the external clock frequency supplied to the microprocessor? State any other assumptions you make and explain. Hint: Remember the reciprocal relationship between frequency and period. Also, it takes one bus cycle to transfer 2 bytes. How long is a bus cycle? See “tips log” for more tips.

Answer: Clock cycle = 1/(20 MHz) = $0.05 \times 10^{-6}$ sec = 50 ns. Bus cycle = 4 × 50 ns = 200 ns. 2 bytes are transferred every 200 ns; thus the transfer rate = 2 bytes / 200 ns = 10 MBytes/sec, where Mega is $10^6$ in this case.

Doubling the frequency may mean adopting a new chip manufacturing technology (assuming each instruction will have the same number of clock cycles); doubling the external data bus means wider (maybe newer) on-chip data bus drivers/latches and modifications to the bus control logic. In the first case, the speed of the memory chips will also need to double (roughly) so as not to slow down the microprocessor; in the second case, the "word length" of the memory will have to double to be able to send/receive 32-bit quantities.
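The transfer rate calculation, and a comparison of the two proposed upgrades, can be sketched as follows. The function `transfer_rate` is illustrative, and the comparison assumes that a bus cycle still takes four clock cycles after either change and that memory can keep up; under those assumptions both options give the same peak rate.

```python
# Problem 4 sketch. Assumption: a bus cycle is always 4 clock cycles and
# memory is fast enough to keep up in both upgrade scenarios.
def transfer_rate(clock_hz, bus_width_bits, clocks_per_bus_cycle=4):
    """Return the peak transfer rate in bytes per second."""
    bytes_per_bus_cycle = bus_width_bits // 8
    return bytes_per_bus_cycle * clock_hz / clocks_per_bus_cycle

base = transfer_rate(20_000_000, 16)           # original design
wider_bus = transfer_rate(20_000_000, 32)      # 32-bit external data bus
faster_clock = transfer_rate(40_000_000, 16)   # doubled clock frequency

print(base / 1e6, wider_bus / 1e6, faster_clock / 1e6)  # 10.0 20.0 20.0 (MBytes/sec)
```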

5. In virtually all systems that include DMA modules, DMA access to main memory is given higher priority than processor access to main memory. Why?

Answer: If a processor is held up in attempting to read or write memory, usually no damage occurs except a slight loss of time. However, a DMA transfer may be to or from a device that is receiving or sending data in a stream (e.g., disk or tape), and cannot be stopped. Thus, if the DMA module is held up (denied continuing access to main memory), data will be lost.

6. A DMA module is transferring characters to main memory from an external device at 38400 bits per second. The processor can fetch instructions at the rate of 2 million instructions per second. How much will the processor be slowed down due to DMA activity? Express this as a percent of the time there is a conflict between DMA and the CPU. Hint: See top of page 34 on “cycle stealing”. Hint: Assume every CPU instruction accesses memory. Assume the CPU is fetching instructions only (and no data) at the rate of 2 MIPS. What fraction of the time does DMA interfere with the CPU?

Answer: Ignore data read/write operations and assume the processor only fetches instructions. The processor needs to access main memory once every 0.5 microsecond. The DMA module is transferring characters (bytes) at a rate of 38400/8 = 4800 characters per second, or one character every 208 µs. During this 208 µs, 2 instructions/µs × 208 µs = 416 instructions are executed. Thus there is interference once every 416 instructions, or (1/416) × 100 ≈ 0.24 percent of the time.
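The cycle stealing estimate can be verified numerically. This sketch follows the same assumptions as the answer (one memory access per instruction fetch, one stolen cycle per DMA character); the variable names are illustrative.

```python
# Problem 6 check. Assumptions: each instruction fetch is one memory access,
# and each DMA character transfer steals exactly one memory cycle.
DMA_BITS_PER_S = 38_400
CPU_INSTR_PER_S = 2_000_000

chars_per_s = DMA_BITS_PER_S / 8                  # 4800 characters per second
us_per_char = 1e6 / chars_per_s                   # about 208 microseconds
instr_per_char = CPU_INSTR_PER_S / chars_per_s    # about 416 instructions
percent_conflict = 100 / instr_per_char

print(f"{us_per_char:.0f} us per character")
print(f"{instr_per_char:.0f} instructions per character")
print(f"{percent_conflict:.2f} percent")          # 0.24 percent
```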

7. A computer has a cache, main memory, and a disk used for virtual memory. If a referenced word is in the cache, 10 ns are required to access it. If it is in main memory but not in the cache, 200 ns are needed to load it into the cache, and then the reference is started again. If the word is not in main memory, 500 ms are required to fetch the word from the disk, followed by 200 ns to copy it to the cache, and then the reference is started again. The cache hit ratio is 99%, and the main memory hit ratio is 90%. What is the average time in ns to access a referenced word on this system? Assume that you may ignore the time it takes to determine whether or not a reference may be found in cache or main memory. The hit ratio is the percent of the time a reference is found in the given level of the memory hierarchy. Question: is this design any good?

Answer: There are three cases to consider:

| Location of referenced word | Probability | Total time for access in ns |
| --- | --- | --- |
| In cache | 0.99 | 10 |
| Not in cache, but in main memory | (0.01)(0.9) = 0.009 | 200 + 10 = 210 |
| Not in cache or main memory | (0.01)(0.1) = 0.001 | 500 ms + 200 + 10 = 500000210 |

So the average access time would be: Avg = (0.99)(10) + (0.009)(210) + (0.001)(500000210) ≈ 500012 ns. The design is no good: the average access time is over 50000 times the cache access time.
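The weighted average can be checked with a few lines of code. This is a sketch using the figures given in the problem; the variable names are illustrative, and lookup time at each level is ignored as the problem allows.

```python
# Problem 7 check: expected access time over the three cases.
CACHE_NS = 10
MEM_TO_CACHE_NS = 200
DISK_NS = 500 * 10**6          # 500 ms expressed in nanoseconds

cache_hit = 0.99
memory_hit = 0.90              # applies only to references that miss the cache

cases = [
    (cache_hit, CACHE_NS),
    ((1 - cache_hit) * memory_hit, MEM_TO_CACHE_NS + CACHE_NS),
    ((1 - cache_hit) * (1 - memory_hit), DISK_NS + MEM_TO_CACHE_NS + CACHE_NS),
]

average_ns = sum(probability * time_ns for probability, time_ns in cases)
print(f"{average_ns:.0f} ns")                         # 500012 ns
print(f"{average_ns / CACHE_NS:.0f}x cache access")   # about 50001 times slower
```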

8. How can the operating system detect an infinite loop in a program?

Answer: A timer (hardware) is added to the system. Each user is allowed some predetermined time of execution (not all users are given the same amount). If a user exceeds this time limit, the program is aborted via an interrupt.
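The timer idea can be illustrated with a toy simulation. This is not how a real operating system is implemented: here a "program" is a Python generator that yields once per unit of work, and the hypothetical `run_with_time_limit` function plays the role of the timer interrupt. Note that the timer cannot tell a true infinite loop from a program that merely runs too long; it only enforces the limit.

```python
# Toy simulation of a timer-based limit. Assumption: each yield is one "tick".
def run_with_time_limit(program, max_ticks):
    """Run a generator-based program, aborting it once it exceeds max_ticks."""
    ticks = 0
    for _ in program:
        ticks += 1
        if ticks > max_ticks:
            program.close()        # stands in for aborting via an interrupt
            return "aborted"
    return "finished"

def infinite_loop():
    while True:
        yield

def short_job(steps):
    for _ in range(steps):
        yield

print(run_with_time_limit(short_job(10), max_ticks=1000))     # finished
print(run_with_time_limit(infinite_loop(), max_ticks=1000))   # aborted
```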

Questions from Chapters 1, 2, and 3 of Silberschatz (5 points each).

Chapter 1: 1.4, 1.9, 1.10

Chapter 2: 2.2, 2.9

Chapter 3: 3.1, 3.5, 3.7, 3.11

Solution for text questions:

Prob. 1.4: What are the main differences between operating systems for mainframe computers and personal computers? Answer: Personal computer operating systems are not concerned with fair use, or maximal use, of computer facilities. Instead, they try to optimize the usefulness of the computer for an individual user, usually at the expense of efficiency. Consider how many CPU cycles are used by graphical user interfaces (GUIs). Mainframe operating systems need more complex scheduling and I/O algorithms to keep the various system components busy.

Prob. 1.9: Describe the differences between symmetric and asymmetric multiprocessing. What are three advantages and one disadvantage of multiprocessor systems? Answer: Symmetric multiprocessing treats all processors as equals, and I/O can be processed on any CPU. Asymmetric multiprocessing has one master CPU, and the remaining CPUs are slaves. The master distributes tasks among the slaves, and I/O is usually done by the master only. Multiprocessors can save money by not duplicating power supplies, housings, and peripherals. They can execute programs more quickly and can have increased reliability. They are also more complex in both hardware and software than uniprocessor systems.

Prob. 1.10: What is the main difficulty that a programmer must overcome in writing an operating system for a real-time environment? Answer: The main difficulty is keeping the operating system within the fixed time constraints of a real-time system. If the system does not complete a task in a certain time frame, it may cause a breakdown of the entire system it is running. Therefore, when writing an operating system for a real-time system, the writer must be sure that the scheduling schemes don’t allow response time to exceed the time constraint.

Prob. 2.2: How does the distinction between monitor mode and user mode function as a rudimentary form of protection (security) system? Answer: By establishing a set of privileged instructions that can be executed only when in the monitor mode, the operating system is assured of controlling the entire system at all times.

Prob. 2.9: Give two reasons why caches are useful. What problems do they solve? What problems do they cause? If a cache can be made as large as the device for which it is caching (for instance, a cache as large as a disk), why not make it that large and eliminate the device? Answer: Caches are useful when two or more components need to exchange data, and the components perform transfers at differing speeds. Caches solve the transfer problem by providing a buffer of intermediate speed between the components. If the fast device finds the data it needs in the cache, it need not wait for the slower device. The data in the cache must be kept consistent with the data in the components. If a component has a data value change, and the datum is also in the cache, the cache must also be updated. This is especially a problem on multiprocessor systems where more than one process may be accessing a datum. A component may be eliminated by an equal-sized cache, but only if: (a) the cache and the component have equivalent state-saving capacity (that is, if the component retains its data when electricity is removed, the cache must retain data as well), and (b) the cache is affordable, because faster storage tends to be more expensive.

Prob. 3.1: What are the five major activities of an operating system in regard to process management? Answer:

- The creation and deletion of both user and system processes
- The suspension and resumption of processes
- The provision of mechanisms for process synchronization
- The provision of mechanisms for process communication
- The provision of mechanisms for deadlock handling

Prob. 3.5: What is the purpose of the command interpreter? Why is it usually separate from the kernel? Answer: It reads commands from the user or from a file of commands and executes them, usually by turning them into one or more system calls. It is usually not part of the kernel since the command interpreter is subject to changes.

Prob. 3.7: What is the purpose of system calls? Answer: System calls allow user-level processes to request services of the operating system.

Prob. 3.11: What is the main advantage of the layered approach to system design? Answer: As in all cases of modular design, designing an operating system in a modular way has several advantages. The system is easier to debug and modify because changes affect only limited sections of the system rather than touching all sections of the operating system. Information is kept only where it is needed and is accessible only within a defined and restricted area, so any bugs affecting that data must be limited to a specific module or layer.