Sociology 541 Bivariate Regression Analysis

Examining relationships between a pair of quantitative variables.

We'll review three different but related aspects of these relationships: 1. The form of the relationship. If it’s linear, we estimate a formula that predicts a value on the response variable from the value on the explanatory variable. 2. Is there a significant association between the two variables by testing the hypothesis of statistical independence? 3. The strength of their association using a measure of association called correlation.

Collectively, these analyses of the relationship between two quantitative variables are known as regression.

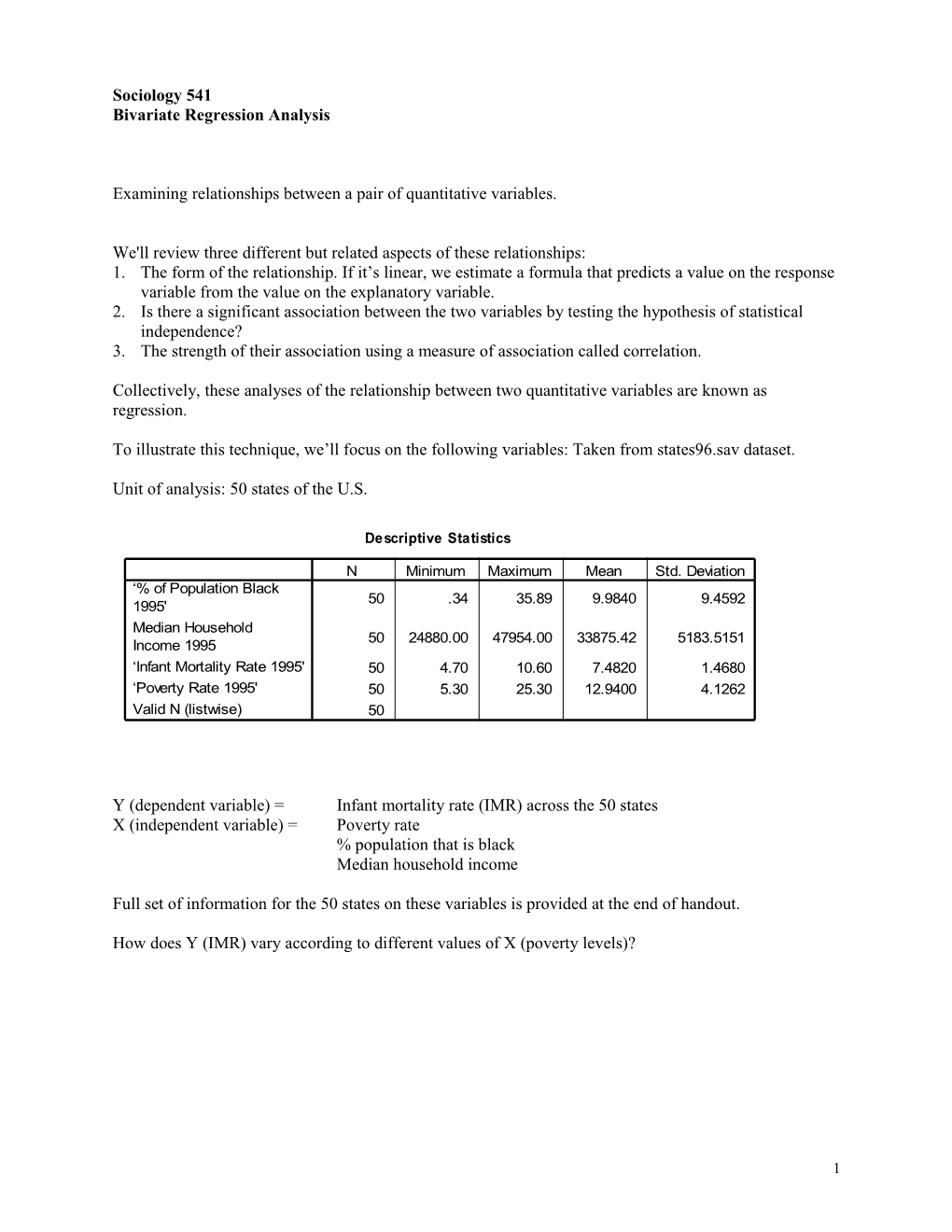

To illustrate this technique, we’ll focus on the following variables: Taken from states96.sav dataset.

Unit of analysis: 50 states of the U.S.

Descriptive Statistics

N Minimum Maximum Mean Std. Deviation ‘% of Population Black 50 .34 35.89 9.9840 9.4592 1995' Median Household 50 24880.00 47954.00 33875.42 5183.5151 Income 1995 ‘Infant Mortality Rate 1995' 50 4.70 10.60 7.4820 1.4680 ‘Poverty Rate 1995' 50 5.30 25.30 12.9400 4.1262 Valid N (listwise) 50

Y (dependent variable) = Infant mortality rate (IMR) across the 50 states X (independent variable) = Poverty rate % population that is black Median household income

Full set of information for the 50 states on these variables is provided at the end of handout.

How does Y (IMR) vary according to different values of X (poverty levels)?

1 Creating Scatterplots

Graphical representation of the relationship between two variables is called a scatterplot.

SPSS commands: Graphs-Scatter-Simple-Define-Select dependent and independent variables

11

10

9

8 ' 5 9 9 1 7 e t a R

y t

i 6 l a t r o M

5 t n a f n

I 4 ‘ 0 10 20 30

‘Poverty Rate 1995'

Each point in the diagram represents the IMR and the poverty rate for a particular state. New Jersey: (7.8, 7.3).

The two variables exhibit a positive linear association (or the two variables covary positively).

A scatterplot provides a visual means of checking whether a relationship is approximately linear. If we find that the relationship between two variables is linear, we can describe it more succinctly by fitting a straight line – a regression equation - to model the relationship.

It's very important to do this exploratory work before you begin your analysis.

When the relationship seems strongly non-linear, fitting a straight line to model the relationship is not appropriate.

2 GROUP EXERICSE: Review of Linear Functions

A number of different formulas might describe how Y relates to X. We will focus on the simplest class of formulas to describe the relationship between two variables – a straight line. Also known as linear functions.

X Y 0 5 1 7 2 9 3 11 4 13 5 15

1. Construct a scatterplot of these two variables (Y=dependent variable):

We can describe this line with the following function: y = a + bx

Intercept (a) is the value of y when x = 0.

Slope (b) tells us how much y changes for a 1-unit change in x? (y2-y1) / (x2-x1)

2. What is the intercept and slope in the linear function above? Interpret.

3. Is the relationship between X and Y positive or negative?

4. What is the predicted value of Y when X equals 20?

3 We've confirmed that the relationship between poverty rate and IMR can be described by a linear function. The scatterplot is useful, but it’s better to have a more precise expression/summary of the relationship between the two variables.

Prediction Equation

We want to come up with a linear function that can describe the scatterplot shown above.

Let’s describe this linear function in general terms first.

ˆ Yi a bX i

We can substitute a particular value of X into the formula to obtain a predicted value of Y for that value of X (we call the predicted value of Y Yhat).

However, this equation assumes that the Ys (in this case, infant mortality rates) are exact functions of the X variable (poverty rate). We should allow for errors in the prediction of Y from X since empirical relations are almost never perfect (and we saw that from the scatterplot above).

Hence, the linear function employed is as follows: ˆ Yi a bX i ei

The ei term takes into account that the ith observation is not accounted for perfectly by the prediction equation Yi = a + bXi

For this reason, ei is called the error (or residual) term.

This linear function that we’ve just specified is a model. The formula provides a simple approximation for the true relationship between X and Y. For a given value of X, the model predicts a value for Y. The better the predictions, the better the model.

4 How do we fit a line through a scatterplot? Method of Least Squares

We have to make use of all the information on both variables for each state (the IMR that corresponds to each poverty rate for each state).

11

10

9

8 ' 5 9 9 1 7 e t a R

y t

i 6 l a t r o M

5 t n a f n

I 4 ‘ 0 10 20 30

‘Poverty Rate 1995'

One common way to fit a line through a scatterplot is to pick the line that minimizes the sum of the squared residuals.

Least Squares Estimate

The method of least squares provides the prediction equation Yˆ a bX having the minimal value of ˆ 2 2 SSE (Yi Yi ) e . The least squares estimates, a and b, are the values determining the prediction equation for which the sum of squared errors SSE is a minimum sum compared to the quantity obtained using any other straight line.

Note that SSE (Sum of Squared Errors) is also known as the Residual Sum of Squares (RSS).

The SSE in a sense describes the variation around the prediction line. This estimation criterion is called the least squares error sum, or more simply, a and b are said to be estimated using ordinary least squares (OLS).

Note: Kurtz discusses the formulas used to estimate the regression line, and they are also included at the end of the handout for your reference.

5 Prediction Equation for Infant Mortality Rate and Poverty Rate

SPSS Commands: Analyze – Regression – Linear – Select dependent and independent variable(s) – Statistics - Okay

Descriptive Statistics

Mean Std. Deviation N ‘Infant Mortality Rate 1995' 7.4820 1.4680 50 ‘Poverty Rate 1995' 12.9400 4.1262 50

Model Summary

Adjusted Std. Error of Model R R Square R Square the Estimate 1 .452a .204 .187 1.3233 a. Predictors: (Constant), ‘Poverty Rate 1995'

Standard error of estimate = Root mean square error of regression line

ANOVAb

Sum of Model Squares df Mean Square F Sig. 1 Regression 21.538 1 21.538 12.299 .001a Residual 84.056 48 1.751 Total 105.594 49 a. Predictors: (Constant), ‘Poverty Rate 1995' b. Dependent Variable: ‘Infant Mortality Rate 1995'

Coefficientsa

Standardi zed Unstandardized Coefficien Coefficients ts Model B Std. Error Beta t Sig. 1 (Constant) 5.403 .622 8.691 .000 ‘Poverty Rate 1995' .161 .046 .452 3.507 .001 a. Dependent Variable: ‘Infant Mortality Rate 1995'

6 What is the line that minimizes the sum of squared errors in the case of IMR and poverty?

The prediction equation is Yhat = 5.403 + 0.161X + e

Interpretation of Y-intercept (constant): The predicted IMR when the poverty rate is zero. Interpretation of slope (poverty rate): On average, an increase of 1% in the poverty rate leads to an increase of 0.161 in the infant mortality rate (# infant deaths per 1000 births). A 10 % point increase in the poverty rate leads to a 10(0.161) or 1.61 increase in infant deaths per 1000 births.

Residuals

The prediction equation provides predicted infant mortality rates for states with various levels of X = poverty rate. For the sample data, a comparison of the predicted values to the actual infant mortality rates checks the 'goodness of fit' of the prediction equation.

The prediction errors are called residuals.

Residual: The difference between the observed and predicted values of the response variable, Y – Yˆ , is called a residual.

Using information on states provided at end of handout, calculate the predicted IMR and residual for New Jersey and Mississippi.

The output provides an estimate of the sum of squared residuals: 84.056

Linear Regression 10.00 ‘Infant M ortality Rate 1995' = 5.40 + 0.16 * pvs501 9.00 R-Square = 0.20

8.00 7.00 6.00 5.00

5.00 10.00 15.00 20.00 25.00 ‘Poverty Rate 1995'

7 GROUP EXERCISE

For the data on the 50 states in Table 9.1 on Y=violent crime rate and X=poverty rate, the prediction equation is: Yhat = 209.9 + 25.5X + e

a) Sketch a plot of the prediction equation for X between 0 and 100. b) Interpret the Y-intercept and the slope. c) Find the predicted violent crime rate for Massachusetts, which has X = 10.7 and Y = 805. d) Find the residual for the Massachusetts prediction. Interpret. e) Two states differ by 10.0 in their poverty rates. Find the difference in their predicted violent crime rates. f) The state poverty rates range from 8.0 (Hawaii) to 24.7 (for Mississippi). Over this range, find the range of predicted values for violent crime rates.

8 Describing variation about the regression line

For each fixed value of X, there is a conditional distribution of Y-values.

That is, for each poverty rate, there is a distribution of IMR values.

Linear Regression 10.00 ‘Infant Mortality Rate 1995' = 5.40 + 0.16 * pvs501 9.00 R-Square = 0.20

8.00 7.00 6.00 5.00

5.00 10.00 15.00 20.00 25.00 ‘Poverty Rate 1995'

The mean of the distribution of IMR values for a poverty rate of 10 equals Yˆ (a+bX).

How much variation is there in the IMR for all states that have a certain poverty level?

The standard error of the estimate is given by the following formula:

SSE (Y Yˆ) 2 S YX N 2 N 2

NOTE CORRECTION: Add Σ before (y-yhat)2

SYX: Standard error of predicting Y from X

Based on SPSS output above for IMR and poverty rates, calculate the standard error of the estimate.

What would larger values of this standard error of the estimate suggest?

Note: We are assuming that the variability of the observations about the regression line is the same, at any fixed X value.

9 The Coefficient of Determination (Kurtz, pp. 283-285)

Another way to assess the strength of a linear association (or how close the observed values are to the regression line).

We can think of the observed values of the IMR as due in part to a systematic component related to X (poverty levels) and to a random error component.

Graphical depiction:

Numerical depiction:

ˆ ˆ Yi Y (Yi Y ) (Yi Yi )

ˆ ˆ Yi Y (Yi Yi ) (Yi Y )

First part of this identity: Our definition of the residual or error – the discrepancy between an observation

and the predicted value for that observation (ei). Second part of this identity: The part of the observed value that can be accounted for by the regression line.

We can square and sum the components shown above. They are known as the regression sum of squares and the error sum of squares. (See Wonnacott & Wonnacott for a proof of this).

2 ˆ 2 ˆ 2 (Yi Y ) (Yi Y ) (Yi Yi )

OR

SStotal = SSregression + SSerror

This should look very familiar (Review your notes on ANOVA). However, there is one important difference. In ANOVA, we made no assumption about the form of the relationship between the independent and dependent variable. In regression analysis, we assume that the relationship between Y and X is linear.

Two terms, SSregression and SStotal, give us all the information we need to construct a proportional reduction in error or a measure of association for linear regression.

The proportional reduction in error from using the linear prediction equation instead of Y to predict Y is called the Coefficient of Determination. It is denoted by R2.

10 SS SS R 2 Total Error SSTotal

OR

SSRegression/SSTotal

Interpretation: % of variation in Y explained or associated with X.

Interpreting SPSS output

The R2 value is shown in the model summary statement and equals 0.204. This is the amount of variation in the infant mortality rate that is explained by or associated with variation in poverty rates.

Calculating the Coefficient of Determination based on the Sums of Squares:

(105.594 – 84.056) / 105.594 = 0.204 = r2 21.538 / 105.594 = 0.204

11 SPSS

Constructing a scatterplot of IMR and poverty rate:

Graphs – Scatter – Simple – Define – Specify Y (infant mortality rate) and X (poverty rate) axis variables – OK

Estimating OLS regression equation for IMR and poverty rate:

Analyze – Regression – Linear – Select dependent and independent variable(s) – Statistics – Okay

Calculating residuals:

Statistics – Regression – Linear – Dependent (HTS348) – Independent (PVS501) – Save: Predicted values (unstandardized) and residuals (unstandardized) - Okay

Plotting the regression line:

Double-click on scatterplot – Chart – Options – Fit line (Total) – Fit options – Linear regression – Continue – Okay.

12 GROUP EXERCISE: Using STATES96nodc.sav (in regression directory)

Examine the relationship between the infant mortality rate (HTS384) and the percent of the population of a state that is black (DMS439).

1. Construct a scatterplot of the two variables. Does the relationship appear to be linear? 2. What is the regression equation? Interpret the intercept and slope of the regression equation. 3. Describe the relationship between the infant mortality rate and the percent of the population that is black. How would you explain these findings? 4. Generate the predicted values and the residuals for your regression line. What is the predicted value and residual for New Jersey and Alabama? 5. What is the standard error of the estimate? Interpret. 6. What is the coefficient of determination for this regression equation? Confirm that this is its value by using the information of sums of squares. What does this figure tell you? 7. Graph the regression line between the infant mortality rate and the percent of the population of a state that is black. Offer your interpretation of the regression line in relationship to the scatterplot. Do you think the regression coefficient accurately reflects the relationship between the percent of the population that is black and the infant mortality rate?

If we have time, conduct the same analysis between the infant mortality rate and median household income.

13 SELF STUDY:

Formulas to estimate the OLS regression line

(See Wonnacott and Wonnacott / Hamilton for derivation of these coefficients)

The prediction equation is the best straight line summarizing the trend of the points in the scatter diagram. It falls closest to those points, in a certain average sense. The formulas for the coefficients of this prediction equation are:

(X X )(Y Y ) b (X X )2

The numerator is the sum of the product of the deviations of the Xs and the Ys about their respective means. When divided by N-1 in the sample, this term is the covariance and is labeled sxy.

The denominator of the regression coefficient is the sum of the squared deviations of the independent 2 variable, X, about its mean. If divided by N-1, the term would be the variance sx .

The slope of the regression line (that is, the regression coefficient) is estimated by the ratio of the covariance between Y and X to the variance of X.

Refer to Kurtz (p.264) for computing formula using sums of raw scores for easier calculation:

The intercept is estimated by the following equation: a Y bX

If b = 0, the estimate of the intercept is the mean of y. Thus, when Y and X are unrelated, the best fitting regression line will be parallel to the x-axis, passing through the Y-axis at the sample mean. Knowing a specific value of X will not yield a predicted value of Y different from the mean.

14 Measuring the Strength of a Linear Association: The Pearson Correlation Coefficient

The slope b of the prediction equation tells us the direction of the association; its sign indicates whether the prediction line slopes upward or downward as X increases. The slope does not tell us the strength of the association. The numerical value of the slope is intrinsically linked to the units of measurement of the variables.

The correlation coefficient r is a summary measure of how tightly cases are clustered around the regression line. If cases bunch very closely along the regression line, r is large in magnitude – indicating a strong relationship. If cases are widely dispersed about the regression line, then r is small in magnitude – indicating a weak relationship.

The measure of association for quantitative variables known as the Pearson correlation or simply the correlation is a standardized version of the slope. The correlation is the value the slope assumes if the measurement units for the two variables are such that their standard deviations are equal.

S X rXY b SY

where SX and SY denote the sample standard deviations for X and Y.

(X X )2 S X n 1

(Y Y )2 S Y n 1

If we increase X by one standard deviation, how many standard deviations would we see Y change?

IMR and Poverty Rate Example: Calculate the correlation coefficient r = (4.1262/1.468)0.161 = 0.452

Note that this is the same as the standardized beta coefficient in the coefficients table. This information is indicated in the table entitled Model Summary under r.

Interpretation: A one-standard deviation increase in the poverty rate is associated with a 0.452 standard deviation increase in the infant mortality rate.

15 Properties of the Pearson Correlation

1. Only valid when a linear model is appropriate for describing the relationship between two variables. It measures the strength of the linear association between X and Y. 2. –1 r 1. r = 1 when all sample points fall exactly on the prediction line. A perfect linear association. When r=0, there is no linear relationship between X and Y (random scatter or U shaped relationship) 3. r has the same sign as b (standard deviations are always positive). Same sign as covariance. 4. The larger the absolute value of r, the stronger the degree of linear association. (loose versus tight clustering) 5. The value of r does not depend on the variables' units. 6. If you only take a range of X-values sampled, the sample correlation tends to underestimate drastically (in absolute value) the population correlation.

Kurtz (pp.278-283) provides a more technical introduction to the correlation coefficient (and alternative ways to calculate it).

16 Data used in class examples.

STATE POPBLK MEDHHINC IMR POVRATE ALABAMA 25.56 25991 10.2 20.1 ALASKA 4.3 47954 6.7 7.1 ARIZONA 3.46 30863 7.4 16.1 ARKANSAS 15.86 25814 8.7 14.9 CALIFORNIA 7.64 37009 6.1 16.7 COLORADO 4.38 40706 7.1 8.8 CONNECTICUT 9.16 40243 6 9.7 DELAWARE 18.27 34928 7.2 10.3 FLORIDA 14.67 29745 7.5 16.2 GEORGIA 28.04 34099 9.8 12.1 HAWAII 2.44 42851 6 10.3 IDAHO 0.52 32676 6.4 14.5 ILLINOIS 15.33 38071 9 12.4 INDIANA 8.12 33385 9 9.6 IOWA 1.97 35519 6.6 12.2 KANSAS 6.16 30341 7.7 10.8 KENTUCKY 7.1 29810 8 14.7 LOUISIANA 31.83 27949 9.6 19.7 MAINE 0.4 33858 5.4 11.2 MARYLAND 26.72 41041 8.7 10.1 MASSACHUSETTS 6.14 38574 5.5 11 MICHIGAN 14.44 36426 8.5 12.2 MINNESOTA 2.75 37933 6.4 9.2 MISSISSIPPI 35.89 26538 10.6 23.5 MISSOURI 11.06 34825 7.9 9.4 MONTANA 0.34 27757 7.5 15.3 NEBRASKA 3.91 32929 7.7 9.6 NEVADA 7.12 36084 6 11.1 NEW HAMPSHIRE 0.7 39171 4.8 5.3 NEW JERSEY 14.49 43924 7.3 7.8 NEW MEXICO 2.43 25991 7 25.3 NEW YORK 17.6 33028 7.8 16.5 NORTH CAROLINA 22.21 31979 9.3 12.6 NORTH DAKOTA 0.47 29089 5.6 12 OHIO 11.21 34941 8.4 11.5 OKLAHOMA 7.84 26311 8.9 17.1 OREGON 1.78 36374 6.1 11.2 PENNSYLVANIA 9.68 34524 7.6 12.2 RHODE ISLAND 4.85 35359 6.9 10.6 SOUTH CAROLINA 30.03 29071 9 19.9 SOUTH DAKOTA 0.41 29578 9.3 14.5 TENNESSEE 16.23 29015 9.6 15.5 TEXAS 12.24 32039 6.6 17.4 UTAH 0.92 36480 5.1 8.4 VERMONT 0.34 33824 5.8 10.3 VIRGINIA 19.61 36222 7.6 10.2 WASHINGTON 3.31 35568 4.7 12.5 WEST VIRGINIA 3.12 24880 7.2 16.7 WISCONSIN 5.52 40955 7.4 8.5 WYOMING 0.63 31529 8.9 12.2 FROM STATES96nodc.SAV

17