PSY 2010 Lecture Notes One Way Analysis of Variance

Example problem

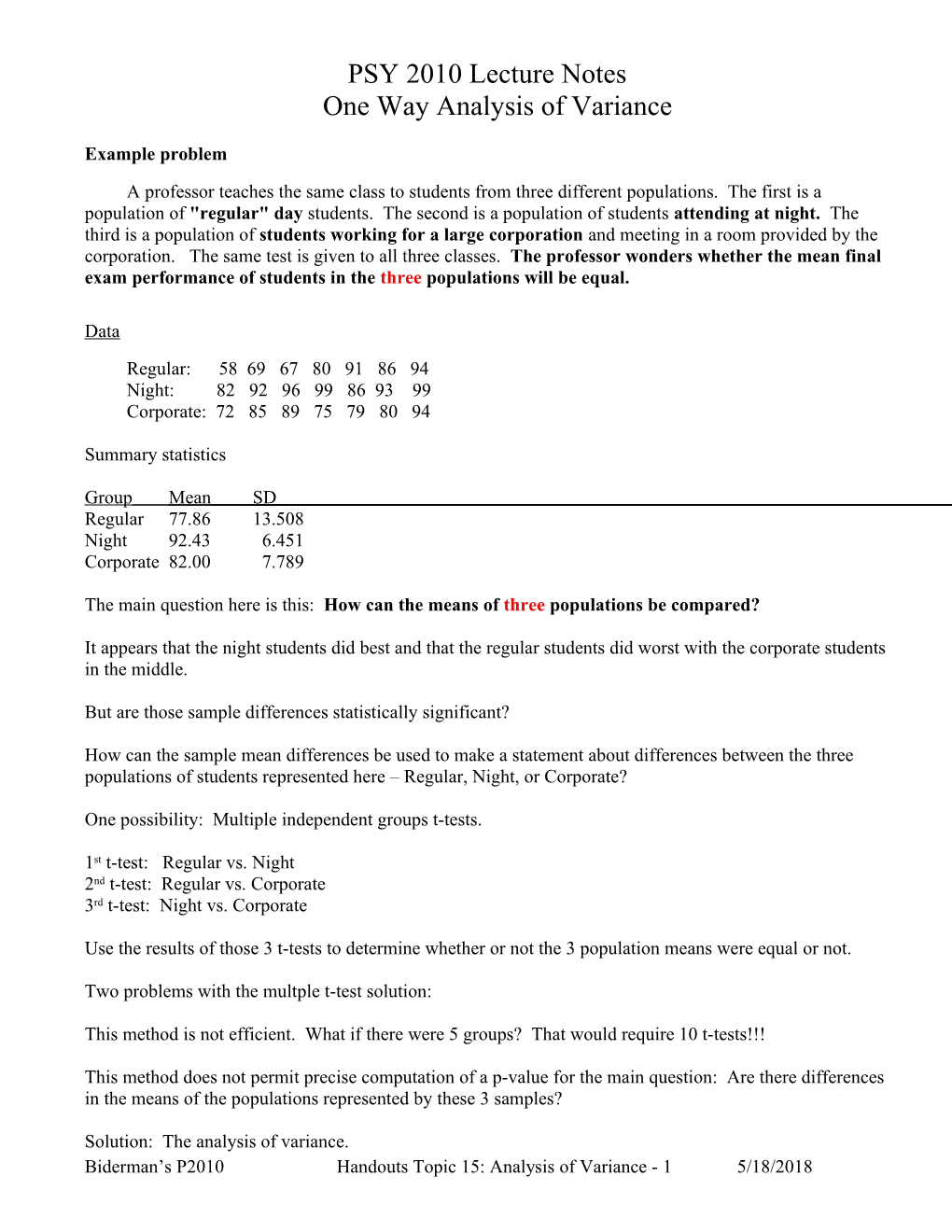

A professor teaches the same class to students from three different populations. The first is a population of "regular" day students. The second is a population of students attending at night. The third is a population of students working for a large corporation and meeting in a room provided by the corporation. The same test is given to all three classes. The professor wonders whether the mean final exam performance of students in the three populations will be equal.

Data

Regular: 58 69 67 80 91 86 94

Night: 82 92 96 99 86 93 99

Corporate: 72 85 89 75 79 80 94

Summary statistics

| Group | Mean | SD |
|---|---|---|
| Regular | 77.86 | 13.508 |
| Night | 92.43 | 6.451 |
| Corporate | 82.00 | 7.789 |

The main question here is this: How can the means of three populations be compared?

It appears that the night students did best and the regular students did worst, with the corporate students in the middle.

But are those sample differences statistically significant?

How can the sample mean differences be used to make a statement about differences between the three populations of students represented here – Regular, Night, or Corporate?

One possibility: Multiple independent groups t-tests.

1st t-test: Regular vs. Night

2nd t-test: Regular vs. Corporate

3rd t-test: Night vs. Corporate

Use the results of those 3 t-tests to determine whether or not the 3 population means are equal.

Two problems with the multiple t-test solution:

This method is not efficient. What if there were 5 groups? That would require 10 t-tests!!!
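To see how quickly the number of pairwise t-tests grows, here is a small Python sketch. The helper name `pairwise_tests` is only an illustrative choice; the count it demonstrates is K(K-1)/2, the number of ways to pick 2 groups out of K.

```python
from itertools import combinations
from math import comb

def pairwise_tests(groups):
    """Return every pair of groups that separate t-tests would compare."""
    return list(combinations(groups, 2))

pairs = pairwise_tests(["Regular", "Night", "Corporate"])
for a, b in pairs:
    print(f"{a} vs. {b}")

# The number of t-tests is K(K-1)/2, i.e. "K choose 2".
for k in range(2, 7):
    print(f"{k} groups -> {comb(k, 2)} t-tests")
```

With 5 groups the loop reports 10 t-tests, and with 6 groups it is already 15.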

This method does not permit precise computation of a p-value for the main question: Are there differences in the means of the populations represented by these 3 samples?

Solution: The analysis of variance.

Biderman’s P2010 Handouts Topic 15: Analysis of Variance - 1 5/18/2018

One Way Analysis of Variance: Overview

(One way means that the groups differ on only one dimension – type of student in the above example.)

Situation

We wish to test the hypothesis that the means of several (2 or more) populations are equal. The populations are independent - in the sense of the independent groups t-test. This means that the scores in the several populations are not matched or paired. (There is a matched/paired scores version of the analysis of variance, but we won’t cover it this semester.)

General Procedure

Take a sample of scores from each population. The sample sizes need not be equal. Compute the mean of each sample. If the values of the sample means are all "close" to each other, then retain the hypothesis of equality of the population means. But, if the values of the sample means are not "close" to each other, then reject the hypothesis of equality of the population means.

As in previous hypothesis testing situations, we first translate “close” and “far” into probabilistic terms. As before, for many data analysts “far” is any outcome whose probability is .05 or less. You can substitute your own significance level for .05.

To determine the probability associated with an outcome, statisticians employ a statistic, originally conceived by Sir R. A. Fisher, called the F statistic.

Test Statistic: F Statistic

Equal sample size formula

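$$F = \frac{n \cdot S_{\bar{X}}^2}{\left(\sum_{i=1}^{K} S_i^2\right) / K} = \frac{\text{Common sample size} \times \text{Variance of means}}{\text{Mean of sample variances}}$$

where n = common sample size

K = No. of means being compared

$S_{\bar{X}}^2$ = Variance of sample means

$S_i^2$ = Variance of scores within group i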
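The equal-n formula can be checked with a short Python sketch. The function name `f_equal_n` is hypothetical; it relies on the standard library's `statistics.variance`, which divides by n - 1, so each variance is the usual sample variance used in the formula.

```python
from statistics import mean, variance

def f_equal_n(groups):
    """F for K groups that all have the same size n (equal-n formula).

    Numerator: n times the variance of the K sample means.
    Denominator: the plain average of the K within-group variances.
    """
    n = len(groups[0])
    if any(len(g) != n for g in groups):
        raise ValueError("the equal-n formula needs groups of the same size")
    sample_means = [mean(g) for g in groups]
    numerator = n * variance(sample_means)             # n * S^2 of the means
    denominator = mean(variance(g) for g in groups)    # mean of the S_i^2
    return numerator / denominator

regular = [58, 69, 67, 80, 91, 86, 94]
night = [82, 92, 96, 99, 86, 93, 99]
corporate = [72, 85, 89, 75, 79, 80, 94]
print(round(f_equal_n([regular, night, corporate]), 3))  # 4.157
```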

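Unequal Sample Size formula

$$F = \frac{\sum_{i=1}^{K} n_i (\bar{X}_i - \bar{X})^2 / (K - 1)}{\sum_{i=1}^{K} (n_i - 1) S_i^2 / (N - K)}$$

($\bar{X}_i$ is the mean of group i; the other symbols are defined below.)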

Whew!! I’m glad we have computers to do this.

where $n_i$ = No. of scores in group i

$N = n_1 + n_2 + \dots + n_K$ = Total no. of scores observed.

$\bar{X}$ = Mean of all the N scores.

Numerator df = K - 1

Denominator df = N - K
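A minimal Python sketch of the unequal-n computation, assuming the standard one-way ANOVA definitions built from the symbols above: Mean Square Between = sum of $n_i(\bar{X}_i - \bar{X})^2$ divided by K - 1, and Mean Square Within = sum of $(n_i - 1)S_i^2$ divided by N - K. The function name `f_unequal_n` is hypothetical.

```python
from statistics import mean, variance

def f_unequal_n(groups):
    """One-way ANOVA F for K groups whose sizes may differ.

    Returns (F, numerator df, denominator df).
    """
    k = len(groups)                                     # K
    n_total = sum(len(g) for g in groups)               # N = n1 + ... + nK
    grand_mean = mean([x for g in groups for x in g])   # mean of all N scores
    ms_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups) / (k - 1)
    ms_within = sum((len(g) - 1) * variance(g) for g in groups) / (n_total - k)
    return ms_between / ms_within, k - 1, n_total - k

regular = [58, 69, 67, 80, 91, 86, 94]
night = [82, 92, 96, 99, 86, 93, 99]
corporate = [72, 85, 89, 75, 79, 80, 94]
f, df1, df2 = f_unequal_n([regular, night, corporate])
print(round(f, 3), df1, df2)  # 4.157 2 18
```

When every $n_i$ equals n, this reduces to the equal-n formula, so both give the same F for the example data.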

Why do the above? More Than You Ever Wanted to Know about F

The analysis of variance involves estimating the population variance in two different ways and then comparing those two estimates.

The numerator of the F formula

The numerator of the F statistic is called Mean Square Between Groups.

It is an estimate of the variance of the scores in the population computed from the variability of the sample means.

It’s based on the following from Chapter 15, p. 178 (standard error of the mean): $\sigma_{\bar{X}} = \sigma / \sqrt{n}$.

This means that $\sigma_{\bar{X}}^2 = \sigma^2 / n$. If so, then $n \cdot \sigma_{\bar{X}}^2 = \sigma^2$.

We don’t know the value of $\sigma_{\bar{X}}^2$, but we can estimate it using $S_{\bar{X}}^2$, the variance of the means of the samples.

So, we have an estimate of the population variance, $n \cdot S_{\bar{X}}^2$.

Key facts: This is an unbiased estimate of $\sigma^2$ if the population means are equal. But if the population means are not equal, $n \cdot S_{\bar{X}}^2$ will tend to be larger than $\sigma^2$.
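These key facts can be checked by simulation. The Python sketch below uses assumptions chosen only for illustration (three normal populations with a common σ = 10, so σ² = 100, n = 7 scores per group, a fixed random seed); the function names are hypothetical. It averages $n \cdot S_{\bar{X}}^2$ over many simulated experiments.

```python
import random
from statistics import fmean, variance

def between_estimate(pop_means, sigma, n, rng):
    """One experiment: n scores from each normal population;
    return n * (variance of the sample means)."""
    sample_means = [fmean(rng.gauss(mu, sigma) for _ in range(n)) for mu in pop_means]
    return n * variance(sample_means)

def average_between(pop_means, sigma=10.0, n=7, reps=4000, seed=1):
    """Average n * S^2 of the means over many simulated experiments."""
    rng = random.Random(seed)
    return fmean(between_estimate(pop_means, sigma, n, rng) for _ in range(reps))

# sigma^2 = 100 in both runs.
print(round(average_between([80, 80, 80])))  # equal means: close to 100
print(round(average_between([75, 85, 95])))  # unequal means: far above 100
```

With unequal means the long-run average is σ² plus n times the variance of the population means, here 100 + 7 × 100 = 800.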

The denominator of the F formula

The denominator of the F statistic is called Mean Square Within Groups.

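The denominator is simply the arithmetic average, i.e., the mean, of the individual sample variances (when the sample sizes are equal; with unequal sizes each variance is weighted by its $n_i - 1$).

Mean Square Within Groups is also an estimate of $\sigma^2$. It is unbiased whether or not the population means are equal.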

The F statistic

The F statistic is simply the ratio of the two values:

$$F = \frac{\text{Mean Square Between Groups}}{\text{Mean Square Within Groups}} = \frac{n \cdot S_{\bar{X}}^2}{\text{Average of within-group variances}}$$

Values expected if the Null Hypothesis is true

If the null hypothesis of no difference in population means is true, the value of F should be about equal to 1.

Values not expected if the Null is true

If the null is false, F should tend to be larger than 1.
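A similar simulation shows how F itself behaves. Again the settings are illustrative assumptions, not course data: normal populations with a common σ = 10, n = 7 per group, and a fixed seed; `f_stat` and `average_f` are hypothetical names. Under the null the average F is close to 1 (its theoretical mean is df2/(df2 - 2), here 18/16 ≈ 1.13); when the population means differ, F is much larger on average.

```python
import random
from statistics import fmean

def f_stat(groups):
    """One-way ANOVA F = Mean Square Between / Mean Square Within."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand = fmean(x for g in groups for x in g)
    group_means = [fmean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))

def average_f(pop_means, sigma=10.0, n=7, reps=3000, seed=2):
    """Average F over many simulated experiments."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        groups = [[rng.gauss(mu, sigma) for _ in range(n)] for mu in pop_means]
        total += f_stat(groups)
    return total / reps

print(round(average_f([80, 80, 80]), 2))  # null true: about 1
print(round(average_f([75, 85, 95]), 2))  # null false: well above 1
```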

One Way Analysis of Variance: Worked Out Example

Problem

A professor teaches the same class to students from three different populations. The first is a population of "regular" day students. The second is a population of students attending at night. The third is a population of students working for a large corporation and meeting in a room provided by the corporation. The same test is given to all three classes. The professor wonders whether the mean final exam performance of students in the three populations will be equal.

Statement of Hypotheses

H0: µ1 = µ2 = µ3.

H1: At least 1 inequality.

Test statistic

F statistic for the one way analysis of variance.

Data

Regular: 58 69 67 80 91 86 94

Night: 82 92 96 99 86 93 99

Corporate: 72 85 89 75 79 80 94

Summary statistics

| Group | Mean | SD |
|---|---|---|
| Regular | 77.86 | 13.508 |
| Night | 92.43 | 6.451 |
| Corporate | 82.00 | 7.789 |

The standard deviation of the three sample means is 7.508, so the variance of the sample means is $7.508^2 = 56.370$.

Conclusion

$$F = \frac{7 \times 7.508^2}{(13.508^2 + 6.451^2 + 7.789^2)/3} = \frac{394.590}{94.917} = 4.157$$

The F value of 4.157 is larger than 1. Since we would expect it to be about 1 if the null were true, this suggests that the null is not true. But it is possible to get an F of 4.157 even when the null is true. What would be the probability of that?
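The hand calculation above can be reproduced from the summary statistics alone. This Python sketch follows the same steps (7 times the variance of the means, divided by the average of the squared SDs). Because the tabled means and SDs are rounded, the numerator comes out near 394.58 rather than 394.590, but F still rounds to 4.157.

```python
from statistics import variance

n = 7                            # common sample size
means = [77.86, 92.43, 82.00]    # Regular, Night, Corporate (rounded)
sds = [13.508, 6.451, 7.789]

ms_between = n * variance(means)                   # n * S^2 of the sample means
ms_within = sum(sd ** 2 for sd in sds) / len(sds)  # average within-group variance
f = ms_between / ms_within
print(round(ms_between, 2), round(ms_within, 2), round(f, 3))
```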

The value we’re looking for is the p-value for the F statistic. If that p-value is less than or equal to .05, then we can feel comfortable in rejecting the null hypothesis of equal population means. Otherwise we’ll retain it.
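For this example the p-value can be computed without tables, because the numerator df is K - 1 = 2. For an F distribution with 2 numerator df, the upper-tail probability has the closed form $(1 + 2f/df_2)^{-df_2/2}$. The sketch below assumes df1 = 2 (the name `f_sf_df1_2` is hypothetical); for other df values, if SciPy is available, `scipy.stats.f.sf(f, dfn, dfd)` gives the same tail probability.

```python
def f_sf_df1_2(f, df2):
    """P(F >= f) for an F distribution with 2 numerator df and df2 denominator df.

    Only valid for df1 = 2; other df1 values need the incomplete beta function.
    """
    return (1 + 2 * f / df2) ** (-df2 / 2)

p = f_sf_df1_2(4.157, 18)
print(round(p, 3))  # 0.033
print("reject H0" if p <= .05 else "retain H0")
```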

The following shows how SPSS was used to conduct the analysis.

The SPSS output reports the p-value associated with the F statistic.

One way analysis of variance using SPSS: Analyze -> Compare Means -> Oneway

Put the name of the variable being analyzed (the dependent variable) in this box.

Click on the Options button to open the Options Dialog box.

Put the name of the variable which designates the groups being compared in this box.

Oneway

Descriptives

examscor

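| Group | N | Mean | Std. Deviation | Std. Error | 95% CI for Mean: Lower Bound | 95% CI for Mean: Upper Bound | Minimum | Maximum |
|---|---|---|---|---|---|---|---|---|
| 1 | 7 | 77.86 | 13.508 | 5.106 | 65.36 | 90.35 | 58 | 94 |
| 2 | 7 | 92.43 | 6.451 | 2.438 | 86.46 | 98.40 | 82 | 99 |
| 3 | 7 | 82.00 | 7.789 | 2.944 | 74.80 | 89.20 | 72 | 94 |
| Total | 21 | 84.10 | 11.175 | 2.439 | 79.01 | 89.18 | 58 | 99 |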

ANOVA

examscor

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 789.238 | 2 | 394.619 | 4.157 | .033 |
| Within Groups | 1708.571 | 18 | 94.921 | | |
| Total | 2497.810 | 20 | | | |
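The entries in this ANOVA table can be reproduced with a minimal Python sketch, assuming the standard sums-of-squares definitions: SS Between = sum of $n_i(\bar{X}_i - \bar{X})^2$, SS Within = sum of squared deviations of each score from its own group mean, SS Total = sum of squared deviations of each score from the grand mean, and Mean Square = SS / df. The function name `anova_table` is hypothetical.

```python
from statistics import mean

def anova_table(groups):
    """Sums of squares, df, and mean squares for a one-way ANOVA."""
    scores = [x for g in groups for x in g]
    grand = mean(scores)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    ss_total = sum((x - grand) ** 2 for x in scores)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "Between Groups": (ss_between, df_between, ms_between),
        "Within Groups": (ss_within, df_within, ms_within),
        "Total": (ss_total, len(scores) - 1),
        "F": ms_between / ms_within,
    }

regular = [58, 69, 67, 80, 91, 86, 94]
night = [82, 92, 96, 99, 86, 93, 99]
corporate = [72, 85, 89, 75, 79, 80, 94]
table = anova_table([regular, night, corporate])
for source in ("Between Groups", "Within Groups", "Total"):
    print(source, [round(v, 3) for v in table[source]])
print("F =", round(table["F"], 3))
```

Note that SS Between + SS Within = SS Total, and the Between and Within df likewise add up to the Total df.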

Means Plots

A Second Example

An Industrial/Organizational Psychologist compared three methods of teaching workers the safety rules which were supposed to be in force in the organization. Method L consisted of two days of classes, mostly lecture. Method C consisted of two days of interactive computer games designed to teach the basics of safety in the organization. Method W consisted of two days of filling out a workbook covering the safety rules. The dependent variable in the research was the number of problems answered correctly on a 50-question multiple-choice exam given on the last day of training. The data are as follows:

Group L 30 31 32 28 39 30 33 27 28 29 34 35 32 25 28 29 32 30 31 35 27 26 31 30 29 28 34 31 29 30

Group C 23 29 30 27 28 31 32 33 29 27 28 30 29 30 27 29 26 25 24 28 29 30 34 31 30 24 26 27 30 22

Group W 42 42 41 43 41 40 48 43 48 42 46 47 40 41 40 38 39 41 46 45 38 39 39 40 43 46 40 48 49 50

Hypotheses

Null Hypothesis: µL = µC = µW

Alternative Hypothesis: Some inequality between the values of the means.

Post Hoc Tests

If the p-value associated with F is larger than .05, then we have no evidence of differences between the population means. We retain the null and that’s it.

But if the p-value is <= .05, then we reject the null and conclude that there is “some inequality between the values of the means.”

Unfortunately, that’s all the overall test tells us. It does NOT tell us where the inequality lies.

For this reason, analysts usually follow up a significant overall test with special tests, called post hoc tests, designed to identify just which means are different.

In this example, the use of the Tukey b post hoc test is illustrated.

A portion of the data matrix

Specifying the analyses: Analyze -> Compare means -> One-Way ANOVA . . .

Click on the Post Hoc button to open the Post Hocs dialog box.

Check Tukey’s-b.


Click on the Options button to choose options.


Check Descriptive, Homogeneity of variance test and Means plot.

The Output

Oneway

Descriptives

numcorrect

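| Group | N | Mean | Std. Deviation | Std. Error | 95% CI for Mean: Lower Bound | 95% CI for Mean: Upper Bound | Minimum | Maximum |
|---|---|---|---|---|---|---|---|---|
| 1 | 18 | 30.67 | 3.290 | .775 | 29.03 | 32.30 | 25 | 39 |
| 2 | 42 | 28.79 | 2.876 | .444 | 27.89 | 29.68 | 22 | 35 |
| 3 | 30 | 42.83 | 3.563 | .651 | 41.50 | 44.16 | 38 | 50 |
| Total | 90 | 33.84 | 7.167 | .755 | 32.34 | 35.35 | 22 | 50 |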

ANOVA

numcorrect

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 3680.584 | 2 | 1840.292 | 179.644 | .000 |
| Within Groups | 891.238 | 87 | 10.244 | | |
| Total | 4571.822 | 89 | | | |

Post Hoc Tests

Homogeneous Subsets

numcorrect

| group | N | Subset for alpha = 0.05: 1 | Subset for alpha = 0.05: 2 |
|---|---|---|---|
| 2 | 42 | 28.79 | |
| 1 | 18 | 30.67 | |
| 3 | 30 | | 42.83 |

Means for groups in homogeneous subsets are displayed.

a. Uses Harmonic Mean Sample Size = 26.620.

b. The group sizes are unequal. The harmonic mean of the group sizes is used. Type I error levels are not guaranteed.

Means that are in different Tukey B columns are significantly different from each other. Means that are in the same column are NOT significantly different. In this instance, the Group 3 mean is significantly larger than either the Group 2 or Group 1 mean.
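The harmonic mean sample size in footnote a is the number of groups divided by the sum of the reciprocals of the group sizes, 3 / (1/18 + 1/42 + 1/30). Python's standard `statistics.harmonic_mean` computes it directly:

```python
from statistics import harmonic_mean

sizes = [18, 42, 30]  # group sizes from the Descriptives table
print(round(harmonic_mean(sizes), 3))  # 26.62
```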

Means Plots
