Statistics 510: Notes 23
Reading: Section 7.8

I. The Multivariate Normal Distribution

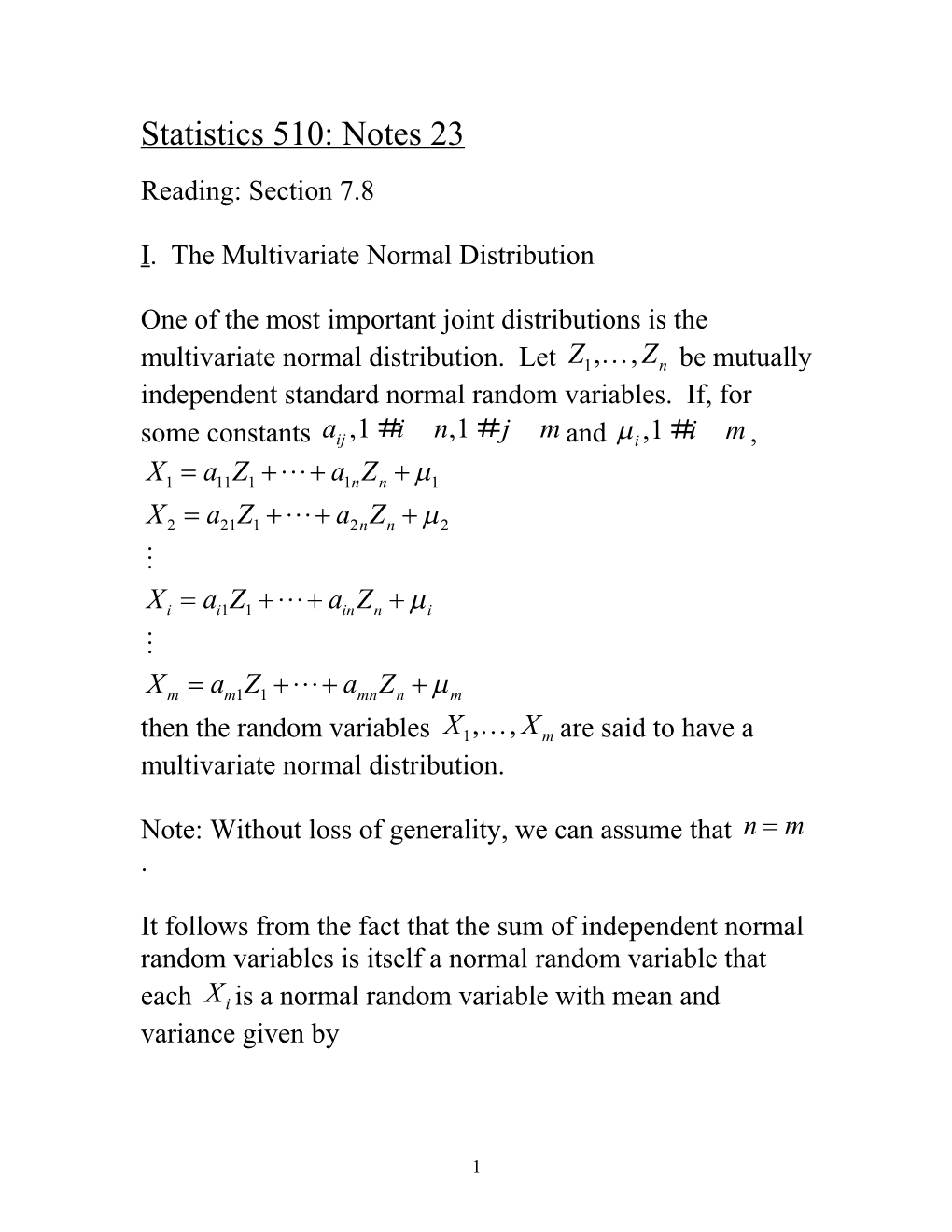

One of the most important joint distributions is the multivariate normal distribution. Let $Z_1, \ldots, Z_n$ be mutually independent standard normal random variables. If, for some constants $a_{ij}$, $1 \le i \le m$, $1 \le j \le n$, and $\mu_i$, $1 \le i \le m$,

$$
\begin{aligned}
X_1 &= a_{11} Z_1 + \cdots + a_{1n} Z_n + \mu_1 \\
X_2 &= a_{21} Z_1 + \cdots + a_{2n} Z_n + \mu_2 \\
&\;\;\vdots \\
X_i &= a_{i1} Z_1 + \cdots + a_{in} Z_n + \mu_i \\
&\;\;\vdots \\
X_m &= a_{m1} Z_1 + \cdots + a_{mn} Z_n + \mu_m
\end{aligned}
$$

then the random variables $X_1, \ldots, X_m$ are said to have a multivariate normal distribution.

Note: Without loss of generality, we can assume that $n = m$.

Because a sum of independent normal random variables is itself a normal random variable (and adding the constant $\mu_i$ only shifts it), each $X_i$ is a normal random variable with mean and variance

$$E(X_i) = \mu_i, \qquad \operatorname{Var}(X_i) = \sum_{j=1}^{n} a_{ij}^2.$$
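The mean and variance formulas can be spot-checked by simulation. The Python sketch below (standard library only) draws $X = AZ + \mu$ many times for an arbitrary illustrative $3 \times 3$ matrix of constants and compares the sample moments with $\mu_i$ and $\sum_j a_{ij}^2$. The constants, the seed, and the helper names `sample_mvn` and `theoretical_moments` are assumptions made up for this illustration.

```python
import random
import statistics

def sample_mvn(a, mu, rng):
    """Draw one vector X = A Z + mu, where Z has i.i.d. N(0, 1) entries.

    a  : m x n list of lists holding the constants a_ij
    mu : length-m list holding the constants mu_i
    """
    n = len(a[0])
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return [sum(a_ij * z_j for a_ij, z_j in zip(row, z)) + mu_i
            for row, mu_i in zip(a, mu)]

def theoretical_moments(a, mu):
    """Return ([E(X_i)], [Var(X_i)]) = ([mu_i], [sum_j a_ij^2])."""
    return list(mu), [sum(a_ij ** 2 for a_ij in row) for row in a]

# Arbitrary illustrative constants (m = n = 3).
A = [[1.0, 2.0, 0.0],
     [0.5, -1.0, 3.0],
     [0.0, 0.0, 1.5]]
MU = [1.0, -2.0, 0.5]

rng = random.Random(510)          # fixed seed for reproducibility
draws = [sample_mvn(A, MU, rng) for _ in range(40_000)]
means, variances = theoretical_moments(A, MU)
for i in range(len(MU)):
    column = [x[i] for x in draws]
    print(f"X{i + 1}: sample mean {statistics.fmean(column):+.3f} vs {means[i]:+.3f}, "
          f"sample var {statistics.variance(column):.3f} vs {variances[i]:.3f}")
```

For these constants the theoretical variances are $5$, $10.25$ and $2.25$; the sample values should agree to within ordinary Monte Carlo error.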

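Let’s specialize to the case $m = 2$. This is called the bivariate normal distribution.

$$X_1 = a_{11} Z_1 + a_{12} Z_2 + \mu_1$$

$$X_2 = a_{21} Z_1 + a_{22} Z_2 + \mu_2$$

The means and variances of $X_1$ and $X_2$ are

$$E(X_1) = \mu_1, \qquad \operatorname{Var}(X_1) = a_{11}^2 + a_{12}^2 = \sigma_1^2,$$

$$E(X_2) = \mu_2, \qquad \operatorname{Var}(X_2) = a_{21}^2 + a_{22}^2 = \sigma_2^2.$$

Since the $Z_j$ are independent with variance 1, only products of matching $Z_j$ terms contribute to the covariance, so $\operatorname{Cov}(X_1, X_2) = a_{11}a_{21} + a_{12}a_{22}$ and the correlation between $X_1$ and $X_2$ is

$$\rho = \frac{a_{11}a_{21} + a_{12}a_{22}}{\sqrt{a_{11}^2 + a_{12}^2}\,\sqrt{a_{21}^2 + a_{22}^2}}.$$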
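As a sanity check on the formulas for $\sigma_1$, $\sigma_2$ and $\rho$, the Python sketch below computes them from the coefficients $a_{ij}$ and compares $\rho$ with the sample correlation of simulated pairs. The coefficient values are arbitrary illustrations, and `bivariate_params` and `sample_correlation` are hypothetical helper names; only the standard library is used.

```python
import math
import random

def bivariate_params(a11, a12, a21, a22):
    """Return (sigma1, sigma2, rho) for X1 = a11 Z1 + a12 Z2 + mu1,
    X2 = a21 Z1 + a22 Z2 + mu2 with Z1, Z2 independent N(0, 1)."""
    sigma1 = math.hypot(a11, a12)          # sqrt(a11^2 + a12^2)
    sigma2 = math.hypot(a21, a22)          # sqrt(a21^2 + a22^2)
    rho = (a11 * a21 + a12 * a22) / (sigma1 * sigma2)
    return sigma1, sigma2, rho

def sample_correlation(xs, ys):
    """Plain Pearson sample correlation (no external libraries)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Arbitrary illustrative coefficients.
a11, a12, a21, a22 = 2.0, 1.0, 1.0, 3.0
s1, s2, rho = bivariate_params(a11, a12, a21, a22)

rng = random.Random(0)
zs = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(50_000)]
# The means mu1, mu2 are omitted: adding constants does not change a correlation.
x1 = [a11 * z1 + a12 * z2 for z1, z2 in zs]
x2 = [a21 * z1 + a22 * z2 for z1, z2 in zs]
print(f"rho = {rho:.4f}, sample correlation = {sample_correlation(x1, x2):.4f}")
```

For these coefficients $\sigma_1 = \sqrt{5}$, $\sigma_2 = \sqrt{10}$ and $\rho = 5/\sqrt{50} = 1/\sqrt{2} \approx 0.707$.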

Using the method of Chapter 6.7 for calculating the joint pdf of functions of random variables, together with a lot of messy algebra, leads to the conclusion that the joint density of $X_1$ and $X_2$ (provided $|\rho| < 1$) is

$$f(x_1, x_2) = \frac{1}{2\pi\sigma_1\sigma_2\sqrt{1-\rho^2}} \exp\left\{ -\frac{1}{2(1-\rho^2)} \left[ \left(\frac{x_1-\mu_1}{\sigma_1}\right)^2 + \left(\frac{x_2-\mu_2}{\sigma_2}\right)^2 - 2\rho\,\frac{(x_1-\mu_1)(x_2-\mu_2)}{\sigma_1\sigma_2} \right] \right\},$$

so the joint density depends only on the means, variances and correlation of $X_1$ and $X_2$.
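The closed-form density can be coded directly. The Python sketch below evaluates it, cross-checks it against the equivalent matrix form $\exp(-\tfrac12 d^\top \Sigma^{-1} d) / (2\pi\sqrt{\det\Sigma})$ with the $2 \times 2$ covariance matrix inverted by hand, and confirms with a crude midpoint-rule sum that it integrates to about 1. The parameter values, grid step, and function names are illustrative assumptions.

```python
import math

def bivariate_normal_pdf(x1, x2, mu1, mu2, sigma1, sigma2, rho):
    """Joint density f(x1, x2) in the closed form above
    (requires sigma1, sigma2 > 0 and |rho| < 1)."""
    u1 = (x1 - mu1) / sigma1
    u2 = (x2 - mu2) / sigma2
    q = u1 ** 2 + u2 ** 2 - 2 * rho * u1 * u2
    norm = 2 * math.pi * sigma1 * sigma2 * math.sqrt(1 - rho ** 2)
    return math.exp(-q / (2 * (1 - rho ** 2))) / norm

def matrix_form_pdf(x1, x2, mu1, mu2, sigma1, sigma2, rho):
    """Same density as exp(-d' S^{-1} d / 2) / (2 pi sqrt(det S)), where S is the
    2x2 covariance matrix, inverted by hand. Used only as a cross-check."""
    s11, s22, s12 = sigma1 ** 2, sigma2 ** 2, rho * sigma1 * sigma2
    det = s11 * s22 - s12 ** 2
    d1, d2 = x1 - mu1, x2 - mu2
    quad = (s22 * d1 ** 2 - 2 * s12 * d1 * d2 + s11 * d2 ** 2) / det   # d' S^{-1} d
    return math.exp(-quad / 2) / (2 * math.pi * math.sqrt(det))

# Illustrative parameters (assumptions, not from the notes).
params = dict(mu1=1.0, mu2=-1.0, sigma1=1.0, sigma2=2.0, rho=0.6)

# Crude midpoint-rule check that the density integrates to about 1
# over the box mu +/- 8 sigma in each coordinate.
h = 0.05
total = 0.0
for i in range(320):                                  # 320 * h = 16 = 2 * 8 * sigma1
    x1 = params["mu1"] - 8 * params["sigma1"] + (i + 0.5) * h
    for j in range(640):                              # 640 * h = 32 = 2 * 8 * sigma2
        x2 = params["mu2"] - 8 * params["sigma2"] + (j + 0.5) * h
        total += bivariate_normal_pdf(x1, x2, **params) * h * h
print(f"integral over the box ~ {total:.6f}")
```

The two functions agree because $\det\Sigma = \sigma_1^2\sigma_2^2(1-\rho^2)$, and the same fact is what makes the normalizing constant $2\pi\sigma_1\sigma_2\sqrt{1-\rho^2}$. Setting `rho=0` makes the density factor into a product of two univariate normal densities.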

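The algebra is shown below (adapted from the MathWorld page on the bivariate normal distribution).

To derive the bivariate normal probability function, let $Z_1$ and $Z_2$ be normally and independently distributed variates with mean 0 and variance 1, then define

$$X_1 = a_{11} Z_1 + a_{12} Z_2 + \mu_1, \qquad X_2 = a_{21} Z_1 + a_{22} Z_2 + \mu_2$$

(Kenney and Keeping 1951, p. 92). The variates $X_1$ and $X_2$ are then themselves normally distributed with means $\mu_1$ and $\mu_2$, variances

$$\sigma_1^2 = a_{11}^2 + a_{12}^2, \qquad \sigma_2^2 = a_{21}^2 + a_{22}^2,$$

and covariance

$$\sigma_{12} = \operatorname{Cov}(X_1, X_2) = a_{11}a_{21} + a_{12}a_{22}.$$

The covariance matrix is defined by

$$\Sigma = \begin{pmatrix} \sigma_1^2 & \rho\sigma_1\sigma_2 \\ \rho\sigma_1\sigma_2 & \sigma_2^2 \end{pmatrix},$$

where

$$\rho = \frac{\sigma_{12}}{\sigma_1\sigma_2}.$$

Now, the joint probability density function for $Z_1$ and $Z_2$ is

$$f_{Z_1, Z_2}(z_1, z_2) = \frac{1}{2\pi} \exp\left\{ -\tfrac{1}{2}\left(z_1^2 + z_2^2\right) \right\},$$

but from the definitions of $X_1$ and $X_2$, we have

$$x_1 - \mu_1 = a_{11} z_1 + a_{12} z_2, \qquad x_2 - \mu_2 = a_{21} z_1 + a_{22} z_2.$$

As long as

$$D = a_{11}a_{22} - a_{12}a_{21} \neq 0,$$

this can be inverted to give

$$z_1 = \frac{a_{22}(x_1 - \mu_1) - a_{12}(x_2 - \mu_2)}{D}, \qquad z_2 = \frac{-a_{21}(x_1 - \mu_1) + a_{11}(x_2 - \mu_2)}{D}.$$

Therefore,

$$z_1^2 + z_2^2 = \frac{\left[a_{22}(x_1 - \mu_1) - a_{12}(x_2 - \mu_2)\right]^2 + \left[a_{21}(x_1 - \mu_1) - a_{11}(x_2 - \mu_2)\right]^2}{D^2},$$

and expanding the numerator gives

$$(a_{21}^2 + a_{22}^2)(x_1 - \mu_1)^2 - 2(a_{11}a_{21} + a_{12}a_{22})(x_1 - \mu_1)(x_2 - \mu_2) + (a_{11}^2 + a_{12}^2)(x_2 - \mu_2)^2,$$

so the numerator equals

$$\sigma_2^2 (x_1 - \mu_1)^2 - 2\rho\sigma_1\sigma_2 (x_1 - \mu_1)(x_2 - \mu_2) + \sigma_1^2 (x_2 - \mu_2)^2.$$

Now, the denominator is

$$D^2 = (a_{11}a_{22} - a_{12}a_{21})^2 = (a_{11}^2 + a_{12}^2)(a_{21}^2 + a_{22}^2) - (a_{11}a_{21} + a_{12}a_{22})^2 = \sigma_1^2\sigma_2^2 - \sigma_{12}^2 = \sigma_1^2\sigma_2^2(1 - \rho^2),$$

so

$$z_1^2 + z_2^2 = \frac{q}{1 - \rho^2}, \qquad q = \left(\frac{x_1 - \mu_1}{\sigma_1}\right)^2 - 2\rho\,\frac{(x_1 - \mu_1)(x_2 - \mu_2)}{\sigma_1\sigma_2} + \left(\frac{x_2 - \mu_2}{\sigma_2}\right)^2.$$

In particular, the condition $D \neq 0$ is equivalent to $|\rho| < 1$.

But the Jacobian of the map $(z_1, z_2) \mapsto (x_1, x_2)$ is

$$J = \det\begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix} = D, \qquad |J| = \sigma_1\sigma_2\sqrt{1 - \rho^2},$$

so

$$f(x_1, x_2) = \frac{1}{|J|}\, f_{Z_1, Z_2}(z_1, z_2)$$

and

$$f(x_1, x_2) = \frac{1}{2\pi\sigma_1\sigma_2\sqrt{1 - \rho^2}} \exp\left\{ -\frac{q}{2(1 - \rho^2)} \right\},$$

where $q$ is the quadratic form defined above.

Q.E.D.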
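The derivation turns on two identities: inverting the linear map gives $z_1^2 + z_2^2 = q/(1-\rho^2)$, and the determinant satisfies $|D| = \sigma_1\sigma_2\sqrt{1-\rho^2}$. The Python sketch below spot-checks both at random points for random coefficients. The sampling ranges, the cutoff $|D| \ge 1$ (used to avoid nearly singular maps, where rounding error grows), and the helper name `change_of_variables_errors` are assumptions for illustration.

```python
import math
import random

def change_of_variables_errors(a11, a12, a21, a22, mu1, mu2, x1, x2):
    """At one point (x1, x2), invert x = A z + mu and return the relative
    discrepancies in  z1^2 + z2^2 = q / (1 - rho^2)  and  |D| = s1 s2 sqrt(1 - rho^2)."""
    D = a11 * a22 - a12 * a21              # Jacobian determinant; must be nonzero
    d1, d2 = x1 - mu1, x2 - mu2
    z1 = (a22 * d1 - a12 * d2) / D         # inverse of the linear map
    z2 = (-a21 * d1 + a11 * d2) / D
    s1, s2 = math.hypot(a11, a12), math.hypot(a21, a22)
    rho = (a11 * a21 + a12 * a22) / (s1 * s2)
    q = (d1 / s1) ** 2 - 2 * rho * (d1 / s1) * (d2 / s2) + (d2 / s2) ** 2
    lhs1, rhs1 = z1 ** 2 + z2 ** 2, q / (1 - rho ** 2)
    lhs2, rhs2 = abs(D), s1 * s2 * math.sqrt(1 - rho ** 2)
    return (abs(lhs1 - rhs1) / max(1.0, abs(rhs1)),
            abs(lhs2 - rhs2) / max(1.0, abs(rhs2)))

rng = random.Random(7)
worst = 0.0
for _ in range(1000):
    a = [rng.uniform(-3, 3) for _ in range(4)]
    if abs(a[0] * a[3] - a[1] * a[2]) < 1.0:
        continue                           # skip nearly singular maps (|rho| near 1)
    mu1, mu2, x1, x2 = (rng.uniform(-5, 5) for _ in range(4))
    worst = max(worst, *change_of_variables_errors(*a, mu1, mu2, x1, x2))
print(f"largest relative discrepancy: {worst:.2e}")
```

The discrepancies should be at the level of floating-point rounding; this is a numerical check of the algebra, not a substitute for it.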

Conditional expectations for the bivariate normal distribution:

We will now compute the conditional expectation $E(X_2 \mid X_1 = x_1)$ for $(X_1, X_2)$ having a bivariate normal distribution:

$$E(X_2 \mid X_1 = x_1) = \int_{-\infty}^{\infty} x_2\, f_{X_2 \mid X_1}(x_2 \mid x_1)\, dx_2.$$

We now determine the conditional density of $X_2 \mid X_1 = x_1$. In doing so, we will continually collect all factors that do not depend on $x_2$ and represent them by the constants $C_i$. The final constant will then be found by using

$$\int_{-\infty}^{\infty} f_{X_2 \mid X_1}(x_2 \mid x_1)\, dx_2 = 1.$$

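$$
\begin{aligned}
f_{X_2 \mid X_1}(x_2 \mid x_1) &= \frac{f(x_1, x_2)}{f_{X_1}(x_1)} \\
&= C_1 f(x_1, x_2) \\
&= C_2 \exp\left\{ -\frac{1}{2(1-\rho^2)} \left[ \left(\frac{x_2-\mu_2}{\sigma_2}\right)^2 - 2\rho\,\frac{x_2(x_1-\mu_1)}{\sigma_1\sigma_2} \right] \right\} \\
&= C_3 \exp\left\{ -\frac{1}{2\sigma_2^2(1-\rho^2)} \left[ x_2^2 - 2x_2\left(\mu_2 + \rho\frac{\sigma_2}{\sigma_1}(x_1-\mu_1)\right) \right] \right\} \\
&= C_4 \exp\left\{ -\frac{1}{2\sigma_2^2(1-\rho^2)} \left[ x_2 - \left(\mu_2 + \rho\frac{\sigma_2}{\sigma_1}(x_1-\mu_1)\right) \right]^2 \right\}
\end{aligned}
$$

The last expression is proportional to a normal density with mean $\mu_2 + \rho\frac{\sigma_2}{\sigma_1}(x_1-\mu_1)$ and variance $\sigma_2^2(1-\rho^2)$. Thus, the conditional distribution of $X_2 \mid X_1 = x_1$ is normal with mean $\mu_2 + \rho\frac{\sigma_2}{\sigma_1}(x_1-\mu_1)$ and variance $\sigma_2^2(1-\rho^2)$.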

$$E(X_2 \mid X_1 = x_1) = \mu_2 + \rho\,\frac{\sigma_2}{\sigma_1}(x_1 - \mu_1).$$

Also, by interchanging $X_1$ and $X_2$ in the above calculations, it follows that

$$E(X_1 \mid X_2 = x_2) = \mu_1 + \rho\,\frac{\sigma_1}{\sigma_2}(x_2 - \mu_2).$$
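The conditional mean and variance can be checked by brute force: simulate many bivariate normal pairs, keep only those whose $X_1$ lands in a narrow window around a chosen $x_1$, and compare the kept $X_2$ values with $\mu_2 + \rho\frac{\sigma_2}{\sigma_1}(x_1-\mu_1)$ and $\sigma_2^2(1-\rho^2)$. The Python sketch below does this; it generates pairs with the particular coefficients $a_{11} = \sigma_1$, $a_{12} = 0$, $a_{21} = \rho\sigma_2$, $a_{22} = \sigma_2\sqrt{1-\rho^2}$, which reproduce the required variances and correlation. That choice, the window width, and all parameter values are assumptions for illustration.

```python
import math
import random
import statistics

def conditional_params(x1, mu1, mu2, sigma1, sigma2, rho):
    """Mean and variance of X2 given X1 = x1 for a bivariate normal."""
    mean = mu2 + rho * (sigma2 / sigma1) * (x1 - mu1)
    var = sigma2 ** 2 * (1 - rho ** 2)
    return mean, var

def sample_pair(mu1, mu2, sigma1, sigma2, rho, rng):
    """One bivariate normal draw, using the particular coefficients
    a11 = sigma1, a12 = 0, a21 = rho*sigma2, a22 = sigma2*sqrt(1 - rho^2)."""
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    x1 = mu1 + sigma1 * z1
    x2 = mu2 + sigma2 * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)
    return x1, x2

# Illustrative parameters (assumptions, not from the notes).
mu1, mu2, sigma1, sigma2, rho = 0.0, 1.0, 2.0, 3.0, -0.4
x1_target, half_width = 1.5, 0.1      # approximate {X1 = x1} by |X1 - x1| < half_width

rng = random.Random(123)
kept = []                             # X2 values whose X1 landed in the window
while len(kept) < 5000:
    x1, x2 = sample_pair(mu1, mu2, sigma1, sigma2, rho, rng)
    if abs(x1 - x1_target) < half_width:
        kept.append(x2)

mean, var = conditional_params(x1_target, mu1, mu2, sigma1, sigma2, rho)
print(f"conditional mean: sample {statistics.fmean(kept):.3f}, formula {mean:.3f}")
print(f"conditional var:  sample {statistics.variance(kept):.3f}, formula {var:.3f}")
```

Here the formulas give mean $1 - 0.4 \cdot 1.5 \cdot 1.5 = 0.1$ and variance $9(1 - 0.16) = 7.56$. Note that the conditional variance does not depend on $x_1$.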

Example 1: The statistician Karl Pearson carried out a study on the resemblances between parents and children. He measured the heights of 1078 fathers and sons, and found that the fathers’ and sons’ joint heights approximately followed a bivariate normal distribution with mean of the fathers’ heights = 5 feet, 9 inches; mean of the sons’ heights = 5 feet, 10 inches; standard deviation of the fathers’ heights = 2 inches; standard deviation of the sons’ heights = 2 inches; correlation between fathers’ and sons’ heights = 0.5.

(a) Predict the height of the son of a father who is 6’2’’ tall.

(b) What is the probability that a father is taller than his son?
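One way to work parts (a) and (b) is sketched below in Python, with heights converted to inches (5’9’’ = 69, 5’10’’ = 70, 6’2’’ = 74) and fathers labelled $X_1$, sons $X_2$ (a labelling chosen here, not given in the example). Part (a) is the conditional mean formula. Part (b) uses the fact that $X_1 - X_2$ is a linear combination of the same independent standard normals and is therefore normal, with mean $\mu_1 - \mu_2$ and variance $\sigma_1^2 + \sigma_2^2 - 2\rho\sigma_1\sigma_2$. The helper `normal_cdf` is defined here via `math.erf`; treat this as a worked sketch rather than the official solution.

```python
import math

def normal_cdf(x):
    """Standard normal CDF Phi(x) via the error function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

# Parameters from the example, in inches: fathers are X1, sons are X2.
mu_f, mu_s = 69.0, 70.0        # 5'9'' and 5'10''
sd_f, sd_s = 2.0, 2.0
rho = 0.5

# (a) Predicted son's height = E(X2 | X1 = 74) for a 6'2'' father.
father = 74.0
pred_son = mu_s + rho * (sd_s / sd_f) * (father - mu_f)

# (b) X1 - X2 is normal with mean mu_f - mu_s and
#     variance sd_f^2 + sd_s^2 - 2 rho sd_f sd_s; we want P(X1 - X2 > 0).
diff_mean = mu_f - mu_s
diff_sd = math.sqrt(sd_f ** 2 + sd_s ** 2 - 2 * rho * sd_f * sd_s)
p_father_taller = 1 - normal_cdf((0 - diff_mean) / diff_sd)

print(f"(a) predicted son's height: {pred_son:.1f} inches")
print(f"(b) P(father taller than son): {p_father_taller:.4f}")
```

With these numbers the predicted height is 72.5 inches (6 feet, half an inch), and $X_1 - X_2$ is normal with mean $-1$ and variance $4$, so the probability is $1 - \Phi(0.5) \approx 0.31$. The tall father's son is predicted to be tall, but less far above average than his father: the same regression effect discussed in Example 2.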

Example 2: Regression to the Mean. As part of their training, air force pilots make two practice landings with instructors, and are rated on performance. The instructors discuss the ratings with the pilots after each landing. Statistical analysis shows that pilots who make poor landings the first time tend to do better the second time. Conversely, pilots who make good landings the first time tend to do worse the second time. The conclusion: criticism helps the pilots while praise makes them do worse. As a result, instructors were ordered to criticize all landings, good or bad. Was this warranted by the facts?

Let $X_1$ = rating on the first landing and $X_2$ = rating on the second landing.

We consider the following model for the ratings $(X_1, X_2)$ of a random pilot:

$$X_1 = \theta + aZ_1$$

$$X_2 = \theta + aZ_2$$

where $(\theta, Z_1, Z_2)$ are independent standard normals. $\theta$ represents the true skill of the pilot and $(aZ_1, aZ_2)$ are the chance errors for each flight. The joint distribution of $(X_1, X_2)$ is bivariate normal with parameters

$$\mu_1 = 0, \quad \mu_2 = 0, \quad \sigma_1^2 = 1 + a^2, \quad \sigma_2^2 = 1 + a^2, \quad \rho = \frac{1}{1 + a^2},$$

where the correlation follows from $\operatorname{Cov}(X_1, X_2) = \operatorname{Var}(\theta) = 1$. Hence

$$E(X_2 \mid X_1 = x_1) = \mu_2 + \rho\,\frac{\sigma_2}{\sigma_1}(x_1 - \mu_1) = \frac{x_1}{1 + a^2}.$$

Thus, even if praise or punishment has no effect, for pilots who did well on the first landing ($x_1 > 0$), we expect them to do worse on the second landing, and for pilots who did poorly on the first landing ($x_1 < 0$), we expect them to do better on the second landing. This is called the regression to the mean effect.
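A small simulation of the pilot model makes the point concrete: ratings are generated with no praise or criticism at all, yet pilots with a high first rating score lower on average the second time, and vice versa. The noise level `a = 1.0`, the sample size, and the cutoffs $\pm 1$ that define "good" and "poor" first landings are illustrative assumptions, not values from the notes. The predicted column averages $E(X_2 \mid X_1) = X_1/(1+a^2)$ over each group, which is valid because the conditional mean is linear in $x_1$.

```python
import random
import statistics

# Pilot model from the notes: X1 = theta + a*Z1, X2 = theta + a*Z2 with theta, Z1, Z2
# independent N(0, 1). Nothing in this simulation depends on praise or criticism.
a = 1.0                               # illustrative noise level (an assumption)
rng = random.Random(2024)
pilots = []
for _ in range(100_000):
    theta = rng.gauss(0.0, 1.0)       # true skill of this pilot
    x1 = theta + a * rng.gauss(0.0, 1.0)
    x2 = theta + a * rng.gauss(0.0, 1.0)
    pilots.append((x1, x2))

good = [p for p in pilots if p[0] > 1.0]    # strong first landing
poor = [p for p in pilots if p[0] < -1.0]   # weak first landing

def group_means(group):
    """Average first and second ratings within a group of pilots."""
    return (statistics.fmean(p[0] for p in group),
            statistics.fmean(p[1] for p in group))

for label, group in (("good first landing", good), ("poor first landing", poor)):
    m1, m2 = group_means(group)
    # E(X2 | X1) = X1 / (1 + a^2) is linear, so the group average of X2
    # should be close to m1 / (1 + a^2).
    print(f"{label}: first {m1:+.3f}, second {m2:+.3f}, "
          f"predicted second {m1 / (1 + a ** 2):+.3f}")
```

With $a = 1$ the second-landing average in each group should sit roughly halfway back toward 0, purely because part of an extreme first rating is chance error that does not repeat.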
