Lab 5 Fall ‘15

More Fun with Correlations

Two labs ago we conducted bivariate correlations between continuous scale variables. Last lab we conducted chi-squared analyses, which essentially test the relationship between two categorical variables. Correlations do not allow us to draw conclusions about the causes of a phenomenon; they only tell us whether we can better predict the value of one variable from knowledge of a person's score on another variable. Since correlations serve this purpose, it is valuable for us to know how to turn the correlation statistic into a prediction. Before we get into this, let's look at predictions in general.

Assume that in the past the mean final grade in my General Psychology courses has been 72%. A student comes to me before the course begins. I know nothing whatsoever about them, yet they ask me to predict what their final grade in the course will be. My best guess would be 72%. Why? Because it is the score that is most representative of scores obtained in the past. Clearly, I may not be correct; not all students will receive a score of 72%. However, given that I have no information other than the average past performance of students in my General Psychology classes on which to base my prediction, the class mean is my best guess. If every student came to me beforehand and asked me to make the same prediction, predicting 72% for each of them would produce the best estimate over the long run (the smallest average squared error).

Assume that I have kept records of students' final grades in General Psychology and of their high school GPAs for past years, and have found a positive correlation (r = 0.45) between these two scores. GPA is not a perfect predictor of General Psychology grades, but I know from this correlation that people who have higher GPAs also tend to obtain higher grades in General Psychology. If I ask a student what their high school GPA was, I should be able to predict their grade in General Psychology more accurately. If their GPA is higher than the mean GPA of my past students, I could predict that they are likely to obtain a final grade higher than the mean of past students in the General Psychology course. On the other hand, if their GPA is lower than the mean of my past students, my best prediction of their final General Psychology grade would be below the mean.

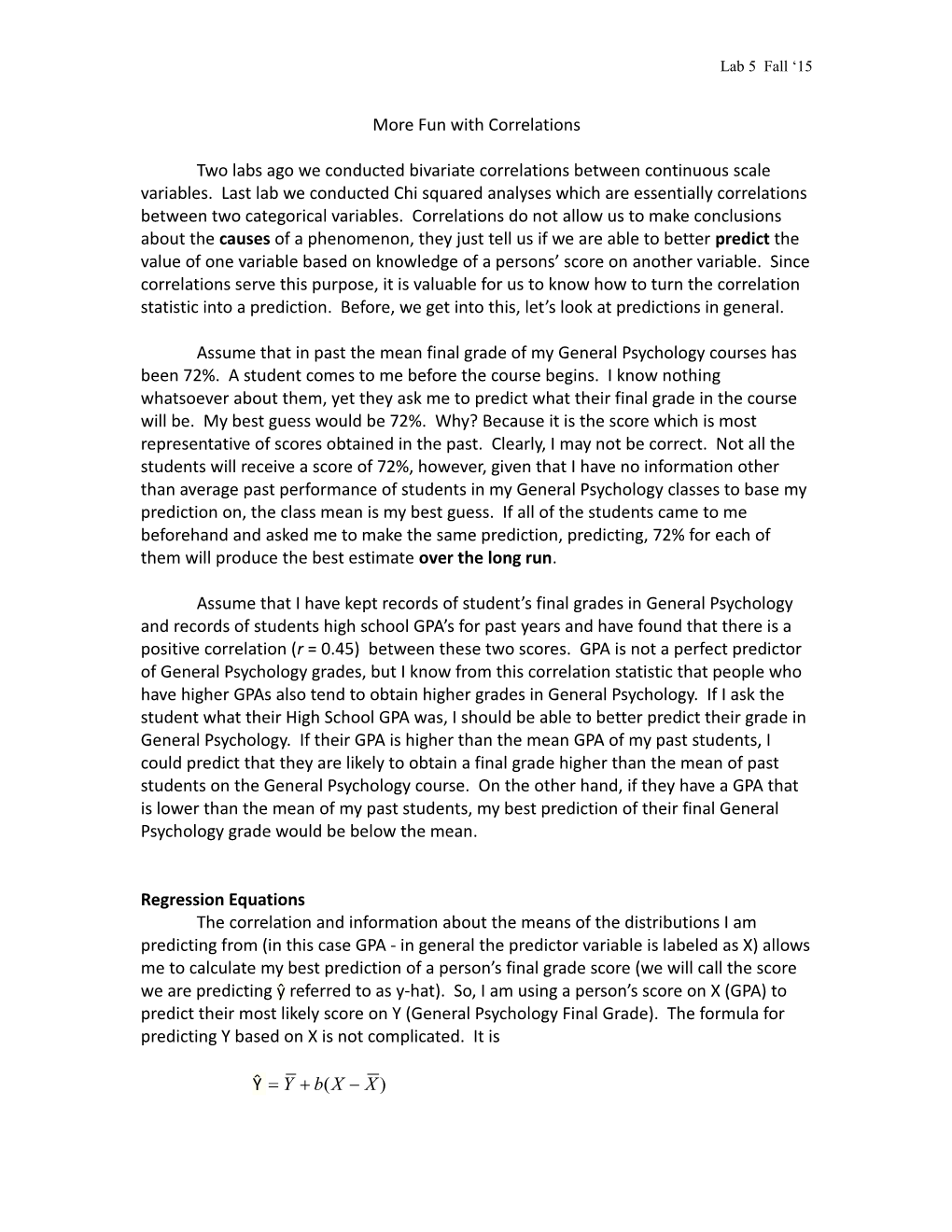

Regression Equations

The correlation, together with the means and standard deviations of the distribution I am predicting from (in this case GPA; in general, the predictor variable is labeled X) and of the distribution I am predicting, allows me to calculate my best prediction of a person's final grade (we will call the predicted score Ŷ, read "Y-hat"). So, I am using a person's score on X (GPA) to predict their most likely score on Y (General Psychology final grade). The formula for predicting Y from X is not complicated. It is

$$\hat{Y} = \bar{Y} + b(X - \bar{X})$$

where $\bar{Y}$ is the mean of the distribution I am predicting (in this case, General Psychology final grades), $\bar{X}$ is the mean of the distribution I am predicting from (in this case, GPAs), and $X$ is the person's score on distribution X. All we need to know now is what $b$ is.

$b$ is based on the correlation between the two variables ($r$):

$$b = r\,\frac{s_y}{s_x}$$

where $s_y$ is the standard deviation of Y and $s_x$ is the standard deviation of X.

From my past classes I have found that the mean GPA of my students is 2.2 with a standard deviation of 0.5, and the mean final grade is 72% with a standard deviation of 5. If a student has a GPA of 3, I can calculate my best estimate of their final grade by first calculating $b$ and then putting it into the formula above.

$$b = .45\left(\frac{5}{.5}\right) = .45(10) = 4.5$$

Putting this into our regression equation, we can predict the most likely final grade for this student.

$$\hat{Y} = 72 + 4.5(3 - 2.2) = 72 + 4.5(.8) = 72 + 3.6 = 75.6$$
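The two steps above (compute b from r and the standard deviations, then plug it into the prediction equation) can be checked with a few lines of Python. This is a minimal sketch for checking hand calculations, not part of the SPSS workflow; the function names `slope_from_r` and `predict_y` are hypothetical helpers introduced here, and the numbers are the GPA and final-grade values from the example.

```python
def slope_from_r(r, s_y, s_x):
    """Slope for predicting Y from X: b = r * (s_y / s_x)."""
    return r * (s_y / s_x)


def predict_y(x, mean_x, mean_y, b):
    """Best prediction of Y for a score x: y_hat = mean_y + b * (x - mean_x)."""
    return mean_y + b * (x - mean_x)


# Values from the GPA / General Psychology example
b = slope_from_r(r=0.45, s_y=5, s_x=0.5)            # .45 * 10
y_hat = predict_y(x=3, mean_x=2.2, mean_y=72, b=b)   # 72 + 4.5 * .8
print(round(b, 2), round(y_hat, 1))
```

The same two functions work for any single-predictor problem once you have the means, standard deviations, and r from the descriptive statistics and correlation tables.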

Not all students with GPAs of 3 will get 75.6 as their final grade, because GPA is not a perfect predictor of General Psychology final grades; 75.6 is my best estimate of the mean final grade of students with a GPA of 3. We can determine how accurate this estimate is by finding the standard deviation of the distribution of scores around the estimate. This standard deviation is called the Standard Error of the Estimate:

$$s_{\text{estimate}} = s_y\sqrt{1 - r_{xy}^2}\,\sqrt{\frac{N-1}{N-2}}$$

where N is the number of pairs of scores the correlation is based on. Notice that the larger the sample I base my correlation on, the closer $\frac{N-1}{N-2}$ gets to one. With large samples this term is essentially one, and since multiplying a number by one does not change it, we can drop it. Think of it as a correction built into the formula to adjust the error term for smaller samples.

Assume I have based my correlation on GPAs and final grades from 500 students. Because $\frac{499}{498}$ is almost exactly 1, we can drop the $\sqrt{\frac{N-1}{N-2}}$ part of the equation.

$$s_{\text{estimate}} = 5\sqrt{1 - .45^2} = 5\sqrt{1 - .203} = 5\sqrt{.797} \approx 5(.89) = 4.45$$

The standard error of the estimated (predicted) distribution is 4.45 (let's round to 4.5).
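The standard error formula can be sketched in Python as well. This is an illustrative helper (the name `standard_error_of_estimate` is hypothetical); passing `n` applies the small-sample correction, and leaving it out gives the large-sample form used above. Computed without rounding the square root first, the value comes out at about 4.47 rather than 4.45, which still rounds to 4.5.

```python
import math


def standard_error_of_estimate(s_y, r, n=None):
    """s_est = s_y * sqrt(1 - r**2), times sqrt((n - 1) / (n - 2)) when n is given."""
    s_est = s_y * math.sqrt(1 - r ** 2)
    if n is not None:
        # Small-sample correction; it is very close to 1 for large n
        s_est *= math.sqrt((n - 1) / (n - 2))
    return s_est


print(round(standard_error_of_estimate(5, 0.45), 2))         # large-sample form
print(round(standard_error_of_estimate(5, 0.45, n=500), 2))  # with the correction, N = 500
```

With N = 500 the correction changes the result only in the third decimal place, which is why it can be dropped.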

From our discussion of z-scores, recall that approximately 95% of scores in a normal distribution fall within 2 standard deviations of the mean. The mean of my distribution of estimated final grades is 75.6. Therefore, approximately 95% of people with a GPA of 3 should fall between 75.6 - (2 x 4.5) and 75.6 + (2 x 4.5).

Since 2 x 4.5 = 9, I predict that approximately 95% of the students who have GPAs of 3 will obtain a final grade between 66.6 and 84.6. Rounding, I can predict with 95% confidence that a student with a GPA of 3 will obtain a final grade between 67 and 85.
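The 95% range is just the prediction plus or minus two standard errors of the estimate. A minimal sketch, assuming the predicted scores are roughly normally distributed and using 2 rather than the more exact 1.96; `prediction_range` is a hypothetical helper name.

```python
def prediction_range(y_hat, s_est, n_sd=2):
    """Approximate range holding about 95% of predicted scores: y_hat +/- n_sd * s_est."""
    return y_hat - n_sd * s_est, y_hat + n_sd * s_est


low, high = prediction_range(75.6, 4.5)
print(round(low, 1), round(high, 1))
```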

Okay, too much math. On your lab I will ask you to calculate these, but I will not make you do this on your in-class exam. Here is what you need to know for the exam:

1. If you know the means and standard deviations of the predictor distribution and of the distribution you are predicting, and the correlation between the two variables, you can use a regression equation to estimate the mean of the distribution of Y scores for any given X score.
2. The stronger the correlation between the two variables, the less variance there will be in your predicted distribution (that is, the smaller the standard error of the estimate). At the extremes, if X perfectly predicts Y (r = 1 or r = -1), the regression equation will give you a perfect prediction of Y. If r = 0, your best prediction of Y is the mean of the distribution of Y; a correlation of zero gives you no predictive power.
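Both exam points can be seen by holding the GPA example's means and standard deviations fixed and varying only r. This is an illustrative sketch using the large-sample formulas from this section; `predict_and_error` is a hypothetical helper, and the r values of 0 and 1 are hypothetical extremes, not results from the class data.

```python
import math


def predict_and_error(x, mean_x, s_x, mean_y, s_y, r):
    """Return (y_hat, standard error of the estimate) for one predictor, large-N form."""
    b = r * s_y / s_x
    y_hat = mean_y + b * (x - mean_x)
    s_est = s_y * math.sqrt(1 - r ** 2)
    return y_hat, s_est


# A student with a GPA of 3, using the example's means and SDs at three correlations
for r in (0.0, 0.45, 1.0):
    y_hat, s_est = predict_and_error(3, 2.2, 0.5, 72, 5, r)
    print(r, round(y_hat, 1), round(s_est, 2))
```

With r = 0 the prediction is simply the mean (72) and the error equals the full standard deviation of Y (5); with r = 1 the error drops to 0.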

Partial Correlations

Suppose you are interested in the effect of counselors touching their clients during counseling sessions. You observe a large number of counselors during counseling sessions and count the number of times each counselor touches his or her client. At the end of the session, you ask each client to fill out a client satisfaction measure. You have two measures for each client: how often they were touched (we will call this variable X) and their satisfaction rating (we will call this variable Y). You enter your data into SPSS and find a significant positive correlation -- hurrah! When you announce to your fellow counselors that your data indicate that frequent counselor touch contributed to greater client satisfaction, a skeptic could argue that the correlation is not due to counselor touch at all but rather to counselor empathy. They argue that perhaps more empathic counselors also tend to touch more, and that clients give higher satisfaction ratings not because they are touched, but because their counselor is more empathic. They argue, therefore, that the correlation between touch and satisfaction is just an irrelevant side effect of empathy's relationships with both touching and satisfaction.

To answer this criticism, you might well choose to use the statistical technique known as partial correlation. Partial correlation allows you to measure the degree of relationship between two variables (X and Y) with the effect of a third variable (Z) “partialled out,” “controlled for,” or “statistically removed from the equation.” To do this, you would of course need to have measured counselor empathy. Although the formula for calculating a partial correlation by hand is not very difficult, SPSS will calculate it for you, so we will not go through the math. Conceptually, a partial correlation statistically removes the variation in both touch and client satisfaction scores that can be attributed to variation in empathy scores, and then correlates what is left. The partial correlation is denoted $r_{xy.z}$.
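The conceptual description above (remove what Z explains from both X and Y, then correlate what is left) can be sketched directly with NumPy. The data here are simulated and entirely hypothetical: empathy is built to drive both touch and satisfaction, with no direct link between touch and satisfaction. The helper name `partial_corr_by_residuals` is also hypothetical; SPSS does not work this way internally as far as this handout is concerned, but the result is mathematically the same partial correlation.

```python
import numpy as np


def partial_corr_by_residuals(x, y, z):
    """Correlate x and y after regressing each on z and keeping the residuals."""
    x, y, z = (np.asarray(v, dtype=float) for v in (x, y, z))
    # The parts of x and of y that a straight line in z cannot explain
    res_x = x - np.polyval(np.polyfit(z, x, 1), z)
    res_y = y - np.polyval(np.polyfit(z, y, 1), z)
    return np.corrcoef(res_x, res_y)[0, 1]


# Hypothetical simulated data: empathy (z) drives both touch (x) and satisfaction (y)
rng = np.random.default_rng(0)
empathy = rng.normal(size=200)
touch = 0.7 * empathy + rng.normal(size=200)
satisfaction = 0.7 * empathy + rng.normal(size=200)

r_xy = np.corrcoef(touch, satisfaction)[0, 1]                  # zero-order correlation
r_xy_z = partial_corr_by_residuals(touch, satisfaction, empathy)  # empathy partialled out
print(round(r_xy, 2), round(r_xy_z, 2))
```

In data simulated this way the zero-order correlation is clearly positive while the partial correlation sits near zero, which is the pattern the skeptic predicts.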

In our example, we found that the correlation between counselor touch (X) and client satisfaction (Y) is $r_{xy}$ = .36. The correlation between counselor empathy (Z) and counselor touch (X) is $r_{xz}$ = .65, and the correlation between counselor empathy (Z) and client satisfaction (Y) is $r_{yz}$ = .60. These are called zero-order correlations, and they are the same as the Pearson correlations we talked about two labs ago. They are called zero-order because they are correlations between two variables with zero other variables controlled for. The SPSS partial correlation procedure tells you that $r_{xy.z}$ (the correlation between touch and satisfaction, controlling for empathy) = -.05 (essentially zero). This result would tend to support the hypothesis of your skeptical colleague: there does not seem to be much of a relationship, if any, between counselor touch and client satisfaction once empathy is partialled out. When one variable is partialled out, the statistic is referred to as a first-order correlation (i.e., one variable has been controlled for). It is possible to control for several variables at the same time. If two variables are partialled out, it would be called a second-order correlation (and so on).

How does this fit in with your research projects? Several of you have run correlational studies, and when we put together your designs you may have noted that there are factors that could affect your results, or alternative explanations for any relationship you might find between two variables. I have suggested that you might want to measure these variables. For example, in a study I previously conducted with a BRII student, we looked at the relationship between Year in College and Attitudes toward Learning. Past research has suggested that as students progress through their college careers, their attitudes toward the role of their professors and their peers change. A possible confound here is that as students progress through their college careers, they also age.
Grade level and age are positively correlated, so someone is bound to suggest age as a possible explanation of any correlation we find between grade level and attitudes. We can explore this alternative explanation by calculating a partial correlation. Essentially, SPSS removes any variance in the attitude scores (and in the grade-level scores) that can be attributed to age differences between the subjects. The partial correlation between attitude and grade level with age partialled out gives us an estimate of how related grade level and attitude would be if all the subjects at the different grade levels were the same age (a sample that would be very difficult to find in real life).
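The -.05 that SPSS reports for the counselor example can be checked by hand with the standard formula for a first-order partial correlation, which needs only the three zero-order correlations:

$$r_{xy.z} = \frac{r_{xy} - r_{xz}\,r_{yz}}{\sqrt{(1 - r_{xz}^2)(1 - r_{yz}^2)}}$$

A minimal Python version follows; `first_order_partial` is a hypothetical helper name, and the inputs are the .36, .65, and .60 from the example.

```python
import math


def first_order_partial(r_xy, r_xz, r_yz):
    """Partial correlation of x and y with z partialled out, from zero-order correlations."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Counselor example: touch (x), satisfaction (y), empathy (z)
r = first_order_partial(r_xy=0.36, r_xz=0.65, r_yz=0.60)
print(round(r, 2))  # prints -0.05
```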

When we partial out variables, we may reduce a correlation to a non-significant level, indicating that the variable that has been partialled out (Z) can account for the correlation between X and Y. In other words, X is correlated with Y only because both are related to Z. In the Attitudes toward Learning example, if the partial correlation between Attitudes and Grade Level with Age partialled out is reduced to a non-significant level, the student would have to conclude that the correlation she obtained between Grade Level and Attitudes was a side effect of the relationship between Age and Attitudes. Relationships that are due to a third variable (recall the third variable problem) are called spurious.

Sometimes partialling out a variable increases the correlation between X and Y; in other words, $r_{xy.z}$ is actually larger than $r_{xy}$. Variables that increase a correlation when they are partialled out are called suppressor variables. They tend to have a very low correlation with X but a significant correlation with Y. Partialling such a variable out suppresses, or controls for, irrelevant variance, sometimes referred to as noise.
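A suppressor effect falls straight out of the first-order partial correlation formula: when Z is unrelated to X but related to Y, the numerator stays at $r_{xy}$ while the denominator shrinks below 1, so the partial correlation grows. The correlations below are hypothetical values chosen only to illustrate this, and `first_order_partial` is the same hypothetical helper as before, redefined so the snippet runs on its own.

```python
import math


def first_order_partial(r_xy, r_xz, r_yz):
    """Partial correlation of x and y with z partialled out, from zero-order correlations."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical correlations: Z unrelated to X (r_xz = 0) but related to Y (r_yz = .50)
r_xy = 0.30
r_xy_z = first_order_partial(r_xy, r_xz=0.00, r_yz=0.50)
print(r_xy, round(r_xy_z, 2))  # the partial correlation is larger than .30
```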

Multiple Correlations

Many of you have likely run into a statistic called a multiple correlation while reading the literature in your topic area. A multiple correlation (denoted R) allows you to look at more than one predictor at a time. Let's return to the attitudes study. Suppose the student finds a positive correlation between attitudes and grade level, and she also finds a positive correlation between age and attitudes. She partials out age and finds that the correlation between attitudes and grade level is still significant. So she does the partial correlation the other way around, partialling out grade level from the correlation between attitudes and age, and again finds a significant partial correlation. What she has found is that age is a predictor of attitudes (even when grade level is controlled for) and that grade level is a predictor of attitudes (even when age is controlled for). In other words, age and grade level give us independent information that could help us better predict attitudes toward learning. So, if we wanted a good estimate of attitudes, our best prediction would be based on both age and grade level; we would get a better prediction by using more than one predictor variable. This is what a multiple correlation does. Multiple correlations allow you to address the following research questions:

1. How well can a set of variables predict a particular outcome?
2. Which variable in a set of variables is the best predictor of an outcome?
3. Is a particular predictor variable still able to predict an outcome when the effects of another variable (or set of variables) are controlled for (e.g., can grade level still predict attitudes after age has been controlled for)?

When telling SPSS to run this analysis, you need to indicate which variable you are predicting (SPSS calls it the dependent variable). In the Attitudes toward Learning study, we wanted to know whether age and grade level predict attitudes, so the dependent variable is Attitudes. You also need to indicate which variables you are using as predictors (SPSS calls these independent variables). In the Attitudes study, these would be age and grade level.

There are different ways to conduct a multiple regression. For this course we will use only one, called a standard multiple regression. It enters all the predictor variables at the same time and provides the following output.

1. Zero-order correlations. The table containing the zero-order correlations is titled Correlations. This is the same type of output you would obtain if you ran a Pearson correlation. If I did a multiple correlation analysis using attitudes as the variable I am predicting and age and grade level as my predictor variables, the zero-order correlations reported would include the correlation between attitudes and attitudes (remember, this will always be +1.0), the correlation between attitudes and age, the correlation between attitudes and grade level, and the correlation between age and grade level.

2. The next table is labeled Model Summary. In the column labeled R you will find the multiple correlation. This is the correlation between the set of independent variables you specified and your dependent variable. The next column presents R². This is the same as the coefficient of determination we discussed with correlations; however, it is based on the set of predictor variables. The next column is the adjusted R². When the sample size is small, R² tends to overestimate the strength of the relationship in the population, and the adjusted R² corrects for this. When you report R² in your results section, you should report this adjusted value. The final column of this table gives you the Standard Error of the Estimate, which is the standard deviation of the predicted distribution.

3. The next table is titled ANOVA. It is just like the ANOVAs we discussed in Introduction to Experimental Psychology, and it is the test of statistical significance: SPSS tests whether the R value you obtained is significantly greater than 0. If the value in the Sig. column of this table is less than .05, your R value is significant; you can say that this correlation is high enough that it is unlikely to be due to chance.

4. Finally, the fourth table, titled Coefficients, gives the coefficients for each of your predictor variables. The first column of this table identifies which predictor variable each row refers to. The first row is labeled (Constant). The constant (c) is the intercept of the regression equation. With a single predictor, rewriting $\hat{Y} = \bar{Y} + b(X - \bar{X})$ as $\hat{Y} = (\bar{Y} - b\bar{X}) + bX$ shows that the constant is $\bar{Y} - b\bar{X}$; with several predictors, c plays the same role, so the means do not appear separately in the equation. The second column, labeled Unstandardized Coefficients, is split into two columns. The first is labeled B and the second Std. Error. The B values are the b values we discussed with the regression equation. If you wanted to predict a value for any set of predictor variable scores, you would use the formula:

$$\text{Predicted} = b_1X_1 + b_2X_2 + \dots + c$$

If, for example, I wanted to predict the attitude score for a subject with a grade level score of 3 who was 24 years old, I could plug the B values from this column into the formula:

$$B_1(3) + B_2(24) + c = \text{predicted attitude score}$$

The next column reports the Standardized Coefficients (or Beta values). These values could also be used in a regression equation to predict the z-score of an individual. Unstandardized B values cannot be compared meaningfully to each other because they are not on the same metric. Because Beta values are in standardized form (based on z-scores), they are all on the same metric, so we can compare them. If one Beta value is larger (in absolute value) than the others, we can meaningfully say that its predictor is a better individual predictor of the dependent variable (when all other predictor variables are partialled out).

The next two columns give the t statistics and Sig. values for the coefficients. If the value in the Sig. column is less than .05, that predictor's coefficient (its partial relationship with the dependent variable) is significant; it is higher than would be expected based on chance alone.
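To connect the four SPSS tables to the formulas in this section, here is a minimal NumPy sketch that computes the same quantities from raw data by ordinary least squares. The data are made up purely for illustration (they are not from the attitudes study), and the variable names `grade`, `age`, and `attitude` are hypothetical. This is a standard textbook computation, not SPSS's own code, so treat it as a cross-check on the printout rather than a replacement for it.

```python
import numpy as np

# Hypothetical made-up data (not from any study): grade level, age, attitude score
grade = np.array([1, 2, 3, 4, 1, 2, 3, 4, 2, 3], dtype=float)
age = np.array([18, 19, 21, 22, 20, 22, 24, 25, 21, 20], dtype=float)
attitude = np.array([30, 34, 33, 38, 31, 36, 37, 41, 33, 35], dtype=float)

X = np.column_stack([grade, age])
n, k = X.shape  # n cases, k predictors

# Table 1 (Correlations): zero-order correlations among all the variables
corr = np.corrcoef(np.column_stack([attitude, X]), rowvar=False)

# Table 4 (Coefficients): constant c and unstandardized B values by least squares
design = np.column_stack([np.ones(n), X])
coef, *_ = np.linalg.lstsq(design, attitude, rcond=None)
c, B = coef[0], coef[1:]

# Table 2 (Model Summary): R, R^2, adjusted R^2, standard error of the estimate
predicted = design @ coef
ss_resid = np.sum((attitude - predicted) ** 2)
ss_total = np.sum((attitude - attitude.mean()) ** 2)
r2 = 1 - ss_resid / ss_total
R = np.sqrt(r2)
adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
se_estimate = np.sqrt(ss_resid / (n - k - 1))

# Table 3 (ANOVA): F test of whether R is greater than 0, with (k, n - k - 1) df
f_stat = (r2 / k) / ((1 - r2) / (n - k - 1))

# Table 4, continued: Beta = B * s_x / s_y, Std. Error of each B, and t = B / Std. Error
beta = B * X.std(axis=0, ddof=1) / attitude.std(ddof=1)
mse = ss_resid / (n - k - 1)
std_err = np.sqrt(np.diag(mse * np.linalg.inv(design.T @ design)))[1:]
t_stat = B / std_err

print("R =", round(R, 3), " R^2 =", round(r2, 3), " adj R^2 =", round(adj_r2, 3))
print("F =", round(f_stat, 2), " df =", (k, n - k - 1))
print("c =", round(c, 3), " B =", np.round(B, 3), " Beta =", np.round(beta, 3), " t =", np.round(t_stat, 2))

# Prediction for grade level 3, age 24: B1(3) + B2(24) + c
print("predicted attitude:", round(c + B[0] * 3 + B[1] * 24, 2))
```

The Sig. values are p-values from the F and t distributions; in Python they could be obtained with, for example, `scipy.stats.f.sf(f_stat, k, n - k - 1)`, which is left out here to keep the sketch NumPy-only.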

Lab 5

1. The data sent to you this week include variables defined and labeled as follows:

ID – Subject ID
Sex
Age
tmastery – total scores from the Total Mastery Scale, which measures people's perceived control over events and circumstances in their lives. Higher values indicate higher levels of perceived control.
tpstress – total scores from a perceived stress scale. Higher scores indicate higher levels of stress.
tmarlow – total scores on the Marlowe-Crowne Social Desirability Scale, which measures the degree to which people try to present themselves in a positive light.
tpcontrol – scores from a scale that measures the degree to which people feel they have control over their internal states (emotions, thoughts, and physical reactions). Higher scores indicate higher levels of control.

For the first part of the lab, you are going to find the partial correlation between Perceived Control of Internal States (tpcontrol) and Perceived Stress (tpstress), while controlling for Social Desirability (tmarlow). Social desirability refers to people's tendency to present themselves in a positive or “socially desirable” way (also known as faking good) when completing questionnaires. Social desirability scales therefore provide a measure of demand characteristics.

A. SPSS Procedure for Partial Correlations

1. From the menu at the top of the screen, click on Analyze, then on Correlate, then on Partial.
2. Click on the two variables you want to correlate. Click on the arrow to move these into the Variables box.
3. Click on the variable you wish to control for (partial out). Move this into the Controlling for box.
4. Choose two-tailed significance and display actual significance levels.
5. On the Options menu, select Means and standard deviations and Zero-order correlations, and under Missing Values select Exclude cases pairwise.
6. Click on Continue and then on OK.

This will produce a table of descriptive statistics and two correlation matrices. The first correlation matrix contains the zero-order Pearson correlations between the variables.

Q1. Using this correlation matrix, the descriptive statistics, and the regression formula we discussed in class, calculate your best estimate of perceived stress (tpstress) for a person who has a tpcontrol score of 64.

Q2. Within what range could you be 95% confident that this person’s tpstress score would fall?

The second matrix provides the first-order correlations. Does social desirability appear to be a viable third variable?

B. Multiple Correlation (R)

Run a multiple correlation predicting Total Perceived Stress (tpstress) from Total Mastery (tmastery) and Total Perceived Control (tpcontrol).
1. From the menu at the top of the screen, click on Analyze, then on Regression, then on Linear.
2. Enter the appropriate independent and dependent variables using the arrow buttons.
3. Select Enter in the box for Method.
4. On the Statistics menu, leave the default options as they are and be sure the only additional option you select is Descriptives (otherwise you will get a whole whack of tables and stuff you will not understand).
5. On the Options menu, be sure to select Exclude cases pairwise under Missing Values.

Based on the printout, answer the following:

Q3. Predict your best estimate of perceived stress for a subject who has a perceived mastery score of 18 and a perceived control score of 40.

Q4. Which independent variable is the better predictor of perceived stress? What values are you basing your answer on, and why?

Hand in your calculations and answers for each of the four questions.