Building Affordance Relations for Robotic Agents - a Review

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Marathon 2,500 Years Edited by Christopher Carey & Michael Edwards

MARATHON 2,500 YEARS EDITED BY CHRISTOPHER CAREY & MICHAEL EDWARDS INSTITUTE OF CLASSICAL STUDIES SCHOOL OF ADVANCED STUDY UNIVERSITY OF LONDON MARATHON – 2,500 YEARS BULLETIN OF THE INSTITUTE OF CLASSICAL STUDIES SUPPLEMENT 124 DIRECTOR & GENERAL EDITOR: JOHN NORTH DIRECTOR OF PUBLICATIONS: RICHARD SIMPSON MARATHON – 2,500 YEARS PROCEEDINGS OF THE MARATHON CONFERENCE 2010 EDITED BY CHRISTOPHER CAREY & MICHAEL EDWARDS INSTITUTE OF CLASSICAL STUDIES SCHOOL OF ADVANCED STUDY UNIVERSITY OF LONDON 2013 The cover image shows Persian warriors at Ishtar Gate, from before the fourth century BC. Pergamon Museum/Vorderasiatisches Museum, Berlin. Photo Mohammed Shamma (2003). Used under CC‐BY terms. All rights reserved. This PDF edition published in 2019 First published in print in 2013 This book is published under a Creative Commons Attribution-NonCommercial- NoDerivatives (CC-BY-NC-ND 4.0) license. More information regarding CC licenses is available at http://creativecommons.org/licenses/ Available to download free at http://www.humanities-digital-library.org ISBN: 978-1-905670-81-9 (2019 PDF edition) DOI: 10.14296/1019.9781905670819 ISBN: 978-1-905670-52-9 (2013 paperback edition) ©2013 Institute of Classical Studies, University of London The right of contributors to be identified as the authors of the work published here has been asserted by them in accordance with the Copyright, Designs and Patents Act 1988. Designed and typeset at the Institute of Classical Studies TABLE OF CONTENTS Introductory note 1 P. J. Rhodes The battle of Marathon and modern scholarship 3 Christopher Pelling Herodotus’ Marathon 23 Peter Krentz Marathon and the development of the exclusive hoplite phalanx 35 Andrej Petrovic The battle of Marathon in pre-Herodotean sources: on Marathon verse-inscriptions (IG I3 503/504; Seg Lvi 430) 45 V. -

Livability Court Records 1/1/1997 to 8/31/2021

Livability Court Records 1/1/1997 to 8/31/2021 Last First Middle Case Charge Disposition Disposition Date Judge 133 Cannon St Llc Rep JohnCompany Q Florence U43958 Minimum Standards For Vacant StructuresGuilty 8/13/18 Molony 148 St Phillips St Assoc.Company U32949 Improper Disposal of Garbage/Trash Guilty- Residential 10/17/11 Molony 18 Felix Llc Rep David BevonCompany U34794 Building Permits; Plat and Plans RequiredGuilty 8/13/18 Mendelsohn 258 Coming Street InvestmentCompany Llc Rep Donald Mitchum U42944 Public Nuisances Prohibited Guilty 12/18/17 Molony 276 King Street Llc C/O CompanyDiversified Corporate Services Int'l U45118 STR Failure to List Permit Number Guilty 2/25/19 Molony 60 And 60 1/2 Cannon St,Company Llc U33971 Improper Disposal of Garbage/Trash Guilty- Residential 8/29/11 Molony 60 Bull St Llc U31469 Improper Disposal of Garbage/Trash Guilty- Residential 8/29/11 Molony 70 Ashe St. Llc C/O StefanieCompany Lynn Huffer U45433 STR Failure to List Permit Number N/A 5/6/19 Molony 70 Ashe Street Llc C/O CompanyCobb Dill And Hammett U45425 STR Failure to List Permit Number N/A 5/6/19 Molony 78 Smith St. Llc C/O HarrisonCompany Malpass U45427 STR Failure to List Permit Number Guilty 3/25/19 Molony A Lkyon Art And Antiques U18167 Fail To Follow Putout Practices Guilty 1/22/04 Molony Aaron's Deli Rep Chad WalkesCompany U31773 False Alarms Guilty 9/14/16 Molony Abbott Harriet Caroline U79107 Loud & Unnecessary Noise Guilty 8/23/10 Molony Abdo David W U32943 Improper Disposal of Garbage/Trash Guilty- Residential 8/29/11 Molony Abdo David W U37109 Public Nuisances Prohibited Guilty 2/11/14 Pending Abkairian Sabina U41995 1st Offense - Failing to wear face coveringGuilty or mask. -

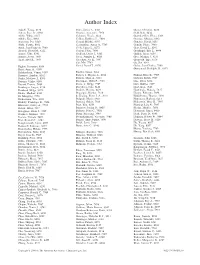

Author Index

Author Index Adachi, Yasuo, 8126 Clark, James A., 8361 Glover, Christian, 8410 Aitken, Jesse D., 8280 Cloutier, Alexandre, 7958 Gold, Ralf, 8434 Akiba, Yukio, 8327 Coleman, Nicole, 8222 Gonzalez-Rey, Elena, 8369 Alitalo, Kari, 8048 Collins, Kathleen L., 7804 Gorospe, Myriam, 8342 Anderson, Per, 8369 Conrad, Heinke, 8135 Goucher, David, 8231 Araki, Yasuto, 8102 Constantino, Agnes A., 7783 Gourdy, Pierre, 7980 Arnal, Jean-Franc¸ois, 7980 Cook, James L., 8272 Gray, David L., 8241 Aronoff, David M., 8222 Coulon, Flora, 7898 Greidinger, Eric L., 8444 Atasoy, Ulus, 8342 Crofford, Leslie J., 8361 Griffith, Jason, 8250 August, Avery, 7869 Cross, Jennifer L., 8020 Gros, Marilyn J., 8153 Azad, Abul K., 7847 Crowther, Joy E., 7847 Grunwald, Ingo, 8176 Cui, Min, 7783 Gu, Jun, 8011 Bagley, Jessamyn, 8168 Curiel, David T., 8126 Gue´ry, Jean-Charles, 7980 Bajer, Anna A., 8109 Guinamard, Rodolphe R., 8153 Balakrishnan, Vamsi, 8159 Daefler, Simon, 8262 Banerjee, Anirban, 8192 Damen, J. Mirjam A., 8184 Haddad, Elias K., 7969 Banks, Matthew I., 8393 Daniels, Mark A., 8211 Halwani, Rabih, 7969 Baranyi, Ulrike, 8168 Davenport, Miles P., 7938 Hao, Yibai, 8222 Bayard, Francis, 7980 Davis, T. Gregg, 7989 Hara, Hideki, 7859 Bernhagen, Jurgen, 8250 Davydova, Julia, 8126 Hase, Koji, 7840 Bernhard, Helga, 8135 Deckert, Martina, 8421 Hashimoto, Makoto, 7827 Bhatia, Madhav, 8333 Degauque, Nicolas, 7818 Haspot, Fabienne, 7898 Bi, Mingying, 7793 de Koning, Pieter J. A., 8184 Haudebourg, Thomas, 7898 Biedermann, Tilo, 8040 Delgado, Mario, 8369 Hausmann, Barbara, 8211 Blakely, -

ANTONIS ROKAS, Ph.D. – Brief Curriculum Vitae

ANTONIS ROKAS, Ph.D. – Brief Curriculum Vitae Department of Biological Sciences [email protected] Vanderbilt University office: +1 (615) 936 3892 VU Station B #35-1634, Nashville TN, USA http://www.rokaslab.org/ BRIEF BIOGRAPHY: I am the holder of the Cornelius Vanderbilt Chair in Biological Sciences and Professor in the Departments of Biological Sciences and of Biomedical Informatics at Vanderbilt University. I received my undergraduate degree in Biology from the University of Crete, Greece (1998) and my PhD from Edinburgh University, Scotland (2001). Prior to joining Vanderbilt in the summer of 2007, I was a postdoctoral fellow at the University of Wisconsin-Madison (2002 – 2005) and a research scientist at the Broad Institute (2005 – 2007). Research in my laboratory focuses on the study of the DNA record to gain insight into the patterns and processes of evolution. Through a combination of computational and experimental approaches, my laboratory’s current research aims to understand the molecular foundations of the fungal lifestyle, the reconstruction of the tree of life, and the evolution of human pregnancy. I serve on many journals’ editorial boards, including eLife, Current Biology, BMC Genomics, BMC Microbiology, G3:Genes|Genomes|Genetics, Fungal Genetics & Biology, Evolution, Medicine & Public Health, Frontiers in Microbiology, Microbiology Resource Announcements, and PLoS ONE. My team’s research has been recognized by many awards, including a Searle Scholarship (2008), an NSF CAREER award (2009), a Chancellor’s Award for Research (2011), and an endowed chair (2013). Most recently, I was named a Blavatnik National Awards for Young Scientists Finalist (2017), a Guggenheim Fellow (2018), and a Fellow of the American Academy of Microbiology (2019). -

Beijing 2008 Paralympic Games Cycling (Road) Individual Road Race CP1-2

The result lists compiled in this document originate from the IPC database http://www.paralympic.org/Athletes/Results and cover only those events in which Austrian athletes competed. Because these lists are "reconstructions", errors may have been introduced during transcription. If you notice any errors, you can report them to us by e-mail at [email protected], and we will arrange for the appropriate corrections. Search function: This file also has a search function. The search function depends on the software version and may therefore work differently. When searching, you can use bookmarks, which take you quickly to specific places in the document. You can also use the search field directly, or the keyboard shortcut Ctrl+F. Beijing 2008 Paralympic Games Athletics Men's 100 m T44 1st Round Heat 1 Rank Athlete(s) Result 1 Frasure, Brian USA 11.49 2 Shirley, Marlon USA 11.77 3 Wilson, Stephen AUS 11.87 4 Fourie, Arnu RSA 12.05 5 Linhart, Michael AUT 12.11 6 Oliveira, Andre Luiz BRA 12.12 Heat 2 Rank Athlete(s) Result 1 Pistorius, Oscar RSA 11.16 =PR 2 Singleton, Jerome USA 11.48 3 Bausch, Christoph SUI 12.03 4 Marai, Heros ITA 12.05 5 Flores, Antonio MLT 12.71 6 Kim, Vanna CAM 13.45 Final Round Rank Athlete(s) Result 1 Pistorius, Oscar RSA 11.17 2 Singleton, Jerome USA 11.20 3 Frasure, Brian USA 11.50 -

BELGIUM NAME № YEAR Abbeloos, Antoine & Flore (Devos) 944 1975 Abras, Joseph & Jeanne (Delage) 12468 2012 Aelbers, Mathieu H

Righteous Among the Nations Honored by Yad Vashem by 1 January 2020 BELGIUM NAME № YEAR Abbeloos, Antoine & Flore (Devos) 944 1975 Abras, Joseph & Jeanne (Delage) 12468 2012 Aelbers, Mathieu H. & Maria (Alberts) 2794 1984 Aendekerk, Petrus Johannes H. 3109 1985 Aerts Jean 10604 2005 Alardo, Victor & Ida; ch: Marcelle, Marie-Louise, 5483 1992 Joseph Alleman, Jean-Baptiste & Augusta 6978 1996 Amelot, Adele 7357 1996 Anciaux, Emile & Josephine 6466 1994 Andre Emile & daughter Madeleine 8864 2000 Andre Emilie & sister Juliette 8864.1 2000 Andre, Joseph 486 1968 Andre, Paul & Jeanne 2407 1983 Andries, Joseph Jean & Marie (Vanden Bergh) 13158 2015 Andriesse-Renard, Paule 7065 1996 Aneuse, Nelly (Mother Julienne Aneuse) 13034.3 2015 Annaert, Petrus & Isabella, her mother Catherine 11130.1 2007 Steenput-Jacobs Ansiaux, Nicolas J. & Marie Celine 4031.1 1988 Antonis-Maluin, Rene & Leontine (Depraetere) 4435 1989 Arcq, Georgees & Lydie 8432 1999 Arekens, Marie 7465.1 1997 Arnauts, Marie T.E. (Mere Ursule) 11793 2010 Arnoldy, Marcel & Celestine (Schyns) 11350 2008 Arnould, Fernand & Nelly (Bayard) 756 1972 Artus, Mathilde 3127 1985 Audenardt, Pieter & Victorine & daughter Germaine 5986 1994 (Van 't Hoff) Avaux, Evence & Irene (Grimee) 8306 1998 Baeck, Bernard & Arlette (Peeters) 11697 2009 Baeten, Antoon & Maria (Jaspers) 1748 1980 Baggen, Helene 9938 2003 Baichez, Fernand & Juliette-Marie 1638.4 2002 Bal, Auguste & Odile 8998 2000 Bamps, Louis & Elisa 9981 2003 Bams, Joannes & Elvira (Borgugnons) 13402 2017 Bartholomeus, Urbain & Marie-Louise 8910 -

Babies' First Forenames: Births Registered in Scotland in 1997

Babies' first forenames: births registered in Scotland in 1997 Information about the basis of the list can be found via the 'Babies' First Names' page on the National Records of Scotland website. Boys Girls Position Name Number of babies Position Name Number of babies 1 Ryan 795 1 Emma 752 2 Andrew 761 2 Chloe 743 3 Jack 759 3 Rebecca 713 4 Ross 700 4 Megan 645 5 James 638 5 Lauren 631 6 Connor 590 6 Amy 623 7 Scott 586 7 Shannon 552 8 Lewis 568 8 Caitlin 550 9 David 560 9 Rachel 517 10 Michael 557 10 Hannah 480 11 Jordan 554 11 Sarah 471 12 Liam 550 12 Sophie 433 13 Daniel 546 13 Nicole 378 14 Cameron 526 14 Erin 362 15 Matthew 509 15 Laura 348 16 Kieran 474 16 Emily 289 17 Jamie 452 17 Jennifer 277 18 Christopher 440 18 Courtney 276 19 Kyle 421 19= Kirsty 258 20 Callum 419 19= Lucy 258 21 Craig 418 21 Danielle 257 22 John 396 22= Katie 252 23 Dylan 394 22= Louise 252 24 Sean 367 24 Heather 250 25 Thomas 348 25 Rachael 221 26 Adam 347 26 Eilidh 214 27 Calum 335 27 Holly 213 28 Mark 310 28 Samantha 208 29 Robert 297 29 Stephanie 202 30 Fraser 292 30= Kayleigh 194 31 Alexander 288 30= Zoe 194 32 Declan 284 32 Melissa 189 33 Paul 266 33 Claire 182 34 Aaron 260 34 Chelsea 180 35 Stuart 257 35 Jade 176 36 Euan 252 36 Robyn 173 37 Steven 243 37 Jessica 160 38 Darren 231 38= Aimee 159 39 William 228 38= Gemma 159 40 Lee 226 38= Nicola 159 41= Aidan 207 41 Hayley 156 41= Stephen 207 42= Lisa 155 43 Nathan 205 42= Natalie 155 44 Shaun 198 44 Anna 151 45 Ben 195 45 Natasha 148 46 Joshua 191 46 Charlotte 134 47 Conor 176 47 Abbie 132 48 Ewan 174 -

Antonis Kokossis, Ceng, Ficheme, FRSA, FIET National Technical University of Athens School of Chemical Engineering Zografou Campus, 9, Iroon Polytechniou Str

CURRICULUM VITAE Antonis Kokossis, CEng, FIChemE, FRSA, FIET National Technical University of Athens School of Chemical Engineering Zografou Campus, 9, Iroon Polytechniou Str. GR-15780, Athens, GREECE Tel +30-2-10-772-4275 Fax +30-2-10-772- 3155 email: [email protected] http://www.ipsen.ntua.gr EDUCATION - Princeton University, Princeton, New Jersey, USA Ph.D. in Chemical Engineering, 1991; Thesis title: Synthesis and optimization of reactor networks and reactor-separation-recycle systems - National Technical University of Athens, Greece Diploma in Chemical Engineering, 1985 ACADEMIC CAREER - 2009- Professor, Process Systems Engineering, School of Chemical Engineering, National Technical University of Athens, Greece; Director, Industrial Process Systems Engineering (IPSEN) - 2019- Visiting Professor, Imperial College of Science Technology and Medicine, UK - 2012- Adjunct Research Professor, Research and Innovation Center in Information, Communication and Knowledge Technologies (ATHENA), Athens, Greece - 2000-2009, Professor and Head, Process and Information Systems Engineering, School of Engineering, University of Surrey, UK - 1993–2000 Senior Lecturer/Lecturer (1993–1997), Department of Process Integration, School of Chemical Engineering, University of Manchester (formerly UMIST), UK WORK EXPERIENCE Academic and administration service University of Manchester (formerly UMIST) - Postgraduate Admissions Tutor, Department for Process Integration, 1994-1997 - Postgraduate Admissions Tutor, Department for Process Integration,1999-2000 -

Table 5: Full List of First Forenames Given, Scotland, 2014 (Final) in Rank Order

Table 5: Full list of first forenames given, Scotland, 2014 (final) in rank order NB: * indicates that, sadly, a baby who was given that first forename has since died. Number of Number of Rank1 Boys' names NB Rank1 Girls' names NB babies babies 1 Jack 583 * 1 Emily 569 * 2 James 466 * 2 Sophie 542 * 3 Lewis 411 * 3 Olivia 485 * 4 Oliver 403 * 4 Isla 435 5 Logan 354 * 5 Jessica 419 * 6 Daniel 349 * 6 Ava 379 7 Noah 321 * 7 Amelia 372 * 8 Charlie 320 * 8 Lucy 363 * 9 Lucas 310 * 9 Lily 310 * 10 Alexander 309 * 10 Ellie 278 * 11 Mason 285 * 11 Ella 273 12 Harris 276 * 12 Sophia 271 13 Max 274 * 13 Grace 265 * 14 Harry 268 * 14 Chloe 251 15 Finlay 267 15 Freya 249 16 Adam 266 * 16 Millie 246 17 Aaron 264 * 17 Mia 230 18 Ethan 259 18 Emma 229 19= Cameron 256 19 Eilidh 224 19= Jacob 256 * 20 Anna 218 21 Callum 252 21 Charlotte 213 * 22 Archie 239 22 Eva 210 * 23 Alfie 236 * 23= Holly 208 24 Leo 234 * 23= Ruby 208 * 25 Thomas 228 * 25 Layla 190 26 Nathan 226 26 Hannah 187 27 Riley 223 * 27 Evie 175 28 Rory 215 28 Orla 171 * 29 Matthew 214 29 Katie 170 * 30 Joshua 213 * 30 Poppy 162 * 31 Oscar 212 31 Erin 154 32 Jamie 211 32 Leah 144 33 Ryan 208 * 33 Lexi 140 * 34 Luke 195 34 Molly 132 35 William 180 * 35= Isabella 130 36 Liam 178 35= Skye 130 37 Dylan 176 * 37 Lacey 124 * 38 Samuel 166 38 Abigail 122 * 39 Andrew 163 * 39 Georgia 119 40= David 154 * 40= Rebecca 115 40= John 154 40= Sofia 115 42 Connor 146 42= Amber 113 * 43= Brodie 144 42= Hollie 113 43= Kyle 144 * 44 Amy 111 45 Joseph 143 45= Brooke 107 46 Kian 142 * 45= Daisy 107 47 Benjamin 141 45= Niamh 107 48= Aiden 139 48= Lilly 106 48= Harrison 139 * 48= Zoe 106 * 50 Robert 135 * 50 Rosie 102 51= Ben 130 51 Abbie 101 51= Muhammad 130 52 Robyn 100 * 53 Michael 127 53 Sienna 98 54 Tyler 123 * 54= Summer 96 55 Kai 120 54= Zara 96 56 Euan 114 * 56 Iona 91 57= Arran 112 57 Maya 89 57= Jayden 112 58 Sarah 88 59 Jake 111 * 59= Aria 87 60= Cole 110 59= Maisie 87 60= Ollie 110 * 61 Cara 85 Footnote 1) The equals sign 
indicates that there is more than one name with this position of rank in the list. -

Seven Greek-Americans on Forbes' 400 Richest List

S O C V st ΓΡΑΦΕΙ ΤΗΝ ΙΣΤΟΡΙΑ W ΤΟΥ ΕΛΛΗΝΙΣΜΟΥ E 101 ΑΠΟ ΤΟ 1915 The National Herald anniversa ry N www.thenationalherald.com A wEEkly GrEEk-AmEriCAN PuBliCATiON 1915-2016 VOL. 19, ISSUE 991 October 8-14 , 2016 c v $1.50 Maillis, a Seven Greek-Americans On Forbes’ 400 Richest List 9-Year-Old New Balance’s Jim Davis, a philanthropist Enrolled and son of Greek immigrants, is #94 Forbes Magazine’s annual list crosoft’s Bill Gates at number of the 400 richest Americans in - one and Amazon’s Jeff Bezos, in College cludes seven Greek-Americans, who moved into second place with New Balance running ahead of investor Warren Buffet, shoe’s Jim Davis leading his have fortunes totaling $814 bil - Budding Physicist peers at number 94 with an es - lion. timated fortune of $5.1 billion, Facebook’s Mark Zuckerberg Plans to Prove tied with seven others. is fourth, former New York Davis, 73, the son of Greek Mayor Michael Bloomberg, that God Exists immigrants, lives in Newton, sixth, and Larry Page and Sergey Massachusetts, and oversees Brin of Google at 9 and 10, with TNH Staff one of the top running shoe Brin the richest immigrant on companies in the world. the list, 14 of whom have more William Maillis is a 9-year- He has donated to many money than New York business - old with a lot more on his plate causes, including financing stat - man and Presidential candidate than most kids his age. Most are ues of 1946 Boston Marathon Donald Trump, whose fortune in the fourth grade, tackling winner Stylianos Kyriakides, fell $800 million very fast. -

Uitslag Van Het Rad 10-04-16 Totaal.Xlsx

Gilde Sint Catharina Moergestel Uitslag " Rad van Sinte Catrien " dd. 3 & 10 april 2016 Naam Woon- Naam 3 10 Gilde Plaats Schutter april april Gilde Sint Joris Diessen Henrdrie v Berkel 20 Gilde Sint Joris Diessen Paul van Berkel 20 Gilde Sint Joris Diessen Hans v Berkel 18 Gilde Sint Joris Diessen Co vd Hout 17 Gilde Sint Joris Diessen Aida v Berkel 11 Gilde Sint Joris & St. Catharina Gemonde Wilfried van de Leest 20 Gilde Sint Joris & St. Catharina Gemonde Harrie Geerts 16 Gilde Sint Antonius Sebastiaan Gemonde Tonnie van de Sande 19 Gilde Sint Antonius Sebastiaan Gemonde Tjeerd van der Zee 9 Gilde Sint Joris Hapert Frans v Rooy 16 Gilde Sint Joris Sebastiaan Heukelom Rob Elings 13 Gilde Sint Joris Hoogeloon Kees van Puijenbroek 17 Gilde Sint Joris Hoogeloon Jan Nouwens 16 Gilde Sint Joris Hoogeloon Bert van Rooij 15 Gilde Sint Joris Hoogeloon Jack van Rooij 15 Gilde Sint Joris Hoogeloon Jan Michiels 6 Gilde Sint Joris Hooge Mierde Jos Wouters 12 Gilde Heilige Sacrament Hooge Mierde Truus Wouters 12 Gilde Sint Antonis & Sint Martinus Lexmond Fons Brand 18 Gilde Sint Antonis & Sint Martinus Lexmond Lex van Heumen 17 Gilde Sint Antonis & Sint Martinus Lexmond Walter Huisman 16 Gilde Sint Antonis & Sint Martinus Lexmond Gratia Brand 0 Gilde Sint Catharina Moergestel René Bruurs 19 Gilde Sint Catharina Moergestel Jan Wolfs 19 Gilde Sint Catharina Moergestel Geert Denissen 18 Gilde Sint Catharina Moergestel Peter Wolfs sr 18 Gilde Sint Catharina Moergestel Henk vd Zanden 18 Gilde Sint Catharina Moergestel Johan van Daelen 17 Gilde Sint Catharina Moergestel Jochem Bruurs 16 Gilde Sint Catharina Moergestel Sjef v Roessel 16 Gilde Sint Catharina Moergestel Jos vd Ven 16 Gilde Sint Catharina Moergestel Peter Scheffers 15 Gilde Sint Catharina Moergestel Gos Denissen 14 Gilde Sint Catharina Moergestel Frans Becx 13 1 Gilde Sint Catharina Moergestel Uitslag " Rad van Sinte Catrien " dd. -

List of Participants

First name Last name Organization Position Rose Abdollahzadeh Chatham House Research Partnerships Manager David Abulafia Cambridge University Professor Of Mediterranean History Evangelia Achladi General Consulate Of Greece At Constantinople/Sismanoglio Megaro Greek Language Instructor - Librarian Babis Adamantidis Vasiliki Adamidou LIDL Hellas Corporate Communications and CSR Director Katerina Agorogianni The Greek Guiding Association Vice Chair of National Board Eleni Agouridi Stavros Niarchos Foundation Program Officer George Agouridis Stavros Niarchos Foundation Board Member & Group Chief Legal Counsel Kleopatra Alamantariotou Biomimicry Greece/ Nasa Challenge Greece Founder CEO Christina Albanou Spastics' Society of Northern Greece Director Eleni Alexaki US Embassy Cultural Affairs Specialist Maria Alexiou TITAN Cement SA Vasiliki Alexopoulos Stavros Niarchos Foundation Intern Georgios Alexopoulos European Research Institute on Cooperative and Social Enterprises Senior Researcher Ben Altshuler Institute for Digital Archaeology Director Mike Ammann Embassy Of Switzerland Deputy Head Of Mission Virginia Anagnos Goodman Media International Executive Vice President Dora Anastasiadou American Farm School of Thessaloniki -Perrotis College Despina Andrianopoulou American Embassy Protocol Specialist Mayor's Office Communications & Foteini Andrikopoulou City Of Athens Public Relations Advisor Elly Andriopoulou Stavros Niarchos Foundation SNFCC Grant Manager Anastasia Andritsou British Council Head Partnerships & Programmes Sophia Anthopoulou