SNS COLLEGE OF TECHNOLOGY (An Autonomous Institution)

COIMBATORE – 35

DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING (UG & PG)

II Year Computer Science and Engineering, 4th Semester

UNIVERSITY QUESTIONS BANK

Subject Code & Name: CS301 SOFTWARE ENGINEERING

Prepared by: Mr. R. Kamalraj, AP/CSE, Ms. M. Vaijayanthi, AP/CSE, Ms. S. Jaya Shree, AP/CSE

UNIT-I

PART – A

1. Give the difference between Software Engineering and System Engineering. (Nov/Dec 11)

Software Engineering deals with designing and developing software of the highest quality, while Systems Engineering is the sub-discipline of engineering that deals with the overall management of engineering projects during their life cycle.

2. What are the drawbacks of the Development process? (Nov/Dec 11)

Organizational development is the ongoing attempt to improve overall company productivity and efficiency by creating a nurturing atmosphere for employees. It can be used to effect organizational change, or it can be implemented to improve specific operations. Some of the elements of organizational development include training, performance rewards, team-building exercises and improving workplace communication. There are advantages and disadvantages to using the organizational development method of company growth and change.

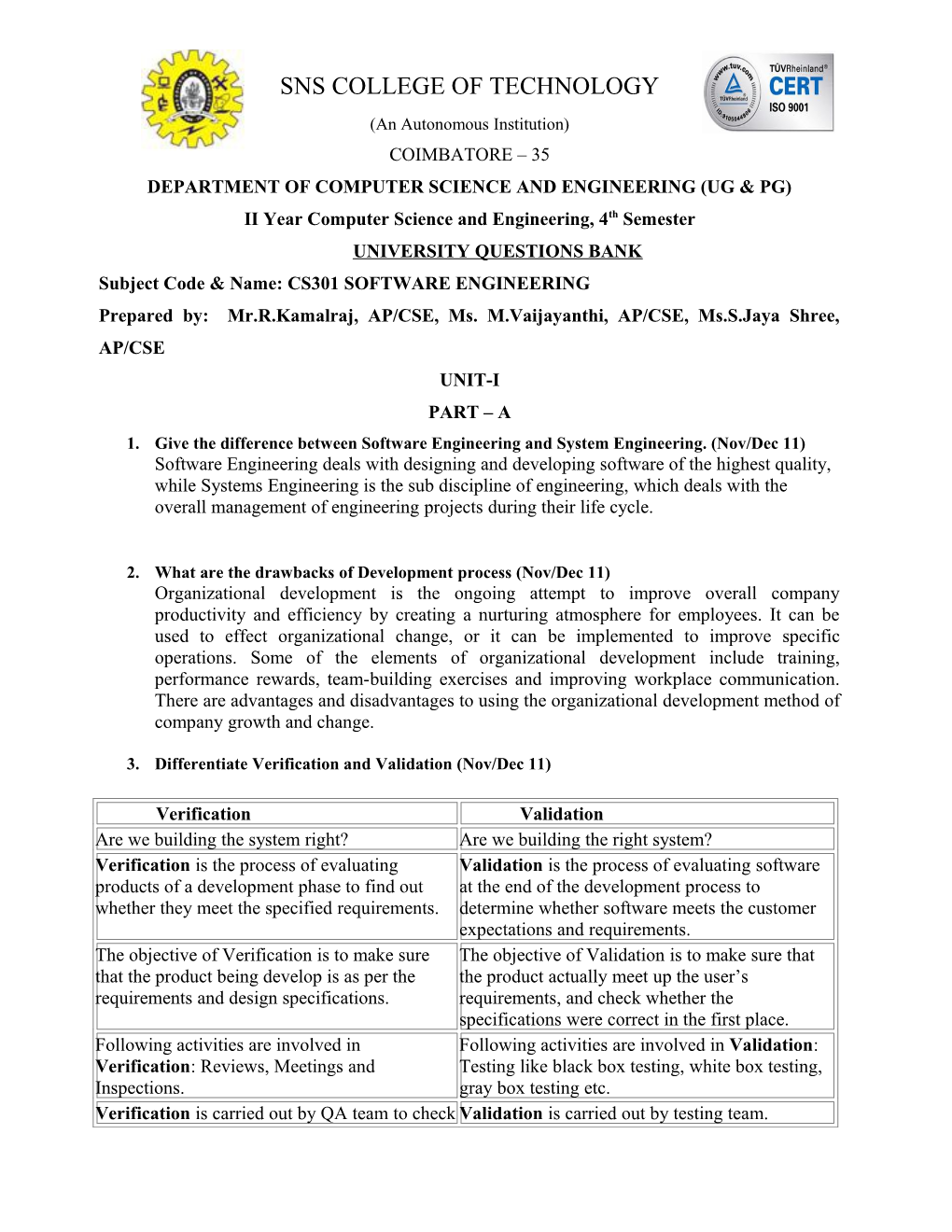

3. Differentiate Verification and Validation (Nov/Dec 11)

| Verification | Validation |
| --- | --- |
| Are we building the system right? | Are we building the right system? |
| Verification is the process of evaluating the products of a development phase to find out whether they meet the specified requirements. | Validation is the process of evaluating software at the end of the development process to determine whether it meets the customer expectations and requirements. |
| The objective of Verification is to make sure that the product being developed is as per the requirements and design specifications. | The objective of Validation is to make sure that the product actually meets the user's requirements, and to check whether the specifications were correct in the first place. |
| Activities involved in Verification: reviews, meetings and inspections. | Activities involved in Validation: testing, such as black box testing, white box testing, gray box testing, etc. |
| Verification is carried out by the QA team to check whether the implemented software is as per the specification document. | Validation is carried out by the testing team. |
| Execution of code does not come under Verification. | Execution of code comes under Validation. |

4. What is meant by Software Validation (Nov/Dec 11)

Validation is the process of evaluating software at the end of the development process to determine whether software meets the customer expectations and requirements.

5. What is meant by User Requirements? (Nov/Dec 11)

What user really expects from the software application is known as user requirements.

6. What are the advantages of Software Prototyping? (June 2009)

* Users are actively involved in the development.
* Since a working model of the system is provided in this methodology, the users get a better understanding of the system being developed.
* Errors can be detected much earlier.
* Quicker user feedback is available, leading to better solutions.
* Missing functionality can be identified easily.
* Confusing or difficult functions can be identified.
* Requirements validation and quick implementation of an incomplete, but functional, application.

7. List the characteristics of Software as a product. (June 2009)

* Functionality
* Reliability
* Usability
* Efficiency
* Maintainability
* Portability

8. What are the limitations of Waterfall model? (June 2009)

* The model implies that you should attempt to complete a given stage before moving on to the next stage.
* It does not account for the fact that requirements constantly change.
* Customers cannot use anything until the entire system is complete.
* The model makes no allowances for prototyping.
* It implies that you can get the requirements right by simply writing them down and reviewing them.
* The model implies that once the product is finished, everything else is maintenance.

9. Define Software. (Apr / May 2011)

Software means computer instructions or data. Anything that can be stored electronically is software, in contrast to storage devices and display devices which are called hardware.

10. What is Software Engineering? (Apr / May 2011)

Software Engineering is the study and application of engineering to the design, development, and maintenance of software.

11. What problems make elicitation difficult? (Apr / May 2011)

* Poor Education
* Poor Communication
* Transcription
* Documentation

12. How can a software process be characterized? (Nov/Dec 2012)

The capability maturity assessment is used to characterize the level of process models.

13. What are the drawbacks of prototype model? (Nov/Dec 2011)

* Leads to an implement-and-then-repair way of building systems.
* Practically, this methodology may increase the complexity of the system, as the scope of the system may expand beyond original plans.
* An incomplete application may cause the application not to be used as the full system was designed.
* Incomplete or inadequate problem analysis.

14. Mention the steps involved in Win-Win spiral model. (Nov/Dec 2011)

Collect the goals of customers from the business

Collect the goals for developers after developing software.

Collect the goals of the organization.

15. List the advantages of using Waterfall model instead of ad hoc build and fix model. (May/June 2009)

* Simple and easy to understand and use.
* Easy to manage due to the rigidity of the model: each phase has specific deliverables and a review process.
* Phases are processed and completed one at a time.
* Works well for smaller projects where requirements are very well understood.

16. How does the ‘project risk’ factor affect the Spiral model of software development? (May/June 2009)

Risk Analysis phase is the most significant part of "Spiral Model". In this phase all possible (and available) alternatives, which can help in developing a cost effective project are examined and strategies are decided to use them. This phase has been added specially in order to verify and resolve all the possible risks in the project development. If risks indicate any type of uncertainty in needs, prototyping may be used to proceed with the available data and search out possible solution in order to deal with the potential changes in the requirements.

PART – B

1. Explain in detail about Spiral Model of Software Process. (June 2009)

The spiral model is similar to the incremental model, with more emphasis placed on risk analysis. The spiral model has four phases: Planning, Risk Analysis, Engineering and Evaluation. A software project repeatedly passes through these phases in iterations (called Spirals in this model). In the baseline spiral, which starts in the planning phase, requirements are gathered and risk is assessed. Each subsequent spiral builds on the baseline spiral. Requirements are gathered during the planning phase. In the risk analysis phase, a process is undertaken to identify risk and alternate solutions. A prototype is produced at the end of the risk analysis phase.

Software is produced in the engineering phase, along with testing at the end of the phase. The evaluation phase allows the customer to evaluate the output of the project to date before the project continues to the next spiral.
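The repeated pass through the four phases can be illustrated with a minimal Python sketch. The class `SpiralProject`, its method `run_spiral` and the sample requirements are hypothetical names chosen for illustration only; the sketch shows just the iteration structure, not real planning or risk analysis.

```python
from dataclasses import dataclass, field

# The four phases named in the answer above.
PHASES = ("Planning", "Risk Analysis", "Engineering", "Evaluation")


@dataclass
class SpiralProject:
    """Hypothetical project that accumulates requirements spiral by spiral."""
    requirements: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def run_spiral(self, number, new_requirements):
        """Pass once through all four phases, building on earlier spirals."""
        # Planning: requirements gathered in this spiral are added to the baseline.
        self.requirements.extend(new_requirements)
        for phase in PHASES:
            self.log.append((number, phase))
        return len(self.requirements)


project = SpiralProject()
project.run_spiral(1, ["login"])            # baseline spiral
total = project.run_spiral(2, ["reports"])  # builds on the baseline
print(total)                                # 2
print(project.log[0], project.log[-1])      # (1, 'Planning') (2, 'Evaluation')
```

Each call to `run_spiral` stands for one loop of the spiral; the log records that every loop visits the phases in the same order.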

Diagram of Spiral model:

Advantages of Spiral model:

* High amount of risk analysis; hence, avoidance of risk is enhanced.
* Good for large and mission-critical projects.
* Strong approval and documentation control.
* Additional functionality can be added at a later date.
* Software is produced early in the software life cycle.

Disadvantages of Spiral model:

* Can be a costly model to use.
* Risk analysis requires highly specific expertise.
* Project's success is highly dependent on the risk analysis phase.
* Doesn't work well for smaller projects.

When to use Spiral model:

* When costs and risk evaluation are important
* For medium to high-risk projects
* Long-term project commitment is unwise because of potential changes to economic priorities
* Users are unsure of their needs
* Requirements are complex
* New product line
* Significant changes are expected (research and exploration)

2. Distinguish between Verification and Validation. (June 2009)

| Verification | Validation |
| --- | --- |
| Are we building the system right? | Are we building the right system? |
| Verification is the process of evaluating the products of a development phase to find out whether they meet the specified requirements. | Validation is the process of evaluating software at the end of the development process to determine whether it meets the customer expectations and requirements. |
| The objective of Verification is to make sure that the product being developed is as per the requirements and design specifications. | The objective of Validation is to make sure that the product actually meets the user's requirements, and to check whether the specifications were correct in the first place. |
| Activities involved in Verification: reviews, meetings and inspections. | Activities involved in Validation: testing, such as black box testing, white box testing, gray box testing, etc. |
| Verification is carried out by the QA team to check whether the implemented software is as per the specification document. | Validation is carried out by the testing team. |
| Execution of code does not come under Verification. | Execution of code comes under Validation. |
| The Verification process explains whether the outputs are according to the inputs or not. | The Validation process describes whether the software is accepted by the user or not. |
| Verification is carried out before Validation. | Validation is carried out just after Verification. |
| Items evaluated during Verification: plans, requirement specifications, design specifications, code, test cases, etc. | Item evaluated during Validation: the actual product or software under test. |
| The cost of errors caught in Verification is less than that of errors found in Validation. | The cost of errors caught in Validation is more than that of errors found in Verification. |
| It is basically manual checking of documents and files such as requirement specifications. | It is basically checking of the developed program against the requirement specification documents and files. |

3. Write note on following process models. (Nov/Dec 2011)

a. Waterfall Model

* Systems Engineering – Software as part of a larger system; determine requirements for all system elements; allocate requirements to software.
* Software Requirements Analysis – Develop understanding of problem domain, user needs, function, performance, interfaces, ...
* Software Design – Multi-step process to determine architecture, interfaces, data structures, functional detail. Produces a (high-level) form that can be checked for quality and conformance before coding.
* Coding – Produce machine-readable and executable form; match HW, OS and design needs.
* Testing

b. Incremental Model

c. Prototyping Model

d. Object Oriented Model

4. Explain about System Engineering Hierarchy in detail. (Nov/Dec 2011)

Software engineering occurs as a consequence of a process called system engineering. Instead of concentrating solely on software, system engineering focuses on a variety of elements, analyzing, designing, and organizing those elements into a system that can be a product, a service, or a technology for the transformation of information or control.

5. Which type of applications would suit RAD model? Justify your answer. (Apr / May 2011)

Rapid application development (RAD) is a software development methodology that uses minimal planning in favor of rapid prototyping. The "planning" of software developed using RAD is interleaved with writing the software itself. The lack of extensive pre-planning generally allows software to be written much faster, and makes it easier to change requirements. Graphical user interface builders are often called rapid application development tools.

RAD phases:

* Business modeling
* Data modeling
* Process modeling

6. A software project which is considered is very simple and the customer is in the position of giving all the requirements at the initial stage, Which Process model would you prefer for developing the project? Justify. (Nov/Dec 2009)

The Waterfall life cycle model is preferred here. Because the project is simple and the customer can state all the requirements at the initial stage, the requirements can be collected once and the remaining phases can be completed in sequence.

7. What is a Prototype? Why is a prototype considered a throwaway one? (Nov/Dec 2009)

Throwaway Prototyping Model is especially useful when the project needs are vaguely and poorly laid out. It functions by providing proof that something can indeed be done in terms of systems and strategies. Throwaway Prototyping Model is used for certain projects and will eventually be discarded after the project has been completed. It is also known as Close-Ended Prototyping. Throwaway Prototyping Model is implemented through the creation of prototypes and thereafter gathering feedback from end users to check if they find it good or not. This is valuable to get a better understanding of the actual needs of customers before a product or service is developed.

The Throwaway Prototyping Phases

There are 5 phases involved when using Throwaway Prototyping model.

* Identification of requirements and materials to be used: Although most requirements are unknown because the process works through the “discovery method”, the basics should be identified so that the project can begin.
* Planning: The ability of the project management team to plan well is necessary for Throwaway Prototyping model to work. The team is a huge factor for its success; with this model you can expect numerous surprises coming your way, so having the skills necessary to resolve problems is a must.
* Implementation, Prototyping and Verification: Once planning has been completed, implementation of the project will take place. Prototypes will be created and introduced to end users, who will utilize them for testing and evaluation purposes. At this time, they will provide feedback, clarify needs and relay requirements.
* Prototype Enhancements and Revisions: As per the requirements of end users derived through feedback and testing, the prototypes will be continuously altered until they have reached near-perfection.
* Finalization: Once everything has been set and issues have been properly addressed, the prototype will be “thrown away” and a product or service will be developed, taking into consideration the feedback derived during the verification process.

Advantages of Throwaway Prototyping Model

There are many reasons why project teams use Throwaway Prototyping model. For one thing, it is very cost-effective. Since Throwaway Prototyping model uses a series of prototypes to detect and forecast possible problems, it can prevent these from taking place as soon as the product or service is introduced to the market. Problems are usually a very costly occurrence, and if you can keep them from happening, expenses can be reduced.

Next, project completion is quick. Since it allows early detection of issues, the transition from one step to the next will be smoother and faster.

Lastly, when you use Throwaway Prototyping model, you can be assured that the end result is something that will certainly work for you and your customers because it has been thoroughly tested through the use of prototypes. The end product is expected to be able to meet the wants and needs of the target market.

8. Explain the evolutionary process models in detail. (9 marks - Nov/Dec 2012)

The Evolutionary Prototyping Model Phases

This model includes four phases:

* The identification of the basic requirements. Though we may not be able to know all the requirements since it is a continuous process, we should be able to identify the basic things needed for this project to work.
* Creating the prototype. There will be several prototypes to be made in this project, with each one better than the one before it to ensure its success.
* Verification of prototype. This will be done through surveys and experimentation using participants taken from the target market. The customers are the best people to get feedback from to find out whether the system is good or not.
* Changes for the prototypes. When the prototype is found to be insufficient or unsatisfactory, the project team will make further iterations until it satisfies the feedback from customers.

The advantages of Evolutionary Prototyping Model

9. Write brief notes on computer based systems and system engineering hierarchy. (Nov/Dec 2012)

Computer Based System

A computer-based system is a set or arrangement of elements that are organized to accomplish some predefined goal by processing information. The goal may be to support some business function or to develop a product that can be sold to generate business revenue. To accomplish the goal, a computer-based system makes use of a variety of system elements:

* Software. Computer programs, data structures, and related documentation that serve to effect the logical method, procedure, or control that is required.
* Hardware. Electronic devices that provide computing capability, the interconnectivity devices (e.g., network switches, telecommunications devices) that enable the flow of data, and electromechanical devices (e.g., sensors, motors, pumps) that provide external world function.
* People. Users and operators of hardware and software.
* Database. A large, organized collection of information that is accessed via software.
* Documentation. Descriptive information (e.g., hardcopy manuals, on-line help files, Web sites) that portrays the use and/or operation of the system.
* Procedures. The steps that define the specific use of each system element or the procedural context in which the system resides.

The elements combine in a variety of ways to transform information. For example, a marketing department transforms raw sales data into a profile of the typical purchaser of a product; a robot transforms a command file containing specific instructions into a set of control signals that cause some specific physical action. Creating an information system to assist the marketing department and control software to support the robot both require system engineering.

10. Explain the Incremental Process models in detail. (6 marks -Nov/Dec 2012)

In incremental model the whole requirement is divided into various builds. Multiple development cycles take place here, making the life cycle a “multi-waterfall” cycle. Cycles are divided up into smaller, more easily managed modules. Each module passes through the requirements, design, implementation and testing phases. A working version of software is produced during the first module, so you have working software early on during the software life cycle. Each subsequent release of the module adds function to the previous release. The process continues till the complete system is achieved.
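The “multi-waterfall” idea can be sketched in a few lines of Python. The function names `deliver_increment` and `build_incrementally` and the feature names are hypothetical, chosen only to illustrate that each build passes through every phase and that each release extends the previous one.

```python
# Phases each build passes through, as listed in the answer above.
PHASES = ["requirements", "design", "implementation", "testing"]


def deliver_increment(release, features):
    """Run one mini-waterfall and return the previous release plus new features."""
    for phase in PHASES:
        # A real project would do the work of each phase here; this sketch only
        # records that every build visits every phase.
        pass
    return release + features


def build_incrementally(builds):
    """Deliver each build in turn; every release adds function to the last one."""
    release = []
    history = []
    for features in builds:
        release = deliver_increment(release, features)
        history.append(list(release))
    return history


history = build_incrementally([["login"], ["search"], ["reports"]])
print(history[0])    # ['login']
print(history[-1])   # ['login', 'search', 'reports']
```

The first entry of `history` corresponds to the working software produced early in the life cycle; the last entry corresponds to the complete system.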

Advantages of Incremental model:

* Generates working software quickly and early during the software life cycle.
* More flexible: less costly to change scope and requirements.
* Easier to test and debug during a smaller iteration.
* Customer can respond to each build.
* Lowers initial delivery cost.
* Easier to manage risk because risky pieces are identified and handled during their own iteration.

Disadvantages of Incremental model:

* Needs good planning and design.
* Needs a clear and complete definition of the whole system before it can be broken down and built incrementally.
* Total cost is higher than waterfall.

11. Compare and contrast between System engineering and Software engineering. (10 marks - Nov/Dec 2012)

What is the difference between Software Engineering and Systems Engineering?

The difference between System Engineering and Software Engineering is not very clear. However, it can be said that System Engineers focus more on users and domains, while Software Engineers focus more on implementing quality software. A System Engineer may deal with a substantial amount of hardware engineering, but typically Software Engineers will focus solely on software components. System Engineers may have a broader education (including Engineering, Mathematics and Computer Science), while Software Engineers will come from a Computer Science or Computer Engineering background.

12. Discuss about Component Based Model (Nov/Dec 2010)

Component-based software engineering (CBSE) (also known as component-based development (CBD)) is a branch of software engineering that emphasizes the separation of concerns in respect of the wide-ranging functionality available throughout a given software system. It is a reuse-based approach to defining, implementing and composing loosely coupled independent components into systems. This practice aims to bring about an equally wide-ranging degree of benefits in both the short-term and the long-term for the software itself and for organizations that sponsor such software.
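A minimal Python sketch of loosely coupled components is given below. The names `Storage`, `InMemoryStorage` and `NoteService` are hypothetical; the point is only that one component depends on an interface rather than on a concrete implementation, so components can be composed and replaced independently.

```python
from typing import Protocol


class Storage(Protocol):
    """Interface that any storage component is assumed to provide."""

    def save(self, key: str, value: str) -> None: ...

    def load(self, key: str) -> str: ...


class InMemoryStorage:
    """One interchangeable storage component (keeps data in a dictionary)."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def save(self, key: str, value: str) -> None:
        self._data[key] = value

    def load(self, key: str) -> str:
        return self._data[key]


class NoteService:
    """A component that relies only on the Storage interface."""

    def __init__(self, storage: Storage) -> None:
        self._storage = storage

    def add_note(self, title: str, text: str) -> None:
        self._storage.save(title, text)

    def read_note(self, title: str) -> str:
        return self._storage.load(title)


service = NoteService(InMemoryStorage())
service.add_note("unit-1", "process models")
print(service.read_note("unit-1"))   # process models
```

Replacing `InMemoryStorage` with another class that offers the same two methods would not require any change to `NoteService`, which is the reuse benefit the answer describes.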

13. What is a process model? Describe the process model that you would choose to manufacture a car? Explain the same. (Nov/Dec 2010)

A software development process, also known as a software development life-cycle (SDLC), is a structure imposed on the development of a software product. Similar terms include software life cycle and software process. It is often considered a subset of systems development life cycle. There are several models for such processes, each describing approaches to a variety of tasks or activities that take place during the process. Some people consider a life-cycle model a more general term and a software development process a more specific term. For example, there are many specific software development processes that 'fit' the spiral life-cycle model. ISO/IEC 12207 is an international standard for software life-cycle processes. It aims to be the standard that defines all the tasks required for developing and maintaining software.

UNIT-II

PART – A

1. What are the two kinds of requirements document? Explain it. (Nov/Dec 11)

* Functional Requirements – represent software functionalities

* Non-Functional Requirements – quality factors required in software

2. Define Requirements Elicitation. (Nov/Dec 11)

Collecting requirements from customer is known as ‘Requirements Elicitation’.

3. Distinguish Functional and Non-Functional Requirements. (June 2009)

* Functional Requirements – represent software functionalities

* Non-Functional Requirements – quality factors required in software

4. How do we create Behavioral Model? (June 2009)

Using Sequence Diagram or State Machine Diagram the behavior of the system can be designed.
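As an illustration of a state machine view of behavior, the following Python sketch encodes a small transition table. The states, events and the card-based scenario are assumptions made up for this example; they do not come from the question bank.

```python
# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    ("idle", "insert_card"): "awaiting_pin",
    ("awaiting_pin", "pin_ok"): "ready",
    ("awaiting_pin", "pin_bad"): "idle",
    ("ready", "eject_card"): "idle",
}


def next_state(state, event):
    """Return the state reached from `state` on `event`, or raise if not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state!r}") from None


def run(events, state="idle"):
    """Feed a sequence of events to the machine and return the final state."""
    for event in events:
        state = next_state(state, event)
    return state


print(run(["insert_card", "pin_ok"]))    # ready
print(run(["insert_card", "pin_bad"]))   # idle
```

Each entry in `TRANSITIONS` corresponds to one arrow of a state machine diagram, so the table and the diagram carry the same behavioral information.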

5. What is Requirements Management?

Requirement Elicitation, Analysis, Feasibility Studies and Negotiation are the activities in Requirements Management.

6. What are the primary objectives of Analysis Model? (Apr / May 2011)

Comparing Two objects

Analyzing System Functionalities

7. What are the artifacts used to manage requirements? (Nov/Dec 2012)

Requirement Document, Design Document, Project Plan, Testing Plan Document.

8. What is partitioning? (Nov/Dec 2009)

Decomposing the system into subsystems to reduce the complexity of the problem.

9. List the areas of Software requirement analysis. (Nov/Dec 2011)

* Feasibility Study
* Requirements Verification and Validation

10. What is the purpose of Domain Analysis? (Apr/May 2011)

To identify and collect details of systems in the same domain, so that they can be used to build the project plan for the new system.

11. Mention the process activities of Requirement Elicitation & Analysis. (Apr/May 2011)

Requirements Verification and Requirements Management are two process activities.

12. What are the linkages between data flow and ER diagrams? (May/June 2009)

A data flow diagram shows the functions in the software system that handle the data.

An ER diagram shows the data entities in the proposed data model.

13. List out the Requirement engineering tasks (May/june 2009)

* Requirement Elicitation
* Requirement Analysis
* Feasibility Study
* Requirements Documentation

14. Define Slack Time.

The amount of time that a non-critical path activity can be delayed without delaying the project is referred to as slack time.
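A small worked example may help. The sketch below computes slack as latest start minus earliest start, using a forward and a backward pass over an activity network. The activities `A` to `D`, their durations and the helper name `slack_times` are hypothetical, and the dictionary is assumed to list every activity after its predecessors.

```python
# Hypothetical activity network: name -> (duration, list of predecessors).
# Activities are assumed to be listed after all of their predecessors.
ACTIVITIES = {
    "A": (3, []),
    "B": (2, ["A"]),
    "C": (4, ["A"]),
    "D": (1, ["B", "C"]),
}


def slack_times(activities):
    """Return the slack (latest start - earliest start) of every activity."""
    # Forward pass: earliest start and earliest finish.
    earliest_start = {}
    earliest_finish = {}
    for name, (duration, preds) in activities.items():
        earliest_start[name] = max((earliest_finish[p] for p in preds), default=0)
        earliest_finish[name] = earliest_start[name] + duration
    project_end = max(earliest_finish.values())

    # Backward pass: latest start, visiting successors before predecessors.
    latest_start = {}
    for name in reversed(list(activities)):
        duration, _ = activities[name]
        successors = [s for s, (_, preds) in activities.items() if name in preds]
        latest_finish = min((latest_start[s] for s in successors), default=project_end)
        latest_start[name] = latest_finish - duration

    return {name: latest_start[name] - earliest_start[name] for name in activities}


print(slack_times(ACTIVITIES))   # {'A': 0, 'B': 2, 'C': 0, 'D': 0}
```

Here the critical path is A, C, D (total 8 time units), so those activities have zero slack, while B can be delayed by 2 units without delaying the project.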

PART – B

1. What are the various methods for gathering software requirements? Explain. (June 2009)

* One-on-one interviews: The most common technique for gathering requirements is to sit down with the clients and ask them what they need. The discussion should be planned out ahead of time based on the type of requirements you're looking for. There are many good ways to plan the interview, but generally you want to ask open-ended questions to get the interviewee to start talking and then ask probing questions to uncover requirements.
* Group interviews: Group interviews are similar to the one-on-one interview, except that more than one person is being interviewed, usually two to four. These interviews work well when everyone is at the same level or has the same role. Group interviews require more preparation and more formality to get the information you want from all the participants. You can uncover a richer set of requirements in a shorter period of time if you can keep the group focused.
* Facilitated sessions: In a facilitated session, you bring a larger group (five or more) together for a common purpose. In this case, you are trying to gather a set of common requirements from the group in a faster manner than if you were to interview each of them separately.
* Joint application development (JAD): JAD sessions are similar to general facilitated sessions. However, the group typically stays in the session until the session objectives are completed. For a requirements JAD session, the participants stay in session until a complete set of requirements is documented and agreed to.
* Questionnaires: Questionnaires are much more informal, and they are good tools to gather requirements from stakeholders in remote locations or those who will have only minor input into the overall requirements. Questionnaires can also be used when you have to gather input from dozens, hundreds, or thousands of people.
* Prototyping: Prototyping is a relatively modern technique for gathering requirements. In this approach, you gather preliminary requirements that you use to build an initial version of the solution, a prototype. You show this to the client, who then gives you additional requirements. You change the application and cycle around with the client again. This repetitive process continues until the product meets the critical mass of business needs or for an agreed number of iterations.
* Use cases: Use cases are basically stories that describe how discrete processes work. The stories include people (actors) and describe how the solution works from a user perspective. Use cases may be easier for the users to articulate, although the use cases may need to be distilled later into the more specific detailed requirements.

2. Explain in detail about elements in Data Modeling. (June 2009)

* Flat model: This may not strictly qualify as a data model. The flat (or table) model consists of a single, two-dimensional array of data elements, where all members of a given column are assumed to be similar values, and all members of a row are assumed to be related to one another.
* Hierarchical model: In this model data is organized into a tree-like structure, implying a single upward link in each record to describe the nesting, and a sort field to keep the records in a particular order in each same-level list.
* Network model: This model organizes data using two fundamental constructs, called records and sets. Records contain fields, and sets define one-to-many relationships between records: one owner, many members.
* Relational model: A database model based on first-order predicate logic. Its core idea is to describe a database as a collection of predicates over a finite set of predicate variables, describing constraints on the possible values and combinations of values.
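The four models can be contrasted with a small Python sketch that stores the same facts in each style. All names and values (`Asha`, `Ravi`, `CSE`, `Dr. Kumar`, and so on) are made-up sample data, and plain Python containers stand in for a real database.

```python
# Flat model: a single two-dimensional table; each row relates its members.
flat = [
    ("Asha", "CSE"),
    ("Ravi", "ECE"),
]

# Hierarchical model: a tree in which every record has a single parent.
hierarchy = {"College": {"CSE": {"Asha": {}}, "ECE": {"Ravi": {}}}}

# Network model: a set links one owner record to many member records.
advises = {"Dr. Kumar": ["Asha", "Ravi"]}

# Relational model: relations are sets of tuples combined through shared values.
students = {("Asha", "CSE"), ("Ravi", "ECE")}
departments = {("CSE", "Block A"), ("ECE", "Block B")}


def path_to(tree, target, trail=()):
    """Follow the single upward link of the hierarchy: root-to-target path."""
    for name, children in tree.items():
        here = trail + (name,)
        if name == target:
            return here
        found = path_to(children, target, here)
        if found:
            return found
    return None


def join(left, right):
    """Combine student and department tuples that share a department name."""
    return {(s, d, b) for (s, d) in left for (d2, b) in right if d == d2}


print(path_to(hierarchy, "Asha"))           # ('College', 'CSE', 'Asha')
print(sorted(join(students, departments)))
# [('Asha', 'CSE', 'Block A'), ('Ravi', 'ECE', 'Block B')]
```

The hierarchy can only be navigated along its tree links, whereas the relational join combines tuples purely by matching values, which is the main practical difference between the two.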

3. Write a note on Functional and Non-Functional Requirements. (Nov/Dec 2011)

Non Functional Requirements:

4. Explain in detail about Prototyping in the Software Process. (Nov/Dec 2011)

5. State and explain the tasks in Requirements engineering in detail.

6. Discuss Data Dictionary in detail. Give suitable examples. (Nov/Dec 2009)

7. Explain with examples, the rapid prototyping techniques in detail. (Nov/Dec 2009)

8. Explain IEEE standards for software requirements document. (Nov/Dec 2012)

9. Discuss in detail the static and dynamic process modeling techniques. (Nov/Dec 2012)

10. Draw and explain the data flow diagram for physician visit. Neatly state your assumption. (Nov/Dec 2012)

A function model or functional model in systems engineering and software engineering is a structured representation of the functions (activities, actions, processes, operations) within the modeled system or subject area.

UNIT-III

PART – A

1. Write about Architectural Design. (Nov/Dec 11)

* Architectural Design: the process of defining a collection of hardware and software components and their interfaces to establish the framework for the development of a computer system.
* Detailed Design: the process of refining and expanding the preliminary design of a system or component to the extent that the design is sufficiently complete to begin implementation.
* Functional Design: the process of defining the working relationships among the components of a system.
* Preliminary Design: the process of analyzing design alternatives and defining the architecture, components, interfaces, and timing/sizing estimates for a system or components.

2. What is meant by Software Configuration Item? (Nov/Dec 11)

The term configuration item can be applied to anything designated for the application of the elements of configuration management and treated as a single entity in the configuration management system. The entity must be uniquely identified so that it can be distinguished from all other configuration items.

3. What are the different levels of Software Design? (Nov/Dec 11)

Software design is the process of implementing software solutions to one or more sets of problems. One of the important parts of software design is the software requirements analysis (SRA). It is a part of the software development process that lists specifications used in software engineering.

4. What are the characteristics of good design? (June 2009)

* Good design makes a product useful. A product is bought to be used. Good design emphasizes the usefulness of a product while disregarding anything that could possibly detract from its usefulness.
* Good design is innovative.
* Good design makes a product understandable.
* Good design is aesthetic.

5. Why do we go for Modular Design? (June 2009)

Changes made during testing and maintenance become manageable, and they do not affect other modules.

6. Justify why highly cohesive modules are preferred. (June 2009)

A cohesive module performs only “one task” in a software procedure, with little interaction with other modules. In other words, a cohesive module does only one thing.

7. Define Software Architecture. (Apr / May 2011)

Software architecture refers to the high-level structures of a software system. The term can be defined as the set of structures needed to reason about the software system, which comprises the software elements, the relations between them, and the properties of both elements and relations.

8. Define Interface Design. (Nov/Dec 2009)

The interface design describes how the software communicates within itself, with systems that interoperate with it, and with humans who use it.

9. Define Data Design. (Nov/Dec 2009)

The data design transforms the information domain model created during analysis into the data structures that will be required to implement the software.

10. Give some design heuristics for effective modularity. (Nov/Dec 2012)

* Evaluate the "first iteration" of the program structure to reduce coupling and improve cohesion.
* The scope of effect of a module is defined as all other modules that are affected by a decision made in that module.
* Evaluate module interfaces to reduce complexity and redundancy and improve consistency. Module interface complexity is a prime cause of software errors.
* Define modules whose function is predictable, but avoid modules that are overly restrictive. A module is predictable when it can be treated as a black box; that is, the same external data will be produced regardless of internal processing details.

11. Define coupling. List any two types of coupling. (Nov/Dec 2012) Coupling is the measure of interconnection among modules in a program structure. It depends on the interface complexity between modules. The degree of coupling may be high or low; types of coupling include data coupling, stamp coupling, control coupling, common coupling and content coupling.

12. List the characteristics of a good conceptual design. (Nov/Dec 2012)
• Software design should correspond to the analysis model.
• Choose the right programming paradigm.
• Software design should be uniform and integrated.
• Software design should be flexible.
• Software design should ensure minimal conceptual (semantic) errors.
• Software reuse.

13. Define cardinality and modality. (Nov/Dec 2010) Cardinality: The data model must be capable of representing the number of occurrences of objects in a given relationship. Modality: The modality of a relationship is 0 if there is no explicit need for the relationship to occur or the relationship is optional. The modality is 1 if an occurrence of the relationship is mandatory.

14. List the concepts of good design. (Nov/Dec 2010)
• The design process should not suffer from "tunnel vision." A good designer should consider alternative approaches, judging each based on the requirements of the problem and the resources available to do the job.
• The design should be traceable to the analysis model. Because a single element of the design model often traces to multiple requirements, it is necessary to have a means for tracking how requirements have been satisfied by the design model.

15. What is fan-in and fan-out? (Apr/May 2011) The fan-out of a module is the number of its immediately subordinate modules. As a rule of thumb, the optimum fan-out is seven, plus or minus two. This rule of thumb is based on the psychological study conducted by George Miller, in which he determined that the human mind has difficulty dealing with more than seven things at once. The fan-in of a module is the number of its immediately superordinate (i.e., parent or boss) modules. The designer should strive for high fan-in at the lower levels of the hierarchy. This simply means that common low-level functions should be identified and made into common modules to reduce redundant code and increase maintainability. High fan-in can also increase portability if, for example, all I/O handling is done in common modules.

16. Distinguish between cohesion and coupling. (May / June 2013) Cohesion is generally considered a good thing: creating a software component that combines related functionality into a single unit. The goal, which is one of the core principles of object-oriented programming, is to create a component that encapsulates implementation details and presents a simple external interface.

Coupling (or more specifically, unnecessary coupling) is generally considered a bad thing: having software components be dependent upon specific details of other components. Keeping external interfaces simple and general will help reduce coupling to a minimum.

17. What is modularity? (May/june 2009) Modularity is the degree to which a system's components may be separated and recombined. In software, it is the degree to which software can be understood by examining its components independently of one another.

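18. If a module has logical cohesion, what kind of coupling is this module likely to have with others? (May/june 2009) A module has logical cohesion when it performs a set of logically related tasks, one of which is selected by the calling module. Because the caller must pass a flag or control value to make that selection, such a module is likely to have control coupling with other modules. By contrast, a module has coincidental cohesion if it performs multiple, unrelated actions, that is, unrelated functions clumped together for "convenience."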

PART – B 1. Discuss in detail about Design Concepts. (June 2009)

Fundamental Software Design Concepts:
• Abstraction: allows designers to focus on solving a problem without being concerned about irrelevant lower-level details (procedural abstraction: named sequence of events; data abstraction: named collection of data objects).
• Refinement: process of elaboration where the designer provides successively more detail for each design component.
• Modularity: the degree to which software can be understood by examining its components independently of one another.
• Software architecture: overall structure of the software components and the ways in which that structure provides conceptual integrity for a system.
• Control hierarchy or program structure: represents the module organization and implies a control hierarchy, but does not represent the procedural aspects of the software (e.g. event sequences).
• Structural partitioning: horizontal partitioning defines three partitions (input, data transformations, and output); vertical partitioning (factoring) distributes control in a top-down manner (control decisions in top-level modules and processing work in the lower-level modules).

Abstraction
Abstraction is the theory that allows one to deal with concepts apart from the particular instances of those concepts.

Abstraction reduces complexity to a large extent during design. Abstraction is an important tool in software engineering in many respects. There are three types of abstraction used in software design: a) Functional abstraction b) Data abstraction c) Control abstraction

Functional abstraction: -This involves the use of parameterized routines. -The number and type of parameters to a routine can be made dynamic, and this ability to supply the appropriate parameters at each invocation of the sub-program is functional abstraction.

Data Abstraction: This involves specifying or creating a new data type or a data object by specifying the valid operations on the object. -Other details, such as the representation and manipulation of the data, are not specified. -Data abstraction is an important feature of OOP. -Many languages, such as Ada and C++, provide abstract data types. -Example: Implementation of stacks, queues.
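The stack example mentioned above can be sketched as follows. This is only an illustration in Python: the class name Stack and its method names are assumptions chosen for the example, not taken from any particular textbook. Callers see only the valid operations, while the list used for storage stays an internal detail.

```python
class Stack:
    """A stack exposed only through its valid operations (hypothetical example)."""

    def __init__(self):
        self._items = []  # internal representation, hidden from callers

    def push(self, item):
        self._items.append(item)

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def peek(self):
        if not self._items:
            raise IndexError("peek at empty stack")
        return self._items[-1]

    def is_empty(self):
        return not self._items


s = Stack()
s.push(1)
s.push(2)
top = s.pop()  # last in, first out
```

Because callers never touch the underlying list, the representation could later be changed (for instance to a linked list) without affecting any code that uses the stack.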

Control Abstraction:

-Control abstraction is used to specify the effect of a statement or a function without

MODULARITY Modularity derives from the architecture. Modularity is a logical partitioning of the software design that allows complex software to be manageable for purpose of implementation and maintenance. The logic of partitioning may be based on related functions, implementations considerations, data links, or other criteria. Modularity does imply interface overhead related to information exchange between modules and execution of modules.

Modularity: the degree to which software can be understood by examining its components independently of one another.

COHESION: Cohesion is the interaction within a single object or software component. It reflects the single-purposeness of an object. A coincidentally cohesive module performs a set of tasks that relate to each other loosely, if at all. A logically cohesive module performs tasks that are logically related to each other. Method cohesion, like function cohesion, means that a method should carry out only one function. Inheritance cohesion is concerned with the interrelation of classes and the specialization of classes with attributes.

2. Explain Data design in detail. (Nov/Dec 2009)

DATA DESIGN

DATA FLOW DIAGRAMS (DFD)

DFDs are directed graphs in which the nodes specify processing activities and the arcs specify data items transmitted between processing nodes. A data flow diagram (DFD) serves two purposes:
• To provide an indication of how data are transformed as they move through the system.
• To depict the functions that transform the data flow (a function is represented in a DFD using a process specification or PSPEC).

A DFD shows the relationship of external entities, processes or transforms, data items and data stores. DFDs cannot show procedural detail (e.g. conditionals or loops), only the flow of data through the software. Refinement from one DFD level to the next should follow approximately a 1:5 ratio (this ratio will reduce as the refinement proceeds). To model real-time systems, structured analysis notation must be available for time-continuous data and event processing (e.g. Ward and Mellor or Hatley and Pirbhai).

Creating Data Flow Diagram:
• The level 0 data flow diagram should depict the system as a single bubble.
• Primary input and output should be carefully noted.
• Refinement should begin by consolidating candidate processes, data objects, and stores to be represented at the next level.
• Label all arrows with meaningful names.
• Information flow must be maintained from level to level.
• Refine one bubble at a time.
• Write a PSPEC (a "mini-spec" written using English or another natural language or a program design language) for each bubble in the final DFD.

3. Functional Modeling and Information Flow (DFD):

Creating Data Flow Diagram:
• The level 0 data flow diagram should depict the system as a single bubble.
• Primary input and output should be carefully noted.
• Refinement should begin by consolidating candidate processes, data objects, and stores to be represented at the next level.
• Label all arrows with meaningful names.
• Information flow must be maintained from level to level.
• Refine one bubble at a time.
• Write a PSPEC (a "mini-spec" written using English or another natural language or a program design language) for each bubble in the final DFD.

4. How do we assess the quality of software design? (4 marks-Nov/Dec 2012)

Software Design process and concepts:

A software design is a meaningful engineering representation of some software product that is to be built. A design can be traced to the customer's requirements and can be assessed for quality against predefined criteria. During the design process the software requirements model is transformed into design models that describe the details of the data structures, system architecture, interface, and components. Each design product is reviewed for quality before moving to the next phase of software development.

Design Specification Models:
• Data design: created by transforming the analysis information model (data dictionary and ERD) into the data structures required to implement the software.
• Architectural design: defines the relationships among the major structural elements of the software; it is derived from the system specification, the analysis model, and the subsystem interactions defined in the analysis model (DFD).
• Interface design: describes how the software elements communicate with each other, with other systems, and with human users; the data flow and control flow diagrams provide much of the necessary information.
• Component-level design: created by transforming the structural elements defined by the software architecture into procedural descriptions of software components, using information obtained from the PSPEC, CSPEC, and STD.

Design Guidelines: A design should:
• Exhibit good architectural structure.
• Be modular.
• Contain distinct representations of data, architecture, interfaces, and components (modules).
• Lead to data structures that are appropriate for the objects to be implemented.
• Lead to components that exhibit independent functional characteristics.
• Lead to interfaces that reduce the complexity of connections between modules and with the external environment.
• Be derived using a repeatable method that is driven by information obtained during software requirements analysis.

Design Principles: The design:
• Should not suffer from tunnel vision.
• Should be traceable to the analysis model.
• Should not reinvent the wheel.
• Should minimize the intellectual distance between the software and the problem as it exists in the real world.
• Should exhibit uniformity and integration.
• Should be structured to accommodate change.
• Should be structured to degrade gently, even when bad data, events, or operating conditions are encountered.
• Should be assessed for quality as it is being created.
• Should be reviewed to minimize conceptual (semantic) errors.

Fundamental Software Design Concepts:

• Abstraction: allows designers to focus on solving a problem without being concerned about irrelevant lower-level details (procedural abstraction: named sequence of events; data abstraction: named collection of data objects).
• Refinement: process of elaboration where the designer provides successively more detail for each design component.
• Modularity: the degree to which software can be understood by examining its components independently of one another.
• Software architecture: overall structure of the software components and the ways in which that structure provides conceptual integrity for a system.
• Control hierarchy or program structure: represents the module organization and implies a control hierarchy, but does not represent the procedural aspects of the software (e.g. event sequences).
• Structural partitioning: horizontal partitioning defines three partitions (input, data transformations, and output); vertical partitioning (factoring) distributes control in a top-down manner (control decisions in top-level modules and processing work in the lower-level modules).
• Data structure: representation of the logical relationship among individual data elements (requires at least as much attention as algorithm design).
• Software procedure: precise specification of processing (event sequences, decision points, repetitive operations, data organization/structure).

5. Discuss the important issues to be addressed in designing “User Interfaces”. (May / June 2013)

THE USER INTERFACE
• System users often judge a system by its interface rather than its functionality.
• A poorly designed interface can cause a user to make catastrophic errors.
• Poor user interface design is the reason why so many software systems are never used.

Graphical user interfaces: Most users of business systems interact with these systems through graphical interfaces although, in some cases, legacy text-based interfaces are still used.

GUI advantages:
• They are easy to learn and use. Users without experience can learn to use the system quickly.
• The user may switch quickly from one task to another and can interact with several different applications. Information remains visible in its own window when attention is switched.
• Fast, full-screen interaction is possible with immediate access to anywhere on the screen.

User-centred design: Software engineers need to be sensitive to the key issues underlying the design, rather than the implementation, of user interfaces. User-centred design is an approach to UI design where the needs of the user are paramount and where the user is involved in the design process.

6. Explain coupling and cohesion with examples(16)(May/june 2009)

COHESION:

Cohesion is the interaction within a single object or software component. It reflects the single-purposeness of an object. A coincidentally cohesive module performs a set of tasks that relate to each other loosely, if at all. A logically cohesive module performs tasks that are logically related to each other. Method cohesion, like function cohesion, means that a method should carry out only one function. Inheritance cohesion is concerned with the interrelation of classes and the specialization of classes with attributes.

Coupling: Coupling is a measure of interconnection among modules in a software structure. It depends on the interface complexity between modules, the point at which entry or reference is made to a module, and what data pass across the interface. It is a measure of the strength of association established by a connection from one object or s/w component to another. It is a binary relationship: A is coupled with B.

Types of coupling:

Common Coupling: This is a high degree of coupling. It occurs when a number of modules (objects) reference a global data area. The objects access the global data space to both read and write attributes.

Content Coupling: This is the highest degree of coupling. It occurs when one object or module makes use of data or control information maintained within the boundary of another object or module. It refers to attributes or methods of another object.

Control Coupling: It is characterized by the passage of control between modules or objects. It is very common in most software designs. It involves explicit control of the processing logic of one object by another.

Stamp Coupling: The connection involves passing an aggregate data structure to another object, which uses only a portion of the components of the data structure. It is found when a portion of a data structure is passed via a module or object interface.

Data Coupling: It is a low degree of coupling. The connection involves either simple data items or aggregate structures all of whose elements are used by the receiving object. This should be the goal of an architectural design. It is exhibited in the portion of structure.
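Since the question asks for examples, the coupling types above can be illustrated with a small sketch. All function names, the TAX_RATE value and the employee record are hypothetical and chosen only for this example; each function computes or formats the same kind of pay figure but is connected to its caller in a different way.

```python
# Common coupling: the function depends on a shared global data area.
TAX_RATE = 0.25

def net_pay_common(gross):
    return gross * (1 - TAX_RATE)

# Control coupling: the caller passes a flag that steers the callee's logic.
def format_amount_control(amount, mode):
    if mode == "rounded":
        return str(round(amount))
    return f"{amount:.2f}"

# Stamp coupling: a whole record is passed, but only one field is used.
def net_pay_stamp(employee):
    return employee["gross"] * 0.75

# Data coupling: only the simple data items that are needed cross the interface.
def net_pay_data(gross, tax_rate):
    return gross * (1 - tax_rate)
```

The data-coupled version is the easiest to reuse and test in isolation, because everything it depends on appears in its parameter list. The control-coupled function is also an instance of logical cohesion: it bundles related formatting tasks and relies on the caller to choose between them.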

UNIT-IV PART – A 1. Why testing is an important activity? (June 2009)

A good testing program is a tool for both the agency and the integrator/supplier; it typically identifies the end of the “development” phase of the project, establishes the criteria for project acceptance, and establishes the start of the warranty period

2. What is regression testing? (June 2009)

Regression testing is a type of software testing that seeks to uncover new software bugs, or regressions, in existing functional and non-functional areas of a system after changes such as enhancements, patches or configuration changes, have been made to them.

3. Differentiate between Black Box Testing and White Box Testing. (June 2009)

Criteria: Definition
Black Box Testing: A software testing method in which the internal structure/design/implementation of the item being tested is NOT known to the tester.
White Box Testing: A software testing method in which the internal structure/design/implementation of the item being tested is known to the tester.

Criteria: Levels Applicable To
Black Box Testing: Mainly applicable to higher levels of testing: Acceptance Testing, System Testing.
White Box Testing: Mainly applicable to lower levels of testing: Unit Testing, Integration Testing.

Criteria: Responsibility
Black Box Testing: Generally, independent Software Testers.
White Box Testing: Generally, Software Developers.

4. What is Unit Testing? (Apr / May 2011)

Unit testing is a software testing method by which individual units of source code, sets of one or more computer program modules together with associated control data, usage procedures, and operating procedures are tested to determine if they are fit for use.

5. What is Behavioral testing? (Apr / May 2011)

Behavioral testing is another name for black box testing. It focuses on the functional requirements of the software: test cases are derived from the specified inputs and expected outputs, without reference to the internal structure of the code.

6. What is Boundary Value Analysis? (Apr / May 2011)

Boundary value analysis is a software testing technique in which tests are designed to include representatives of boundary values. Because errors tend to occur at the edges of an input domain rather than in its centre, test cases are selected at, just below and just above each boundary of an equivalence class.
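A minimal sketch of the idea, assuming an integer input restricted to a range: tests are placed at and just beyond each edge of the range. The function accepts_age and the limits 18 and 60 are hypothetical, introduced only for illustration.

```python
def boundary_values(low, high):
    """Return test inputs at and just beyond the edges of the integer range [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def accepts_age(age):
    # Hypothetical function under test: valid ages are 18..60 inclusive.
    return 18 <= age <= 60


# Map each boundary input to the observed result.
results = {value: accepts_age(value) for value in boundary_values(18, 60)}
```

An off-by-one mistake in accepts_age (for example writing < instead of <=) would be exposed by the inputs 18 or 60, which a test chosen from the middle of the range would miss.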

7. What are the major components of Test plan? (Nov/Dec 2012)

Design Verification or Compliance test - to be performed during the development or approval stages of the product, typically on a small sample of units.

Manufacturing or Production test - to be performed during preparation or assembly of the product in an ongoing manner for purposes of performance verification and quality control.

Acceptance or Commissioning test - to be performed at the time of delivery or installation of the product.

8. Brief the benefits of White-Box testing. (Nov/Dec 2012)

White Box Testing (also known as Clear Box Testing, Open Box Testing, Glass Box Testing, Transparent Box Testing, Code-Based Testing or Structural Testing) is a software testing method in which the internal structure/design/implementation of the item being tested is known to the tester. The tester chooses inputs to exercise paths through the code and determines the appropriate outputs. Programming know-how and the implementation knowledge is essential. White box testing is testing beyond the user interface and into the nitty-gritty of a system.

Benefits:

Testing can be commenced at an earlier stage. One need not wait for the GUI to be available.

Testing is more thorough, with the possibility of covering most paths.

9. What is the difference between errors and faults? (Nov/Dec 2012)

Error: “A difference…between a computed result and the correct result”

Fault: “An incorrect step, process, or data definition in a computer program”

10. What do you mean by fault identification? (Nov/Dec 2012)

Fault detection and isolation is a subfield of control engineering which concerns itself with monitoring a system, identifying when a fault has occurred, and pinpointing the type of fault and its location.

11. Define hazard. (Nov/Dec 2011)

A hazard analysis is used as the first step in a process used to assess risk. The result of a hazard analysis is the identification of different types of hazards. A hazard is a potential condition that either exists or does not (its probability is 1 or 0). It may, singly or in combination with other hazards (sometimes called events) and conditions, become an actual functional failure or accident.

12. What is the difference between alpha and beta testing? (May/june 2009)

Alpha Testing:
1. It is always performed by the developers at the software development site.
2. Sometimes it is also performed by an Independent Testing Team.
3. Alpha Testing is not open to the market and public.
4. It is conducted for the software application and project.

Beta Testing (Field Testing):
1. It is always performed by the customers at their own site.
2. It is not performed by an Independent Testing Team.
3. Beta Testing is always open to the market and public.
4. It is usually conducted for a software product.

13. Smoke Testing:
• Software components already translated into code are integrated into a build.
• A series of tests is designed to expose errors that will keep the build from performing its functions.
• The build is integrated with the other builds and the entire product is smoke tested daily, using either top-down or bottom-up integration.

PART – B 1. Explain the concept of System Testing and discuss various types of System Testing. (June 2009)

SYSTEM TESTING S/w is incorporated with other system elements like hardware, people, information and a series of system integration and validation tests are conducted. These tests fall outside the scope of the software process and are not conducted solely by s/w engineers. System testing is actually a series of different tests whose primary purpose is to fully exercise the computer based system. Although each test has a different purpose, all work to verify that system elements have been properly integrated and perform allocated functions. Types of system testing:

1. Recovery testing
2. Security testing
3. Stress testing
4. Performance testing

Recovery testing: Many computer based systems must recover from faults and resume processing within a prespecified time. Recovery testing is a system test that forces the s/w to fail in a variety of ways and verifies that recovery is properly performed. If recovery requires human intervention, the mean time to repair is evaluated to determine whether it is within acceptable limits.

Security testing: Security testing attempts to verify that the protection mechanisms built into a system will, in fact, protect it from improper penetration. During security testing, the tester plays the role of the individual who desires to penetrate the system.

Stress testing: This executes a system in a manner that demands resources in abnormal quantity, frequency or volume. Essentially, the tester attempts to break the program. A variation of stress testing is a technique called sensitivity testing. In some situations, a very small range of data contained within the bounds of valid data for a program may cause extreme and even erroneous processing or profound performance degradation.

2. How do you define equivalence partitioning for testing? (June 2009)

EQUIVALENCE PARTITIONING

It is a black box testing method that divides the input domain of a program into classes of data from which test cases can be derived. Test case design for equivalence partitioning is based on an evaluation of equivalence classes for an input condition. The input data to a program usually fall into a number of different classes. These classes have common characteristics, for example positive numbers, negative numbers, strings without blanks and so on. Programs normally behave in a comparable way for all members of a class. Because of this equivalent behavior, these classes are sometimes called equivalence partitions or domains. A systematic approach to defect testing is based on identifying a set of equivalence partitions which must be handled by a program.

Guidelines for defining equivalence classes:
1. If an input condition specifies a range, one valid and two invalid equivalence classes are defined.
2. If an input condition requires a specific value, one valid and two invalid equivalence classes are defined.
3. If an input condition specifies a member of a set, one valid and one invalid equivalence class are defined.
4. If an input condition is Boolean, one valid and one invalid class are defined.

Test cases for each input domain data item can be developed and executed by applying the guidelines for the derivation of equivalence classes.
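Guideline 1 can be sketched as follows for an integer input that must lie in a range. The function name classify, the class labels and the range 1..100 are assumptions made for this illustration; the point is that one representative value per class is enough to cover the partition.

```python
def classify(value, low, high):
    """Assign an integer input to one of the three classes for a range condition."""
    if value < low:
        return "invalid_below"
    if value > high:
        return "invalid_above"
    return "valid"


# One representative test input per equivalence class for the hypothetical range 1..100.
representatives = {"invalid_below": 0, "valid": 50, "invalid_above": 101}

# Check that each representative really falls into the class it stands for.
covered = all(classify(v, 1, 100) == name for name, v in representatives.items())
```

Any other member of a class (say 37 instead of 50) is expected to exercise the program in the same way, which is why the method keeps the number of test cases small.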

3. Explain in detail about Black Box Testing. (Nov/Dec 2011)

BLACK BOX TESTING:

Black box testing is also called behavioral testing. It focuses on the functional requirements of the s/w. Black box testing enables the s/w engineer to derive sets of input conditions that will fully exercise all functional requirements for a program.

Errors found by black box testing:
1. Incorrect or missing functions
2. Interface errors
3. Errors in data structures or external database access

Various black box testing methods:
1. Equivalence partitioning
2. Boundary value analysis
3. Comparison testing
4. Orthogonal array testing

4. Write a detailed note on System Testing and Debugging. (Nov/Dec 2011)

SYSTEM TESTING AND DEBUGGING: S/w is incorporated with other system elements like hardware, people, information and a series of system integration and validation tests are conducted. These tests fall outside the scope of the software process and are not conducted solely by s/w engineers. System testing is actually a series of different tests whose primary purpose is to fully exercise the computer based system. Although each test has a different purpose, all work to verify that system elements have been properly integrated and perform allocated functions. Types of system testing:

Recovery testing, Security testing, Stress testing, Performance testing

5. Why unit testing is so important? Explain the concept of Unit testing in detail. (Nov/Dec 2011)

Unit testing is not a new concept. It has been there since the early days of programming. Usually developers, and sometimes white box testers, write unit tests to improve code quality by verifying each and every unit of the code used to implement functional requirements (when the tests are written before the code, this is known as test-driven development, TDD, or test-first development). Most of us might know the classic definition of unit testing: “Unit testing is the method of verifying the smallest piece of testable code against its purpose.” If the purpose or requirement is not met, then the unit test has failed.

Importance of Writing Unit Tests

Unit testing is used to design robust software components that help maintain code and eliminate issues in code units. We all know the importance of finding and fixing defects in the early stages of the software development cycle. Unit testing serves the same purpose. Unit testing is an integral part of the agile software development process. When the nightly build runs, the unit test suite should run and a report should be generated. If any of the unit tests has failed, then the QA team should not accept that build for verification. If we set this as a standard process, many defects would be caught early in the development cycle, saving much testing time.

Benefits:

Testing can be done in the early phases of the software development life cycle when other modules may not be available for integration

Fixing an issue in unit testing can fix many other issues occurring in later development and testing stages
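As an illustration of what a unit test looks like in practice, here is a minimal sketch using Python's standard unittest module. The function apply_discount is a hypothetical unit under test invented for this example; the test class checks one normal case and one error case.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical unit under test: reduce price by the given percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200, 25), 150)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(200, 120)


# Run the two tests programmatically and keep the result object.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

The unit is tested in isolation, with no other modules needed, which is what allows such tests to run early and on every nightly build.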

6. What are the types of Acceptance Tests? Explain. How will you document the test? (Nov/Dec 2012)

Acceptance testing: This is the final stage in the testing process before the system is accepted for operational use. The system is tested with data supplied by the system procurer rather than simulated test data. Acceptance testing may reveal errors and omissions in the system requirements definition because the real data exercise the system in different ways from the test data. Acceptance testing may also reveal requirements problems where the system's facilities do not really meet the user's needs or the system performance is unacceptable.

7. Write note on (Nov/Dec 2010)

a. Validation Testing

Validation testing: At the end of integration testing, the s/w is completely assembled as a package, interfacing errors have been uncovered and corrected, and a final series of software tests, validation testing, may begin. Validation succeeds when the s/w functions in a manner that can be reasonably expected by the customer. After each validation test has been conducted, one of two possible conditions exists:

1. The function or performance characteristic conforms to specification and is accepted.

2. A deviation from specification is uncovered and a deficiency list is created. It is often necessary to negotiate with the customer to establish a method for resolving deficiencies.

Configuration review: This is an important element of the validation process. The intent of the review is to ensure that all elements of the s/w configuration have been properly developed.

b. System Testing

S/w is incorporated with other system elements like hardware, people, information and a series of system integration and validation tests are conducted. These tests fall outside the scope of the software process and are not conducted solely by s/w engineers. System testing is actually a series of different tests whose primary purpose is to fully exercise the computer based system. Although each test has a different purpose, all work to verify that system elements have been properly integrated and perform allocated functions. Types of system testing:

Recovery testing, Security testing, Stress testing, Performance testing

8. Describe the testing objectives and its principles. (Apr/May 2011)

TESTING OBJECTIVES: 1. Testing is a process of executing a program with the intent of finding an error. 2. A good test case is one that has a high probability of finding an as yet undiscovered error. 3. A successful test is one that uncovers an as yet undiscovered error.

Testing should systematically uncover different classes of errors in a minimum amount of time and with a minimum amount of effort. A secondary benefit of testing is that it demonstrates that the software appears to be working as stated in the specifications. The data collected through testing can also provide an indication of the software's reliability and quality. However, testing cannot show the absence of defects; it can only show that software defects are present.

9. Briefly explain the basic path testing in detail. (Apr/May 2011)

BASIS PATH TESTING:

The basis path method enables the test case designer to derive a logical complexity measure of a procedural design and use this measure as a guide for defining a basis set of execution paths.

Flow graph notation

Flow graph is a simple notation for the representation of control flow. Each structured construct has a corresponding flow graph symbol.

Flow graph node: Represents one or more procedural statements.

Edges or links: Represent flow control.
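The logical complexity measure normally used in basis path testing is cyclomatic complexity, which for a connected flow graph with E edges and N nodes is V(G) = E - N + 2. That formula is not stated in the text above, so it is brought in here as an assumption for illustration, and the flow graph below is a hypothetical one (an if-else followed by a while loop). The value of V(G) gives the number of independent paths in the basis set.

```python
def cyclomatic_complexity(edges):
    """Compute V(G) = E - N + 2 for a connected flow graph given as (from, to) edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2


# Hypothetical flow graph:
#   node 1 branches to 2 or 3 (if / else), both join at 4 (loop test),
#   4 -> 5 is the loop body, 5 -> 4 loops back, 4 -> 6 exits.
flow_graph = [(1, 2), (1, 3), (2, 4), (3, 4), (4, 5), (5, 4), (4, 6)]

v_g = cyclomatic_complexity(flow_graph)  # 7 edges, 6 nodes
```

With two decision points (the if and the while) the graph needs three independent paths, so at least three test cases are required to execute every statement and every branch at least once.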

UNIT-V PART – A 1. Define Metrics. (Nov/Dec 11)

2. What is meant by Task Network? (Nov/Dec 11)

The use of a directed graph in the form of a project network, allows precedence relationships between tasks to be displayed visually, along with slack time for tasks and the critical path of the project.
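A small sketch of how the critical path can be found from a task network: the earliest finish time of each task is its duration plus the latest finish among its predecessors, and the critical path is the longest chain. The task names and durations below are hypothetical, and the code assumes the network has no cycles.

```python
def critical_path(durations, predecessors):
    """Return (project_length, path) for a task network without cycles."""
    finish = {}  # earliest finish time per task
    via = {}     # predecessor on the longest path into each task

    def earliest_finish(task):
        if task not in finish:
            start, via[task] = 0, None
            for p in predecessors.get(task, []):
                if earliest_finish(p) > start:
                    start, via[task] = finish[p], p
            finish[task] = start + durations[task]
        return finish[task]

    last = max(durations, key=earliest_finish)  # task that finishes last
    path = []
    while last is not None:  # walk back along the longest chain
        path.append(last)
        last = via[last]
    return finish[path[0]], path[::-1]


# Hypothetical task network (durations in days).
durations = {"spec": 3, "design": 5, "code": 8, "test_plan": 2, "test": 4}
predecessors = {
    "design": ["spec"],
    "code": ["design"],
    "test_plan": ["spec"],
    "test": ["code", "test_plan"],
}
length, path = critical_path(durations, predecessors)
```

In this example the test_plan task is not on the critical path: it can finish on day 5 but is not needed until day 16, so it has slack, while any delay to spec, design, code or test delays the whole project.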

3. Define direct and indirect measures of Software. (June 2009)

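Direct Measures – attributes that can be measured on their own and do not depend on other attributes or entities (e.g. lines of code, cost, effort).

Indirect Measures – attributes that may depend on other attributes or entities and must be derived (e.g. quality, complexity, reliability, maintainability).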

4. List out two important factors of software risks. (June 2009)

Unavailability of Resource

No Proper Project Plan

5. What functions are implemented by a SCM repository? (June 2009)

6. What are the elements of an effective measurement process? (June 2009)

7. What are the basic principles of software project scheduling? (June 2009)

Proper Resource Planning, Resource Utilization, Risk Management

8. How can software measurement be categorized? (Apr / May 2011)

As per quality factors the software measurements are categorized as ‘Direct’ and ‘Indirect Measures’.

9. Write the two projects scheduling methods applied to software development? (Apr / May 2011)

Program Evaluation and Review Technique (PERT), Critical Path Method (CPM)

10. Write short note on QFD. (Nov/Dec 2012).

Quality Function Deployment (QFD) was developed to bring a personal interface between customer and producer to modern manufacturing and business. In today's industrial society, where the growing distance between producers and users is a concern, QFD links the needs of the customer (end user) with design, development, engineering, manufacturing, and service functions.

11. Define software reliability and software availability. (Apr/May 2011)

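Reliability: The probability that the software operates without failure, satisfying the user requirements, in a specified environment for a specified time.

Availability: The probability that the product is operating according to requirements at a given point in time, so that users can continue their activities.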
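These two definitions are often quantified in textbooks through mean time to failure (MTTF) and mean time to repair (MTTR), with MTBF = MTTF + MTTR and availability = MTTF / (MTTF + MTTR) x 100%. The notes above do not state these formulas, so the following is only a sketch under that assumption, with hypothetical figures.

```python
def mean_time_between_failures(mttf_hours, mttr_hours):
    """MTBF = MTTF + MTTR, a simple measure of reliability."""
    return mttf_hours + mttr_hours


def availability_percent(mttf_hours, mttr_hours):
    """Availability = MTTF / (MTTF + MTTR) x 100%."""
    return mttf_hours / (mttf_hours + mttr_hours) * 100.0


# Hypothetical system: fails on average after 990 hours, takes 10 hours to repair.
print(mean_time_between_failures(990, 10))
print(availability_percent(990, 10))
```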

12. What are the various activities during software project planning? (May/june 2009)

* Resource Allocation
* Training the Staff Members
* Risk Management
* Configuration Management
* Scheduling

13. What are the risk management activities? (May/june 2009)

Resource Control, Staff Training and Monitoring.

14. Define Function point.

Based on a combination of program characteristics:

* external inputs and outputs;
* user interactions;
* external interfaces;
* files used by the system.
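A function point count can be sketched as a weighted sum of these characteristics, scaled by an adjustment factor. The example below assumes the commonly quoted average-complexity weights (4, 5, 4, 10, 7) and the adjustment FP = UFC x (0.65 + 0.01 x sum of 14 factors rated 0 to 5). Neither is given in these notes, and the counts are hypothetical.

```python
# Assumed average-complexity weights; not taken from the notes.
AVERAGE_WEIGHTS = {
    "external_inputs": 4,
    "external_outputs": 5,
    "user_inquiries": 4,
    "internal_files": 10,
    "external_interfaces": 7,
}


def unadjusted_function_points(counts, weights=AVERAGE_WEIGHTS):
    """Weighted sum of the counted program characteristics."""
    return sum(counts[name] * weight for name, weight in weights.items())


def function_points(counts, adjustment_factors):
    """Scale the unadjusted count by 14 complexity factors, each rated 0..5."""
    if len(adjustment_factors) != 14:
        raise ValueError("expected 14 adjustment factors")
    if any(not 0 <= factor <= 5 for factor in adjustment_factors):
        raise ValueError("each factor must be between 0 and 5")
    ufc = unadjusted_function_points(counts)
    return ufc * (0.65 + 0.01 * sum(adjustment_factors))


# Hypothetical counts for a small system, with every factor rated 'average' (3).
example_counts = {
    "external_inputs": 10,
    "external_outputs": 8,
    "user_inquiries": 5,
    "internal_files": 4,
    "external_interfaces": 2,
}

print(unadjusted_function_points(example_counts))
print(function_points(example_counts, [3] * 14))
```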

PART – B 1. Describe the activities of SCM in detail. (June 2009)

New versions of software systems are created as they change:

o For different machines/OS

o Offering different functionality

o Tailored for particular user requirements

Configuration management is concerned with managing evolving software systems:

o System change is a team activity

o CM aims to control the costs and effort involved in making changes to a system

2. Briefly explain the metrics used for estimating cost? (June 2009)

SOFTWARE COST ESTIMATION: Predicting the resources required for a software development process.

SOFTWARE COST COMPONENTS:
* Hardware and software
* Travel and training
* Effort costs
* Salaries of engineers involved in the project
* Social and insurance costs
* Effort costs must take overheads into account
* Costs of shared facilities

COSTING AND PRICING:
* Estimates are made to discover the cost to the developer of producing a software system.
* There is not a simple relationship between the development cost and the price charged to the customer.

PROGRAMMER PRODUCTIVITY:
* A measure of the rate at which individual engineers involved in software development produce software and associated documentation.
* Not quality oriented, although quality assurance is a factor in productivity assessment.

PRODUCTIVITY MEASURES:
* Size related measures based on some output from the software process. This may be lines of delivered source code, object code instructions etc.
* Function related measures based on an estimate of the functionality of the delivered software.

MEASUREMENT PROBLEM:
* Estimating the size of the measure.

3. Explain about COCOMO model & Delphi Method. (Nov/Dec 2011)

COCOMO model

An empirical model based on project experience.

Well-documented, 'independent' model which is not tied to a specific software vendor.

Long history from initial version published in 1981 (COCOMO-81) through various instantiations to COCOMO 2.

COCOMO 2 takes into account different approaches to software development, reuse, etc.
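The notes do not give the COCOMO equations. As an illustration, the basic COCOMO-81 model estimates effort as E = a x KLOC^b person-months and development time as T = c x E^d months. The sketch below assumes the commonly published basic-model coefficients for the three project modes; the 32 KLOC project is hypothetical.

```python
# Assumed basic COCOMO-81 coefficients (a, b, c, d) per project mode.
COEFFICIENTS = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


def basic_cocomo(kloc, mode="organic"):
    """Return (effort in person-months, duration in months) for a size in KLOC."""
    a, b, c, d = COEFFICIENTS[mode]
    effort = a * kloc ** b
    duration = c * effort ** d
    return effort, duration


# Hypothetical 32 KLOC project developed in organic mode.
effort, duration = basic_cocomo(32, "organic")
print(round(effort, 1), round(duration, 1))
print(round(effort / duration, 1))  # rough average staffing level
```

Dividing effort by duration gives a rough average team size, which is one way such an estimate feeds into project planning.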

4. Write a note on the following. (Nov/Dec 2011)

a. Software Cost Estimation: Refer to Question 2

b. Taxonomy of CASE Tools.

Taxonomy of CASE tools:

Computer aided software engineering (CASE) is automated support for the software engineering process.

The workshop for software engineering has been called an integrated project support environment and the tools that fill the workshop are collectively called CASE.

CASE provides the software engineer with the ability to automate manual activities and to improve engineering insight. CASE tools help to ensure that quality is designed in before the product is built.

Different levels of CASE technology:

1. Production process support technology. This includes support for process activities such as specification, design, implementation, testing, and so on. These were the earliest and consequently are the most mature CASE products.

2. Process management technology. This includes tools to support process modeling and process management. These tools will call on production process support tools to support specific process activities.

3. Meta CASE technology. Meta CASE tools are generators which are used to create production process and process management support tools.

It is necessary to create a taxonomy of CASE tools to better understand the breadth of CASE and to better appreciate where such tools can be applied in the software engineering process.

Different classification dimensions of CASE: CASE tools can be classified
* By function.
* By their roles as instruments for managers or technical people.
* By their use in the various steps of the software engineering process.
* By environment architecture that supports them.
* By their origin or cost.

5. Briefly discuss about the Risk Management activities. (Nov/Dec 2011)

Risk management: Risks are potential problems that affect the successful completion of a project; they involve uncertainty and potential loss. Risk analysis and management help the s/w team to overcome the problems caused by the risks.

The work product is called a Risk Mitigation, Monitoring and Management Plan (RMMMP).

(a) Risk strategies:
* Reactive strategies: also known as fire fighting; the project team sets resources aside to deal with problems and does nothing until a risk becomes a problem.
* Proactive strategies: risk management begins long before technical work starts; risks are identified and prioritized by importance, and the team lead builds a plan to avoid such risks.

(b) S/W risks:
* Project risk: threatens the project plan.
* Technical risk: threatens product quality and timeline.
* Business risk: threatens the viability of the s/w.
* Known risk: predictable from careful evaluation of the current project plan and extrapolation from past project experience.
* Unknown risk: some problems simply occur without warning.

(c) Risk identification:
* Product-specific risk: the project plan and s/w statement of scope are examined to identify any specific characteristics.
* Generic risk: potential threats to any s/w product.

(d) Risk impact:
* Risk components: performance, cost, support, schedule.
* Risk impact: negligible, marginal, critical, catastrophic.

The risk drivers affecting each risk component are classified according to their impact category and the potential consequence of each undetected s/w fault.
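One common way to prioritize identified risks, which the notes above do not describe, is to rank them by risk exposure, computed as probability of occurrence multiplied by the cost if the risk occurs. The sketch below assumes that approach; the risk descriptions, probabilities and costs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    category: str       # e.g. "project", "technical", "business"
    probability: float  # estimated likelihood, 0.0 to 1.0
    cost: float         # estimated cost if the risk occurs

    @property
    def exposure(self):
        """Risk exposure = probability x cost (an assumed ranking measure)."""
        return self.probability * self.cost


def prioritise(risks):
    """Return the risks ordered from highest to lowest exposure."""
    return sorted(risks, key=lambda risk: risk.exposure, reverse=True)


# Hypothetical risk table.
risk_table = [
    Risk("Market demand changes", "business", 0.1, 50000),
    Risk("Key staff leave the project", "project", 0.3, 40000),
    Risk("New technology underperforms", "technical", 0.5, 20000),
]

for risk in prioritise(risk_table):
    print(f"{risk.description}: {risk.exposure:.0f}")
```

A proactive team would draw a cut-off line in the sorted table and write mitigation plans for the risks above it.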

6. Explain the various software measurement metrics in detail. (Apr / May 2011)

7. Explain the Delphi method of Software Cost estimation. (Nov/Dec 2009)

8. What is the significance of decomposition in software project estimation? Explain the techniques of decomposition in detail. (Nov/Dec 2012)

Decomposing the system into subsystems helps the project experts to identify the resources required for developing each subsystem. From these figures they can work out the resources required for the whole system.
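The idea can be sketched as estimating each subsystem separately and summing the results. The example below assumes a three-point size estimate per subsystem, weighted as (optimistic + 4 x most likely + pessimistic) / 6, and a productivity figure in LOC per person-month. Both are illustrative assumptions, and the subsystem names and numbers are hypothetical.

```python
def expected_size(optimistic, most_likely, pessimistic):
    """Three-point expected value, weighted towards the most likely estimate."""
    return (optimistic + 4 * most_likely + pessimistic) / 6


def estimate_system(subsystems, loc_per_person_month):
    """Return (size per subsystem, total LOC, total effort in person-months)."""
    sizes = {name: expected_size(*estimates)
             for name, estimates in subsystems.items()}
    total_loc = sum(sizes.values())
    return sizes, total_loc, total_loc / loc_per_person_month


# Hypothetical decomposition: (optimistic, most likely, pessimistic) LOC.
example_subsystems = {
    "user_interface": (1800, 2400, 3000),
    "database": (3000, 3300, 4200),
    "reports": (1000, 1200, 1400),
}

sizes, total_loc, effort = estimate_system(example_subsystems, 500)
print(sizes)
print(total_loc, effort)
```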

9. Explain the stages of the CASE life cycle. (8 marks-Nov/Dec 2012)

Inception, Elaboration, Implementation and Testing are the different life cycle phases in the CASE development process model.

It is an Iterative and Incremental Model.

10. Explain in detail the CASE repositories. (8 marks- Nov/Dec 2012)

11. How to estimate software cost? Explain the different methods in detail. (Nov/Dec 2010)