C:\Vision\17Performance Pt 2.Wpd

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

The Retina and Vision

CHAPTER 19: The Retina and Vision. The visual system is arguably the most important system through which our brain gathers information about our surroundings, and forms one of our most complex physiological systems. In vertebrates, light entering the eye through the lens is detected by photosensitive pigment in the photoreceptors, converted to an electrical signal, and passed back through the layers of the retina to the optic nerve, and from there, through the visual nuclei, to the visual cortex of the brain. At each stage, the signal passes through an elaborate system of biochemical and neural feedbacks, the vast majority of which are poorly, if at all, understood. Although there is great variety in detail between the eyes of different species, a number of important features are qualitatively conserved. Perhaps the most striking of these features is the ability of the visual system to adapt to background light. As the background light level increases, the sensitivity of the visual system is decreased, which allows for operation over a huge range of light levels. From a dim starlit night to a bright sunny day, the background light level varies over 10 orders of magnitude (Hood and Finkelstein, 1986), and yet our eyes continue to operate across all these levels without becoming saturated with light. The visual system accomplishes this by ensuring that its sensitivity varies approximately inversely with the background light, a relationship known as Weber’s law (Weber, 1834) and one that we discuss in detail in the next section. Because of this adaptation, the eye is more sensitive to changes in light level than to a steady input.
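
The inverse relationship between sensitivity and background light described in this excerpt can be sketched numerically. The following is a minimal illustration, not taken from the chapter: the function names and the Weber fraction of 0.02 are assumptions chosen only for demonstration.

```python
# Minimal sketch of Weber's law. The Weber fraction (0.02) and both
# function names are illustrative assumptions, not values from the chapter.

def increment_threshold(background, weber_fraction=0.02):
    """Smallest detectable change in light, proportional to the background."""
    return weber_fraction * background


def sensitivity(background, weber_fraction=0.02):
    """Sensitivity taken as the reciprocal of the increment threshold."""
    return 1.0 / increment_threshold(background, weber_fraction)


if __name__ == "__main__":
    # Eleven background levels spanning 10 orders of magnitude
    # (arbitrary units), mirroring the range quoted in the excerpt.
    for exponent in range(11):
        level = 10.0 ** exponent
        print(f"background=1e{exponent:<2d} "
              f"threshold={increment_threshold(level):.3g} "
              f"sensitivity={sensitivity(level):.3g}")
```

Under this idealisation the ratio of threshold to background stays constant at every level, which is why the response does not saturate as the background grows.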

Chromatic Mach Bands: Behavioral Evidence for Lateral Inhibition in Human Color Vision

Perception & Psychophysics 1987, 41 (2), 173-178. Chromatic Mach bands: Behavioral evidence for lateral inhibition in human color vision. COLIN WARE, University of New Brunswick, Fredericton, New Brunswick, Canada, and WILLIAM B. COWAN, National Research Council of Canada, Ottawa, Ontario, Canada. Apparent hue shifts consistent with lateral inhibition in color mechanisms were measured, in five purely chromatic gradients, for 7 observers. Apparent lightness shifts were measured in achromatic versions of the same gradients, and a correlation was found to exist between the magnitude of the chromatic shifts and the magnitude of the achromatic shifts. The data can be modeled by a function that approximates the activity of color-sensitive neurons found in the monkey cortex. Individual differences and the failure of some previous researchers to find the effect can be accounted for by differences in the function parameters for different observers. One of the intensity gradients used by Ernst Mach (1886/1967) to demonstrate what have become known as "Mach bands" is graphed in Figure 1 (curve C). Observers of a pattern with this luminance profile report a bright band at the point where the luminance begins to decline. As with others like it, this observation represents one of the principal sources of evidence for spatially inhibitory interactions in the human visual system. [...] an achromatic component, chromatic shifts could be caused by a coupling to the achromatic effect through, for example, the Bezold-Brücke effect. They also argue that, because prolonged viewing was allowed, chromatic Mach bands may be an artifact of successive contrast and eye movements and not evidence for lateral interactions. On the other side, those who find chromatic Mach bands [...]
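
The bright band reported where the luminance begins to decline can be reproduced with a very simple lateral-inhibition model. The sketch below is an illustration only and is not the function fitted by Ware and Cowan: the profile shape, the inhibition radius, and the inhibition strength are all assumed values, and the helper names are hypothetical.

```python
# Minimal 1-D lateral-inhibition sketch (assumed parameters throughout).
# Each unit's response is its own input minus a fraction of the mean
# input of its neighbours within a fixed radius.

def ramp_profile(high=1.0, low=0.2, plateau=20, ramp=20):
    """High plateau, linear decline, low plateau (a Mach-style gradient)."""
    step = (high - low) / ramp
    decline = [high - step * (k + 1) for k in range(ramp)]
    return [high] * plateau + decline + [low] * plateau


def lateral_inhibition(signal, radius=3, strength=0.5):
    """Subtract a scaled average of neighbouring inputs from each input."""
    n = len(signal)
    response = []
    for i in range(n):
        # Clamp indices at the borders so edge units see a flat continuation.
        neighbours = [signal[min(max(j, 0), n - 1)]
                      for j in range(i - radius, i + radius + 1) if j != i]
        surround = sum(neighbours) / len(neighbours)
        response.append(signal[i] - strength * surround)
    return response


if __name__ == "__main__":
    profile = ramp_profile()
    response = lateral_inhibition(profile)
    print("bright band at index", response.index(max(response)))
    print("dark band at index", response.index(min(response)))
```

On the flat plateaus and in the middle of the ramp the surround average equals the local input, so the response is just a scaled copy of the luminance. Only at the two knees does the surround differ from the centre, giving an overshoot at the top of the decline and an undershoot at the bottom.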

COGS 101A: Sensation and Perception 1

COGS 101A: Sensation and Perception. Virginia R. de Sa, Department of Cognitive Science, UCSD. Lecture 4: Coding Concepts – Chapter 2
Course Information
• Class web page: http://cogsci.ucsd.edu/ desa/101a/index.html
• Professor: Virginia de Sa
  - I’m usually in Chemistry Research Building (CRB) 214 (also office in CSB 164)
  - Office Hours: Monday 5-6pm
  - email: desa at ucsd
  - Research: Perception and Learning in Humans and Machines
For your Assistance
TAs:
• Jelena Jovanovic, OH: Wed 2-3pm, CSB 225
• Katherine DeLong, OH: Thurs noon-1pm, CSB 131
IAs:
• Jennifer Becker, OH: Fri 10-11am, CSB 114
• Lydia Wood, OH: Mon 12-1pm, CSB 114
Course Goals
• To appreciate the difficulty of sensory perception
• To learn about sensory perception at several levels of analysis
• To see similarities across the sensory modalities
• To become more attuned to multi-sensory interactions
Grading Information
• 25% each for 2 midterms
• 32% comprehensive final
• 3% each for 6 lab reports, due at the end of the lab
• Bonus for participating in a psych or cogsci experiment AND writing a paragraph description of the study
You are responsible for knowing the lecture material and the assigned readings. Read the readings before class and ask questions in class.
Academic Dishonesty
The University policy is linked off the course web page. You will all have to sign a form in section. For this class:
• Labs are done in small groups but writeups must be in your own words
• There is no collaboration on midterms and final exam
Last Class
We learned about the cells in the Retina
This Class
Coding Concepts – Everything after transduction (following Chris Johnson’s notes)
Remember: The cells in the retina are neurons
Neurons are specialized cells that transmit electrical/chemical information to other cells. Neurons generally receive a signal at their dendrites and transmit it electrically to their soma and axon.

MTTTS17 Dimensionality Reduction and Visualization

MTTTS17 Dimensionality reduction and visualization, Spring 2020. Lecture 5: Human perception. Jaakko Peltonen, jaakko dot peltonen at tuni dot fi
Human perception (part 1)
Aoccdrnig to a rscheearch at Cmabrigde Uinervtisy, it deosn't mttaer in waht oredr the ltteers in a wrod are, the olny iprmoetnt tihng is taht the frist and lsat ltteer be at the rghit pclae. The rset can be a toatl mses and you can sitll raed it wouthit porbelm. Tihs is bcuseae the huamn mnid deos not raed ervey lteter by istlef, but the wrod as a wlohe.
Most strikingly, a recent paper showed only an 11% slowing when people read words with reordered internal letters.
Human perception and visualization
• Visualization is young as a science
• The conceptual framework of the science of visualization is based on the human perception
• If care is not taken bad designs may be standardized
Gibson’s affordance theory
Sensory and arbitrary symbols
Sensory symbols: resistance to instructional bias
Arbitrary symbols (Could you tell the difference between 10000 dots and 9999 dots?)
Stages of perceptual processing
1. Parallel processing to extract low-level properties of the visual scene
• rapid parallel processing
• extraction of features, orientation, color, texture, and movement patterns
• iconic store
• bottom-up, data driven processing
2.

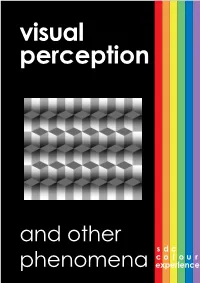

Visual Perception

Visual perception and other phenomena (SDC Colour Experience). Visual perception: Visual perception is the process of using vision to acquire information about the surrounding environment or situation. Perception relies on an interaction between the eye and the brain and in many cases what we perceive is not, in fact, reality. There are four different ways in which humans visually perceive the world. When we observe an object we recognise: brightness, movement, shape, colour. The illustration on the front cover appears to have two distinctly different sets of horizontal diamonds, one darker than the other. As shown above with an area removed this is an incorrect perception as in reality the diamonds are all identical. Ouchi effect: Named after the Japanese artist Hajime Ouchi who discovered that when horizontal and vertical patterns are combined the eye jitters over the image and the brain perceives movement where none exists. Blinking effect: Try to count the dots in the diagram above. Despite a static image, your eyes will make it dynamic and attempt to fill in the yellow circles with the blue of the background. The reason behind this is not yet fully understood. When coloured lines cross over neutral background the dots appear to be coloured. If the neutral lines cross the coloured then the dots appear neutral. When the grids are distorted the illusion is reduced or disappears completely as shown in the examples above. Dithering: This is a colour reproduction technique in which dots or pixels are arranged in such a way that allows us to perceive more colours than are actually there.
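
The dithering idea in this excerpt can be shown with a small sketch. The excerpt does not name a particular method; ordered dithering with a 2x2 Bayer threshold matrix is swapped in here purely as one common example, and the function name is hypothetical.

```python
# Ordered dithering sketch using a 2x2 Bayer matrix (an assumed choice;
# the excerpt does not say which dithering method it has in mind).
# Grey levels in [0, 1] are rendered with only two "inks", 0 and 1.

BAYER_2X2 = [[0, 2],
             [3, 1]]


def ordered_dither(image):
    """Return a 0/1 image whose local average approximates the grey input."""
    dithered = []
    for y, row in enumerate(image):
        out_row = []
        for x, value in enumerate(row):
            # Thresholds are 0.125, 0.375, 0.625, 0.875 across each 2x2 tile.
            threshold = (BAYER_2X2[y % 2][x % 2] + 0.5) / 4.0
            out_row.append(1 if value > threshold else 0)
        dithered.append(out_row)
    return dithered


if __name__ == "__main__":
    grey = [[0.25] * 4 for _ in range(4)]
    for row in ordered_dither(grey):
        print(row)
```

Viewed from a distance, a tile with one dot in four switched on reads as a 25% grey even though only black and white are printed, which is the sense in which more tones are perceived than are actually present.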

Informing Computer Vision with Optical Illusions

Informing Computer Vision with Optical Illusions. Nasim Nematzadeh, David M. W. Powers, and Trent Lewis, College of Science and Engineering, Flinders University, Adelaide, Australia. Abstract: Illusions are fascinating and immediately catch the people's attention and interest, but they are also valuable in terms of giving us insights into human cognition and perception. A good theory of human perception should be able to explain the illusion, and a correct theory will actually give quantifiable results. We investigate here the efficiency of a computational filtering model utilised for modelling the lateral inhibition of retinal ganglion cells and their responses to a range of Geometric Illusions using isotropic Differences of Gaussian filters. This study explores the way in which illusions have been explained and shows how a simple standard model of vision based on classical receptive fields can predict the existence of these [...] increasingly detailed biological characterization of both retinal and cortical cells over the last half a century (1960s-2010s), there remains considerable uncertainty, and even some controversy, as to the nature and extent of the encoding of visual information by the retina, and conversely of the subsequent processing and decoding in the cortex [6, 8]. We explore the response of a simple bioplausible model of low-level vision on Geometric/Tile Illusions, reproducing the misperception of their geometry, that we reported for the Café Wall and some Tile Illusions [2, 5] and here will report on a range of Geometric illusions.
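
An isotropic Difference of Gaussians filter of the kind mentioned in this abstract can be sketched as below. This is a generic construction, not the authors' implementation: the kernel size, the two sigma values, and the function names are assumptions made for illustration.

```python
import math

# Generic isotropic Difference-of-Gaussians (DoG) kernel. The kernel size
# and sigma values are illustrative assumptions, not the paper's settings.


def gaussian_kernel(size, sigma):
    """Square 2-D Gaussian kernel of odd width `size`, normalised to sum 1."""
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    return [[value / total for value in row] for row in kernel]


def dog_kernel(size, sigma_center, sigma_surround):
    """Narrow excitatory centre minus broad inhibitory surround."""
    center = gaussian_kernel(size, sigma_center)
    surround = gaussian_kernel(size, sigma_surround)
    return [[c - s for c, s in zip(c_row, s_row)]
            for c_row, s_row in zip(center, surround)]


if __name__ == "__main__":
    kernel = dog_kernel(size=9, sigma_center=1.0, sigma_surround=2.0)
    print("centre weight:", round(kernel[4][4], 4))
    print("edge weight:  ", round(kernel[4][0], 4))
```

Because both Gaussians are normalised before subtraction, the weights sum to zero, so a uniform region produces no response and only local contrast passes through, which is the lateral-inhibition behaviour the abstract refers to.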

CROWN 85: Visual Perception: a Window to Brain and Behavior Lecture 5

CROWN 85: Visual Perception: A Window to Brain and Behavior, Winter 2016. Lecture 5: Structure of and Information Processing in the Retina
Lectures 5: better make it a triple (3 x)
[Figure: blind spot demonstration (close left eye); right eye, temporal and nasal sides]
Pupil factoids
• controls amount of light entering eye
• depth of focus (vergence-accommodation-pupil reflex)
• often limits optics to center of cornea yielding fewer aberrations
Lecture 5 outline
Reading: Joy of Perception Retina; Eye Brain and Vision; Web Vision; How the Retina Works (American Scientist) [advanced]
Looking: Information Processing in the Retina (Sinauer); How Lateral Inhibition Enhances Visual Edges (YouTube)
OVERVIEW: Once an image has been formed on the retina and visual transduction has occurred, neurons in the retina and the brain are ready to begin some serious information processing. In this lecture we will first discuss the structure of the retina and then look at some perceptual phenomena related to the functioning of receptors and the transformations of visual information by neural networks found in the retina.
Why do animals have pupils of different shapes? (Ryann Miguel, Crown 85)
Review
The pupil: hole in the middle of the iris through which light enters the eye.
The size and shape of a pupil, such as a pinhole, affects what amount of light hits the back of the eye and the quality and strength of an image.

Mach Band Type Lateral Inhibition in Different Sense Organs*

Mach Band Type Lateral Inhibition in Different Sense Organs. GEORG VON BÉKÉSY, from the Laboratory of Sensory Science, University of Hawaii, Honolulu, Hawaii. ABSTRACT: Experiments were done on the skin with shearing forces, vibrations, and heat stimuli and on the tongue with taste stimuli to show that the well known Mach bands are not exclusively a visual phenomenon. On the contrary, it is not difficult to produce areas of a decreased sensation magnitude corresponding to the dark Mach bands in vision. It is shown on a geometrical model of nervous interaction that the appearance of Mach bands for certain patterns of stimulus distribution is correlated with nervous inhibition surrounding the area of sensation. This corroborates the earlier finding that surrounding every area transmitting sensation there is an area simultaneously transmitting inhibition. INTRODUCTION: The importance of lateral inhibition in sensory perception has become more and more evident from recent research. Formerly, lateral inhibition was considered a small side effect. But it now appears that without lateral inhibition much sensory stimulus localization would be lost. To illustrate the magnitude of lateral inhibition in hearing, we can for instance arrange 20 to 40 loud-speakers extending 10-20 ft. in a single row. Common sense would lead us to expect to perceive a sound source with a size comparable to the length of the row. Instead of this, when the distance between the loud-speakers and the observer is about 3 ft, the observed size of the sound source is of the order of the diameter of one single loud-speaker.

QPR No. 87 191 (XIV

XIV. NEUROPHYSIOLOGY. Academic and Research Staff: Dr. W. S. McCulloch, Prof. B. Pomeranz, Dr. A. Natapoff, Prof. J. Y. Lettvin, Dr. T. O. Binford, Dr. A. Taub, Prof. P. D. Wall, Dr. S-H. Chung, B. Howland, Prof. M. Blum, Dr. H. Hartman, Diane Major, Prof. J. E. Brown, Dr. Dora Jassik-Gerschenfeld, W. H. Pitts, Prof. P. R. Gross, Dr. K. Kornacker, Sylvia G. Rabin, Dr. R. Moreno-Diaz. Graduate Students: G. P. Nelson, J. I. Simpson, W. A. Wright.
A. A COMMENT ON SHIFT REGISTERS AND NEURAL NETS†
Shift registers, linear and nonlinear, can be regarded as a subclass of neural nets, and, therefore, the results of the general theory of neural nets apply to them, not vice versa. A useful technique for investigating neural nets is provided by the state transition matrix. We shall apply it to shift-register networks. The notation will be the same as that previously used.¹ Let an SR network be a neural net of N neurons and M external inputs, defined by a set of N Boolean equations of the form
$$
\begin{aligned}
y_1(t) &= f_1\bigl(x_1(t-1), x_2(t-1), \ldots, x_M(t-1);\; y_1(t-1), \ldots, y_N(t-1)\bigr) \\
y_2(t) &= y_1(t-1) \\
&\;\;\vdots \\
y_N(t) &= y_{N-1}(t-1),
\end{aligned}
\tag{1}
$$
where $f_1(x_1, x_2, \ldots, x_M;\, y_1, y_2, \ldots, y_N)$ can be any Boolean function of the external inputs $x_1, x_2, \ldots, x_M$, and of the outputs $y_1, y_2, \ldots, y_N$. The SR network corresponds to a shift register of N delay elements, which is linear or nonlinear, depending upon the nature of the function $f_1$.
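
The update rule in Eq. 1 can be traced with a short simulation. The sketch below is illustrative only: the function names are hypothetical, and the particular feedback function (exclusive-or of the single input with the last stage, which makes the register linear) is an assumed example rather than one taken from the report.

```python
# Sketch of one time step of the SR network in Eq. 1. Names and the
# example feedback function are assumptions made for illustration.

def sr_step(state, inputs, f1):
    """Advance the network by one step.

    state  -- tuple (y1, ..., yN) at time t-1
    inputs -- tuple (x1, ..., xM) at time t-1
    f1     -- Boolean function of (inputs, state) giving y1 at time t
    """
    new_y1 = f1(inputs, state)
    # y_k(t) = y_{k-1}(t-1) for k = 2..N: everything shifts one place.
    return (new_y1,) + tuple(state[:-1])


def xor_feedback(inputs, state):
    """Assumed linear example: y1(t) = x1(t-1) XOR yN(t-1)."""
    return inputs[0] ^ state[-1]


if __name__ == "__main__":
    state = (0, 0, 0)               # N = 3 delay elements
    for x in (1, 0, 0, 0):          # M = 1 external input, one pulse
        state = sr_step(state, (x,), xor_feedback)
        print(state)
```

With this feedback a single input pulse circulates around the three stages indefinitely. Replacing `xor_feedback` with a function that uses AND or OR of the arguments gives a nonlinear register under the same update rule.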

Engineering Analysis of Physiological Phenomena 10: The Eye as a Transducer

Engineering Analysis of Physiological Phenomena 10: The Eye as a Transducer (Seoul National University Graduate School, Interdisciplinary Program in Biomedical Engineering)
ABSTRACT
• Visible light; 380 nm~825 nm: lens, retina
• Cone cell; at the foveola (center of the eye): 3 µm in diameter, 0.7' visual angle
• Amplitude range: 4500:1 (cone), 7 min dark adaptation time; 22:1 (rod), 30 min dark adaptation time
• Photoreceptors: generate graded potential, proportional to logarithm of visual stimulus
• Retina; five layers
1. photoreceptors; receptor potential
2. bipolar cells; subtraction
3. horizontal cells; gather receptor potentials → local value
4. ganglion cells; translate to APs
5. amacrine cells; detection of illuminance change
• Cones
1. red cones; 65%, respond maximally to yellow
2. green cones; 33%, respond maximally to green
3. blue cones; 2%, respond maximally to indigo
• Subjective sensation; saturation & hue: depend on the ratio red:green:blue (CR:CG:CB); white = (CR:CG:CB) = 90:45:1
• Standard chromaticity diagram: 0.9 log CR + 0.1 log CB - log CG ↔ log CG - log CB; topologically similar to standard diagram; 3-D map in visual cortex (2-D color map + illuminance); chromaticity diagram for color blindness
• Contrast enhancement: B&W enhancement, color enhancement; hypothetical retinal model
Characteristics of the Eye
• Visible light; electromagnetic wave: 825 nm (3.6×10^14 Hz)~380 nm (7.9×10^14 Hz)
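
The frequencies quoted for the two ends of the visible range follow from the relation f = c / λ. A minimal check is sketched below; the function name is hypothetical, and the vacuum speed of light is used as the propagation speed.

```python
# Convert the wavelength limits quoted in the slides to frequency using
# f = c / wavelength. The function name is an illustrative assumption.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact SI value in vacuum


def wavelength_nm_to_hz(wavelength_nm):
    """Frequency in Hz of light with the given vacuum wavelength in nm."""
    return SPEED_OF_LIGHT_M_PER_S / (wavelength_nm * 1e-9)


if __name__ == "__main__":
    for nm in (825, 380):
        print(f"{nm} nm -> {wavelength_nm_to_hz(nm):.2e} Hz")
```

Both results agree with the slide values to two significant figures.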

Early Visual Processing: Receptive Fields & Retinal Processing (Chapter 2, Part 2)

Early Visual Processing: Receptive Fields & Retinal Processing (Chapter 2, part 2). Lecture 5, Jonathan Pillow, Sensation & Perception (PSY 345 / NEU 325), Princeton University, Spring 2019
What does the retina do?
1. Transduction (this is a major, important concept)
• Conversion of energy from one form to another (i.e., “light” into “electrical energy”)
2. Processing
• Amplification of very weak signals (1-2 photons can be detected!)
• Compression of image into more compact form so that information can be efficiently sent to the brain; optic nerve = “bottleneck”; analogy: jpeg compression of images
Basic anatomy: photomicrograph of the retina
[Figure labels: outer retina, inner retina, retinal ganglion cell, bipolar cell, cone, optic disc (blind spot), optic nerve]
What’s crazy about this is that the light has to pass through all the other junk in our eye before getting to photoreceptors! Cephalopods (squid, octopus): did it right.
• photoreceptors in innermost layer, no blind spot!
Debate: 1. accident of evolution? OR 2. better to have photoreceptors near blood supply?
[Figure: same labels, plus RPE (retinal pigment epithelium)]
blind spot demo
Phototransduction: converting light to electrical signals
Rods: • respond in low light (“scotopic”) • only one kind: don’t process color • 90M in humans
Cones: • respond in daylight (“photopic”) • 3 different kinds: responsible for color processing • 4-5M in humans
Phototransduction: converting light to electrical signals
outer segments • packed with

The Role of Spatiotemporal Edges in Visibility and Visual Masking

The role of spatiotemporal edges in visibility and visual masking. Stephen L. Macknik*†, Susana Martinez-Conde*, and Michael M. Haglund‡. *Department of Neurobiology, Harvard Medical School, 220 Longwood Avenue, Boston, MA 02115; and ‡Duke University Medical Center, Division of Neurosurgery, Box 3807, Durham, NC 27710. Communicated by David H. Hubel, Harvard Medical School, Boston, MA, March 30, 2000 (received for review November 2, 1999). What parts of a visual stimulus produce the greatest neural signal? Previous studies have explored this question and found that the onset of a stimulus’s edge is what excites early visual neurons most strongly. The role of inhibition at the edges of stimuli has remained less clear, however, and the importance of neural responses associated with the termination of stimuli has only recently been examined. Understanding all of these spatiotemporal parameters (the excitation and inhibition evoked by the stimulus’s onset and termination, as well as its spatial edges) is crucial if we are to develop a general principle concerning the relationship between neural signals and the parts of the stimulus that generate them. [...] stimulus temporal modulation (i.e., flicker). It is not yet clear, however, which parts of the stimulus’s lifetime are most effective in generating inhibitory signals. Here, we probe the role of inhibition at spatiotemporal edges using a combination of psychophysics and electrophysiology. We moreover used an optical imaging technique (14, 15) to observe activity-correlated signals derived by spatial edges on the surface of the primary visual cortex. Visual masking is a phenomenon in which an otherwise visible stimulus, called a target, is rendered invisible (or less visible) by [...]