A Theodicy for Artificial Universes: Moral Considerations on Simulation Hypotheses

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Pocketciv a Solitaire Game of Building Civilizations

PocketCiv: A solitaire game of building civilizations. (version:07.11.06)

OBJECT: Starting with a few tribes, lead your civilization through the ages to become...civilized.

COMPONENTS:

What you will definitely need:
• The deck of Event cards

If you are playing basic, no-frills, true PocketCiv style, you will additionally need:
• A pencil
• A pad of paper
• This set of rules
• A printout of the Advances and Wonders

If you want to play a bunch of pre-built Scenarios, you will need:
• The Scenario book

Finally, if you want a full “board game” experience, you will need to download, print out, and mount:
• The files that contain the graphics for the Resource Tiles and Land Masses
• The deck of Advances and Wonders
• Poker chips (for keeping track of Gold)
• Glass beads or wood cubes (for use as Tribes)

A FEW WORDS OF NOTE: Since this is a solitaire game, the system is fairly open to interpretation and to how you want to play it. For example, while the following "Build Your World" section talks about creating a single Frontier area, nothing prevents you from breaking up the Frontier into two areas, which would give you two Sea areas, as if your Empire were in the middle of a peninsula. It's your world; feel free to play with it as much as you like. Looking at the scenario maps will give you an idea of how varied you can make your world. However, your first play should conform fairly closely to these given rules, as the rules and events on the cards pertain to these rules.

UNIT COUNTER POOL Both

11/23/2020 UNIT COUNTER POOL

AHIKS UNIT COUNTER POOL

To request a lost counter, rulebook or accessory, email the UCP custodian, Brian Laskey, at [email protected].

Please Note: In order to use the Unit Counter Pool you must be a current member of AHIKS. If you are not a member but would like to find out how to join, please go to the New Member Application page.

AVALON HILL-VICTORY GAMES Across Five Aprils General 25-2 Counter Insert Advanced Civilization Bulge ‘81 Afrika Korps Empires in Arms Air Assault on Crete 1776 Anzio Tac Air ASL (Beyond Valor, Red Barricades, Yanks) B-17 General 26-3 Counter Insert Bismarck Flight Leader Blitzkrieg Firepower Bitter Woods (1st ed. No Utility), 2nd ed Merchant of Venus Breakout Normandy Bulge ‘65 General 28-5 Counter Insert Bulge ’81 Midway/Guadalcanal Expansion Bulge ’91 Bull Run Caesar’s Legions Merchant of Venus Chancellorsville Panzer Armee Afrika Civil War Panzer Blitz Desert Storm (Gulf Strike: Desert Shield) Panzerkrieg D-Day Panzer Leader Devil’s Den Russian Campaign 1809 Siege of Jerusalem (Roman Only) Empires in Arms 1776 Firepower Stalingrad (Original) Flashpoint Golan Stalingrad (AHgeneral.org version) Flat Top (No Markers) Storm over Arnhem Fortress Europa Squad Leader France 1940 Submarine Gettysburg ‘77 Tactics II GI Anvil (German & SS Infantry; Small Arms) Third Reich Guadalcanal Tobruk Turning

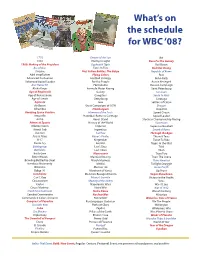

What's on the Schedule For

What’s on the schedule for WBC ‘08? 1776 Empire of the Sun Ra! 1830 Enemy In Sight Race For the Galaxy 1960: Making of the President Euphrat & Tigris Rail Baron Ace of Aces Facts In Five Red Star Rising Acquire Fast Action Battles: The Bulge Republic of Rome Adel Verpflichtet Flying Colors Risk Advanced Civilization Football Strategy Robo Rally Advanced Squad Leader For the People Russia Besieged ASL Starter Kit Formula De Russian Campaign Afrika Korps Formula Motor Racing Saint Petersburg Age of Empires III Galaxy San Juan Age of Renaissance Gangsters Santa Fe Rails Age of Steam Gettysburg Saratoga Agricola Goa Settlers of Catan Air Baron Great Campaigns of ACW Shogun Alhambra Hamburgum Slapshot Amazing Space Venture Hammer of the Scots Speed Circuit Amun-Re Hannibal: Rome vs Carthage Squad Leader Anzio Here I Stand Stockcar Championship Racing Athens & Sparta History of the World Successors Atlantic Storm Imperial Superstar Baseball Attack Sub Ingenious Sword of Rome Auction Ivanhoe Through the Ages Axis & Allies Kaiser's Pirates Thurn & Taxis B-17 Kingmaker Ticket To Ride Battle Cry Kremlin Tigers In the Mist Battlegroup Liar's Dice Tikal Battleline Lost Cities Titan BattleLore Manoeuver Titan Two Bitter Woods Manifest Destiny Titan: The Arena Brawling Battleship Steel March Madness Trans America Breakout Normandy Medici Twilight Struggle Britannia Memoir '44 Union Pacific Bulge '81 Merchant of Venus Up Front Candidate Monsters Ravage America Vegas Showdown Can't Stop Monty's Gamble Victory in the Pacific Carcassonne Mystery of the -

Archaeology, Antiquarianism, and the Landscape in American Women's Writing, 1820--1890 Christina Healey University of New Hampshire, Durham

University of New Hampshire University of New Hampshire Scholars' Repository Doctoral Dissertations Student Scholarship Spring 2009 Excavating the landscapes of American literature: Archaeology, antiquarianism, and the landscape in American women's writing, 1820--1890 Christina Healey University of New Hampshire, Durham Follow this and additional works at: https://scholars.unh.edu/dissertation Recommended Citation Healey, Christina, "Excavating the landscapes of American literature: Archaeology, antiquarianism, and the landscape in American women's writing, 1820--1890" (2009). Doctoral Dissertations. 475. https://scholars.unh.edu/dissertation/475 This Dissertation is brought to you for free and open access by the Student Scholarship at University of New Hampshire Scholars' Repository. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of University of New Hampshire Scholars' Repository. For more information, please contact [email protected]. EXCAVATING THE LANDSCAPES OF AMERICAN LITERATURE: ARCHAEOLOGY, ANTIQUARIANISM, AND THE LANDSCAPE IN AMERICAN WOMEN'S WRITING, 1820-1890 BY CHRISTINA HEALEY B.A., Providence College, 1999 M.A., Boston College, 2004 DISSERTATION Submitted to the University of New Hampshire in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in English May, 2009 UMI Number: 3363719 Copyright 2009 by Healey, Christina INFORMATION TO USERS The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleed-through, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted.

Asian and African Civilizations: Course Description, Topical Outline, and Sample Unit. INSTITUTION Columbia Univ., New York, NY

DOCUMENT RESUME ED 423 174 SO 028 555 AUTHOR Beaton, Richard A. TITLE Asian and African Civilizations: Course Description, Topical Outline, and Sample Unit. INSTITUTION Columbia Univ., New York, NY. Esther A. and Joseph Klingenstein Center for Independent School Education. PUB DATE 1995-00-00 NOTE 294p.; Photographs and illustrations may not reproduce well. AVAILABLE FROM Esther A. and Joseph Klingenstein Center for Independent School Education, Teachers College, Columbia University, 525 West 120th Street, Box 125, New York, NY, 10027. PUB TYPE Dissertations/Theses Practicum Papers (043) EDRS PRICE MF01/PC12 Plus Postage. DESCRIPTORS *African Studies; *Asian Studies; Course Content; *Course Descriptions; Ethnic Groups; Foreign Countries; *Indians; Non Western Civilization; Secondary Education; Social Studies; World History IDENTIFIERS Africa; Asia; India ABSTRACT This paper provides a skeleton of a one-year course in Asian and African civilizations intended for upper school students. The curricular package consists of four parts. The first part deals with the basic shape and content of the course as envisioned. The remaining three parts develop a specific unit on classical India with a series of teacher notes, a set of student readings that can be used according to individual needs, and a prose narrative of content with suggestions for extension and inclusion. (EH) Reproductions supplied by EDRS are the best that can be made from the original document.

Scott Stephenson History of Computer Game Design

Scott Stephenson
History of Computer Game Design

Civilization: Sid Meier’s Legacy

Acclaimed as one of the greatest computer games of all time, Sid Meier’s Civilization and its follow-up Sid Meier’s Civilization II rocked the gaming world with a delicate balance of strategy and simulation. Listed as Gamespot U.K.’s number one all-time series among both computer and console games, “Civ” and “Civ II” are universally recognized for the level to which their creator, hall-of-fame designer Sid Meier, raised the bar in terms of overall outstanding game-play. Few games have risen to the level of excellence that these games have achieved in computer gaming history.

Civilization, published in 1991 by MicroProse, puts the player in the role of a lone settler in a vast, uncharted world in the year 2000 B.C., and gives him the task of building an empire to “stand the test of time.”1 To accomplish this goal, the player must take on the roles of general, city manager, diplomat, scientist, and economist, as winning involves not only managing the art of war against rival civilizations, but also maintaining the prosperity of the player’s empire. The player must balance “the four impulses of Civilization”—exploration, economics, knowledge, and conquest—to become more powerful, either through peaceful negotiations or world domination.

In 1996, MicroProse released Civilization II, a sequel that vastly improved on the original Civ while maintaining the integrity of the original’s game-play. The inspiration for Civilization came from the Avalon Hill board game of the same name, set in the Mediterranean area of Europe and North Africa.

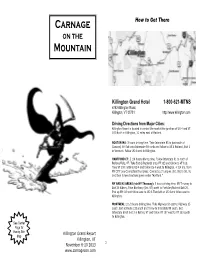

2013 Carnage Booklet 9-13

Carnage on the Mountain

How to Get There

Killington Grand Hotel, 1-800-621-MTNS
4763 Killington Road, Killington, VT 05751
http://www.killington.com

Driving Directions from Major Cities: Killington Resort is located in central Vermont at the junction of US 4 and VT 100 North in Killington, 11 miles east of Rutland.

BOSTON, MA: 3 hours driving time. Take Interstate 93 to just south of Concord, NH. Exit onto Interstate 89 north and follow to US 4 Rutland, Exit 1 in Vermont. Follow US 4 west to Killington.

HARTFORD, CT: 3 1/4 hours driving time. Follow Interstate 91 to north of Bellows Falls, VT. Take Exit 6 (Rutland) onto VT 103 and follow to VT 100. Take VT 100 north to US 4 and follow US 4 west to Killington.

NY CITY (via Connecticut Turnpike): 4 3/4 hours driving time. Connecticut Turnpike (Int. 95) to Int. 91 and then follow directions given under "Hartford."

NY AND NJ AREAS (via NY Thruway): 5 hours driving time. NY Thruway to Exit 24 Albany. Take Northway (Int. 87) north to Fort Ann/Rutland Exit 20. Pick up NY 149 and follow east to US 4. Turn left on US 4 and follow east to Killington.

MONTREAL: 3 1/2 hours driving time. Take Highway 10 east to Highway 35 south. Exit at Route 133 south and follow to Interstate 89 south. Exit Interstate 89 at Exit 3 in Bethel, VT and follow VT 107 west to VT 100 south to Killington.

See Center Page for Handy Site Map

Killington Grand Resort, Killington, VT, November 8-10 2013

www.carnagecon.com

Welcome

Join us in Killington, Vermont for the 16th annual Carnage convention, a celebration of tabletop gaming.


World History--Part 1. Teacher's Guide [And Student Guide]

DOCUMENT RESUME ED 462 784 EC 308 847 AUTHOR Schaap, Eileen, Ed.; Fresen, Sue, Ed. TITLE World History--Part 1. Teacher's Guide [and Student Guide]. Parallel Alternative Strategies for Students (PASS). INSTITUTION Leon County Schools, Tallahassee, FL. Exceptional Student Education. SPONS AGENCY Florida State Dept. of Education, Tallahassee. Bureau of Instructional Support and Community Services. PUB DATE 2000-00-00 NOTE 841p.; Course No. 2109310. Part of the Curriculum Improvement Project funded under the Individuals with Disabilities Education Act (IDEA), Part B. AVAILABLE FROM Florida State Dept. of Education, Div. of Public Schools and Community Education, Bureau of Instructional Support and Community Services, Turlington Bldg., Room 628, 325 West Gaines St., Tallahassee, FL 32399-0400. Tel: 850-488-1879; Fax: 850-487-2679; e-mail: cicbisca.mail.doe.state.fl.us; Web site: http://www.leon.k12.fl.us/public/pass. PUB TYPE Guides - Classroom - Learner (051) Guides - Classroom - Teacher (052) EDRS PRICE MF05/PC34 Plus Postage. DESCRIPTORS *Academic Accommodations (Disabilities); *Academic Standards; Curriculum; *Disabilities; Educational Strategies; Enrichment Activities; European History; Greek Civilization; Inclusive Schools; Instructional Materials; Latin American History; Non Western Civilization; Secondary Education; Social Studies; Teaching Guides; *Teaching Methods; Textbooks; Units of Study; World Affairs; *World History IDENTIFIERS *Florida ABSTRACT This teacher's guide and student guide unit contains supplemental readings, activities,

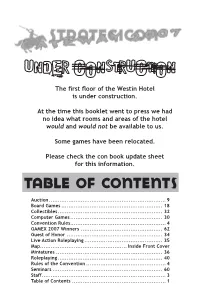

Table of Contents

The first floor of the Westin Hotel is under construction. At the time this booklet went to press we had no idea what rooms and areas of the hotel would and would not be available to us. Some games have been relocated. Please check the con book update sheet for this information.

Table of Contents
• Auction
• Board Games
• Collectibles
• Computer Games
• Convention Rules
• GAMEX 2007 Winners
• Guest of Honor
• Live Action Roleplaying
• Map (Inside Front Cover)
• Miniatures
• Roleplaying
• Rules of the Convention
• Seminars
• Staff
• Table of Contents

WELCOME

On behalf of the entire staff of Strategicon, our warmest convention greetings! We’re sure you’ll find Gateway a pleasant and memorable experience,

Anthems, Sonnets, and Chants

Anthems, Sonnets, and Chants: Recovering the African American Poetry of the 1930s
JON WOODSON
THE OHIO STATE UNIVERSITY PRESS • COLUMBUS

Copyright © 2011 by The Ohio State University. All rights reserved.

Library of Congress Cataloging-in-Publication Data: Woodson, Jon. Anthems, sonnets, and chants : recovering the African American poetry of the 1930s / Jon Woodson. p. cm. Includes bibliographical references and index. ISBN-13: 978-0-8142-1146-5 (cloth : alk. paper) ISBN-10: 0-8142-1146-1 (cloth : alk. paper) ISBN-13: 978-0-8142-9245-7 (cd) 1. American poetry--African American authors--History and criticism. 2. Depressions--1929--United States. 3. Existentialism in literature. 4. Racism in literature. 5. Italo-Ethiopian War, 1935–1936--Influence. I. Title. PS153.N5W66 2011 811'5209896073--dc22 2010022344

This book is available in the following editions: Cloth (ISBN 978-0-8142-1146-5) CD-ROM (ISBN 978-0-8142-9245-7)

Cover design by Laurence Nozik. Text design by Jennifer Shoffey Forsythe. Type set in Adobe Minion Pro. Printed by Thomson-Shore, Inc. The paper used in this publication meets the minimum requirements of the American National Standard for Information Sciences—Permanence of Paper for Printed Library Materials. ANSI Z39.48-1992. 9 8 7 6 5 4 3 2 1

FOR LYNN CURRIER SMITH WOODSON

Contents
• Acknowledgments
• List of Abbreviations
• Introduction
• Chapter 1 The Crash of 1929 and the Great Depression: Three Long Poems
• Chapter 2 Existential Crisis: The Sonnet and Self-Fashioning in the Black Poetry of the 1930s
• Chapter 3 “Race War”: African American Poetry on the Italo-Ethiopian War
• A Concluding Note
• Appendix: Poems
• Notes
• Works Cited
• Index

Acknowledgments

My thanks to Dorcas Haller—professor, librarian, and chair of the Library Department at the Community College of Rhode Island—for finding the books that I could not find.

1830 1835 1940 Agricola Anno Domini

Titolo 1830 1835 1940 1807: The Eagles turn East 1808: Napoleon en Espana 1830 the game of railroads and robber barons 1914: Glory's End 1940: The Fall of France 2nd Fleet 3rd Fleet 4 Monkeys 7 Ages 7 Wonders 7 Wonders: Cities 7 Wonders: Wonder Pack A Castle for all season A Game of Thrones Across the Potomac Advanced Civilization Advanced Heroquest Afrika Korps Age of Empires 3 L'era delle scoperte Age of Napoleon 1805-1815 Age of Renaissance Age of Steam Agricola Air Bridge to Victory Air Force Al Rashid Alpha Omega America in Flames Anhk-Morpork Anno Domini: Invenzioni e Scoperte Anno Domini: Sotto i Riflettori Antietam Aquaretto Arcane legion Arcanum Archipelago Arkham Horror Arkham Horror - L'orrore di Kingsport Aruga la tartaruga Assault on Leningrad Assist Atlantis Atlantis Treasure Atmosfear DVD Attila Avalam Ave Caesar - 1st Edition Axis & Allies Axis & Allies - D-Day Axis & Allies - Europe Axis & Allies - Pacific Babylon 5 - League of Worlds Babylon 5 - Psi Corps Babylon 5 - Shadow Babylon 5 - Vorlon Empire Back to Iraq Balkan Hell Banana Balance Bang! Bang! La Pallottola Bang! wild west show Barbarians Batik Battle Cry Battle of Bulge Battle of Napoleon Battle of the Alma Battlemist Battleship Battlestar Galactica Battlestar galactica Pegasus Battlestar Galactica: Exodus Expansion Battlestar Galactica: Pegasus Expansion Battletech BauSquitMiao Beowolf the legend Big Kini Birth of a nation Blitzkrieg 1940 Blokus Blood Bowl Blood Master Bloodbath at Kursk Bloody bunà Blue Max Bolide Bonnie and clyde Britannia Bruges Budapest 45 -

New Model Predicts That We're Probably the Only Advanced Civilization in the Observable Universe 22 June 2018, by Matt Williams

New model predicts that we're probably the only advanced civilization in the observable universe

22 June 2018, by Matt Williams

[Image caption] According to a new theory argued by Anders Sandberg, Eric Drexler and Toby Ord, the answer to the Fermi Paradox may be simple: humanity is alone in the universe. Credit: ESA/Gaia/DPAC

The Fermi Paradox remains a stumbling block when it comes to the search for extra-terrestrial intelligence (SETI). Named in honor of the famed physicist Enrico Fermi who first proposed it, this paradox addresses the apparent disparity between the expected probability that intelligent life is plentiful in the universe, and the apparent lack of evidence of extra-terrestrial intelligence (ETI).

conducted by Anders Sandberg, a Research Fellow at the Future of Humanity Institute and a Martin Senior Fellow at Oxford University; Eric Drexler, the famed engineer who popularized the concept of nanotechnology; and Toby Ord, the famous Australian moral philosopher at Oxford University.

For the sake of their study, the team took a fresh look at the Drake Equation, the famous equation proposed by astronomer Dr. Frank Drake in the 1960s. Based on hypothetical values for a number of factors, this equation has traditionally been used to demonstrate that – even if the amount of life developing at any given site is small – the sheer multitude of possible sites should yield a large number of potentially observable civilizations.

This equation states that the number of civilizations (N) in our galaxy that we might be able to communicate with can be determined by multiplying the average rate of star formation in our galaxy (R*), the fraction of those stars which have planets (fp), the number of planets that can actually support life (ne), the number of planets that will develop life (fl), the number of planets that will develop intelligent life (fi), the number of civilizations that would develop transmission technologies (fc), and the length of time that these civilizations would have to transmit
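
The product described in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not code from the study: the function name `drake_equation`, the parameter name `lifetime` (the excerpt breaks off before naming a symbol for the transmission period, which the standard formulation calls L), and every input value below are assumptions chosen only to show the arithmetic. In the standard formulation, fl, fi and fc are treated as fractions rather than counts.

```python
def drake_equation(r_star, f_p, n_e, f_l, f_i, f_c, lifetime):
    """Return N, the estimated number of communicating civilizations.

    r_star   -- average rate of star formation in our galaxy (R*)
    f_p      -- fraction of those stars that have planets (fp)
    n_e      -- planets per such star that can support life (ne)
    f_l      -- fraction of those planets that develop life (fl)
    f_i      -- fraction of those that develop intelligent life (fi)
    f_c      -- fraction of those that develop transmission technology (fc)
    lifetime -- length of time such civilizations transmit (assumed name)
    """
    return r_star * f_p * n_e * f_l * f_i * f_c * lifetime


# Hypothetical inputs for illustration only; none come from the article.
example_n = drake_equation(
    r_star=1.0, f_p=0.5, n_e=2.0, f_l=0.5, f_i=0.1, f_c=0.1, lifetime=1000.0
)
print(example_n)
```

Because N is a plain product, any single factor close to zero drives the whole estimate toward zero, which is why the choice of input values matters so much to the conclusion.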