Journaling File Systems

Recommended publications

Copy on Write Based File Systems Performance Analysis and Implementation

Sakis Kasampalis. Kongens Lyngby 2010, IMM-MSC-2010-63. Technical University of Denmark, Department of Informatics, Building 321, DK-2800 Kongens Lyngby, Denmark. Phone +45 45253351, Fax +45 45882673, www.imm.dtu.dk

Abstract. In this work I focus on Copy On Write based file systems. Copy On Write is used in modern file systems to provide (1) metadata and data consistency using transactional semantics, and (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault-tolerant, and easy-to-administer file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks.

Most computers are already based on multi-core and multiple-processor architectures. Because of that, the need for concurrent programming models has increased. Transactions can be very helpful for supporting concurrent programming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support transactions at all, or they simply do not expose them to the users.

Development of a Verified Flash File System

Gerhard Schellhorn, Gidon Ernst, Jörg Pfähler, Dominik Haneberg, and Wolfgang Reif. Institute for Software & Systems Engineering, University of Augsburg, Germany. {schellhorn,ernst,joerg.pfaehler,haneberg,reif}@informatik.uni-augsburg.de

Abstract. This paper gives an overview of the development of a formally verified file system for flash memory. We describe our approach, which is based on Abstract State Machines and incremental modular refinement. Some of the important intermediate levels and the features they introduce are given. We report on the verification challenges addressed so far, and point to open problems and future work. We furthermore draw preliminary conclusions on the methodology and the required tool support.

1 Introduction

Flaws in the design and implementation of file systems already lead to serious problems in mission-critical systems. A prominent example is the Mars Exploration Rover Spirit [34], which got stuck in a reset cycle. In 2013, the Mars Rover Curiosity also had a bug in its file system implementation that triggered an automatic switch to safe mode. The first incident prompted a proposal to formally verify a file system for flash memory [24,18] as a pilot project for Hoare's Grand Challenge [22]. We are developing a verified flash file system (FFS). This paper reports on our progress and discusses some of the aspects of the project. We describe parts of the design, the formal models, and proofs, pointing out challenges and solutions. The main characteristic of flash memory that guides the design is that data cannot be overwritten in place; instead, space can only be reused by erasing whole blocks.

The Linux Kernel Module Programming Guide

Peter Jay Salzman, Michael Burian, Ori Pomerantz. Copyright © 2001 Peter Jay Salzman. 2007-05-18, ver 2.6.4.

The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic.

Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP).

General List of Abbreviations

General List of Abbreviations L.

Use of Seek When Writing Or Reading Binary Files

file - Read and write text and binary files (stata.com)

Description

file is a programmer's command and should not be confused with import delimited (see [D] import delimited), infile (see [D] infile (free format) or [D] infile (fixed format)), and infix (see [D] infix (fixed format)), which are the usual ways that data are brought into Stata. file allows programmers to read and write both text and binary files, so file could be used to write a program to input data in some complicated situation, but that would be an arduous undertaking.

Files are referred to by a file handle. When you open a file, you specify the file handle that you want to use; for example, in

    . file open myfile using example.txt, write

myfile is the file handle for the file named example.txt. From that point on, you refer to the file by its handle. Thus

    . file write myfile "this is a test" _n

would write the line "this is a test" (without the quotes) followed by a new line into the file, and

    . file close myfile

would then close the file. You may have multiple files open at the same time, and you may access them in any order.

Syntax

Open file

    file open handle using filename, read | write | read write  text | binary  replace | append  all

Read file

    file read handle specs

Write to file

    file write handle specs

Change current location in file

    file seek handle query | tof | eof | #

Set byte order of binary file

    file set handle byteorder hilo | lohi | 1 | 2

Close

File Storage Techniques in LabVIEW

Starting with a set of data as if it were generated by a DAQ card reading two channels and 10 samples per channel, we end up with a two-dimensional array. Note that the first index is the channel and the second index is the sample number. We will use this data set for all the following examples.

The first option is to send it directly to a spreadsheet file. The data is wired to the 2D array input and all the defaults are taken. This will ask for a file name when the program block is run, and create a file with data values, separated by tab characters, as follows:

1.000 2.000 3.000 4.000 5.000 6.000 7.000 8.000 9.000 10.000
10.000 20.000 30.000 40.000 50.000 60.000 70.000 80.000 90.000 100.000

Note that each value is in the format x.yyy, with the y's being zeros. The default format for the Write To Spreadsheet VI is "%.3f", which generates a floating-point number with 3 decimal places. If a number with more decimal places is entered in the array, it is written with only three.

The data file is created in row format, but what you really need, if you are going to import it into Excel, is column format. There are two ways to resolve this: the first is to set the transpose bit on the write function, and the second is to add an array transpose, located in the Array palette.
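The row-versus-column layout described above can be illustrated outside LabVIEW. The following is a minimal Python sketch, not LabVIEW code: the helper names `to_tsv` and `transpose` are hypothetical, and the only things taken from the text are the two-channel data set, the tab separator, and the "%.3f" format. Note that Python's `%` formatting rounds to three decimal places; whether a given tool rounds or truncates is worth checking in its own documentation.

```python
# Two channels, ten samples each, matching the data set described in the text.
data = [
    [float(i) for i in range(1, 11)],        # channel 0: 1.0 .. 10.0
    [float(10 * i) for i in range(1, 11)],   # channel 1: 10.0 .. 100.0
]


def to_tsv(rows, fmt="%.3f"):
    """Format a 2D list as tab-separated text, one inner list per line."""
    return "\n".join("\t".join(fmt % value for value in row) for row in rows)


def transpose(rows):
    """Swap rows and columns so each channel becomes a column."""
    return [list(column) for column in zip(*rows)]


row_text = to_tsv(data)             # 2 lines of 10 values (row format)
col_text = to_tsv(transpose(data))  # 10 lines of 2 values (column format)

print(row_text)
print(col_text)
```

Transposing before writing gives one sample per line and one channel per column, which is the layout a spreadsheet import usually expects.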

System Calls

System calls

We will investigate several issues related to system calls. Read chapter 12 of the book.

Linux system call categories:
• file management
• process management
• error handling

Note that these categories are loosely defined and much is being included, e.g. communication. Why?

File management system call hierarchy: you may not see some topics as part of "file management", e.g., sockets.

Process management system call hierarchy

Error handling hierarchy

Error Handling

Anything can fail! System calls are no exception. Try to read a file that does not exist!

Error number: errno
• every process contains a global variable errno
• errno is set to 0 when the process is created
• when an error occurs, errno is set to a specific code associated with the error cause
• trying to open a file that does not exist sets errno to 2

Error constants are defined in errno.h. Here are the first few of errno.h on OS X 10.6.4:

    #define EPERM    1  /* Operation not permitted */
    #define ENOENT   2  /* No such file or directory */
    #define ESRCH    3  /* No such process */
    #define EINTR    4  /* Interrupted system call */
    #define EIO      5  /* Input/output error */
    #define ENXIO    6  /* Device not configured */
    #define E2BIG    7  /* Argument list too long */
    #define ENOEXEC  8  /* Exec format error */
    #define EBADF    9  /* Bad file descriptor */
    #define ECHILD   10 /* No child processes */
    #define EDEADLK  11 /* Resource deadlock avoided */

Common mistake for displaying errno, from the Linux errno man page:

Description of the perror() library function.
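The claim that opening a nonexistent file yields error code 2 (ENOENT) can be checked with a short experiment. The slides are about C, so this is a Python sketch of the same idea, not the course's own code: Python surfaces the underlying errno value on the `OSError` it raises. The helper name `try_open` and the temporary path are hypothetical choices for illustration.

```python
import errno
import os
import tempfile


def try_open(path):
    """Return 0 if the file opens, otherwise the errno reported by the OS."""
    try:
        with open(path, "rb"):
            return 0
    except OSError as exc:
        return exc.errno


# A path inside a fresh, empty temporary directory cannot exist yet.
missing = os.path.join(tempfile.mkdtemp(), "no_such_file")
code = try_open(missing)

# errno.errorcode maps the number back to its symbolic name;
# os.strerror gives the message that perror() would print in C.
print(code, errno.errorcode[code], os.strerror(code))
```

Comparing against the symbolic constant `errno.ENOENT` rather than the literal 2 keeps the check portable, since the numeric values are platform-defined.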

United States Patent US 8,965,180 B2, Knight et al.

(12) United States Patent, Knight et al.
(10) Patent No.: US 8,965,180 B2
(45) Date of Patent: *Feb. 24, 2015
(54) SYSTEMS AND METHODS FOR CONVERTING INTERACTIVE MULTIMEDIA CONTENT AUTHORED FOR DISTRIBUTION VIA A PHYSICAL MEDIUM FOR ELECTRONIC DISTRIBUTION
(75) Inventors: Anthony David Knight, San Jose, CA (US); Ian Michael Lewis, Oxfordshire (GB); Andrew Maurice Devitt, London (GB)
(73) Assignee: Rovi Guides, Inc., Santa Clara, CA (US)
(*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 66 days. This patent is subject to a terminal disclaimer.
(21) Appl. No.: 13/182,376
(22) Filed: Jul. 13, 2011
(65) Prior Publication Data: US 2012/0014674 A1, Jan. 19, 2012
Related U.S. Application Data: (60) Provisional application No. 61/364,001, filed on Jul. 13, 2010.
Classification (partial): 21/4884 (2013.01); H04N 21/84 (2013.01); H04L 65/602 (2013.01); G11B 2220/2562 (2013.01). USPC 386/282
(58) Field of Classification Search: USPC 386/278, 279, 280, 281, 282, 283, 284, 285, 290. See application file for complete search history.
(56) References Cited
U.S. PATENT DOCUMENTS: 4,838,843 A, 6/1989, Westhoff; 5,313,881 A, 5/1994, Morgan (Continued)
FOREIGN PATENT DOCUMENTS: EP 0865362 B1, 2003; JP 2001344828, 12/2001 (Continued)
OTHER PUBLICATIONS: Apple Inc., "iTunes Extra iTunes LP Development: Template How To v1.1", Jan. 26, 2010, 58 pgs. (Continued)
Primary Examiner: Tat Chio
(74) Attorney, Agent, or Firm: Ropes & Gray LLP
(57) ABSTRACT
(51) Int.

Enhancing the Accuracy of Synthetic File System Benchmarks, Salam Farhat, Nova Southeastern University

Nova Southeastern University, NSUWorks. CEC Theses and Dissertations, College of Engineering and Computing, 2017.

NSUWorks Citation: Salam Farhat. 2017. Enhancing the Accuracy of Synthetic File System Benchmarks. Doctoral dissertation. Nova Southeastern University. Retrieved from NSUWorks, College of Engineering and Computing. (1003) https://nsuworks.nova.edu/gscis_etd/1003.

This document is a product of extensive research conducted at the Nova Southeastern University College of Engineering and Computing. This dissertation is brought to you by the College of Engineering and Computing at NSUWorks. It has been accepted for inclusion in CEC Theses and Dissertations by an authorized administrator of NSUWorks.

Enhancing the Accuracy of Synthetic File System Benchmarks, by Salam Farhat. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science. College of Engineering and Computing, Nova Southeastern University, 2017.

We hereby certify that this dissertation, submitted by Salam Farhat, conforms to acceptable standards and is fully adequate in scope and quality to fulfill the dissertation requirements for the degree of Doctor of Philosophy.

Gregory E. Simco, Ph.D., Chairperson of Dissertation Committee
Sumitra Mukherjee, Ph.D., Dissertation Committee Member
Francisco J.

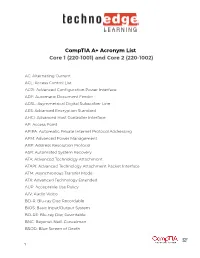

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

AC: Alternating Current
ACL: Access Control List
ACPI: Advanced Configuration Power Interface
ADF: Automatic Document Feeder
ADSL: Asymmetrical Digital Subscriber Line
AES: Advanced Encryption Standard
AHCI: Advanced Host Controller Interface
AP: Access Point
APIPA: Automatic Private Internet Protocol Addressing
APM: Advanced Power Management
ARP: Address Resolution Protocol
ASR: Automated System Recovery
ATA: Advanced Technology Attachment
ATAPI: Advanced Technology Attachment Packet Interface
ATM: Asynchronous Transfer Mode
ATX: Advanced Technology Extended
AUP: Acceptable Use Policy
A/V: Audio Video
BD-R: Blu-ray Disc Recordable
BIOS: Basic Input/Output System
BD-RE: Blu-ray Disc Rewritable
BNC: Bayonet-Neill-Concelman
BSOD: Blue Screen of Death
BYOD: Bring Your Own Device
CAD: Computer-Aided Design
CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart
CD: Compact Disc
CD-ROM: Compact Disc-Read-Only Memory
CD-RW: Compact Disc-Rewritable
CDFS: Compact Disc File System
CERT: Computer Emergency Response Team
CFS: Central File System, Common File System, or Command File System
CGA: Computer Graphics and Applications
CIDR: Classless Inter-Domain Routing
CIFS: Common Internet File System
CMOS: Complementary Metal-Oxide Semiconductor
CNR: Communications and Networking Riser
COMx: Communication port (x = port number)
CPU: Central Processing Unit
CRT: Cathode-Ray Tube
DaaS: Data as a Service
DAC: Discretionary Access Control
DB-25: Serial Communications

[Week 13] Sysfs and Procfs

Computer Core Practice 1: Operating System. Week 13: sysfs and procfs. Jhuyeong Jhin and Injung Hwang, Embedded Software Lab.

sysfs
• A pseudo file system provided by the Linux kernel.
• sysfs exports information about various kernel subsystems, hardware devices, and associated device drivers to user space through virtual files.
• The mount point of sysfs is usually /sys.
• sysfs abstracts devices or kernel subsystems as a kobject.

How to create a file in /sys
1. Create a kobject and add it to sysfs.
2. Declare a variable and a struct kobj_attribute.
   – When you declare the kobj_attribute, you should implement the functions "show" and "store" for reading from and writing to the variable.
   – One variable is one attribute.
3. Create a directory in sysfs.
   – The directory has attributes as files.
• When the creation of the directory is completed, the directory and files (attributes) appear in /sys.
• Reference: ${KERNEL_SRC_DIR}/include/linux/sysfs.h, ${KERNEL_SRC_DIR}/fs/sysfs/*
• Example: ${KERNEL_SRC_DIR}/kernel/ksysfs.c

procfs
• A special filesystem in Unix-like operating systems.
• procfs presents information about processes and other system information in a hierarchical file-like structure.
• Typically, it is mapped to a mount point named /proc at boot time.
• procfs acts as an interface to internal data structures in the kernel. (Figure: the process IDs of all processes in the system.)
• The kernel provides a set of functions designed to simplify operations on files in /proc: the "seq_file interface".
   – We will create a file in procfs and print some data from a data structure by using this interface.
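From user space, the hierarchical layout of procfs means that the process IDs in the system can be recovered simply by listing the numeric directory names under the mount point. The following is a minimal Python sketch of that observation, not part of the course material and not kernel code: the function name `list_pids` is hypothetical, and the demo runs against a fake directory tree so that it does not depend on a real /proc being mounted.

```python
import os
import tempfile


def list_pids(proc_root="/proc"):
    """Return the sorted numeric directory names found under proc_root."""
    if not os.path.isdir(proc_root):
        return []  # no procfs mounted at this path
    pids = []
    for name in os.listdir(proc_root):
        # Per-process entries are directories whose names are decimal PIDs.
        if name.isdecimal() and os.path.isdir(os.path.join(proc_root, name)):
            pids.append(int(name))
    return sorted(pids)


# Demonstration on a fake tree: two "processes" plus two non-PID entries.
fake_root = tempfile.mkdtemp()
for entry in ("1", "42", "self", "cpuinfo"):
    os.mkdir(os.path.join(fake_root, entry))

fake_pids = list_pids(fake_root)
print(fake_pids)
```

On a Linux system, calling `list_pids()` with the default argument would list the live processes instead; the set of PIDs naturally changes from one call to the next.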

ReFS: Is It a Game Changer? Presented By: Rick Vanover, Director, Technical Product Marketing & Evangelism, Veeam

Technical Brief

OVERVIEW

Backing up data is more important than ever, as data centers store larger volumes of information and organizations face various threats such as ransomware and other digital risks. Microsoft's Resilient File System, or ReFS, offers a more robust solution than the old NT File System. In fact, Microsoft has stated that ReFS is the preferred data volume for Windows Server 2016. ReFS is an ideal solution for backup storage. By utilizing the ReFS BlockClone API, Veeam has developed Fast Clone, a fast, efficient storage backup solution. This solution offers organizations peace of mind through a more advanced approach to synthetic full backups.

CONTEXT

Rick Vanover discussed Microsoft's Resilient File System (ReFS) and described how Veeam leverages this technology for its Fast Clone backup functionality.

KEY TAKEAWAYS

Resilient File System is a Microsoft storage technology that can transform the data center.

Resilient File System, or ReFS, is a valuable Microsoft storage technology for data centers. Some of the key differences between ReFS and the NT File System (NTFS) are:

ReFS provides many of the same limits as NTFS, but supports a larger maximum volume size. ReFS and NTFS support the same maximum file name length, maximum path name length, and maximum file size. However, ReFS can handle a maximum volume size of 4.7 zettabytes, compared to NTFS, which can only support 256 terabytes.

The most common functions are available on both ReFS and NTFS.