Speech Recognition (Speech to Text) Software

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

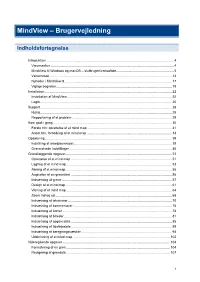

MindView – User Guide

MindView – User Guide. Table of Contents: Introduction; Trademarks; MindView for Windows and macOS – end-user license agreement; Welcome; What's new in MindView 8; Important terms; Installation; Installing MindView; Login; Support; Help -

List of New Applications Added in ARL #2586

List of new applications added in ARL #2586

| Application Name | Publisher |
| --- | --- |
| NetCmdlets 2016 | /n software |
| 1099 Pro 2009 Corporate | 1099 Pro |
| 1099 Pro 2020 Enterprise | 1099 Pro |
| 1099 Pro 2008 Corporate | 1099 Pro |
| 1E Client 5.1 | 1E |
| SyncBackPro 9.1 | 2BrightSparks |
| FindOnClick 2.5 | 2BrightSparks |
| TaxAct 2002 Standard | 2nd Story Software |
| Phone System 15.5 | 3CX |
| Phone System 16.0 | 3CX |
| 3CXPhone 16.3 | 3CX |
| Grouper Plus System 2021 | 3M |
| CoDeSys OPC Server 3.1 | 3S-Smart Software Solutions |
| 4D 15.0 | 4D |
| Duplicate Killer 3.4 | 4Team |
| Disk Drill 4.1 | 508 Software |
| NotesHolder 2.3 Pro | A!K Research Labs |
| LibraryView 1.0 | AB Sciex |
| MetabolitePilot 2.0 | AB Sciex |
| Advanced Find and Replace 5.2 | Abacre |
| Color Picker 2.0 | ACA Systems |
| Password Recovery Toolkit 8.2 | AccessData |
| Forensic Toolkit 6.0 | AccessData |
| Forensic Toolkit 7.0 | AccessData |
| Forensic Toolkit 6.3 | AccessData |
| Barcode Xpress 7.0 | AccuSoft |
| ImageGear 17.2 | AccuSoft |
| ImagXpress 13.6 | AccuSoft |
| PrizmDoc Server 13.1 | AccuSoft |
| PrizmDoc Server 12.3 | AccuSoft |
| ACDSee 2.2 | ACD Systems |
| ACDSync 1.1 | ACD Systems |
| Ace Utilities 6.3 | Acelogix Software |
| True Image for Crucial 23. | Acronis |
| Acrosync 1.6 | Acrosync |
| Zen Client 5.10 | Actian |
| Windows Forms Controls 16.1 | Actipro Software |
| Opus Composition Server 7.0 | ActiveDocs |
| Network Component 4.6 | ActiveXperts |
| Multiple Monitors 8.3 | Actual Tools |
| Multiple Monitors 8.8 | Actual Tools |
| ACUCOBOL-GT 5.2 | Acucorp |
| ACUCOBOL-GT 8.0 | Acucorp |
| TransMac 12.1 | Acute Systems |
| Ultimate Suite for Microsoft Excel 13.2 | Add-in Express |
| Ultimate Suite for Microsoft Excel 21.1 Business | Add-in Express |
| Ultimate Suite for Microsoft Excel 21.1 Personal | Add-in Express |

Dragon® NaturallySpeaking® for Education: Helping All Students Reach Their Full Potential

DRAGON® NATURALLYSPEAKING® FOR EDUCATION HELPING ALL STUDENTS REACH THEIR FULL POTENTIAL A REVOLUTIONARY EDUCATIONAL TOOL Dragon® NaturallySpeaking®, the world’s best-selling speech recognition software for the PC, enables students, teachers and school administrators to talk to their computers and watch their spoken words appear in documents, email, instant messages and more — three times faster than most people type — with up to 99% accuracy. It lets users dictate and edit in programs like Microsoft® Word, Excel® and Outlook® and OpenOffice Writer, surf the Web using Internet Explorer® and Mozilla® Firefox®, and command and control virtually any Windows application — just by speaking. Dragon NaturallySpeaking has gained broad acceptance as a cost-effective accessibility tool for a wide range of students. It not only provides an alternative input method for students with physical disabilities, but it has also been shown to help students with learning disabilities make significant advances in the areas of reading, writing and spelling. By eliminating the physical demands of typing and cognitive barriers to written communication, Dragon can help all students better realize their full potential. Speech recognition is gaining traction in the general education arena, too. Because people of all abilities are using speech to interact with mainstream technology — computers, handheld devices, navigation systems, gaming consoles, and more — students need to be trained in using voice interfaces. That’s why more middle and secondary schools are adopting Dragon as part of their business education curriculum. This training helps students boost productivity, prepare for tomorrow’s workforce, and prevent increasingly common repetitive stress injuries. REDUCE THE STRESS AND ANXIETY ASSOCIATED WITH HOMEWORK AND REPORTS Imagine the new levels of freedom and success students could experience by easily transferring their ideas into written text. -

Download English Mindview 8

MindView User Guide. Table of Contents: Introduction; Trademarks; MindView Windows and macOS – End User License Agreement; Welcome; What's new in MindView 8; Important terms; Installation and login; Installing MindView; Logging in; Support; Getting help -

Insight Public Sector, Inc. 534242 Addn 444 6/23/2021 Page 1 of 198

Insight Public Sector, Inc. 534242 Addn 444 6/23/2021

| Product Name | Manufacturer | Manufacturer Part No | Vendor Part No | Description | Unit of Measure | State Price |
| --- | --- | --- | --- | --- | --- | --- |
| Licensing Warranties | 1E LTD | NMD-10MO-COTERM | NMD-10MO-COTERM | 1E LTD COTERM MARCH RWNL 10MO | Each | $2.93 |
| Licensing Warranties | 1E LTD | NMD-4KMAINT-RWNL | NMD-4KMAINT-RWNL | 1E LTD NOMAD 4K MAINT RWNL | Each | $3.91 |
| Display Accessories | 3M COMPANY | AG240W9B | AG240W9B | 3M - Display anti-glare filter - 24" wide | Each | $52.15 |
| Desktop Accessories | 3M COMPANY | HCNMS003 | HCNMS003 | 3M High Clarity Filter - Notebook privacy filter - black - for Microsoft Surface Pro | Each | $37.83 |
| Display Accessories | 3M COMPANY | MS80B | MS80B | 3M - Monitor stand - black, silver | Each | $51.19 |
| Imaging Accessories | 3M COMPANY | MW310LE | MW310LE | 3M Gel Mouse Pad Wrist Rest MW310LE - mouse pad with wrist pillow | Each | $19.94 |
| Printer Accessories | 3M COMPANY | PF230W9B | PF230W9B | 3M Privacy Filter for 23" Widescreen Monitor - Display privacy filter - 23" wide - black | Each | $94.46 |
| Desktop Accessories | 3M COMPANY | PFNHP012 | PFNHP012 | 3M Privacy Filter for HP Elite X2 1012 notebook privacy filter | Each | $45.40 |
| License Business App | 7EDIT | 1807470 | 1807470 | 7EDIT 2.X PRO | Each | $225.39 |
| Notebook Batteries | ACCELL | D080B-030K | D080B-030K | Accell Powramid Air Power Center and USB Charging Station - Surge protector - AC 120 V - 1800 Watt - output connectors: 6 ft - black | Each | $39.80 |
| License Business App | ACCESSDATA CORP | 13000100 | 13000100-DPS | ACCESSDATA : FTK 6 - SUITE - PERPETUAL FOR DPS | Each | $3,681.85 |
| Licensing Warranties | ACCESSDATA CORP | 13000300 | 13000300-DPS | ACCESSDATA - | | |

Machine Sensible Form Without a Written Authorization from Matchware Or Its Publishers

MindView 8 Documentation. Table of Contents: Introduction; Trademarks; MatchWare License Agreement; What is a mind map?; Mind Maps in education; Mind Maps in business; Overview; What's new?; Using MindView 8 purchased from the App Store; Important terms; Setting up; Logging in; Setting up your preferences; The MindView workspace; Dragon® integration (AT Edition only); Quick Start; Quick Start; First step: Creating the mind map; Second step: enhancing the mind map; Basic tasks; Creating a mind map; Opening a mind map; Entering the subject; Inserting branches; Navigating a mind map; Inserting icons; Inserting pictures; Inserting sounds; Attaching objects; Inserting text notes; Inserting comments; Using sources; Using citations; Inserting time information; Inserting task data; Saving a mind map; Further tasks; Editing a root or branch; Changing the look of a mind map; Moving a branch; Moving a picture; Creating a legend; Filtering a MindMap; Focusing on a branch; Presenting a mind map; Zooming in and out; Using the Pictures Collection; Using the overview panel; Using the inspectors; Using the Objects inspector; Using Capture; Finding and replacing text; Using predictive suggestions; Setting up suggestion sources; Creating branch connections; Creating links; Inserting a new map; Detaching a branch; Checking spelling; Printing a mind map; Views; Using different views; Using the mind map view; Using the Top Down view; Using the Left/Right view; Using the Timeline view; Using the Outline view; Using the Gantt view; Setting up a project plan; Defining the global project settings; Defining calendars; Entering and editing -

MindView 6 Documentation

Introduction. MindView 6 Documentation: Introduction; Trademarks; MatchWare License Agreement; Welcome; Designing print documents; Working with numbers; Working with task lists; Working with project plans; Working with timelines; Working with presentations and websites; Working with the ready-made templates -

Download Mindview 8

MindView - User Guide. Table of Contents: Introduction; Trademarks; MindView Windows and macOS – End User License Agreement; Welcome; What's new in MindView 8; Important terms; Installing and logging in; Installing MindView; Logging in; Support; Help options -


Dragon Installation and User Guide

Installation and User Guide Version 12 Dragon 12 Installation and User Guide Copyright 1991 - 2012. Dragon Professional Version 12.0. This material may not include some last-minute technical changes and/or revisions to the software. Changes are periodically made to the information described here. Future versions of this material will incorporate these changes. Nuance Communications, Inc. may have patents or pending patent applications covering subject matter in this document. The furnishing of this document does not give you any license to such patents. No part of this material or software may be reproduced in any form or by any means, including, without limitation, electronic or mechanical, such as photocopying or recording, or by any information storage and retrieval systems, without the express written consent of Nuance Communications, Inc. Specifications are subject to change without notice. Copyright © 2012 Nuance Communications, Inc. All rights reserved. Nuance, the Nuance logo, ScanSoft, Dragon, DragonBar, the Dragon logo, NaturallySpeaking, RealSpeak, Nothing But Speech (NBS), Natural Language Technology, Full Text Control, BestMatch, MouseGrid, and Vocabulary Editor are registered trademarks or trademarks of Nuance Communications, Inc. All other names and trademarks referenced herein are trademarks of Nuance Communications, Inc., or their respective owners. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks, including the following: Pentium is a registered trademark -

Mindview 7 Documentation

MindView - User Guide. Contents: Introduction; Trademarks; MatchWare License Agreement; What is a mind map?; Mind maps in education; Mind maps in business; Overview; What's new; Using MindView 7 purchased from the App Store; Important terms; Quick Start; Quick Start; First step: creating a mind map; Second step: enhancing a mind map; Setting up; Setting up your preferences; The MindView workspace; Dragon® integration (AT Edition only); Basic tasks; Creating a mind map; Opening a mind map; Entering the subject; Inserting branches; Navigating a mind map; Inserting icons; Inserting pictures; Inserting sounds; Attaching objects; Inserting text notes; Inserting comments; Using sources; Using citations; Inserting time information; Inserting task data; Saving a mind map; Further tasks; Editing a root or branch; Changing the look of a mind map; Moving a branch; Moving a picture; Creating a legend; Filtering a mind map; Focusing on a branch; Presenting a mind map; Zooming in and out; Using the Pictures Collection; Using the overview panel; Using the inspectors; Using the Objects inspector; Using Capture; Finding and replacing text; Using predictive suggestions; Setting up suggestion sources; Creating branch connections; Creating links; Inserting a new map; Detaching a branch; Checking spelling; Printing a mind map; Views; Using different views; Using the Mind Map view; Using the Top Down view; Using the Left/Right view -

Boost Your Productivity

SpeechMike Air (LFH3000 series): wireless dictation microphone. Noise-canceling microphone. Comfortable ergonomic design. Integrated trackball.

Boost your productivity with a professional dictation solution. The Philips SpeechMike Air is specifically designed to meet the tough challenges of document-intensive professions. It represents the perfection of desktop dictation, no longer restraining it by a cord. The pure, timeless design, its outstanding ergonomics, and the sophisticated functionality in conjunction with superb recording quality make it a milestone in professional dictation.

Enhance your productivity
• Comfortable ergonomic design for one-thumb operation
• Integrated trackball for navigation through documents

Superior audio quality
• Noise-canceling microphone for accurate speech recognition results
• Integrated sound card and speaker ensuring crystal clear playback

Designed for professionals
• Geared for Dragon NaturallySpeaking speech recognition software
• Can be fully integrated into existing workflow systems
• Wireless dictation microphone for unparalleled cordless user-experience

No 1 in SPS global customer satisfaction survey 09/2015.

Highlights

Ergonomic zone design: Designed with three ergonomic zones, it has been tested and proven by users to have the most comfortable fit in the human hand. The dictation zone is optimized to keep thumb movement to a minimum and allows for blind operation.

Noise-canceling microphone: The superior microphone reduces background noise and is optimized for speech recognition. The built-in headset socket allows you to attach the noise-canceling headset for hands-free recording and even ...

Ready for integration: Philips provides a software development kit (SDK) for speech, office, and business application developers that allows for convenient programming of interfaces when integrating the SpeechMike into professional ...