Arrested Development: How Policy Failure Impairs Internet Progress

Total pages: 16

File type: PDF, size: 1020 Kb


Recommended publications


Operating the EPON Protocol Over Coaxial Distribution Networks: Call for Interest

Operating the EPON protocol over Coaxial Distribution Networks, Call for Interest, 08 November 2011, IEEE 802.3 Ethernet Working Group, Atlanta, GA
Supporters: Bill Powell (Alcatel-Lucent), David Eckard (Alcatel-Lucent), Alan Brown (Aurora Networks), Dave Baran (Aurora Networks), Edwin Mallette (Bright House Networks), John Dickinson (Bright House Networks), Ed Boyd (Broadcom), Howard Frazier (Broadcom), Lowell Lamb (Broadcom), Mark Laubach (Broadcom), Will Bliss (Broadcom), Robin Lavoie (Cogeco Cable Inc.), Matt Schmitt (CableLabs), Doug Jones (Comcast Cable), Jeff Finkelstein (Cox Networks), John D'Ambrosia (Dell), Zhou Zhen (Fiberhome Telecommunication Technologies), Boris Brun (Harmonic Inc.), Lior Assouline (Harmonic Inc.), David Warren (Hewlett-Packard), Steve Carlson (High Speed Design), Hesham ElBakoury (Huawei), Liming Fang (Huawei), David Piehler (Neophotonics), Amir Sheffer (PMC-Sierra), Greg Bathrick (PMC-Sierra), Valentin Ossman (PMC-Sierra), Alex Liu (Qualcomm), Dylan Ko (Qualcomm), Steve Shellhammer (Qualcomm), Mike Peters (Sumitomo Electric Industries), Yao Yong (Technical Working Committee of China Radio & TV Association), Bob Harris (Time Warner Cable), Kevin A. Noll (Time Warner Cable), Hu Baomin (Wuhan Yangtze Optical Technologies Co., Ltd.), Ye Yonggang (Wuhan Yangtze Optical Technologies Co., Ltd.), Zheng Zhi (Wuhan Yangtze Optical Technologies Co., Ltd.), Marek Hajduczenia (ZTE), Meiyan Zang (ZTE), Nevin R Jones (ZTE)
Objectives for This Meeting
• To measure the interest in starting a study group to develop a standards project proposal (a PAR and 5 Criteria) for: Operating the EPON protocol over Coaxial Distribution Networks
• This meeting does not:
– Fully explore the problem
– Debate strengths and weaknesses of solutions
– Choose any one solution
– Create PAR or five criteria
– Create a standard or specification
Agenda: • Introduction • Market Potential • High Level Concept • Why Now? • Q&A • Straw Polls
The Brief History of EPON: 2000 … EPON Today..

Draft Revised Optical Transport Networks & Technologies

INTERNATIONAL TELECOMMUNICATION UNION, TELECOMMUNICATION STANDARDIZATION SECTOR, STUDY PERIOD 2013-2016
STUDY GROUP 15, TD 107 Rev.2 (PLEN/15), English only, Original: English
Question(s): 3/15, 1-12 July 2013
TD Source: Rapporteur Q3/15
Title: Draft Revised Optical Transport Networks & Technologies Standardization Work Plan, Issue 17
This TD includes the draft of the Revised Optical Transport Networks & Technologies Standardization Work Plan, Issue 17.
Contact: Yoshinori Koike, NTT Corporation, Japan; Tel: +81-422-59-6723; Fax: +81-422-59-3493; Email: [email protected]
Attention: This is not a publication made available to the public, but an internal ITU-T Document intended only for use by the Member States of ITU, by ITU-T Sector Members and Associates, and their respective staff and collaborators in their ITU related work. It shall not be made available to, and used by, any other persons or entities without the prior written consent of ITU-T.
Optical Transport Networks & Technologies Standardization Work Plan, Issue 17, July 2013
1. General
The Optical and other Transport Networks & Technologies Standardization Work Plan is a living document. It may be updated even between meetings. The latest version can be found at the following URL: http://www.itu.int/ITU-T/studygroups/com15/otn/
Proposed modifications and comments should be sent to: Yoshinori Koike, [email protected], Tel. +81 422 59 6723
2. Introduction
Today's global communications world has many different definitions for Optical and other Transport networks and many different technologies that support them. This has resulted in a number of different Study Groups within the ITU-T, e.g.

Ethernet (IEEE 802.3)

Computer Networking: MAC Addresses, Ethernet & Wi-Fi
Lecturers: Antonio Carzaniga, Silvia Santini. Assistants: Ali Fattaholmanan, Theodore Jepsen. USI Lugano, December 7, 2018
Changelog: V1: December 7, 2018; V2: March 1, 2017 (changes to the «tentative schedule» of the lecture)
Last time, on December 5, 2018…
What about today?
▪ Link-layer addresses
▪ Ethernet (IEEE 802.3)
▪ Wi-Fi (IEEE 802.11)
Link-layer addresses
Network adapters (aka network interfaces)
▪ A network adapter is a piece of hardware that connects a computer to a network
▪ Hosts often have multiple network adapters
▪ Type ipconfig /all in a command window to see your computer's adapters
Network adapters: Examples
"A 1990s Ethernet network interface controller that connects to the motherboard via the now-obsolete ISA bus. This combination card features both a BNC connector (left) for use in (now obsolete) 10BASE2 networks and an 8P8C connector (right) for use in 10BASE-T networks." (https://en.wikipedia.org/wiki/Network_interface_controller)
TL-WN851ND - WLAN PCI card 802.11n/g/b 300Mbps - TP-Link (https://tinyurl.com/yamo62z9)
Network adapters: Addresses
▪ Each adapter has its own link-layer address
▪ Usually burned into ROM
▪ Hosts with multiple adapters thus have multiple link-layer addresses
▪ A link-layer address is also often referred to as a physical address, a LAN address or, more commonly, a MAC address
Format of a MAC address
▪ There exist different MAC address formats; the one we consider here is EUI-48, used in Ethernet and Wi-Fi
▪ 6 bytes, thus 2^48 possible addresses
▪ i.e., 281’474’976’710’656
▪ i.e., about 281 × 10^12 (281 trillion)
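The EUI-48 format described in the slides (6 bytes, 2^48 addresses) can be made concrete with a short sketch. It parses a colon- or dash-separated address and reads the two flag bits of the first octet: the individual/group bit (least significant bit, set for multicast and broadcast) and the universal/local bit (next bit, set for locally administered addresses). It also extracts the first three octets, which carry the OUI in universally administered addresses. The helper names `parse_mac` and `describe_mac` and the example addresses are hypothetical choices for illustration, not something from the lecture.

```python
# Minimal sketch: inspecting an EUI-48 (MAC) address.
# parse_mac/describe_mac are hypothetical helper names; only the bit layout
# (I/G and U/L bits in the first octet, OUI in the first three octets)
# follows the IEEE 802 addressing conventions.

def parse_mac(text: str) -> bytes:
    """Parse 'aa:bb:cc:dd:ee:ff' or 'aa-bb-cc-dd-ee-ff' into 6 raw bytes."""
    parts = text.replace("-", ":").split(":")
    if len(parts) != 6:
        raise ValueError(f"expected 6 octets, got {len(parts)}: {text!r}")
    # bytes() raises ValueError if any octet is outside 0..255
    return bytes(int(p, 16) for p in parts)


def describe_mac(text: str) -> dict:
    mac = parse_mac(text)
    first = mac[0]
    return {
        # Least significant bit of octet 0: 0 = individual (unicast), 1 = group
        "multicast": bool(first & 0x01),
        # Next bit: 0 = universally administered (e.g. burned in), 1 = locally administered
        "locally_administered": bool(first & 0x02),
        # First three octets: the OUI of a universally administered address
        "oui": mac[:3].hex(":"),
    }


# 6 bytes = 48 bits, so the address space has 2**48 entries.
ADDRESS_SPACE = 2 ** 48

if __name__ == "__main__":
    print(ADDRESS_SPACE)                      # 281474976710656
    print(describe_mac("00:1A:2B:3C:4D:5E"))  # arbitrary example address
    print(describe_mac("ff:ff:ff:ff:ff:ff"))  # broadcast: group bit is set
```

Because the broadcast address has every bit set, it appears here as both a group address and a locally administered one. Only the group bit is meaningful for it.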

Network Working Group A. Clemm Internet-Draft J

Network Working Group: A. Clemm, J. Medved, E. Voit (Cisco Systems)
Internet-Draft, Intended status: Experimental, March 21, 2013, Expires: September 22, 2013
Mounting YANG-Defined Information from Remote Datastores (draft-clemm-netmod-mount-00)
Abstract
This document introduces a new capability that allows YANG datastores to reference and incorporate information from remote datastores. This is accomplished using a new YANG data model that allows the definition and management of datastore mount points that reference data nodes in remote datastores. The data model includes a set of YANG extensions for the purposes of declaring such mount points.
Status of This Memo
This Internet-Draft is submitted in full conformance with the provisions of BCP 78 and BCP 79. Internet-Drafts are working documents of the Internet Engineering Task Force (IETF). Note that other groups may also distribute working documents as Internet-Drafts. The list of current Internet-Drafts is at http://datatracker.ietf.org/drafts/current/. Internet-Drafts are draft documents valid for a maximum of six months and may be updated, replaced, or obsoleted by other documents at any time. It is inappropriate to use Internet-Drafts as reference material or to cite them other than as "work in progress." This Internet-Draft will expire on September 22, 2013.
Copyright Notice
Copyright (c) 2013 IETF Trust and the persons identified as the document authors. All rights reserved. This document is subject to BCP 78 and the IETF Trust's Legal Provisions Relating to IETF Documents (http://trustee.ietf.org/license-info) in effect on the date of publication of this document.

Ethernet (IEEE 802.3)

Ethernet (IEEE 802.3)
• Ethernet is a family of computer networking technologies for local area networks (LANs) and larger networks.
• It was commercially introduced in 1980 and first standardized in 1983 as IEEE 802.3. It has since been refined to support higher bit rates and longer link distances.
• Over time, Ethernet has largely replaced competing wired LAN technologies such as token ring, FDDI, and ARCNET.
• The Ethernet standards comprise several wiring and signaling variants of the OSI physical layer.
• The original 10BASE5 Ethernet used coaxial cable as a shared medium.
• Later, the coaxial cables were replaced with twisted-pair and fiber-optic links used in conjunction with hubs or switches.
• Various standards defined for IEEE 802.3 (old Ethernet):
– 10Base5: thickwire coaxial
– 10Base2: thinwire coaxial, or "cheapernet"
– 10BaseT: twisted pair
– 10BaseF: fiber optics
– Fast Ethernet: 100BaseTX and 100BaseF
• Old Ethernet: CSMA/CD, shared media, and half-duplex links
• Fast Ethernet: typically dedicated (switched) media and full-duplex links, where CSMA/CD is not needed (CSMA/CD remains defined for half-duplex operation)
• Data rates have been incrementally increased from the original 10 megabits per second to 100 gigabits per second over its history.
• Systems communicating over Ethernet divide a stream of data into shorter pieces called frames. Each frame contains source and destination addresses and error-checking data (the frame check sequence) so that damaged frames can be detected and discarded. Any retransmission is left to higher-layer protocols.
• As per the OSI model, Ethernet provides services up to and including the data link layer.
• Evolution:
– Shared media
– Repeaters and hubs
– Bridging and switching
– Advanced networking
• Varieties of Ethernet: the Ethernet physical layer
– The Ethernet physical layer is the physical layer component of the Ethernet family of computer network standards.
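The frame bullet above (addresses plus error-checking data) can be illustrated with a minimal sketch. It builds an Ethernet II frame from a destination MAC, a source MAC, a 2-byte EtherType, and a payload zero-padded to the 46-byte minimum. It then appends a 4-byte frame check sequence computed with CRC-32, the polynomial that Python's `zlib.crc32` also implements. The function names and the EtherType 0x88B5 (an IEEE local experimental value) are illustrative choices, not taken from the slides. The receiver in this sketch only detects and discards a damaged frame. Any retransmission would be up to higher-layer protocols.

```python
import struct
import zlib

MIN_PAYLOAD = 46  # 14-byte header + 46-byte payload + 4-byte FCS = 64-byte minimum frame


def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes) -> bytes:
    """Assemble an Ethernet II frame and append a CRC-32 frame check sequence."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    # Zero-pad short payloads up to the minimum size.
    payload = payload.ljust(MIN_PAYLOAD, b"\x00")
    body = dst + src + struct.pack("!H", ethertype) + payload
    fcs = zlib.crc32(body) & 0xFFFFFFFF
    # Appending zlib's CRC-32 least-significant byte first is the common
    # software way to reproduce the FCS as it appears on the wire.
    return body + struct.pack("<I", fcs)


def check_frame(frame: bytes) -> bool:
    """True if the trailing FCS matches the rest of the frame; otherwise drop it."""
    if len(frame) < 64:
        return False
    body, fcs = frame[:-4], struct.unpack("<I", frame[-4:])[0]
    return (zlib.crc32(body) & 0xFFFFFFFF) == fcs


if __name__ == "__main__":
    dst = bytes.fromhex("ffffffffffff")  # broadcast
    src = bytes.fromhex("020000000001")  # locally administered example address
    frame = build_frame(dst, src, 0x88B5, b"hello")
    print(len(frame), check_frame(frame))  # 64 True

    # Flip a single bit: CRC-32 catches every single-bit error, so the frame is discarded.
    damaged = bytearray(frame)
    damaged[20] ^= 0x01
    print(check_frame(bytes(damaged)))  # False
```

Using a checksum only to detect errors, rather than to correct them, keeps the link layer simple. Deciding whether lost data must be resent is the job of protocols such as TCP.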

Draft Revised Optical Transport Networks & Technologies

OTN Work Plan, September 2012 (Issue 16)
Optical Transport Networks & Technologies Standardization Work Plan, Issue 16, September 2012
1. General
The Optical and other Transport Networks & Technologies Standardization Work Plan is a living document. It may be updated even between meetings. The latest version can be found at the following URL: http://www.itu.int/ITU-T/studygroups/com15/otn/
Proposed modifications and comments should be sent to: Yoshinori Koike, [email protected], Tel. +81 422 59 6723
2. Introduction
Today's global communications world has many different definitions for Optical and other Transport networks and many different technologies that support them. This has resulted in a number of different Study Groups within the ITU-T, e.g. SG 11, 12, 13, and 15, developing Recommendations related to Optical and other Transport. Moreover, other standards development organizations (SDOs), forums and consortia are also active in this area.
Recognising that without a strong coordination effort there is the danger of duplication of work as well as the development of incompatible and non-interoperable standards, WTSA-08 designated Study Group 15 as Lead Study Group on Optical and other Transport Networks and Technology, with the mandate to:
– study the appropriate core Questions (Questions 6, 7, 9, 10, 11, 12, 13, 14 and 15/15);
– define and maintain the overall (standards) framework, in collaboration with other SGs and SDOs;
– coordinate, assign and prioritise the studies done by the Study Groups (recognising their mandates) to ensure the development of consistent, complete and timely Recommendations.
Study Group 15 entrusted WP 3/15, under Question 3/15, with the task to manage and carry out the Lead Study Group activities on Optical and other Transport Networks and Technology.

FTTx Networks

FTTx Networks
TELCOMA. Copyright © TELCOMA. All Rights Reserved.
Introduction to FTTx
Physical Technologies for Communication
❏ Twisted-pair copper wire is the oldest technology. It is still widely deployed and supports a single analog telephone line to the home.
❏ Digital Subscriber Line (DSL) technology is used to transport digital data over it.
❏ Systems that bring TV signals into homes over coaxial cable from a master antenna are called community antenna television (CATV) systems.
❏ Nowadays, the technology has evolved from coaxial cable emanating from the central receive point to hybrid fiber-coax (HFC) systems. In these systems, fiber-optic cable carries the signal from the headend or hub to a node.
❏ The advantage of fiber is its low signal loss compared to coax.
❏ Hence, the signal can be carried over longer distances without amplification.
❏ Therefore, this technology provides better reliability, better quality, and lower operational expenses (op-ex).
❏ Extending HFC down to the smallest node, one that serves only a single home, yields the third technology: fiber-to-the-home (FTTH).
❏ FTTH systems use only passive components in the outside plant between the headend and the home. This improves reliability and removes the need to obtain power from commercial sources or to provide backup power.
❏ As a result, both capital and operational expenses are reduced, and the reliability and quality of the received signals are enhanced.
Terminology
❏ In telephony, the central office (CO) is the point where signals are assembled to go to subscribers. In cable TV, the same point is called the headend.
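The loss argument above (lower loss per kilometre allows a longer span without amplification) comes down to simple link-budget arithmetic. The sketch below uses a hypothetical transmit power, receiver sensitivity, safety margin and per-kilometre attenuation. None of these numbers come from the slides. They are rough orders of magnitude only, and real values depend on wavelength, frequency and cable type. A single common budget is applied to both media purely to isolate the effect of attenuation. The sketch also computes the ideal loss of a 1:N passive splitter, 10·log10(N) dB, which consumes part of the same budget in a passive FTTH tree.

```python
import math


def splitter_loss_db(n_ports: int) -> float:
    """Ideal insertion loss of a 1:N passive splitter (power divided N ways)."""
    return 10 * math.log10(n_ports)


def max_reach_km(tx_dbm: float, rx_sensitivity_dbm: float,
                 loss_db_per_km: float, fixed_loss_db: float = 0.0,
                 margin_db: float = 3.0) -> float:
    """Longest unamplified span whose cable loss still fits in the power budget."""
    budget = tx_dbm - rx_sensitivity_dbm            # total allowable loss (dB)
    available = budget - fixed_loss_db - margin_db  # what is left for the cable itself
    return max(available, 0.0) / loss_db_per_km


if __name__ == "__main__":
    # Illustrative assumptions only, not values from the source.
    TX_DBM, RX_DBM = 3.0, -27.0  # 30 dB budget
    FIBER_DB_PER_KM = 0.35       # rough order of magnitude for single-mode fiber
    COAX_DB_PER_KM = 200.0       # rough order of magnitude for drop coax near 1 GHz

    print(round(max_reach_km(TX_DBM, RX_DBM, FIBER_DB_PER_KM), 1))  # 77.1
    print(round(max_reach_km(TX_DBM, RX_DBM, COAX_DB_PER_KM), 3))   # 0.135

    split = splitter_loss_db(32)  # about 15.05 dB for an ideal 1:32 split
    print(round(max_reach_km(TX_DBM, RX_DBM, FIBER_DB_PER_KM, fixed_loss_db=split), 1))  # 34.1
```

Under these assumptions, fiber reaches tens of kilometres on the same budget where coax reaches only about a hundred metres. This is why coax plant needs amplifiers and why a passive fiber tree can reach homes without them.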

IEEE 802.1/.3 Joint Interim Standard Registration Deadline August 29th

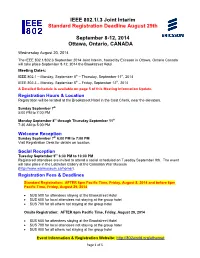

IEEE 802.1/.3 Joint Interim: Standard Registration Deadline August 29th
September 8-12, 2014, Ottawa, Ontario, CANADA
Wednesday, August 20, 2014
The IEEE 802.1/802.3 September 2014 Joint Interim, hosted by Ericsson in Ottawa, Ontario, Canada, will take place September 8-12, 2014 at the Brookstreet Hotel.
Meeting Dates:
IEEE 802.1: Monday, September 8th to Thursday, September 11th, 2014
IEEE 802.3: Monday, September 8th to Friday, September 12th, 2014
A detailed schedule is available on page 5 of this Meeting Information Update.
Registration Hours & Location
Registration will be located at the Brookstreet Hotel in the Coat Check, near the elevators.
Sunday, September 7th: 5:00 PM to 7:00 PM
Monday, September 8th through Thursday, September 11th: 7:30 AM to 5:00 PM
Welcome Reception
Sunday, September 7th, 6:00 PM to 7:00 PM. Visit the Registration Desk for details on location.
Social Reception
Tuesday, September 9th, 6:30 PM to 10:00 PM. Registered attendees are invited to a social event on Tuesday, September 9th, in the LeBreton Gallery at the Canadian War Museum (http://www.warmuseum.ca/home/).
Registration Fees & Deadlines
Standard Registration (after 6pm Pacific Time, Friday, August 8, 2014 and before 6pm Pacific Time, Friday, August 29, 2014):
$US 500 for attendees staying at the Brookstreet Hotel
$US 600 for local attendees not staying at the group hotel
$US 700 for all others not staying at the group hotel
Onsite Registration (after 6pm Pacific Time, Friday, August 29, 2014):
$US 600 for attendees staying at the Brookstreet Hotel
$US 700 for local attendees not staying at the group hotel
$US 800 for all others not staying at the group hotel
Event Information & Registration Website: http://802world.org/ethernet
Registration Policy
Meeting Registration: Registration is available through a secure website and requires a valid credit card for the payment of the registration fee; checks are NOT accepted for registration.

International Standard ISO/IEC/IEEE 8802-3

This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-80007551
INTERNATIONAL STANDARD ISO/IEC/IEEE 8802-3, Second edition 2017-03-01, AMENDMENT 6, 2018-10
Information technology — Telecommunications and information exchange between systems — Local and metropolitan area networks — Specific requirements — Part 3: Standard for Ethernet
AMENDMENT 6: Physical layer specifications and management parameters for Ethernet passive optical networks protocol over coax
Reference number ISO/IEC/IEEE 8802-3:2017/Amd.6:2018(E)
© IEEE 2016
COPYRIGHT PROTECTED DOCUMENT © IEEE 2016
All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO or IEEE at the respective address below or ISO's member body in the country of the requester.
ISO copyright office, CP 401, Ch. de Blandonnet 8, CH-1214 Vernier, Geneva; Phone: +41 22 749 01 11; Fax: +41 22 749 09 47; Email: [email protected]; Website: www.iso.org
Institute of Electrical and Electronics Engineers, Inc, 3 Park Avenue, New York, NY 10016-5997, USA; Email: [email protected]; Website: www.ieee.org
Published in Switzerland

International Standard ISO/IEC/IEEE 8802-3

This is a preview - click here to buy the full publication
INTERNATIONAL STANDARD ISO/IEC/IEEE 8802-3, Third edition 2021-02
Telecommunications and exchange between information technology systems — Requirements for local and metropolitan area networks — Part 3: Standard for Ethernet
Reference number ISO/IEC/IEEE 8802-3:2021(E)
© IEEE 2018
COPYRIGHT PROTECTED DOCUMENT © IEEE 2018
All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from IEEE at the address below.
Institute of Electrical and Electronics Engineers, Inc, 3 Park Avenue, New York, NY 10016-5997, USA; Email: [email protected]; Website: www.ieee.org
Published in Switzerland
Foreword
ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission) form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC participate in the development of International Standards through technical committees established by the respective organization to deal with particular fields of technical activity.

Design, Implementation and Performance Study of Gigabit Ethernet Protocol Using Embedded Technologies

DESIGN, IMPLEMENTATION AND PERFORMANCE STUDY OF GIGABIT ETHERNET PROTOCOL USING EMBEDDED TECHNOLOGIES
A Thesis submitted to Goa University for the Award of the degree of DOCTOR OF PHILOSOPHY in ELECTRONICS
By Vinaya Rajendra Gad
Research Guide: Prof. G. M. Naik
Goa University, Taleigao, Goa, 2014
Certificate
This is to certify that the thesis entitled "Design, Implementation And Performance Study Of Gigabit Ethernet Protocol Using Embedded Technologies", submitted by Mrs. Vinaya Rajendra Gad for the award of the degree of Doctor of Philosophy in Electronics, is based on her original and independent work carried out by her during the period of study under my supervision. The thesis or any part thereof has not been previously submitted for any other degree or diploma in any University or institute.
Place: Goa University
Date: 15th April 2014
(G. M. Naik)
Research Guide
Statement
I state that the present thesis entitled "Design, Implementation And Performance Study Of Gigabit Ethernet Protocol Using Embedded Technologies" is my original contribution and the same has not been submitted on any occasion for any other degree or diploma of this University or any other University / Institute. To the best of my knowledge, the present study is the first comprehensive work of its kind in the area mentioned. The literature related to the problem investigated has been cited. Due acknowledgements have been made wherever facilities and suggestions have been availed of.
Place: Goa University
Date: 15th April 2014
(V. R. Gad)
Candidate
Dedicated with love to my parents Shri. Anand Puttu Gaonkar and Smt. Medha Anand Gaonkar
Acknowledgements
"No great Discovery was ever made without a bold guess." Isaac Newton
I express my sincere appreciation to my supervisor, Dr.

Upstream Polling Protocols for Flow Control in PON/xDSL Hybrid Access Networks Anu Mercian, Elliot I

Upstream Polling Protocols for Flow Control in PON/xDSL Hybrid Access Networks
Anu Mercian, Elliot I. Gurrola, Frank Aurzada, Michael P. McGarry, and Martin Reisslein
Abstract: In a hybrid PON/xDSL access network, multiple Customer Premise Equipment (CPE) nodes connect over individual Digital Subscriber Lines (DSLs) to a drop-point device. The drop-point device, which is typically reverse powered from the customer, is co-located with an Optical Network Unit (ONU) of the Passive Optical Network (PON). We demonstrate that the drop-point experiences very high buffer occupancies when no flow control or standard Ethernet PAUSE frame flow control is employed. In order to reduce the buffer occupancies in the drop-point, we introduce two gated flow control protocols that extend the polling-based PON medium access control to the DSL segments between the CPEs and the ONUs. We analyze the timing of the gated flow control mechanisms to specify the latest possible time instant when CPEs can start the DSL upstream transmission …
… networks have significantly smaller degrees of cost sharing compared to public metropolitan and core networks, thereby increasing cost per paying subscriber. As a result, access network technologies must keep installation costs low [1]. Utilizing existing bandwidth-limited copper wire or shared optical fiber will keep installation costs low [2]. In this paper we present our study of hybrid access networks that utilize both copper wire and shared optical fiber. The shared optical fiber extends from the service provider's central office to a drop-point, whereby the final few hundred meters to the subscriber premise are reached by existing twisted-pair copper wire.
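The abstract's timing question (the latest instant at which a CPE can begin its DSL upstream transmission) can be illustrated with a deliberately simplified model. This is not the authors' analysis. The sketch assumes a hypothetical store-and-forward drop-point that forwards each CPE's granted bytes onto the PON back-to-back, in a fixed order, starting at the ONU's upstream grant time. Each CPE's burst must fully arrive over its DSL line before its turn on the PON begins. The `CpeGrant` type, the function name, and all rates, sizes and delays in the usage lines are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CpeGrant:
    name: str
    granted_bytes: int    # bytes granted to this CPE (gated: equal to its last report)
    dsl_rate_bps: float   # upstream DSL line rate of this CPE
    dsl_delay_s: float    # one-way propagation delay on the DSL segment


def latest_dsl_start_times(grant_start_s: float, pon_rate_bps: float,
                           cpes: List[CpeGrant]) -> List[float]:
    """Latest DSL start time per CPE so that its burst is buffered just before the ONU forwards it.

    Simplified model (assumption): the ONU forwards CPE bursts back-to-back in list
    order starting at grant_start_s, and a burst must be fully received before forwarding.
    """
    starts = []
    pon_offset_s = 0.0
    for cpe in cpes:
        forward_at = grant_start_s + pon_offset_s              # this burst's PON slot begins
        dsl_tx_s = cpe.granted_bytes * 8 / cpe.dsl_rate_bps    # serialization time on DSL
        starts.append(forward_at - dsl_tx_s - cpe.dsl_delay_s)
        pon_offset_s += cpe.granted_bytes * 8 / pon_rate_bps   # next burst follows on the PON
    return starts


if __name__ == "__main__":
    cpes = [CpeGrant("cpe-a", 15_000, 50e6, 2e-6),
            CpeGrant("cpe-b", 30_000, 100e6, 1e-6)]
    for cpe, t in zip(cpes, latest_dsl_start_times(0.010, 1e9, cpes)):
        print(cpe.name, f"{t * 1e3:.4f} ms")
```

In this model, starting earlier than these instants only lengthens the time data sits in the drop-point buffer. That buffer occupancy is what the paper's gated protocols aim to reduce. Starting later would leave part of the ONU's PON grant idle.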