Det. Sample Sort

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


DC Console Using DC Console Application Design Software

DC Console Using DC Console Application Design Software DC Console is easy-to-use, application design software developed specifically to work in conjunction with AML’s DC Suite. Create. Distribute. Collect. Every LDX10 handheld computer comes with DC Suite, which includes seven (7) pre-developed applications for common data collection tasks. Now LDX10 users can use DC Console to modify these applications, or create their own from scratch. AML 800.648.4452 Made in USA www.amltd.com Introduction This document briefly covers how to use DC Console and the features and settings. Be sure to read this document in its entirety before attempting to use AML’s DC Console with a DC Suite compatible device. What is the difference between an “App” and a “Suite”? “Apps” are single applications running on the device used to collect and store data. In most cases, multiple apps would be utilized to handle various operations. For example, the ‘Item_Quantity’ app is one of the most widely used apps and the most direct means to take a basic inventory count, it produces a data file showing what items are in stock, the relative quantities, and requires minimal input from the mobile worker(s). Other operations will require additional input, for example, if you also need to know the specific location for each item in inventory, the ‘Item_Lot_Quantity’ app would be a better fit. Apps can be used in a variety of ways and provide the LDX10 the flexibility to handle virtually any data collection operation. “Suite” files are simply collections of individual apps. Suite files allow you to easily manage and edit multiple apps from within a single ‘store-house’ file and provide an effortless means for device deployment. -

BIMM 143 Introduction to UNIX

BIMM 143 Introduction to UNIX Barry Grant http://thegrantlab.org/bimm143 Do it Yourself! Let's get started… Mac: Terminal. PC: Git Bash. Side note: Terminal vs Shell • Shell: A command-line interface that allows a user to interact with the operating system by typing commands. • Terminal [emulator]: A graphical interface to the shell (i.e. the window you get when you launch Git Bash/iTerm/etc.). Setting Up • Mac users: open the Terminal • Windows users: install MobaXterm and then open a terminal Shell prompt Do it Yourself! Print Working Directory: a.k.a. where the hell am I? This is a comment line pwd This is our first UNIX command :-) Don’t type the “>” bit, it is the “shell prompt”! List out the files and directories where you are ls Q. What do you see after each command? Q. Does it make sense if you compare to your Mac: Finder or Windows: File Explorer? On Mac only :( open . Note the [SPACE] is important Download any file to your current directory/folder curl -O https://bioboot.github.io/bggn213_S18/class-material/bggn213_01_unix.zip List out the files and directories where you are (NB: Use TAB for auto-complete) ls bggn213_01_unix.zip Un-zip your downloaded file unzip bggn213_01_unix.zip Q. Does what you see at each step make sense if you compare to your Mac: Finder or Windows: File Explorer? Change directory (i.e. -

Cluster Generate — Generate Grouping Variables from a Cluster Analysis

Title stata.com cluster generate — Generate grouping variables from a cluster analysis Description Quick start Menu Syntax Options Remarks and examples Also see Description cluster generate creates summary or grouping variables from a hierarchical cluster analysis; the result depends on the function. A single variable may be created containing a group number based on the requested number of groups or cutting the dendrogram at a specified (dis)similarity value. A set of new variables may be created if a range of group sizes is specified. Users can add more cluster generate functions; see [MV] cluster programming subroutines. Quick start Generate grouping variable g5 with 5 groups from the most recent cluster analysis: cluster generate g5 = groups(5) As above, 4 grouping variables (g4, g5, g6, and g7) with 4, 5, 6, and 7 groups: cluster generate g = groups(4/7) As above, but use the cluster analysis named myclus: cluster generate g = groups(4/7), name(myclus) Generate grouping variable mygroups from the most recent cluster analysis by cutting the dendrogram at dissimilarity value 38: cluster generate mygroups = cut(38) Menu Statistics > Multivariate analysis > Cluster analysis > Postclustering > Summary variables from cluster analysis Syntax Generate grouping variables for specified numbers of clusters: cluster generate {newvar | stub} = groups(numlist) [, options] Generate grouping variable by cutting the dendrogram: cluster generate newvar = cut(#) [, name(clname)] Option descriptions: name(clname): name of cluster analysis to use in producing new variables; ties(error): produce error message for ties (default); ties(skip): ignore requests that result in ties; ties(fewer): produce results for largest number of groups smaller than your request; ties(more): produce results for smallest number of groups larger than your request. Options name(clname) specifies the name of the cluster analysis to use in producing the new variables. -

Student Number: Surname: Given Name

Computer Science 2211a Midterm Examination Sample Solutions 9 November 20XX 1 hour 40 minutes Student Number: Surname: Given name: Instructions/Notes: The examination has 35 questions on 9 pages, and a total of 110 marks. Put all answers on the question paper. This is a closed book exam. NO ELECTRONIC DEVICES OF ANY KIND ARE ALLOWED. 1. [4 marks] Which of the following Unix commands/utilities are filters? Correct answers are in blue. mkdir cd nl passwd grep cat chmod scriptfix mv 2. [1 mark] The Unix command echo HOME will print the contents of the environment variable whose name is HOME. True False 3. [1 mark] In C, the null character is another name for the null pointer. True False 4. [3 marks] The protection code for the file abc.dat is currently -rwxr--r-- . The command chmod a=x abc.dat is equivalent to the command: a. chmod 755 abc.dat b. chmod 711 abc.dat c. chmod 155 abc.dat d. chmod 111 abc.dat e. none of the above 5. [3 marks] The protection code for the file abc.dat is currently -rwxr--r-- . The command chmod ug+w abc.dat is equivalent to the command: a. chmod 766 abc.dat b. chmod 764 abc.dat c. chmod 754 abc.dat d. chmod 222 abc.dat e. none of the above 6. [3 marks] The protection code for def.dat is currently dr-xr--r-- , and the protection code for def.dat/ghi.dat is currently -r-xr--r-- . Give one or more chmod commands that will set the protections properly so that the owner of the two files will be able to delete ghi.dat using the command rm def.dat/ghi.dat chmod u+w def.dat or chmod -R u+w def.dat 7. -
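The symbolic-to-octal reasoning behind questions 4 and 5 above can be checked with a small sketch. This is an illustration only, not part of the exam: `apply_symbolic` is a hypothetical helper that handles a single clause of the form who/operator/permissions, and it ignores details of the real chmod such as the umask, special bits, and comma-separated clauses.

```python
import stat

# Bit masks for the "who" and "permission" letters of a symbolic mode.
WHO = {"u": 0o700, "g": 0o070, "o": 0o007, "a": 0o777}
PERM = {"r": 0o444, "w": 0o222, "x": 0o111}


def apply_symbolic(mode, clause):
    """Apply one clause such as 'ug+w' or 'a=x' to an octal permission mode.

    Simplified on purpose: an empty "who" is treated as 'a', whereas the
    real chmod would also consult the umask in that case.
    """
    for op in "+-=":
        if op in clause:
            who, perms = clause.split(op, 1)
            break
    else:
        raise ValueError("clause needs one of +, -, =")

    who_mask = 0
    for letter in who or "a":
        who_mask |= WHO[letter]
    perm_mask = 0
    for letter in perms:
        perm_mask |= PERM[letter]
    bits = who_mask & perm_mask

    if op == "+":
        return mode | bits
    if op == "-":
        return mode & ~bits
    # '=' clears everything for the selected classes, then sets the bits.
    return (mode & ~who_mask) | bits


start = 0o744  # -rwxr--r--
print(oct(apply_symbolic(start, "ug+w")))  # 0o764
print(oct(apply_symbolic(start, "a=x")))   # 0o111
print(stat.filemode(stat.S_IFREG | apply_symbolic(start, "ug+w")))  # -rwxrw-r--
```

Representing each letter as a mask and intersecting the "who" mask with the "permission" mask keeps the three operators down to one line each.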

Unix Programming

P.G DEPARTMENT OF COMPUTER APPLICATIONS 18PMC532 UNIX PROGRAMMING K1 QUESTIONS WITH ANSWERS UNIT-1 1) Define Unix. Unix was originally written in assembler, but it was rewritten in 1973 in C, which was principally authored by Dennis Ritchie (C is based on the B language developed by Ken Thompson). 2) Discuss communication. Unix offers excellent communication with users and the network; users can easily exchange mail, data, and programs over the network. 3) Discuss security. Login names, passwords, access rights, file-level permissions (R W X), file encryption. 4) Define portability. Unix runs on any type of hardware and configuration. Credit for this flexibility goes to Dennis Ritchie (C programs). It has been ported from the IBM PC to the Cray 2. 5) Define open system. Everything in Unix is treated as a file (source program, floppy disk, printer, terminal, etc.). Modification of the system is easy because the source code is always available. 6) The file system breaks the disk into four segments: the boot block, the super block, the inode table, and the data blocks. 7) Command used to find out the block size of your file system: $cmchk BSIZE=1024 8) Define boot block. Generally the first block, number 0, is called the boot block. It consists of a hardware-specific boot program that loads the file known as the kernel of the system. 9) Define super block. It describes the state of the file system, i.e. how large it is and the maximum number of files it can accommodate. This is the second block, numbered 1, and is used to control the allocation of disk blocks. 10) Define inode table. The third segment, comprising blocks 2 to n of the file system, is called the inode table. -

UNIX File System

22-Feb-19 Terminology • A user: anyone who has a Unix account on the system. • Unix recognizes a user by a number called the user id. • A super user: has the maximum set of privileges in the system; is also known as the system administrator; can change the system; must have a lot of experience and training. • Users can be organized into groups. • One or more users can belong to multiple groups. Access Permissions Figure 4-5 Access Permission Code Directory and File Permissions • The protection on a file is referred to as its file modes. • Linux supports three types of access permissions: r read, w write, x execute, - permission denied. Linux assigns different permissions to owner, group and other users. Access Types (Access Type: Meaning on File / Meaning on Dir.) r (read): view file contents (open, read) / list directory contents. w (write): change file contents / change directory contents. Be careful !!! x (execute): run executable file / make it your cwd, access files (by name) in it. -: permission denied / permission denied. Checking Permissions • To check the permissions of an existing file or an existing directory, use the command: ls -l • Example: ux% ls -l unix total 387 drwxr--r-- 1 z036473 student 862 Feb 7 19:22 unixgrades -rw-r--r-- 1 z036473 student 0 Jun 24 2003 uv.nawk -rw-r--r-- 1 z036473 student 0 Jun 24 2003 wx.nawk -rw-r--r-- 1 z036473 student 0 Jun 24 2003 yz.nawk Figure 4-7 Figure 4-6 Changing Permissions The chmod Command Figure 4-8 Changing Permissions: Symbolic Mode ux% ls -li sort.c 118283 -rw-r--r-- 1 krush csci 80 Feb 27 12:23 sort.c Example 1: To change the permissions on the file “sort.c” using symbolic mode, so that: a) Everyone may read and execute it b) Only the owner and group may write to it. -

Standard TECO (Text Editor and Corrector)

Standard TECO Text Editor and Corrector for the VAX, PDP-11, PDP-10, and PDP-8 May 1990 This manual was updated for the online version only in May 1990. User’s Guide and Language Reference Manual TECO-32 Version 40 TECO-11 Version 40 TECO-10 Version 3 TECO-8 Version 7 This manual describes the TECO Text Editor and COrrector. It includes a description for the novice user and an in-depth discussion of all available commands for more advanced users. General permission to copy or modify, but not for profit, is hereby granted, provided that the copyright notice is included and reference made to the fact that reproduction privileges were granted by the TECO SIG. © Digital Equipment Corporation 1979, 1985, 1990 TECO SIG. All Rights Reserved. This document was prepared using DECdocument, Version 3.3-1b. Contents: Preface; Introduction; Preface to the May 1985 edition; Preface to the May 1990 edition; 1 Basics of TECO; 1.1 Using TECO; 1.2 Data Structure Fundamentals; 1.3 File Selection Commands; 1.3.1 Simplified File Selection; 1.3.2 Input File Specification (ER command); 1.3.3 Output File Specification (EW command); 1.3.4 Closing Files (EX command); 1.4 Input and Output Commands; 1.5 Pointer Positioning Commands; 1.6 Type-Out Commands; 1.6.1 Immediate Inspection Commands [not in TECO-10]; 1.7 Text Modification Commands; 1.8 Search Commands -

How to Build a Search-Engine with Common Unix-Tools

The Tenth International Conference on Advances in Databases, Knowledge, and Data Applications May 20 - 24, 2018 - Nice/France How to build a Search-Engine with Common Unix-Tools Andreas Schmidt (1) Department of Informatics and Business Information Systems, University of Applied Sciences Karlsruhe, Germany (2) Institute for Automation and Applied Informatics, Karlsruhe Institute of Technology, Germany Andreas Schmidt DBKDA - 2018 1/66 Resources available http://www.smiffy.de/dbkda-2018/ 1 • Slideset • Exercises • Command refcard 1. all materials copyright, 2018 by andreas schmidt Outlook • General Architecture of an IR-System • Naive Search • Boolean Search • Vector Space Model • Inverted Index • Query Processing • Overview of useful Unix Tools • Implementation Aspects • Summary + 2 hands-on exercises: • Text analytics • Building an Inverted Index & Query processing What is Information Retrieval ? Information Retrieval (IR) is finding material (usually documents) of an unstructured nature (usually text) that satisfies an information need (usually a query) from within large collections (usually stored on computers). [Manning et al., 2008] What is Information Retrieval ? [Figure: a need for information leads to a query representation; a document collection leads to a document representation; how to match?] Keyword Search • Given: • Number of Keywords • Document collection • Result: • All documents in the collection containing the keywords • (ranked by relevance) Naive Approach • Iterate over all documents d in the document collection • For each document d, iterate over all words w and check if all the given keywords appear in this document • If yes, add the document to the result set • Output the result set • Extensions/Variants • Ranking see examples later ... -
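The naive approach listed in the excerpt above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the slides' own implementation (which builds on Unix tools): the function name `naive_search`, the in-memory dictionary of documents, and whitespace tokenization with lowercasing are all choices made here for the example.

```python
def naive_search(documents, keywords):
    """Return the names of all documents that contain every keyword.

    documents: dict mapping a document name to its text (hypothetical layout).
    keywords:  iterable of query words.
    """
    wanted = {k.lower() for k in keywords}
    result = []
    # Iterate over all documents d in the collection.
    for name, text in documents.items():
        # Collect the words w of the document (whitespace tokenization).
        words = set(text.lower().split())
        # Add the document if all given keywords appear in it.
        if wanted <= words:
            result.append(name)
    return result


# Made-up toy collection, for illustration only.
docs = {
    "a.txt": "sort lines of text files",
    "b.txt": "search text with grep",
    "c.txt": "grep and sort are unix tools",
}
print(naive_search(docs, ["sort", "text"]))  # ['a.txt']
print(naive_search(docs, ["grep"]))          # ['b.txt', 'c.txt']
```

Every query rescans the whole collection, which is the cost an inverted index, mentioned in the outline, is meant to avoid.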

Sort, Uniq, Comm, Join Commands

The 19th International Conference on Web Engineering (ICWE-2019) June 11 - 14, 2019 - Daejeon, Korea Powerful Unix-Tools - sort & uniq & comm & join Andreas Schmidt (1) Department of Informatics and Business Information Systems, University of Applied Sciences Karlsruhe, Germany (2) Institute for Automation and Applied Informatics, Karlsruhe Institute of Technology, Germany Andreas Schmidt ICWE - 2019 1/10 sort • Sort lines of text files • Write sorted concatenation of all FILE(s) to standard output. • With no FILE, or when FILE is -, read standard input. • Sorting alphabetic, numeric, ascending, descending, case (in)sensitive • Column(s)/bytes to be sorted can be specified • Random sort option (-R) • Removal of identical lines (-u) • Examples: • sort file city.csv starting with the second column (field delimiter: ,) sort -k2 -t',' city.csv • merge content of file1.txt and file2.txt and sort the result sort file1.txt file2.txt sort - examples • sort file by country code, and as a second criterion population (numeric, descending) sort -t, -k2,2 -k4,4nr city.csv (-t, sets the field separator to a comma; -k2,2 is the first sort criterion, from column 2 to column 2; -k4,4nr is the second sort criterion, from column 4 to column 4, numeric (-n) and descending (-r)) sort - examples • Sort by the second and third character of the first column sort -t, -k1.2,1.3 city.csv • Generate a line of unique random numbers between 1 and 10 seq 1 10 | sort -R | tr '\n' ' ' • Lottery-forecast (6 from 49) - defective from time to time ;-) seq 1 49 | sort -R | head -n6 -
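To make the two-key example (`-k2,2 -k4,4nr`) concrete, the same ordering can be sketched as a composite sort key. The column layout (name, country code, region, population) and the sample rows are assumptions invented for illustration; the excerpt does not show the contents of city.csv. Unlike sort, this sketch compares strings by code point, ignores the locale, and does not apply sort's last-resort whole-line comparison.

```python
import csv
import io


def sort_by_country_then_population(csv_text):
    """Order rows by column 2 ascending, then column 4 numerically descending."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    # Negating the numeric value turns an ascending sort into a descending one.
    return sorted(rows, key=lambda row: (row[1], -float(row[3])))


# Made-up rows in an assumed layout: name,country,region,population
sample = (
    "Paris,FR,IDF,2000\n"
    "Hamburg,DE,Hamburg,1800\n"
    "Lyon,FR,ARA,500\n"
    "Berlin,DE,Berlin,3500\n"
)
for row in sort_by_country_then_population(sample):
    print(",".join(row))
# Berlin,DE,Berlin,3500
# Hamburg,DE,Hamburg,1800
# Paris,FR,IDF,2000
# Lyon,FR,ARA,500
```

Python's sorted is stable, so rows that tie on both keys keep their input order.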

Basic Unix Command

Basic Unix Command Unix commands follow a common pattern: command_name options argument(s). Here we give some of the basic Unix commands. Information Related man: Shows the manual for a command. It helps in selecting the correct options to get the desired output. Just give: man command finger: Gets information about a local or remote user. If you want to know which users are currently logged into the Unix system, this will help. passwd: Changes the password for a user. If you are logged in and enter passwd, it changes the password for the current user. From a root login you can change the password for any user with passwd. who: Finds out who is logged on. which: Finds the directory of the executable being used. uname: Prints the name of the current system. On Solaris, uname -X gives the CPU and various other information. uptime: Time since the last reboot. File listing and directory manipulation commands ls: Lists the contents of directories. Syntax: ls options. The following options are supported: -a Lists all entries, including those that begin with a dot (.), which are normally not listed. -d If an argument is a directory, lists only its name (not its contents); often used with -l to get the status of a directory. -n The same as -l, except that the owner's UID and group's GID numbers are printed, rather than the associated character strings. -r Reverses the order of sort to get reverse alphabetic or oldest first as appropriate. -

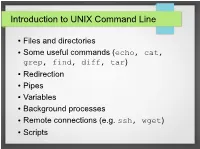

Introduction to UNIX Command Line

Introduction to UNIX Command Line ● Files and directories ● Some useful commands (echo, cat, grep, find, diff, tar) ● Redirection ● Pipes ● Variables ● Background processes ● Remote connections (e.g. ssh, wget) ● Scripts The Command Line ● What is it? ● An interface to UNIX ● You type commands, things happen ● Also referred to as a “shell” ● We'll use the bash shell – check you're using it by typing (you'll see what this means later): ● echo $SHELL ● If it doesn't say “bash”, then type bash to get into the bash shell Files and Directories / home var usr mcuser abenson drmentor science catvideos stuff data code report M51.fits simulate.c analyze.py report.tex Files and Directories ● Get a pre-made set of directories and files to work with ● We'll talk about what these commands do later ● The “$” is the command prompt (yours might differ). Type what's listed after it, then press enter. $ wget http://bit.ly/1TXIZSJ -O playground.tar $ tar xvf playground.tar Files and directories $ pwd /home/abenson $ cd playground $ pwd /home/abenson/playground $ ls animals documents science $ mkdir mystuff $ ls animals documents mystuff science $ cd animals/mammals $ ls badger.txt porcupine.txt $ ls -l total 8 -rw-r--r--. 1 abenson abenson 1944 May 31 18:03 badger.txt -rw-r--r--. 1 abenson abenson 1347 May 31 18:05 porcupine.txt Files and directories “Present Working Directory” $ pwd /home/abenson Shows the full path of your current location in the filesystem. -

Gnu Coreutils Core GNU Utilities for Version 5.93, 2 November 2005

gnu Coreutils Core GNU utilities for version 5.93, 2 November 2005 David MacKenzie et al. This manual documents version 5.93 of the gnu core utilities, including the standard programs for text and file manipulation. Copyright © 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”. 1 Introduction This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire gnu community will benefit. The gnu utilities documented here are mostly compatible with the POSIX standard. Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section “Bugs” in Using and Porting GNU CC. This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering.