Towards Integrated Oil and Gas Databases in Iraq

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Ar2011 02 Briefing Papers for Web.Indd

ALASKA DIVISION OF GEOLOGICAL & GEOPHYSICAL SURVEYS FY12 Project Description DIGITAL GEOLOGIC DATABASE PROJECT In 2000, the Alaska Division of Geological & Geophysical Surveys (DGGS) saw an urgent need to develop a geologic database system to provide the architecture for consistent data input and organization. That database system now includes data identification and retrieval functions that guide and encourage users to access geologic data online. This project was initiated as part of the federally funded Minerals Data and Information Rescue in Alaska (MDIRA) program; ongoing data input, use, and maintenance of the database system are now an integral part of DGGS’s operations supported by State General Funds. DGGS’s digital geologic database (Geologic & Earth Resources Information Library of Alaska [GERILA]) has three primary objectives: (1) maintain this spatially referenced geologic database system in a centralized data and information architecture with access to new DGGS geologic data; (2) create a functional, map-based, on-line system that allows the public to find and identify the type and geographic locations of geologic data available from DGGS and then retrieve and view or download the selected data along with national-standard metadata (http://www.dggs.alaska.gov/pubs/); and (3) integrate DGGS data with data from other, related geoscience agencies through the multi-agency web portal, http://akgeology.info. During the first 11 years, the project work group established a secure and stable enterprise database structure, started loading data into the database, and created multiple Web-based user interfaces. As a result, the public can access Alaska-related reports and maps published by DGGS, the U.S. -

Databases to Support Gis/Rs Analyses of Earth Systems for Civil Society

DESIGN AND IMPLEMENTATION OF “FACTUAL” DATABASES TO SUPPORT GIS/RS ANALYSES OF EARTH SYSTEMS FOR CIVIL SOCIETY Tsehaie Woldai * , Ernst Schetselaar Department of Earth Systems Analysis (ESA), International Institute for Geo-information Science and Earth Observation (ITC), P.O. Box 6, 7500 AA, Hengelosestraat 99, ENSCHEDE, The Netherlands, [email protected] , [email protected] KEY WORDS: ‘factual’ databases, field data capture, geographic information systems, geoscience, multidisciplinary surface categorizations, remote sensing ABSTRACT: With the increasing access to various types of remotely sensed and ancillary spatial data, there is a growing demand for an independent source of reliable ground data to systematically support information extraction for gaining an understanding of earth systems. Conventionally maps, ad-hoc sample campaigns or exclusively remotely sensed data are used but these approaches are not well suited for effective data integration. This paper discusses avenues towards alternative methods for field data acquisition geared to the extraction of information from remotely sensed and ancillary spatial datasets from a geoscience perspective. We foresee that the merging of expertise in recently developed mobile technology, database design and thematic geoscientific knowledge, provides the potential to deliver innovative strategies for the acquisition and analysis of ground data that could profoundly alter the methods how we model earth systems today. With further development and maturation of such research methodologies we ultimately envisage a situation where planners and researchers can with the same ease as they nowadays download remotely sensed data over the web, download standardized and reliable ‘factual’ datasets from the surface of the earth to process downloaded remotely sensed data for effective extraction of information. -

E-CONTENT Practical Core Course (GRB CC 202)

E-CONTENT Practical Core Course THEMATIC CARTOGRAPHY (GRB CC 202) COMPILED AND DESIGNED BY SABA KHANAM, DEPARTMENT OF GEOGRAPHY. E-mail id: [email protected] , Contact no. 8604633742

UNIT III

CARTOGRAPHIC OVERLAYS

The objects in a spatial database are representations of real-world entities with associated attributes. The power of a GIS comes from its ability to look at entities in their geographical context and examine relationships between entities. Thus a GIS database is much more than a collection of objects and attributes. In this unit we look at the ways a spatial database can be assembled from simple objects. For example, how are lines linked together to form complex hydrologic or transportation networks? How can points, lines, or areas be used to represent more complex entities like surfaces?

POINT DATA

1. The simplest type of spatial object.
2. The choice of entities to be represented as points depends on the scale of the map or study.
3. E.g. on a large-scale map, encode building structures as point locations.
4. E.g. on a small-scale map, encode cities as point locations.
5. The coordinates of each point can be stored as two additional attributes.
6. Information on a set of points can be viewed as an extended attribute table: each row is a point, and all information about the point is contained in the row; each column is an attribute; two of the columns are the coordinates.

Overhead (Point data): here northing and easting represent the y and x coordinates. Each point is independent of every other point and is represented as a separate row in the database model.

LINE DATA

Infrastructure networks:
transportation networks - highways and railroads
utility networks - gas, electric, telephone, water
airline networks - hubs and routes
canals
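The point-data description in this excerpt (each row is one point, each column is an attribute, and two of the columns hold the easting and northing) can be sketched as a small table in Python. This is only an illustration under stated assumptions: the `PointRecord` type, the `within_box` helper, and the sample rows are hypothetical and do not come from the course material.

```python
from dataclasses import dataclass


@dataclass
class PointRecord:
    """One row of a point attribute table (hypothetical layout)."""
    point_id: int
    label: str        # an ordinary attribute column
    easting: float    # x coordinate, stored as an attribute
    northing: float   # y coordinate, stored as an attribute


# Illustrative rows only; each point is independent of every other point.
points = [
    PointRecord(1, "building A", 500100.0, 3650200.0),
    PointRecord(2, "building B", 500450.0, 3650900.0),
    PointRecord(3, "building C", 502000.0, 3652500.0),
]


def within_box(records, min_e, min_n, max_e, max_n):
    """Return the rows whose coordinates fall inside a bounding box."""
    return [
        r for r in records
        if min_e <= r.easting <= max_e and min_n <= r.northing <= max_n
    ]


selected = within_box(points, 500000.0, 3650000.0, 501000.0, 3651000.0)
print([r.label for r in selected])
```

Because every point is a separate row, a spatial selection such as the bounding-box query above needs nothing beyond a filter over the two coordinate columns.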

Geologic Resource Evaluation Scoping Summary for Marsh

Geologic Resource Evaluation Scoping Summary Marsh-Billings-Rockefeller National Historical Park & Saint-Gaudens National Historic Site Geologic Resources Division National Park Service The Geologic Resource Evaluation (GRE) Program provides each of 270 identified natural area National Park Service (NPS) units with a geologic scoping meeting, a digital geologic map, and a geologic resource evaluation report. Geologic scoping meetings generate an evaluation of the adequacy of existing geologic maps for resource management, provide an opportunity for discussion of park-specific geologic management issues and, if possible, include a site visit with local geologic experts. The purpose of these meetings is to identify geologic mapping coverage and needs, distinctive geologic processes and features, resource management issues, and potential monitoring and research needs. Outcomes of this scoping process are a scoping summary (this report), a digital geologic map, and a geologic resource evaluation report. The National Park Service held a GRE scoping meeting for Marsh-Billings-Rockefeller National Historical Park (MABI) and Saint-Gaudens National Historic Site (SAGA) on July 10, 2007 at the University of Massachusetts, Amherst. Tim Connors (NPS-GRD) facilitated the discussion of map coverage and Bruce Heise (NPS-GRD) led the discussion regarding geologic processes and features at the two units. Participants at the meeting included NPS staff from the parks, Northeast Temperate Network, and Geologic Resources Division, geologists from the University -

Digital Mapping Techniques ‘02— Workshop Proceedings

Digital Mapping Techniques '02: Workshop Proceedings. Edited by David R. Soller. May 19-22, 2002, Salt Lake City, Utah. Convened by the Association of American State Geologists and the United States Geological Survey. Hosted by the Utah Geological Survey. U.S. Department of the Interior, U.S. Geological Survey. U.S. GEOLOGICAL SURVEY OPEN-FILE REPORT 02-370, 2002. Printed on recycled paper.

This report is preliminary and has not been reviewed for conformity with the U.S. Geological Survey editorial standards. Any use of trade, product, or firm names in this publication is for descriptive purposes only and does not imply endorsement by the U.S. Government or State governments.

CONTENTS

Introduction, by David R. Soller (U.S. Geological Survey)

Oral Presentations

The Value of Geologic Maps and the Need for Digitally Vectorized Data, by James C. Cobb (Kentucky Geological Survey)

Compilation of a 1:24,000-Scale Geologic Map Database, Phoenix Metropolitan Area, by Stephen M. Richard and Tim R. Orr (Arizona Geological Survey)

Distributed Spatial Databases: The MIDCARB Carbon Sequestration Project, by Gerald A. Weisenfluh (Kentucky Geological Survey), Nathan K. Eaton (Indiana Geological Survey), and Ken Nelson (Kansas Geological Survey) -

Digital Geologic Mapping Methods

INTERNATIONAL JOURNAL OF GEOLOGY, Issue 3, Volume 2, 2008. Digital geologic mapping methods: from field to 3D model. M. De Donatis, S. Susini and G. Delmonaco

Abstract: Classical geologic mapping is one of the main techniques used in geology where pencils, paper base map and field book are the traditional tools of field geologists. In this paper, we describe a new method of digital mapping from field work to building three-dimensional geologic maps, including GIS maps and geologic cross-sections. The project consisted of detailed geologic mapping of the area of Craco village (Matera, Italy). The work started in the lab by implementing themes for defining a cartographic base (aerial photos, topographic and geologic maps) and for field work (developing symbols for outcrops, dip data, boundaries, faults, and landslide types). Special prompts were created ad hoc for data collection. All data were located or mapped through GPS. It was possible to easily store any types of documents (digital pictures, notes, and sketches), linked to an object or a geo-referenced point.

[...] software 2DMove and 3DMove by Midland Valley Exploration Ltd. This paper reports a case study of digital mapping carried out in the Southern Apennines. This work is part of an ENEA project, funded by the Italian Ministry of University and Research, devoted to heritage sites prone to landslide risk where local-scale geologic and geomorphic mapping is fundamental in the preliminary stage of the project.

II. Study area

The study area (Fig. 1) is located in the Southern Apennines, around Craco, a small village in the Matera Province along a ridge between the Bruscata and Salandrella stream. -

Computer-Based Data Acquisition and Visualization Systems in Field Geology

Computer-based data acquisition and visualization systems in field geology: Results from 12 years of experimentation and future potential. Terry L. Pavlis, Richard Langford, Jose Hurtado, and Laura Serpa, Department of Geological Sciences, University of Texas at El Paso, El Paso, Texas 79968, USA

ABSTRACT

Paper-based geologic mapping is now archaic, and it is essential that geologists transition out of paper-based field work and embrace new field geographic information system (GIS) technology. Based on ~12 yr of experience with using handheld computers and a variety of field GIS software, we have developed a working model for using field GIS systems. Currently this system uses software products from ESRI (Environmen-

[...] attributing is limited to critical information with all objects carrying a special “note” field for input of nonstandard information. We suggest that when field GIS systems become the norm, field geology should enjoy a revolution both in the attitude of the field geologist toward his or her data and the ability to address problems using the field information. However, field geologists will need to adjust to the changing technology, and many long-established field paradigms should be reevaluated.

[...] tem (GPS) receivers, digital cameras, recording compass inclinometers, compass-inclinometer devices that log data and position, and laser rangefinders allow us to do things that were impossible only a decade ago. This technology allows new approaches to obtaining, organizing, and distributing data in all field sciences. This technology will undoubtedly lead to major new advances in field geology, and hopefully revitalize this foundation of the geosciences. In this paper we explore this issue of changing -

Digital Geologic Mapping for the Commonwealth Warren H

IN FOCUS: Digital Geologic Mapping for the Commonwealth. Warren H. Anderson and Gerald A. Weisenfluh, August 2003

The Digital Geologic Mapping Program

The Digital Geologic Mapping program (www.uky.edu/KGS/mapping/mapping.html) has been one of the largest and most successful programs of the Kentucky Geological Survey during the past 7 years. It is providing the foundation of digital mapping and the database structure for future digital and Web-based products and services. It is a cooperative effort between KGS and the U.S. Geological Survey, as part of the National Cooperative Geologic Mapping Program (ncgmp.usgs.gov).

[...] mandated that the entire United States be mapped geologically. The legislation was reauthorized in 1998. The Kentucky Geological Survey has used funding from this program to produce 7.5-minute digital geologic quadrangle maps at a scale of 1:24,000 (1 inch=2,000 feet).

Program achievements

The initial goal was to have complete digital coverage for the entire state by the year 2007. Currently, the program staff are ahead of schedule,

[...] tion that relate to the age, composition, and structure of mineral features. Because of this complexity, the information cannot be stored in a single data structure. Individual themes must be created to represent different kinds of geologic features in a digital format. Geologic features are rendered in vector format to permit variation in scale without degrading quality. The geologic map data are supplied in ESRI shapefile format for use in geographic information systems. Commercially and publicly available software can be used to view or analyze the shapefiles on a personal -
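The scale quoted in this excerpt, 1:24,000 (1 inch = 2,000 feet), follows from dividing the scale denominator by the 12 inches in a foot. A minimal sketch of that arithmetic is shown here; the function name `ground_feet_per_map_inch` is a hypothetical helper introduced for illustration, not something from the KGS publication.

```python
INCHES_PER_FOOT = 12


def ground_feet_per_map_inch(scale_denominator: int) -> float:
    """Ground distance in feet represented by one map inch at 1:scale_denominator.

    At 1:24,000, one map inch covers 24,000 ground inches,
    and 24,000 / 12 = 2,000 feet.
    """
    return scale_denominator / INCHES_PER_FOOT


print(ground_feet_per_map_inch(24_000))
```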

Kentucky Geology May 2000

Kentucky Geological Survey, UNIVERSITY OF KENTUCKY. Kentucky Geology. Earth Resources: Our Common Wealth. Winter 2004, Volume 5, Number 1

Potential in the Rough Creek Graben: New natural gas research planned

The Kentucky Geological Survey is planning a new research consortium to study the deep natural gas potential of the Rough Creek Graben in western Kentucky. The project will focus on the Cambrian (Rome/Eau Claire) and possibly Precambrian rocks in the graben and surrounding areas in Kentucky and southern Illinois. This research is dependent on funding from the U.S. Department of Energy and joint industry participation. The project would be structured similarly to the recently completed Rome Trough Consortium project in eastern Kentucky, Ohio, and West Virginia. Final products will include:

♦ Structural and stratigraphic well-log cross sections
♦ Digitized well logs
♦ Interpretation of available seismic data
♦ Structure and isopach maps of potential reservoir intervals and basement
♦ Core descriptions
♦ Exploration models and final report

[Figure: Location map for Rough Creek Graben study, showing the study area and the Rough Creek Graben (connects with Reelfoot Rift to southwest)]

Companies interested in participating in this project are encouraged to contact Dave Harris at 859.257.5500 ext. 173 (e-mail [email protected]) or Jim Drahovzal at 859.257.5500 ext. -

Kentucky Geological Survey, 228 Mining & Mineral Resources Bldg., University of Kentucky, Lexington, KY 40506-0107. 859.257.5500, fax 859.257.1147, www.uky.edu/KGS. James Cobb, State Geologist and Director; John Kiefer, Assistant State Geologist; Carol Ruthven, Editor, Kentucky Geology.

Our mission is to increase knowledge and understanding of the mineral, energy, and water resources, geologic hazards, and geology of Kentucky for the benefit of the Commonwealth and [...]

Proceedings of a Workshop on Digital Mapping Techniques: Methods for Geologic Map Data Capture, Management, and Publication

Proceedings of a Workshop on Digital Mapping Techniques: Methods for Geologic Map Data Capture, Management, and Publication. Edited by David R. Soller. June 2-5, 1997, Lawrence, Kansas. Convened by the Association of American State Geologists and the United States Geological Survey. Hosted by the Kansas Geological Survey. U.S. GEOLOGICAL SURVEY OPEN-FILE REPORT 97-269. This report is preliminary and has not been reviewed for conformity with U.S. Geological Survey editorial standards. Any use of trade, product, or firm names in this publication is for descriptive purposes only and does not imply endorsement by the U.S. Government or State governments. FOREWORD If American citizens want a balanced and acceptable quality of life that is economically and environmentally secure, the states and the nation must provide a reliable geologic-map foundation for decision-making and public policy. In this time of government downsizing, the state geological surveys and the U.S. Geological Survey are confronting a severe geologic-mapping crisis. Less than twenty percent of the United States is adequately covered by geologic maps that are detailed and accurate enough for today's decisions. Critical ground-water, fossil-fuel, mineral-resource, and environmental issues require accurate and up-to-date geologic information, almost always in the form of maps, to arrive at viable solutions. There are hundreds of examples of the important role geologic mapping plays in our society. In 1988, the Association of American State Geologists (AASG) and the United States Geological Survey (USGS) began to draft legislation that would require and fund a major, cooperative, nationwide geologic-mapping program to be administered by the USGS. -

Oilfield Acronym

Oilfield Acronym

MARSEC (US Coast Guard) Maritime Security System
NTAS (US Department of Homeland Security) National Security Advisory System
GAR (World Health Organization) Global Alert and Response
DBCP 1,2-dibromo-3-chloropropane
WQ A textural parameter used for CBVWE computations (Halliburton)
OT A Well On Test
ABAN Abandonment
AGM Above ground marker
AMSL above mean sea level
AOF Absolute open flow
AOFP absolute open-flow potential
AGRU acid gas removal unit
ACOU Acoustic
ADCP Acoustic Doppler Current Profile (system)
ALR acoustic log report
ACQU Acquisition log
aMDEA activated methyldiethanolamine
AFP Active Fire Protection
APS active pipe support
ART Actuator running tool
ANPR Advance notice of proposed rulemaking
ADROC advanced rock properties report
ALC vertical seismic profile acoustic log calibration report
AAR After action reviews
ACHE Air-Cooled Heat Exchanger
AIRG airgun
AIRRE airgun report
AC Alaminos Canyon Gulf of Mexico leasing area
ABSA Alberta Boilers Safety Association
AHBDF along hole (depth) below Derrick floor
AHD along hole depth
AIV Alternate isolation valve
ART Alternate response technologies
AADE American Association of Drilling Engineers
AAODC American Association of Oilwell Drilling Contractors (obsolete; superseded by IADC)
AAPG American Association of Petroleum Geologists
AAPL American Association of Professional Landmen
ABS American Bureau of Shipping
AIChE American Institute of Chemical Engineers
AIME American Institute of Mining, Metallurgical, and Petroleum Engineers
ANSI American National Standards Institute
API American Petroleum Institute
API American Petroleum Institute: organization which sets unit standards in the oil and gas industry
ASNT American Society for Nondestructive Testing
ASTM American Society for Testing and Materials
ASCE American Society of Chemical Engineers
ASME American Society of Mechanical Engineers
WAV3 Amplitude (in Seismics)
AVO Amplitude variation with offset (seismic)
WellCAP An IADC standard for well control training
APC Anadarko Petroleum Corp. -

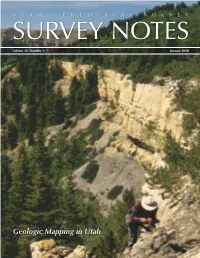

Geologic Mapping in Utah

UTAH GEOLOGICAL SURVEY, SURVEY NOTES, Volume 40, Number 1, January 2008. Geologic Mapping in Utah

CONTENTS

GEOLOGIC MAPPING IN UTAH
Digital Technology
From Field to Published Map
News and Short Notes
GeoSights
Glad You Asked
Energy News
Teacher’s Corner
Survey News
New Publications

Design: Liz Paton

Cover: UGS geologist Bob Biek examines Cretaceous outcrop while mapping on the Kolob Terrace near Zion National Park. Photo by Mike Hylland.

THE DIRECTOR’S PERSPECTIVE, by Richard G. Allis

This issue of Survey Notes highlights the digital mapping technologies that the Utah Geological Survey (UGS) is now using to improve the efficiency and accuracy of Utah’s geologic maps. In comparison to the country as a whole, the percentage of the state covered by relatively detailed geologic maps is low. At present rates of mapping, we expect to complete geologic mapping of the whole state at a scale of 1:100,000 by about 2015; at the same time we are also mapping high-priority urban growth areas at 1:24,000.

[...] differential should diminish during 2008. Utah’s oil production in 2007 increased by 9 percent to 19.5 million barrels, continuing the growth trend that began in 2004 when oil prices started to rise. Coal production in 2007 decreased by 10 percent to 24 million tons due to mine closures. Non-fuel mineral production values continue to be dominated by copper and molybdenum from the Bingham mine. Finally, uranium production resumed in 2007 in southeast-