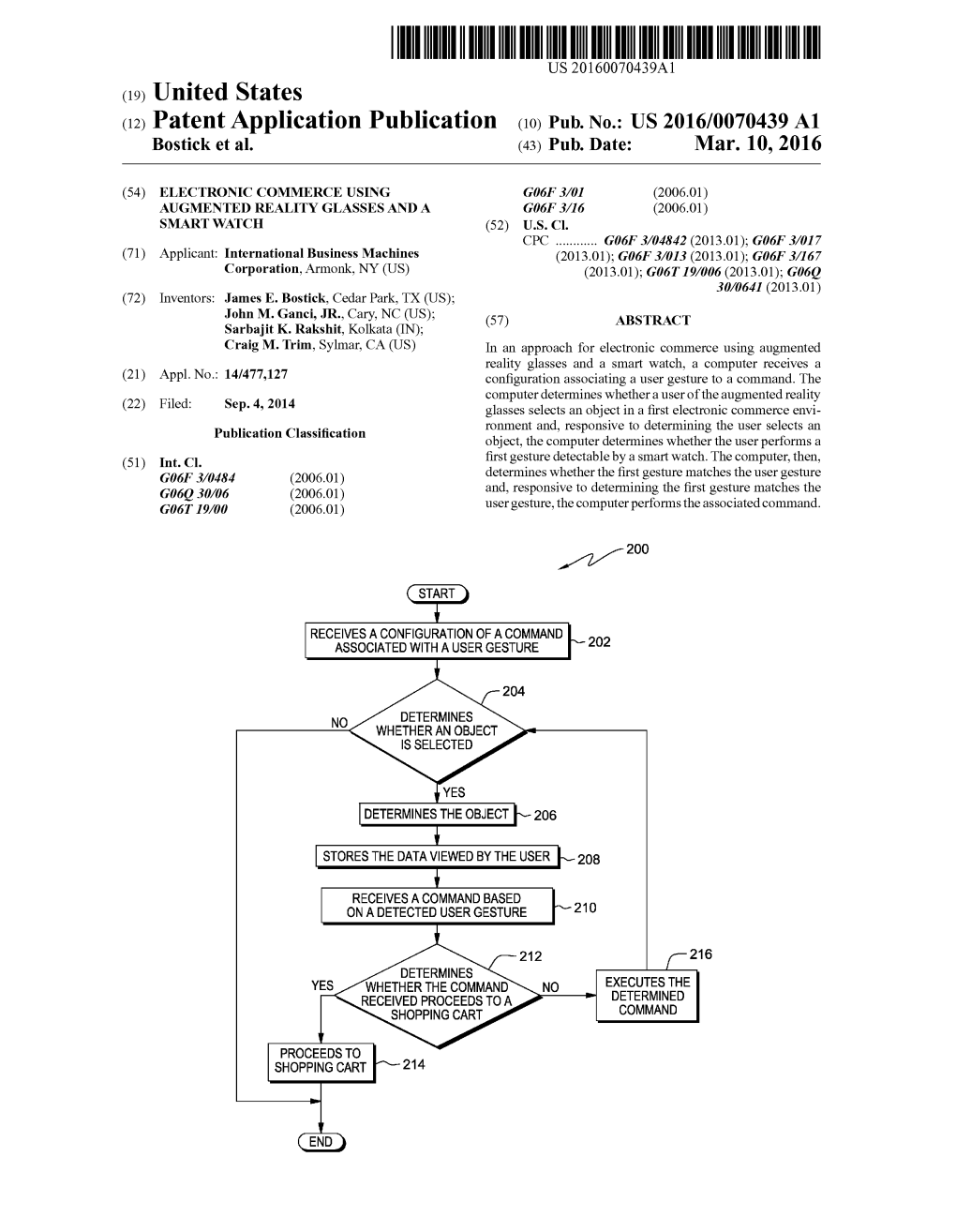

Patent Application Publication, Pub. No.: US 2016/0070439 A1 (Bostick et al.)

File type: PDF, 16 pages, 1020 Kb


Recommended publications


Literature Study Concerning Wearable Computers for Use in Process Plants

Literature study: Wearable Control Room

This report is a literature study concerning different aspects of wearable computers. It is meant mainly as background information for defining relevant projects within the "wearable control room" program, and as an introduction to this research topic for Ph.D. students and other interested readers.

Introduction
This report presents the state of research on wearable computers, with a focus on use within process plants. In addition to wearable computers, the report focuses on human factors and on the organisational and social aspects of replacing the existing centralised control room with distributed wearable computers. Research related to wearable computers has existed for several years, but most of it has concentrated on aspects and goals other than developing a wearable control room for process plants. A wearable computer can generally be defined as follows (Bass 1997):
• it may be used while the wearer is in motion;
• it may be used while one or both hands are free or occupied with other tasks;
• it exists within the corporeal envelope of the user, i.e., it should not merely be attached to the body but become an integral part of the person's clothing;
• it must allow the user to maintain control;
• it must exhibit constancy, in the sense that it should be constantly available.
The following chapters outline the state of work related to different topics, applications and, finally, universities and research institutions working with wearable computers. Chapter 2 presents a short review of equipment available on the market.

Tutorial on Designing for Wearability

Francine Gemperle and Peter Sellar, Carnegie Mellon University, Pittsburgh, PA 15213 USA, http://www.wearablegroup.org

ABSTRACT
At the Second International Symposium on Wearable Computing we presented a paper titled Design for Wearability [1]. In this paper we discussed a set of guidelines for designing wearable products to fit the three-dimensional shapes of the dynamic human body. The paper also presented a set of wearable forms to be used as a reference tool for the creation of wearable products. In this tutorial we will share our process both for creating a wearable computer housing to fit the human body and for designing the component placement on a printed circuit board to meet the complex and organic shape of the computer housing. This tutorial will focus on an iterative and interdisciplinary design process. We will present a case study application of the Design for Wearability work. We will do an exercise creating wearable shapes for computers and negotiating those shapes with the internal components of a computer.

The objective of this workshop will be to familiarize the group with two things: the role of Industrial Design in an interdisciplinary design process, and how to Design for Wearability. The intended audience for this tutorial is anyone who is managing or participating in an interdisciplinary development team designing wearable hardware. This tutorial will also be valuable for anyone who is interested in the role of industrial design in the development of a wearable computer. Some knowledge of the field of wearable computing will be helpful. For the exercise, participants should be comfortable working with their hands and talking in a group.

INSTRUCTORS
Francine Gemperle has been a Design Researcher with Carnegie Mellon's Wearable […]

WT41N0 Wearable Computer Spec Sheet

PRODUCT SPEC SHEET: WT41N0

THE NEXT GENERATION IN RUGGED WEARABLE VOICE AND DATA MOBILE COMPUTERS

Easily increase productivity and eliminate errors in your warehouse or distribution center with next-generation hands-free voice and data. With the major increase in package volume driven by multi-channel support, demands for the ultimate in customer service, and increasing regulations for traceability, you need to move more items through your warehouse or distribution center and capture more information about those items than ever before. With the WT41N0 wearable mobile computer on the arms of your workers, you will. Now workers can keep their hands and eyes on the materials they are handling; no time is lost handling paper or a handheld mobile device. Add a ring-style scanner worn on a finger, and workers can capture 1-D and 2-D bar codes on the fly, documenting the path of each item for full traceability and verifying that the right items are in the right orders, shipped to the right customers at the right time. The result? Improved customer satisfaction and loyalty. More throughput with the same staff, driving staff utilization up. And less time spent capturing more information on item movement, driving the cost of compliance with traceability regulations down.

FEATURES
• Ergonomic hands-free wearable design: award-winning ergonomic design increases user comfort and productivity.
• High-performance next-generation platform: a best-in-class dual-core processor provides the power to run virtually any enterprise application.
• 802.11a/b/g/n WLAN: easily connects to an existing WLAN for fast integration; 802.11n and support for Zebra's advanced WLAN features greatly improves […]

Classification of Smart Environment Scenarios in Combination with a Human-Wearable-Environment-Communication Using Wireless Connectivity

Kristof Friess and Prof. Dr. Dr. h.c. Volker Herwig, Department of Applied Computer Science, University of Applied Science Erfurt, Erfurt, Germany

ABSTRACT
The development of computer technology has been rapid. Not long ago, the first computers were large and bulky. Now the latest generation of smartphones has computing power that would have been considered supercomputer-class in 1990. For a smart environment, recognizing and re-recognizing persons is an important topic, and the spread of new technologies such as wearable computing opens a new approach to it. This article lays out the idea of identifying and re-identifying wearable computing devices by listening to their wireless connectivity, such as Wi-Fi and Bluetooth, and builds a classification of interaction scenarios for the combination of human, wearable and environment.

KEYWORDS: Wireless Network, Smart Environment, Wearable-Computing, UbiComp, Interaction-Scenarios

1. INTRODUCTION
With the growing market for worn computer systems such as smartphones and smartwatches (in short, wearables), the possible interaction between human and computer has changed. Soon the interaction among human, computer and environment will change as well. There are countless use cases in which a human uses the computer as an assistant for filtering data, processing information or storing context information. Now and in the near future, many use cases are emerging in which the device itself does not make the human smarter. Instead, the environment acts smartly based on its knowledge about the human.
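The re-identification idea can be illustrated with a minimal Python sketch. It assumes that each passively captured sighting carries a stable device identifier, such as a Wi-Fi or Bluetooth MAC address. It also uses a hypothetical 300-second absence threshold to decide when a known device has returned. Modern phones often randomize MAC addresses, so a real system would need more than this. The function and field names are illustrative and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class DeviceRecord:
    first_seen: float  # timestamp (seconds) of the first sighting
    last_seen: float   # timestamp (seconds) of the most recent sighting
    visits: int = 1    # separate presence episodes; >1 means re-identified


def track_sightings(sightings: List[Tuple[float, str]], gap_s: float = 300.0) -> Dict[str, DeviceRecord]:
    """Group passively observed (timestamp, identifier) pairs per device.

    A device that reappears after more than `gap_s` seconds of silence is
    treated as re-identified, i.e. a known device starting a new visit.
    Assumes identifiers are stable (no MAC randomization)."""
    records: Dict[str, DeviceRecord] = {}
    for ts, ident in sorted(sightings):  # process sightings in time order
        rec = records.get(ident)
        if rec is None:
            records[ident] = DeviceRecord(first_seen=ts, last_seen=ts)
            continue
        if ts - rec.last_seen > gap_s:
            rec.visits += 1
        rec.last_seen = ts
    return records


# Hypothetical sightings: (seconds since start, MAC-style identifier)
sightings = [
    (0.0, "aa:bb:cc:00:00:01"),
    (30.0, "aa:bb:cc:00:00:02"),
    (60.0, "aa:bb:cc:00:00:01"),
    (1000.0, "aa:bb:cc:00:00:01"),  # back after a long absence
]
records = track_sightings(sightings)
for ident, rec in records.items():
    print(ident, rec.visits)
```

The gap threshold is the key design choice. A shorter gap splits one stay into several visits, and a longer one merges separate visits.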

Ubiquitous Computing: Trends and History

Lecture 2, CSI 660, William A. Maniatty, Dept. of Computer Science, University at Albany

Introduction
Review: What is Ubiquitous Computing?
• Immerses computers in a real environment.
• Sensors support interacting with and controlling the environment.
• Limited power supply, storage, memory and bandwidth.
• Operates unattended (much like embedded systems).
• Devices are mobile/wireless.
• May reside on a person (wearable computing).
• Has special peripherals.
• Contrast this with virtual reality, which immerses humans in a computer-generated artificial environment.

Historical Origins and Trends
Computers are becoming smaller and cheaper over time.
• Originally, few computers and many operators:
  ◦ Machines were expensive and large.
  ◦ People were (relatively) cheap.
• Trend toward more computers per person:
  ◦ Users may not be tech savvy.
  ◦ Even tech-savvy users have limited time.
  ◦ Minimal intervention is required.
People don't want to be separated from their data.
• But spying on users upsets them,
• and can violate laws, so security is important.
• Mobility and wireless access are critical.

Some Popular Views
Many visions were popularized in the press.
• First to work on it, although other visionaries preceded him.
• Entertainment industry (Ian Fleming, Gene Roddenberry).
• Vannevar Bush's seminal article "As We May Think" [1] predicted the WWW and ubiquitous computing in 1945!
• Vernor Vinge (retired computer science professor and science fiction writer) has interesting ubiquitous computing visions.
• Movies: The Terminator; numerous Philip K. Dick books and screenplays (Blade Runner, Total Recall, Minority Report).
Has been popular in the research community for over a decade.

Wearable Technology for Enhanced Security

Communications on Applied Electronics (CAE) – ISSN: 2394-4714, Foundation of Computer Science FCS, New York, USA, Volume 5 – No. 10, September 2016 – www.caeaccess.org

Agbaje M. Olugbenga, PhD, Department of Computer Science, Babcock University, Ogun State, Nigeria

ABSTRACT
Wearables comprise sensors and have computational ability. Gadgets such as wristwatches, pens, and glasses with installed cameras are now available at low prices for users to purchase to monitor or secure themselves. The many kidnappings in Nigerian schools and homes, abductions for ransom, and other unlawful acts necessitate this review. The success of wearable technology in medical uses prompted research into its application to security. The research method is case studies and literature search. This paper examines possible applications of wearable technology to combat abduction and kidnapping in Nigeria.

[…] Sproutling, watches such as the Apple Watch, and jewelry such as Cuff and Ringly.

Looking at the history of computer generations up to the present, we can divide it into three main types: mainframe computing, personal computing, and ubiquitous or pervasive computing [4]. The divisions are based on the number of computers per user. Mainframe computing describes one large computer connected to many users, and personal computing describes one computer per person. The term ubiquitous computing, however, was used in 1991 by Mark Weiser, who depicted a world full of embedded sensing technologies to streamline and improve life [5]. Nigeria, faced with numerous kidnappings in schools and homes, is in dire need of a solution.

The Challenges of Wearable Computing: Part 2

Wearable computing pursues an interface ideal of a continuously worn, intelligent assistant that augments memory, intellect, creativity, communication, and physical senses and abilities. Many challenges await wearable designers. Part 2 begins with the challenges of network resources and privacy concerns. This survey describes the possibilities offered by wearable systems and, in doing so, demonstrates attributes unique to this class of computing.

Thad Starner, Georgia Institute of Technology

Challenges
The most immediately striking challenge in designing wearable computers is creating appropriate interfaces. However, the issues of power use, heat dissipation, networking, and privacy provide a necessary framework in which to discuss interface. Part 1 of this article covers the first two of these issues; Part 2 begins with the networking discussion.

Networking
As with any wireless mobile device, the amount of power and the type of services available can constrain networking. Wearable computers could conserve resources through improved coordination with the user interface. For example, the speed at which a given information packet is transferred can be bal[…] throughput. Another serious issue is open standards to enable interoperability between different services. For example, only one long-range radio should be necessary to provide telephony, text messaging, Global Positioning System (GPS) correction signals, and so on.

For wearable computers, networking involves communication off body to the fixed network, on body among devices, and near body with objects near the user. Each of these three network types requires different design decisions. Designers must also consider possible interference between the networks.

Off-body communications. Wireless communication from mobile devices to fixed infrastructure is the most thoroughly researched of these issues.

Wearable and Ubiquitous Computing

Education & Training. Editor: Scott F. Midkiff, Virginia Tech

Wearable and Ubiquitous Computing
Tom Martin

EDITOR'S INTRODUCTION
This issue's column continues its coverage of innovative courses in pervasive computing. Tom Martin of Virginia Tech describes a course on wearable and ubiquitous computing that he developed and has taught twice. He describes the course's scope, assignments and grading, and design projects, along with his experiences with them. Please send me your comments and suggestions for future columns.
Scott Midkiff

Virginia Tech has offered a course on wearable and ubiquitous computing in the Bradley Department of Electrical and Computer Engineering twice: once at the senior or master's level in spring 2002 (15 students) and again at the master's level in spring 2003 (11 students). The course aims to give students an appreciation of current wearable and ubiquitous computing research issues, along with hands-on design experience.

[…]
• User input/output devices
• Location and context awareness
• Application case studies

The wearable and ubiquitous computing overview begins with Mark Weiser's papers from the early 1990s [1, 2] and more recent articles by Mahadev Satyanarayanan [3] and Thad Starner [4, 5]. These provide a road map and motivation for topics covered later in the […]

[…]ture and thickness of the user's device primarily depends on the relative power consumption and expense of computation, communication, and local storage. Because wearable and ubiquitous computing systems face significant power consumption challenges, the course spends a good amount of time on low-power design and system-level power management. These topics are also some of my main research areas, which lets me connect teaching and research. I begin this section of the class with the power consumption mechanisms in digital CMOS (complementary metal-oxide semiconductor) circuits. I then move on to higher-level power management problems, such as dynamic CPU speed-setting, low-power compilation and source code modification, […]
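The CMOS power mechanisms mentioned above are commonly summarized by the standard dynamic-power relation P = αCV²f, where α is switching activity, C is switched capacitance, V is supply voltage and f is clock frequency. A fixed task takes cycles/f seconds, so its energy is αCV² × cycles. Dynamic CPU speed-setting therefore saves energy mainly when lowering f also allows lowering V. The Python sketch below illustrates this with made-up operating points. It ignores leakage power, and it is not material from the course itself.

```python
def dynamic_power(alpha: float, cap_f: float, volts: float, freq_hz: float) -> float:
    """Switching (dynamic) power of CMOS logic: P = alpha * C * V^2 * f."""
    return alpha * cap_f * volts ** 2 * freq_hz


def task_energy(cycles: float, alpha: float, cap_f: float, volts: float, freq_hz: float) -> float:
    """Energy to run a fixed number of cycles: E = P * (cycles / f) = alpha * C * V^2 * cycles."""
    runtime_s = cycles / freq_hz
    return dynamic_power(alpha, cap_f, volts, freq_hz) * runtime_s


# Illustrative (made-up) operating points for the same 1e9-cycle task.
CYCLES, ALPHA, CAP = 1e9, 0.2, 1e-9
fast = task_energy(CYCLES, ALPHA, CAP, volts=1.2, freq_hz=1.0e9)  # runs for 1 s
slow = task_energy(CYCLES, ALPHA, CAP, volts=0.9, freq_hz=0.5e9)  # runs for 2 s
print(f"fast: {fast:.3f} J, slow: {slow:.3f} J, ratio: {slow / fast:.4f}")
```

With these numbers the slower setting uses (0.9/1.2)², about 56%, of the energy but doubles the run time. A speed-setting policy has to weigh that trade-off against task deadlines.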

An Object-Oriented Software Architecture for 3D Mixed Reality Applications

Wayne Piekarski and Bruce H. Thomas, Wearable Computer Laboratory, School of Computer and Information Science, University of South Australia, Mawson Lakes, SA, 5095, Australia, {wayne, thomas}@cs.unisa.edu.au

Abstract
This paper presents a new software architecture for 3D mixed reality applications, named Tinmith-evo5. Currently there are a limited number of existing toolkits for the development of 3D mixed reality applications, each optimised for a particular feature but to the detriment of others. Complex interactive user interfaces and applications require extensive supporting infrastructure, and can be hampered by inadequate support. The Tinmith-evo5 architecture is optimised to develop mobile augmented reality and other interactive 3D applications on portable platforms with limited resources. This architecture is implemented in C++ with an object-oriented data flow design, an […]

MR systems are usually sensor driven, so the software architecture is based on data flow. Figure 1 shows this data flow from an overall perspective. Sensor data arrives into the MR system and is processed by specific application code and configurations, and the final output is then rendered to the user's HMD. The data flow model is supported by the use of objects to perform specific actions such as processing tracker data, combining results, and rendering 3D graphics. Objects allow problems to be broken down into small separate tasks to simplify software development. Objects are then connected together into a directed graph. As new values enter the system, they are processed through the graph as a flow of data, adjusting the current state and eventually rendering to the HMD.
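The directed data flow graph described above can be sketched minimally in Python. The node names (gps, compass, pose, render) and the connect/receive methods are hypothetical illustrations, not the Tinmith-evo5 C++ API. Each object keeps its latest inputs as state, and a new value entering the graph is pushed downstream until it reaches the rendering step.

```python
from typing import Any, Callable, Dict, List


class Node:
    """One object in a data flow graph: it caches the latest value from each
    upstream input, recomputes once all inputs are available, and pushes the
    result along its outgoing (directed) edges."""

    def __init__(self, name: str, inputs: List[str], fn: Callable[[Dict[str, Any]], Any]):
        self.name = name
        self.inputs = inputs                 # names of required upstream values
        self.fn = fn                         # this object's processing step
        self.latest: Dict[str, Any] = {}     # current state: last value per input
        self.downstream: List["Node"] = []   # outgoing edges

    def connect(self, other: "Node") -> None:
        self.downstream.append(other)

    def receive(self, source: str, value: Any) -> None:
        self.latest[source] = value  # adjust the current state
        if all(name in self.latest for name in self.inputs):
            result = self.fn(self.latest)
            for node in self.downstream:
                node.receive(self.name, result)


frames: List[str] = []


def draw(values: Dict[str, Any]) -> None:
    """Stand-in for rendering to the HMD: record what would be drawn."""
    position, heading = values["pose"]
    frames.append(f"draw at {position} facing {heading}")


# Hypothetical graph: two trackers -> pose combiner -> renderer.
gps = Node("gps", ["raw"], lambda v: v["raw"])
compass = Node("compass", ["raw"], lambda v: v["raw"] % 360)
pose = Node("pose", ["gps", "compass"], lambda v: (v["gps"], v["compass"]))
render = Node("render", ["pose"], draw)
gps.connect(pose)
compass.connect(pose)
pose.connect(render)

gps.receive("raw", (10.0, 20.0))  # pose still waits for a compass value
compass.receive("raw", 370)       # pose fires, render draws one frame
gps.receive("raw", (11.0, 20.0))  # a new tracker value flows through again
print(frames)
```

Each node is small and independent, in line with the paper's motivation for breaking problems into separate tasks. Eager pushing, however, redraws on every tracker update; a real system may need to throttle updates.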

Towards Wearable Cognitive Assistance

Kiryong Ha, Zhuo Chen, Wenlu Hu, Wolfgang Richter, Padmanabhan Pillai†, and Mahadev Satyanarayanan
Carnegie Mellon University and †Intel Labs

ABSTRACT
We describe the architecture and prototype implementation of an assistive system based on Google Glass devices for users in cognitive decline. It combines the first-person image capture and sensing capabilities of Glass with remote processing to perform real-time scene interpretation. The system architecture is multi-tiered. It offers tight end-to-end latency bounds on compute-intensive operations, while addressing concerns such as limited battery capacity and limited processing capability of wearable devices. The system gracefully degrades services in the face of network failures and unavailability of distant architectural tiers.

Categories and Subject Descriptors
D.4.7 [Software]: Operating System: Organization and […]

Wearable devices such as Google Glass offer a glimmer of hope to users in cognitive decline. These devices integrate first-person image capture, sensing, processing and communication capabilities in an aesthetically elegant form factor. Through context-aware real-time scene interpretation (including recognition of objects, faces, activities, signage text, and sounds), we can create software that offers helpful guidance for everyday life, much as a GPS navigation system helps a driver. Figure 1 presents a hypothetical scenario that is suggestive of the future we hope to create.

An ideal cognitive assistive system should function "in the wild" with sufficient functionality, performance and usability to be valuable at any time and place. It should also be sufficiently flexible to allow easy customization for the unique disabilities of an individual.
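The abstract does not spell out how tiers are chosen. The Python sketch below is one hypothetical way to combine "tight latency bounds" with "graceful degradation". It prefers the most capable reachable tier whose estimated latency fits the bound, and falls back to nearer or on-device processing otherwise. The tier names, latencies and capability scores are invented for illustration and do not come from the paper.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Tier:
    name: str              # hypothetical label, e.g. "device", "cloudlet", "cloud"
    reachable: bool        # can the wearable currently reach this tier?
    est_latency_ms: float  # estimated end-to-end latency for one request
    capability: int        # higher = richer scene interpretation (made-up score)


def choose_tier(tiers: List[Tier], latency_bound_ms: float) -> Optional[Tier]:
    """Return the most capable reachable tier that meets the latency bound,
    or None so the caller can degrade to a reduced on-device service."""
    ok = [t for t in tiers if t.reachable and t.est_latency_ms <= latency_bound_ms]
    return max(ok, key=lambda t: t.capability) if ok else None


tiers = [
    Tier("device", True, 5.0, 1),
    Tier("cloudlet", True, 40.0, 2),
    Tier("cloud", True, 180.0, 3),
]
print(choose_tier(tiers, 100.0).name)  # the cloud tier is too slow for this bound
tiers[1].reachable = False             # simulate losing the nearby tier
print(choose_tier(tiers, 100.0).name)  # falls back to the device
print(choose_tier(tiers, 1.0))         # nothing meets the bound
```

Returning None, rather than raising an error, leaves the choice of degraded behaviour to the caller. One possible degraded behaviour is a simpler on-device cue instead of full scene interpretation.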

Forms of Wearable Computer (S. I. Woolley et al.)

Copyright Notice (IET): This paper is a postprint of a paper submitted to and accepted for publication in the Proceedings of the IEE Eurowearable 2003 Conference, and is subject to Institution of Engineering and Technology Copyright. The copy of record is available at the IET Digital Library: "Forms of wearable computer", S. I. Woolley, J. W. Cross, S. Ro, R. Foster, G. Reynolds, C. Baber, H. Bristow and A. Schwirtz, IEE Eurowearable 2003 Conference, September 2003. DOI: http://dx.doi.org/10.1049/ic:20030146

A revised version of this paper was also published in Electronics Systems and Software, also subject to Institution of Engineering and Technology Copyright. The copy of record is also available at the IET Digital Library: "Count three for wearable computers", Sandra Woolley, James Cross, Soonghwan Ro, Rob Foster, Greg Reynolds et al., Electronics Systems and Software, vol. 2, no. 1, pp. 34–38, February 2004. DOI: http://dx.doi.org/10.1049/ess:20040106

FORMS OF WEARABLE COMPUTER
S. I. Woolley, J. W. Cross, S. Ro, R. Foster, G. Reynolds, C. Baber, H. Bristow, A. Schwirtz
Electronic, Electrical and Computer Engineering, The University of Birmingham, U.K.

ABSTRACT
This paper introduces the microprocessor, communicating and sensing technologies relevant to wearable computing. It reviews the trends and challenges that form part of the evolution of computer technology, from a computer filling a room to a room full of invisible computers.

[…] He suggested that, in the future, rooms might contain more than 100 tab-like computers, 10 or 20 pads and 1 or 2 boards, observing that "Hundreds of computers in a room could seem intimidating at first, just as hundreds of volts coursing through wires in the walls once did." Since then his vision of so many embedded computer systems appears […]

Using the Wearable Computer in the Construction Industry

A Case Study: Using the Wearable Computer in the Construction Industry, by Scott Fuller, Zhihui Ding, and Anoop Sattineni

ABSTRACT: This paper explores prospective applications of cutting-edge technology such as wearable computers in the construction industry. The history, current research, application areas and future trends of wearable computing are investigated. The authors' first-hand experience from a well-designed field experiment is described in detail to show how the technologies actually work. The field experiment is performed using three different methods: by hand, by Palm PC and by wearable computer. The pros and cons of each are analyzed and compared to show why the wearable computer is a better tool for helping construction professionals perform daily duties such as the "punch list". In summary, the results showed that, in the immediate future, the wearable computer is a better solution for construction than Palm PCs. However, advocates of the wearable computer should bear in mind that the technologies are always developing. Palm PCs are still expanding their functionality while maintaining their mobility, and they may soon have the same storage and processing capacity as today's wearable computers. Palm PCs will therefore be strong competitors to the wearable computer.

KEYWORDS: Construction, Palm PC, Punch List, Wearable Computers

1. INTRODUCTION
The construction industry is on the edge of something that could drastically improve the entire construction process. Recently, a new […]

[…] the situation. With features like heads-up displays, unobtrusive input devices, and personal wireless local area networks, the wearable computer can act as an intelligent assistant.