National Certificate: Business Analyses

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Learn Python the Hard Way

LEARN PYTHON THE HARD WAY Third Edition Zed Shaw’s Hard Way Series Visit informit.com/hardway for a complete list of available publications. Zed Shaw’s Hard Way Series emphasizes instruction and making things as the best way to get started in many computer science topics. Each book in the series is designed around short, understandable exercises that take you through a course of instruction that creates working software. All exercises are thoroughly tested to verify they work with real students, thus increasing your chance of success. The accompanying video walks you through the code in each exercise. Zed adds a bit of humor and inside jokes to make you laugh while you’re learning. Make sure to connect with us! informit.com/socialconnect LEARN PYTHON THE HARD WAY A Very Simple Introduction to the Terrifyingly Beautiful World of Computers and Code Third Edition Zed A. Shaw Upper Saddle River, NJ • Boston • Indianapolis • San Francisco New York • Toronto • Montreal • London • Munich • Paris • Madrid Capetown • Sydney • Tokyo • Singapore • Mexico City Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The author and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. -

Effectiveness of Software Testing Techniques in Enterprise: a Case Study

MYKOLAS ROMERIS UNIVERSITY BUSINESS AND MEDIA SCHOOL BRIGITA JAZUKEVIČIŪTĖ (Business Informatics) EFFECTIVENESS OF SOFTWARE TESTING TECHNIQUES IN ENTERPRISE: A CASE STUDY Master Thesis Supervisor – Assoc. Prof. Andrej Vlasenko Vilnius, 2016 CONTENTS: INTRODUCTION; 1. THE RELATIONSHIP BETWEEN SOFTWARE TESTING AND SOFTWARE QUALITY ASSURANCE; 1.1. Introduction to Software Quality Assurance; 1.2. The overview of Software testing fundamentals: Concepts, History, Main principles; 2. AN OVERVIEW OF SOFTWARE TESTING TECHNIQUES AND THEIR USE IN ENTERPRISES; 2.1. Testing techniques as code analysis; 2.1.1. Static testing; 2.1.2. Dynamic testing; 2.2. Test design based Techniques -

Studying the Feasibility and Importance of Software Testing: an Analysis

Dr. S.S.Riaz Ahamed / International Journal of Engineering Science and Technology Vol.1(3), 2009, 119-128 STUDYING THE FEASIBILITY AND IMPORTANCE OF SOFTWARE TESTING: AN ANALYSIS Dr.S.S.Riaz Ahamed Principal, Sathak Institute of Technology, Ramanathapuram, India. Email: [email protected], [email protected] ABSTRACT Software testing is a critical element of software quality assurance and represents the ultimate review of specification, design and coding. Software testing is the process of testing the functionality and correctness of software by running it. Software testing is usually performed for one of two reasons: defect detection, and reliability estimation. The problem of applying software testing to defect detection is that software can only suggest the presence of flaws, not their absence (unless the testing is exhaustive). The problem of applying software testing to reliability estimation is that the input distribution used for selecting test cases may be flawed. The key to software testing is trying to find the modes of failure - something that requires exhaustively testing the code on all possible inputs. Software Testing, depending on the testing method employed, can be implemented at any time in the development process. Keywords: verification and validation (V & V) 1 INTRODUCTION Testing is a set of activities that could be planned ahead and conducted systematically. The main objective of testing is to find an error by executing a program. The objective of testing is to check whether the designed software meets the customer specification. The Testing should fulfill the following criteria: • Test should begin at the module level and work “outward” toward the integration of the entire computer based system. -

List of Programming Languages

www.sf-ag.com List of programming languages (keywords: list of high-level languages; last modified: 27.07.2016 / TS) A: (1) A, (2) A#, (3) A+, (4) ABAP, (5) Action, (6) Action Script, (7) Action Oberon, (8) ACUCOBOL, (9) Ada, (10) ADbasic, (11) Adenine, (12) Agilent VEE, (13) AIMMS, (14) Aldor, (15) Alef, (16) Aleph, (17) ALGOL (ALGOL 60, ALGOL W, ALGOL 68), (18) Alice, (19) AML, (20) Amiga BASIC, (21) AMOS BASIC, (22) AMPL, (23) Angel Script, (24) ANSYS Parametric Design Language, (25) APL, (26) App Inventor, (27) Applied Type System, (28) Apple Script, (29) Arden-Syntax, (30) ARLA, (31) ASIC, (32) Atlas Transformation Language, (33) Autocoder, (34) Auto Hotkey, (35) AutoIt, (36) AutoLISP, (37) Automatically Programmed Tools (APT), (38) Avenue, (39) awk (awk, gawk, mawk, nawk). B: (1) B, (2) B-0, (3) BANCStar, (4) BASIC, see also the list of BASIC dialects, (5) Basic Calculator, (6) Batch, (7) Bash, (8) Basic Combined Programming Language (BCPL), (9) Bean Shell, (10) Befunge, (11) Beta (programming language), (12) BLISS (programming language), (13) Blitz Basic, (14) Boo, (15) Brainfuck, Brainfuck2D. C: (1) C, (2) C++, (3) C--, (4) C#, (5) C/AL, (6) Caml, see Objective CAML, (7) Ceylon, (8) C for graphics, (9) Chef, (10) CHILL, (11) Chuck (programming language), (12) CL, (13) Clarion, (14) Clean, (15) Clipper, (16) CLIPS, (20) Cluster, (21) Co-array Fortran, (22) COBOL, (23) Cobra, (24) Coffee Script, (25) COMAL, (26) Cω, (27) COMIT, (28) Common Lisp, (29) Component Pascal, (30) Comskee, (31) CONZEPT 16, (32) CPL, (33) CURL, (34) Curry, (35) -

Types of Software Testing

Types of Software Testing Software testing is a way of assessing a software product to identify differences between the given input and the expected result, and to evaluate the characteristics of the product. The testing process evaluates the quality of the software. You know what testing does, so there is no need to explain further. But are you aware of the types of testing? It’s indeed a sea. Before we get to the types, let’s have a look at the standards that need to be maintained. Standards of Testing Every test should meet the user requirements. Exhaustive testing isn’t feasible, so the right amount of testing is decided by a risk evaluation of the application. Every test to be conducted ought to be planned before executing it. Testing follows the 80/20 rule, which states that 80% of defects originate from 20% of program parts. Start testing with small parts and extend it to larger components. Software testers know about the different sorts of software testing. In this article, we have included most of the types of software testing that testers, developers, and QA teams use in their everyday testing life. Let’s understand them! Black box Testing Black box testing is a category of strategy that disregards the internal mechanism of the system and focuses on the output generated against any input and on the performance of the system. -

Digital Rights Management and the Process of Fair Use Timothy K

University of Cincinnati College of Law University of Cincinnati College of Law Scholarship and Publications Faculty Articles and Other Publications Faculty Scholarship 1-1-2006 Digital Rights Management and the Process of Fair Use Timothy K. Armstrong University of Cincinnati College of Law Follow this and additional works at: http://scholarship.law.uc.edu/fac_pubs Part of the Intellectual Property Commons Recommended Citation Armstrong, Timothy K., "Digital Rights Management and the Process of Fair Use" (2006). Faculty Articles and Other Publications. Paper 146. http://scholarship.law.uc.edu/fac_pubs/146 This Article is brought to you for free and open access by the Faculty Scholarship at University of Cincinnati College of Law Scholarship and Publications. It has been accepted for inclusion in Faculty Articles and Other Publications by an authorized administrator of University of Cincinnati College of Law Scholarship and Publications. For more information, please contact [email protected]. Harvard Journal of Law & Technology Volume 20, Number 1 Fall 2006 DIGITAL RIGHTS MANAGEMENT AND THE PROCESS OF FAIR USE Timothy K. Armstrong* TABLE OF CONTENTS: I. INTRODUCTION: LEGAL AND TECHNOLOGICAL PROTECTIONS FOR FAIR USE OF COPYRIGHTED WORKS; II. COPYRIGHT LAW AND/OR DIGITAL RIGHTS MANAGEMENT; A. Traditional Copyright: The Normative Baseline; B. Contemporary Copyright: DRM as a "Speedbump" to Slow Mass Infringement -

IEC 61850: Role of Conformance Testing in Successful Integration

IEC 61850: Role of Conformance Testing in Successful Integration Eric A. Udren, KEMA T&D Consulting Dave Dolezilek, Schweitzer Engineering Laboratories, Inc. Abstract: The IEC 61850 Standard, Communications Networks and Systems in Substations, provides an internationally recognized method of local and wide area data communications for substation and system-wide protective relaying, integration, control, monitoring, metering, and testing. It has built-in capability for high-speed control and data sharing over the communications network, eliminating most dedicated control wiring as well as dedicated communications channels among substations. IEC 61850 facilitates systems built from multiple vendors’ IEDs. Many vendors have supported the standard throughout its creation, and they are developing products to handle all the needed functions. This paper is the third in a series on the evolution of IEC 61850. It focuses on the purpose and value of conformance testing and certification. IEC 61850 is aimed at making it easy for utilities to install and integrate single-vendor or multivendor control and protection systems in substations and to integrate existing communications. … a single standard solution for communications integration having high-level capabilities not available from protocols in prior use. The most important technical objectives were: 1. Use self-description and object modeling technology to simplify the integration and configuration process for the user. 2. Dramatically increase the functional capabilities, sophistication and complexity of the integration to meet users’ ultimate relaying, control, and enterprise data integration needs. 3. Incorporate robust, very high-speed control communications messaging that can operate among relays and other IEDs to eliminate panel wiring and controls. -

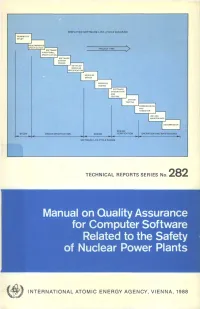

Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants

[Figure: simplified software life-cycle diagram showing the software life-cycle phases against project time.] TECHNICAL REPORTS SERIES No. 282 Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1988 MANUAL ON QUALITY ASSURANCE FOR COMPUTER SOFTWARE RELATED TO THE SAFETY OF NUCLEAR POWER PLANTS The following States are Members of the International Atomic Energy Agency: AFGHANISTAN, ALBANIA, ALGERIA, ARGENTINA, AUSTRALIA, AUSTRIA, BANGLADESH, BELGIUM, BOLIVIA, BRAZIL, BULGARIA, BURMA, BYELORUSSIAN SOVIET SOCIALIST REPUBLIC, CAMEROON, CANADA, CHILE, CHINA, COLOMBIA, COSTA RICA, COTE D'IVOIRE, CUBA, CYPRUS, CZECHOSLOVAKIA, DEMOCRATIC KAMPUCHEA, DEMOCRATIC PEOPLE'S REPUBLIC OF KOREA; GUATEMALA, HAITI, HOLY SEE, HUNGARY, ICELAND, INDIA, INDONESIA, IRAN (ISLAMIC REPUBLIC OF), IRAQ, IRELAND, ISRAEL, ITALY, JAMAICA, JAPAN, JORDAN, KENYA, KOREA (REPUBLIC OF), KUWAIT, LEBANON, LIBERIA, LIBYAN ARAB JAMAHIRIYA, LIECHTENSTEIN, LUXEMBOURG, MADAGASCAR, MALAYSIA, MALI, MAURITIUS; PARAGUAY, PERU, PHILIPPINES, POLAND, PORTUGAL, QATAR, ROMANIA, SAUDI ARABIA, SENEGAL, SIERRA LEONE, SINGAPORE, SOUTH AFRICA, SPAIN, SRI LANKA, SUDAN, SWEDEN, SWITZERLAND, SYRIAN ARAB REPUBLIC, THAILAND, TUNISIA, TURKEY, UGANDA, UKRAINIAN SOVIET SOCIALIST REPUBLIC, UNION OF SOVIET SOCIALIST REPUBLICS, UNITED ARAB -

A Framework for Evaluating Performance of Software Testing Tools

INTERNATIONAL JOURNAL OF SCIENTIFIC & TECHNOLOGY RESEARCH VOLUME 9, ISSUE 02, FEBRUARY 2020 ISSN 2277-8616 A Framework For Evaluating Performance Of Software Testing Tools Pramod Mathew Jacob, Prasanna Mani Abstract: Software plays a pivotal role in this technology era. Due to its increased applicable domains, quality of the software being developed is to be monitored and controlled. Software organization follows many testing methodologies to perform quality management. Testing methodologies can be either manual or automated. Automated testing tools got massive acceptability among software professionals due to its enhanced features and functionalities than that of manual testing. There are hundreds of test automation tools available, among which some perform exceptionally well. Due to the availability of large set of tools, it is a herculean task for the project manager to choose the appropriate automation tool, which is suitable for their project domain. In this paper, we derive a software testing tool selection model which evaluates the performance aspects of a script-based testing tool. Experimental evaluation proves that, it can be used to compare and evaluate various performance characteristics of commercially accepted test automation tools based on user experience as well as system performance. Index Terms: Automated testing, Software testing, Test script, Testing Tool, Test bed, Verification and Validation. 1 INTRODUCTION SOFTWARE is advanced in recent days by enhancing its applicable domains. Software is embedded in almost all electronic gadgets and systems. In this scenario the quality of the software plays a significant role. The customer or end-user should be satisfied which is primarily depended on quality and capability of the software being developed. -

Website Testing

CHAPTER 14 Website Testing IN THIS CHAPTER • Web Page Fundamentals • Black-Box Testing • Gray-Box Testing • White-Box Testing • Configuration and Compatibility Testing • Usability Testing • Introducing Automation The testing techniques that you’ve learned in previous chapters have been fairly generic. They’ve been presented by using small programs such as Windows WordPad, Calculator, and Paint to demonstrate testing fundamentals and how to apply them. This final chapter of Part III, “Applying Your Testing Skills,” is geared toward testing a specific type of software: Internet web pages. It’s a fairly timely topic, something that you’re likely familiar with, and a good real-world example to apply the techniques that you’ve learned so far. What you’ll find in this chapter is that website testing encompasses many areas, including configuration testing, compatibility testing, usability testing, documentation testing, security, and, if the site is intended for a worldwide audience, localization testing. Of course, black-box, white-box, static, and dynamic testing are always a given. This chapter isn’t meant to be a complete how-to guide for testing Internet websites, but it will give you a straightforward practical example of testing something real and give you a good head start if your first job happens to be looking for bugs in someone’s website. 
Highlights of this chapter include • What fundamental parts of a web page need testing • What basic white-box and black-box techniques apply to web page testing • How configuration and compatibility testing apply • Why usability testing is the primary concern of web pages • How to use tools to help test your website Web Page Fundamentals In the simplest terms, Internet web pages are just documents of text, pictures, sounds, video, and hyperlinks, much like the CD-ROM multimedia titles that were popular in the mid 1990s. -

Contents at a Glance

Contents at a Glance: Introduction; Part I: Programming a Computer; Chapter 1: Learning Computer Programming for the First Time; Chapter 2: All about Programming Languages; Chapter 3: How to Write a Program; Chapter 4: The Tools of a Computer Programmer; Part II: The Building Blocks of Programming; Chapter 5: Getting Started; Chapter 6: The Structure of a Computer Program; Chapter 7: Variables, Constants, and Comments; Chapter 8: Crunching Numbers and Playing with Strings; Chapter 9: Making Decisions with Branching Statements; Chapter 10: Repeating Yourself with Loops; Chapter 11: Dividing a Program into Subprograms; Chapter 12: Storing Stuff in Arrays; Chapter -

Michael Bolton in NZ Pinheads, from the Testtoolbox, Kiwi Test Teams & More!

NZTester The Quarterly Magazine for the New Zealand Software Testing Community and Supporters ISSUE 4 JUL - SEP 2013 FREE In this issue: Interview with Bryce Day of Catch Testing at ikeGPS Five Behaviours of a Highly Effective Time Lord Tester On the Road Again Hiring Testers Michael Bolton in NZ Pinheads, From the TestToolbox, Kiwi Test Teams & more! NZTester Magazine Editor: Geoff Horne [email protected] [email protected] ph. 021 634 900 P O Box 48-018 Blockhouse Bay Auckland 0600 New Zealand www.nztester.co.nz Advertising Enquiries: [email protected] Disclaimer: Articles and advertisements contained in NZTester Magazine are published in good faith and although provided by people who are experts in their fields, NZTester make no guarantees or representations of any kind concerning the accuracy or suitability of the information contained within or the suitability of products and services advertised for any and all specific applications and uses. All such information is provided “as is” and with specific disclaimer of any warranties of merchantability, fitness for purpose, title and/or non-infringement. The opinions and writings of all authors and contributors to NZTester are merely an expression of the author’s own thoughts, knowledge or information that they have gathered for publication. NZTester does not endorse such authors, necessarily agree with opinions and views expressed nor represents that writings are accurate or suitable for any purpose whatsoever. As a reader of this magazine you disclaim and hold NZTester, its employees and agents and Geoff Horne, its owner, editor and publisher, harmless of all content contained in this magazine as to its warranty of merchantability, fitness for purpose, title and/or non-infringement.