Mathematics of Language



Recommended publications

Extended Finite Automata over Groups

Extended finite automata over groups. Victor Mitrana, Ralf Stiebe. Abstract: Some results from Dassow and Mitrana (Internat. J. Comput. Algebra (2000)), Greibach (Theoret. Comput. Sci. 7 (1978) 311) and Ibarra et al. (Theoret. Comput. Sci. 2 (1976) 271) are generalized for finite automata over arbitrary groups. The closure properties of these automata are poorer and the accepting power is smaller when abelian groups are considered. We prove that the addition of any abelian group to a finite automaton is less powerful than the addition of the multiplicative group of rational numbers. Thus, each language accepted by a finite automaton over an abelian group is actually an unordered vector language. Characterizations of the context-free and recursively enumerable language classes are set up in the case of non-abelian groups. We also investigate deterministic finite automata over groups, especially over abelian groups. Keywords: Finite automata over groups; Closure properties; Accepting capacity; Interchange lemma; Free groups. 1. Introduction. One of the oldest and most investigated devices in automata theory is the finite automaton. Many fundamental properties have been established and many problems are still open. Unfortunately, finite automata without any external control have a very limited accepting power. Different directions of research have been considered for overcoming this limitation. The best-known extension added to finite automata is the pushdown memory. In this way, a considerable increase in accepting capacity has been achieved: pushdown automata are able to recognize all context-free languages. Another simple and natural extension, related somehow to the pushdown memory, was considered in a series of papers [2,4,5], namely to associate an element of a given group to each configuration, but no information regarding the associated element is allowed.

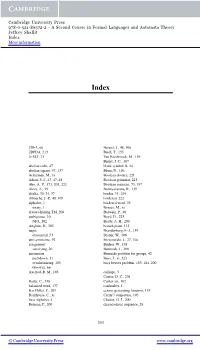

A Second Course in Formal Languages and Automata Theory, Jeffrey Shallit: Index

Cambridge University Press, 978-0-521-86572-2. A Second Course in Formal Languages and Automata Theory, Jeffrey Shallit. Index.

2DFA, 66; 2DPDA, 213; 3-SAT, 21.

abelian cube, 47; abelian square, 47, 137; Ackerman, M., xi; Adian, S. I., 43, 47, 48; Aho, A. V., 173, 201, 223; Alces, A., 29; alfalfa, 30, 34, 37; Allouche, J.-P., 48, 105; alphabet, 1 (unary, 1); always-halting TM, 209; ambiguous, 10 (NFA, 102); Angluin, D., 105; angst (existential, 54); antisymmetric, 92; assignment (satisfying, 20); automaton (pushdown, 11; synchronizing, 105; two-way, 66); Axelrod, R. M., 105.

Bader, C., 138; balanced word, 137; Bar-Hillel, Y., 201; Barkhouse, C., xi; base alphabet, 4; Berman, P., 200; Berstel, J., 48, 106; Biedl, T., 135; Van Biesbrouck, M., 139; Birget, J.-C., 107; blank symbol, B, 14; Blum, N., 106; Boolean closure, 221; Boolean grammar, 223; Boolean matrices, 73, 197; Boonyavatana, R., 139; border, 35, 104; bordered, 222; bordered word, 35; Borges, M., xi; Borwein, P., 48; Boyd, D., 223; Brady, A. H., 200; branch point, 113; Brandenburg, F.-J., 139; Brauer, W., 106; Brzozowski, J., 27, 106; Bucher, W., 138; Buntrock, J., 200; Burnside problem for groups, 42; Buss, J., xi, 223; busy beaver problem, 183, 184, 200.

calliope, 3; Cantor, D. C., 201; Cantor set, 102; cardinality, 1; census generating function, 133; Cerny’s conjecture, 105; Chaitin, G. J., 200; characteristic sequence, 28; chess, ...

Applications of an Infinite Square-Free Co-CFL

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Elsevier - Publisher Connector. Theoretical Computer Science 49 (1987) 113-119, North-Holland. APPLICATIONS OF AN INFINITE SQUARE-FREE CO-CFL. Michael G. MAIN, Department of Computer Science, University of Colorado, Boulder, CO 80309, U.S.A.; Walter BUCHER, Institutes for Information Processing, Technical University of Graz, A-8010 Graz, Austria; David HAUSSLER, Department of Mathematics and Computer Science, University of Denver, Denver, CO 80208, U.S.A. Abstract. We disprove several conjectures about context-free languages. The proofs use the set of all strings which are not prefixes of Thue’s infinite square-free sequence. This is a context-free language with an infinite square-free complement. 1. Introduction. A square is an immediately repeated nonempty string, e.g., aa, abab, newyorknewyork. A string x is called square-containing if it contains a substring which is a square. For example, the string mississippi contains the segment iss twice in a row; in fact, mississippi contains a total of five squares. On the other hand, colorado contains no squares (the character o appears several times, but not consecutively). A string without squares is called square-free. In a study of context-free languages, Autebert et al. [2,3] collected several conjectures about square-containing strings. One of the weakest conjectures was that no context-free language (CFL) could include all of the square-containing strings and still have an infinite complement. Contrary to this conjecture, such a language has recently been constructed [14].
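The definition of a square in this excerpt is easy to check by brute force. The following is a minimal sketch, not taken from the paper: the function names `squares` and `is_square_free` are illustrative, and occurrences are counted by position, so the two separate occurrences of ss in mississippi count as two squares.

```python
def squares(s):
    """List every (start, half) such that s[start:start + 2*half] is a square,
    i.e. a nonempty block of length `half` immediately followed by itself."""
    found = []
    n = len(s)
    for half in range(1, n // 2 + 1):
        for start in range(n - 2 * half + 1):
            first = s[start:start + half]
            second = s[start + half:start + 2 * half]
            if first == second:
                found.append((start, half))
    return found


def is_square_free(s):
    """A string is square-free when it contains no square at all."""
    return not squares(s)


if __name__ == "__main__":
    # Print each square occurrence found in the excerpt's example word.
    for start, half in squares("mississippi"):
        print(start, "mississippi"[start:start + 2 * half])
    print(is_square_free("colorado"))
```

This quadratic-number-of-substrings scan is only meant to make the definition concrete; it says nothing about the context-free construction discussed in the paper.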

On the Language of Primitive Words

Theoretical Computer Science 161 (1996) 141-156, Elsevier. On the language of primitive words. H. Petersen, Universität Stuttgart, Institut für Informatik, Breitwiesenstr. 20-22, D-70565 Stuttgart, Germany. Received April 1994; revised June 1995. Communicated by P. Enjalbert. Abstract. A word is primitive if it is not a proper power of a shorter word. We prove that the set Q of primitive words over an alphabet is not an unambiguous context-free language. This strengthens the previous result that Q cannot be deterministic context-free. Further we show that the same holds for the set L of Lyndon words. We investigate the complexity of Q and L. We show that there are families of constant-depth, polynomial-size, unbounded fan-in boolean circuits and two-way deterministic pushdown automata recognizing both languages. The latter result implies efficient decidability on the RAM model of computation and we analyze the number of comparisons required for deciding Q. Finally we give a new proof showing a related language not to be context-free, which only relies on properties of semi-linear and regular sets. 1. Introduction. This paper presents some further steps towards characterizing the set of primitive words over some fixed alphabet in terms of classical formal language theory and complexity. The notion of primitivity of words plays a central role in algebraic coding theory [28] and combinatorial theory of words [23,24]. Recently attention has been drawn to the language Q of all primitive words over an alphabet with at least two symbols.
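The definition of primitivity can be tested directly. The sketch below is an illustration rather than the paper's method: `is_primitive_naive` follows the definition literally, while `is_primitive` uses the well-known fact that a nonempty word w is primitive exactly when w occurs in ww only as a prefix and as a suffix. Treating the empty word as non-primitive is a convention assumed here.

```python
def is_primitive_naive(w):
    """Follow the definition: w is primitive unless w = u^k for some
    shorter word u and some k >= 2."""
    n = len(w)
    if n == 0:
        return False  # convention assumed for this sketch
    for period in range(1, n):
        if n % period == 0 and w[:period] * (n // period) == w:
            return False
    return True


def is_primitive(w):
    """Same predicate via the rotation trick: a nonempty w is primitive
    iff w does not occur strictly inside w + w."""
    if not w:
        return False
    return w not in (w + w)[1:-1]


if __name__ == "__main__":
    for word in ["a", "ab", "abab", "aba", "aaa", "abaab"]:
        print(word, is_primitive(word))
```

Both functions decide membership in Q for a single word; the results quoted in the abstract concern which language classes contain Q as a whole, which is a different question.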

Pattern Selector Grammars and Several Parsing Algorithms in the Context-Free Style

JOURNAL OF COMPUTER AND SYSTEM SCIENCES 30, 249-273 (1985). Pattern Selector Grammars and Several Parsing Algorithms in the Context-free Style. J. GONCZAROWSKI AND E. SHAMIR, Institute of Mathematics and Computer Science, The Hebrew University of Jerusalem, Jerusalem 91904, Israel. Received March 30, 1982; accepted March 5, 1985. Pattern selector grammars are defined in general. We concentrate on the study of special grammars, the pattern selectors of which contain precisely k “ones” (0*(10*)^k) or k adjacent “ones” (0*1^k 0*). This means that precisely k symbols (resp. k adjacent symbols) in each sentential form are rewritten. The main results concern parsing algorithms and the complexity of the membership problem. We first obtain a polynomial bound on the shortest derivation and hence an NP time bound for parsing. In the case k = 2, we generalize the well-known context-free dynamic programming type algorithms, which run in polynomial time. It is shown that the generated languages, for k = 2, are log-space reducible to the context-free languages. The membership problem is thus solvable in log^2 space. © 1985 Academic Press, Inc. 1. INTRODUCTION. The parsing and membership testing algorithms for context-free (CF) grammars occupy a peculiar border position in the complexity hierarchy. The dynamic programming algorithm runs in O(n^3) steps [21], but more refined methods reduce the problem to matrix multiplication with O(n^(2+a)) run-time, where a < 1 [20]. Earley’s algorithm runs in time O(n^2) for grammars with bounded ambiguity [4].
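The cubic dynamic programming algorithm mentioned in the introduction is commonly presented as the CYK table-filling procedure for grammars in Chomsky normal form. The sketch below shows that plain context-free version only; it is not the paper's generalization to pattern selector grammars, and the grammar encoding (`unary`, `binary`) and the example grammar for {a^n b^n : n ≥ 1} are assumptions made for illustration.

```python
def cyk(word, unary, binary, start="S"):
    """Membership test for a grammar in Chomsky normal form.

    unary:  dict mapping a terminal to the set of nonterminals A with A -> terminal
    binary: dict mapping a pair (B, C) to the set of nonterminals A with A -> B C
    The three nested loops over length, start position and split point give
    the O(n^3) behaviour (for a fixed grammar).
    """
    n = len(word)
    if n == 0:
        return False  # this sketch does not handle the empty word
    table = {}
    for i, ch in enumerate(word):
        table[i, 1] = set(unary.get(ch, ()))
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            cell = set()
            for split in range(1, length):
                for b in table[i, split]:
                    for c in table[i + split, length - split]:
                        cell |= binary.get((b, c), set())
            table[i, length] = cell
    return start in table[0, n]


# Hypothetical example grammar: S -> A B | A T, T -> S B, A -> a, B -> b
UNARY = {"a": {"A"}, "b": {"B"}}
BINARY = {("A", "B"): {"S"}, ("A", "T"): {"S"}, ("S", "B"): {"T"}}

if __name__ == "__main__":
    for w in ["ab", "aabb", "abab", "aab"]:
        print(w, cyk(w, UNARY, BINARY))
```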

Comparisons of Parikh's Condition

J. Lopez, G. Ramos, and R. Morales, "Comparación de la Condición de Parikh con algunas Condiciones de los Lenguajes de Contexto Libre", II Jornadas de Informática y Automática, pp. 305-314, 1996. NICS Lab. Publications: https://www.nics.uma.es/publications. COMPARISONS OF PARIKH’S CONDITION TO OTHER CONDITIONS FOR CONTEXT-FREE LANGUAGES. G. Ramos-Jiménez, J. López-Muñoz and R. Morales-Bueno, E.T.S. de Ingeniería Informática - Universidad de Málaga, Dpto. Lenguajes y Ciencias de la Computación, P.O.B. 4114, 29080 - Málaga (SPAIN). Abstract: In this paper we first compare Parikh’s condition to various pumping conditions - Bar-Hillel’s pumping lemma, Ogden’s condition and Bader-Moura’s condition; secondly, to interchange condition; and finally, to Sokolowski’s and Grant’s conditions. In order to carry out these comparisons we present some properties of Parikh’s languages. The main result is the orthogonality of the previously mentioned conditions and Parikh’s condition. Keywords: Context-Free Languages, Parikh’s Condition, Pumping Lemmas, Interchange Condition, Sokolowski’s and Grant’s Condition. 1. INTRODUCTION. The context-free grammars and the family of languages they describe, context free languages, were initially defined to formalize the grammatical properties of natural languages. Afterwards, their considerable practical importance was noticed, especially for defining programming languages, formalizing the notion of parsing, simplifying the translation of programming languages and in other string-processing applications. It’s very useful to discover the internal structure of a formal language class during its study. The determination of structural properties allows us to increase our knowledge about this language class.
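The excerpt does not define Parikh's condition, but it is stated in terms of the Parikh vector of a word, which records how often each letter occurs and forgets the order. The sketch below shows only that standard mapping; the function name and the fixed alphabet ordering are assumptions made for illustration.

```python
from collections import Counter


def parikh_vector(word, alphabet):
    """Return the tuple of letter counts of `word`, ordered as in `alphabet`."""
    counts = Counter(word)
    return tuple(counts[letter] for letter in alphabet)


if __name__ == "__main__":
    # Words with the same letters in a different order share a Parikh vector.
    print(parikh_vector("aabb", "ab"))
    print(parikh_vector("abab", "ab"))
    print(parikh_vector("abc", "ab"))  # letters outside the alphabet are ignored
```

Because the mapping discards order, two quite different languages can have the same set of Parikh vectors, which is one reason a condition based on it can be independent of pumping-style conditions.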

Extended Finite Automata Over Groups

Discrete Applied Mathematics 108 (2001) 287-300. Extended finite automata over groups. Victor Mitrana (a), Ralf Stiebe (b). (a) Faculty of Mathematics, University of Bucharest, Str. Academiei 14, 70109, Bucharest, Romania. (b) Faculty of Computer Science, University of Magdeburg, P.O. Box 4120, D-39016, Magdeburg, Germany. Received 18 November 1998; revised 4 October 1999; accepted 31 January 2000. Abstract. Some results from Dassow and Mitrana (Internat. J. Comput. Algebra (2000)), Greibach (Theoret. Comput. Sci. 7 (1978) 311) and Ibarra et al. (Theoret. Comput. Sci. 2 (1976) 271) are generalized for finite automata over arbitrary groups. The closure properties of these automata are poorer and the accepting power is smaller when abelian groups are considered. We prove that the addition of any abelian group to a finite automaton is less powerful than the addition of the multiplicative group of rational numbers. Thus, each language accepted by a finite automaton over an abelian group is actually an unordered vector language. Characterizations of the context-free and recursively enumerable language classes are set up in the case of non-abelian groups. We also investigate deterministic finite automata over groups, especially over abelian groups. © 2001 Elsevier Science B.V. All rights reserved. Keywords: Finite automata over groups; Closure properties; Accepting capacity; Interchange lemma; Free groups. 1. Introduction. One of the oldest and most investigated devices in automata theory is the finite automaton. Many fundamental properties have been established and many problems are still open.
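To make the role of the multiplicative group of rational numbers concrete, here is a small sketch of a deterministic finite automaton whose transitions also multiply a rational register, accepting when it ends in a final state with the register back at 1. The automaton, its state numbering and the choice of factors are hypothetical and are not taken from the paper; the example recognizes {a^n b^n c^n : n ≥ 1}, which is not context-free.

```python
from fractions import Fraction

# Hypothetical automaton: states 0, 1, 2 enforce the shape a* b+ c+,
# and each transition multiplies the register by a rational number.
# Reading a contributes 2 * 3, reading b removes a factor 2, reading c a factor 3.
TRANSITIONS = {
    (0, "a"): (0, Fraction(6)),
    (0, "b"): (1, Fraction(1, 2)),
    (1, "b"): (1, Fraction(1, 2)),
    (1, "c"): (2, Fraction(1, 3)),
    (2, "c"): (2, Fraction(1, 3)),
}
FINAL_STATES = {2}


def accepts(word):
    """Accept iff the run ends in a final state and the register equals 1."""
    state, register = 0, Fraction(1)
    for ch in word:
        step = TRANSITIONS.get((state, ch))
        if step is None:
            return False  # no transition defined: reject
        state, factor = step
        register *= factor
    return state in FINAL_STATES and register == 1


if __name__ == "__main__":
    for w in ["abc", "aabbcc", "aabbc", "abcc", "acb"]:
        print(w, accepts(w))
```

The register returns to 1 only when the powers of 2 and of 3 both cancel, which forces the numbers of a's, b's and c's to agree; the automaton never inspects the register during the run, matching the restriction that no information about the group element is available.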

Position-And-Length-Dependent Context-Free Grammars – a New Type of Restricted Rewriting

Position-and-Length-Dependent Context-Free Grammars – A New Type of Restricted Rewriting. [Title-page diagram with class labels: REG, fREG, eREG, feREG, fedREG, CFL, fCFL, lCFL, eCFL, flCFL, feCFL, leCFL, fldCFL, fedCFL, ledCFL, fleCFL, fledCFL.] Dissertation by Frank Weinberg, approved by the Department of Computer Science of the Technische Universität Kaiserslautern for the award of the academic degree Doktor der Naturwissenschaften (Dr. rer. nat.). Dean: Prof. Dr. Klaus Schneider. Chair of the examination committee: Prof. Dr. Hans Hagen. First reviewer: Prof. Dr. Markus Nebel. Second reviewer: Prof. Dr. Henning Fernau. Date of the defense: 3 March 2014. D 386. Abstract. For many decades, the search for language classes that extend the context-free languages enough to include various languages that arise in practice, while still keeping as many of the useful properties that context-free grammars have – most notably cubic parsing time – has been one of the major areas of research in formal language theory. In this thesis we add a new family of classes to this field, namely position-and-length-dependent context-free grammars. Our classes use the approach of regulated rewriting, where derivations in a context-free base grammar are allowed or forbidden based on, e.g., the sequence of rules used in a derivation or the sentential forms each rule is applied to. For our new classes we look at the yield of each rule application, i.e. the subword of the final word that eventually is derived from the symbols introduced by the rule application. The position and length of the yield in the final word define the position and length of the rule application, and each rule is associated with a set of positions and lengths where it is allowed to be applied.
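The idea of tying each rule application to the position and length of its yield can be sketched on a derivation tree. Everything concrete below is an assumption made for illustration and may differ from the thesis's formal definitions: trees are nested tuples `(rule_name, children)` with terminal strings as leaves, positions are 0-based, and the allowed sets are given as predicates on `(position, length)`.

```python
def yield_length_if_allowed(tree, allowed, start=0):
    """Return the length of the tree's yield if every rule application in it
    is permitted at its (position, length); return None otherwise."""
    if isinstance(tree, str):          # a terminal leaf
        return len(tree)
    rule, children = tree
    pos = start
    for child in children:
        length = yield_length_if_allowed(child, allowed, pos)
        if length is None:
            return None
        pos += length
    total = pos - start                # length of this rule application's yield
    return total if allowed[rule](start, total) else None


# Hypothetical base grammar: r1 = S -> a S b, r2 = S -> a b.
# r1 may be applied anywhere; r2 only when its yield starts at an even position.
ALLOWED = {
    "r1": lambda position, length: True,
    "r2": lambda position, length: position % 2 == 0,
}

TREE_AB = ("r2", ["a", "b"])
TREE_AABB = ("r1", ["a", ("r2", ["a", "b"]), "b"])
TREE_AAABBB = ("r1", ["a", ("r1", ["a", ("r2", ["a", "b"]), "b"]), "b"])

if __name__ == "__main__":
    for tree in (TREE_AB, TREE_AABB, TREE_AAABBB):
        print(yield_length_if_allowed(tree, ALLOWED))
```

With these example restrictions the derivation of aabb is rejected because the innermost rule's yield starts at position 1, so the restricted grammar generates only a^n b^n with n odd.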

On the Succinctness Properties of Unordered Context-Free Grammars

ON THE SUCCINCTNESS PROPERTIES OF UNORDERED CONTEXT-FREE GRAMMARS. M. Drew Moshier and William C. Rounds, Electrical Engineering and Computer Science Department, University of Michigan, Ann Arbor, Michigan 48109.

1 Abstract. We prove in this paper that unordered, or ID/LP grammars, are exponentially more succinct than context-free grammars, by exhibiting a sequence (L_n) of finite languages such that the size of any CFG for L_n must grow exponentially in n, but which can be described by polynomial-size ID/LP grammars. The results have implications for the description of free word order languages.

2 Introduction. Context free grammars in immediate dominance and linear precedence format were used in GPSG [3] as a skeleton for metarule generation and feature checking.

[Fragment from the facing column:] ...ally in all cases. (Unfortunately, this does not solve the P = NP question!) Barton's reduction took a known NP-complete problem, the vertex-cover problem, and reduced it to the URP for ID/LP. The reduction makes crucial use of grammars whose production size can be arbitrarily large. Define the fan-out of a grammar to be the largest total number of symbol occurrences on the right hand side of any production. For a CFG, this would be the maximum length of any RHS; for an ID/LP grammar, we would count symbols and their multiplicities. Barton's reduction does the following. For each instance of the vertex cover problem, of size n, he constructs a string w and an ID/LP grammar of fanout proportional to n such that the instance has a vertex cover if and only if the string is generated by the
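One way to see where the succinctness gap comes from is to expand a single unordered (immediate dominance) rule into the ordered right-hand sides it stands for. The sketch below is an illustration under the usual reading of ID/LP rules, not the paper's construction: the right-hand side is a multiset of symbols, and a linear precedence pair `(x, y)` means every x must come before every y among sisters. The function names are hypothetical.

```python
from itertools import permutations


def respects_lp(order, lp):
    """True if no linear precedence constraint is violated in `order`.
    A violation is a later symbol that is required to precede an earlier one."""
    return not any(
        (order[j], order[i]) in lp
        for i in range(len(order))
        for j in range(i + 1, len(order))
    )


def expand_id_rule(rhs, lp=frozenset()):
    """Return the set of ordered right-hand sides licensed by one ID rule."""
    return {order for order in permutations(rhs) if respects_lp(order, lp)}


if __name__ == "__main__":
    # With no LP constraints, n distinct daughters give n! ordered rules.
    print(len(expand_id_rule(("a", "b", "c", "d"))))
    # One constraint "a before b" removes the orders that violate it.
    print(sorted(expand_id_rule(("a", "b", "c"), {("a", "b")})))
```

A single unconstrained ID rule with n distinct daughters corresponds to n! ordered productions in this naive expansion, which hints at why an equivalent CFG can be much larger; the paper's exponential lower bound is a stronger statement about every CFG for the language, not just this expansion.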

Languages Generated by Iterated Idempotencies (Klaus-Peter Leupold)

UNIVERSITAT ROVIRA I VIRGILI. LANGUAGES GENERATED BY ITERATED IDEMPOTENCIES. KLAUS-PETER LEUPOLD. ISBN: NÚM. 978-84-691-2653-0 / DL: T.1412-2008/73. Universitat Rovira i Virgili, Facultat de Lletres, Departament de Filologies Romàniques. Languages Generated by Iterated Idempotencies. PhD Dissertation presented by Peter LEUPOLD, supervised by Juhani KARHUMÄKI and Victor MITRANA. Tarragona, 2006. Supervisors: Professor Juhani Karhumäki, Department of Mathematics, University of Turku, 20014 Turku, Finland; and Professor Victor Mitrana, Grup de Recerca de Linguïstica Matemàtica, Universitat Rovira i Virgili, Pça. Imperial Tàrraco 1, 43005 Tarragona, Catalunya, Spain, and Faculty of Mathematics and Computer Science, Bucharest University, Str. Academiei 14, 70109 București, Romania. Foreword. Not being a big friend of rituals and formalities, I was thinking about leaving out the usual sermon of thank-yous that opens all the theses I have read. However, I now have strong doubts that I will explicitly express the gratitude I feel in the context of this thesis towards many people. Therefore I have decided to follow the tradition of listing them here anyway.

Comparisons of Parikh's Condition to Other Conditions for Context-Free Languages

G. Ramos, J. Lopez, and R. Morales, "Comparisons of Parikh's condition to other conditions for context-free languages", Theoretical Computer Science, vol. 202, pp. 231-244, 1998. NICS Lab. Publications: https://www.nics.uma.es/publications. Theoretical Computer Science 202 (1998) 231-244, Elsevier. Note. Comparisons of Parikh’s condition to other conditions for context-free languages. G. Ramos-Jiménez, J. López-Muñoz, R. Morales-Bueno, E.T.S. de Ingeniería Informática, Universidad de Málaga, Dpto. Lenguajes y Ciencias de la Computación, P.O.B. 4114, 29080-Málaga, Spain. Received May 1997; revised July 1997. Communicated by M. Nivat. Abstract. In this paper we first compare Parikh’s condition to various pumping conditions - Bar-Hillel’s pumping lemma, Ogden’s condition and Bader-Moura’s condition; secondly, to interchange condition; and finally, to Sokolowski’s and Grant’s conditions. In order to carry out these comparisons we present some properties of Parikh’s languages. The main result is the orthogonality of the previously mentioned conditions and Parikh’s condition. © 1998 Elsevier Science B.V. All rights reserved. Keywords: Context-free languages; Parikh’s condition; Pumping lemmas; Interchange condition; Sokolowski’s and Grant’s condition. 1. Introduction. The context-free grammars and the family of languages they describe, context free languages, were initially defined to formalize the grammatical properties of natural languages. Afterwards, their considerable practical importance was noticed, especially for defining programming languages, formalizing the notion of parsing, simplifying the translation of programming languages and in other string-processing applications. It is very useful to discover the internal structure of a formal language class during its study.

Generalized Results on Monoids As Memory

Generalized Results on Monoids as Memory. Özlem Salehi, Boğaziçi University, Department of Computer Engineering, Bebek 34342 İstanbul, Turkey. Flavio D’Alessandro, Università di Roma “La Sapienza”, Dipartimento di Matematica, Piazzale Aldo Moro 2, 00185 Roma, Italy, and Boğaziçi University, Department of Mathematics, Bebek 34342, İstanbul, Turkey. A. C. Cem Say, Boğaziçi University, Department of Computer Engineering, Bebek 34342 İstanbul, Turkey. We show that some results from the theory of group automata and monoid automata still hold for more general classes of monoids and models. Extending previous work for finite automata over commutative groups, we prove that the context-free language L_1^* = {a^n b^n : n ≥ 1}^* cannot be recognized by any rational monoid automaton over a finitely generated permutable monoid. We show that the class of languages recognized by rational monoid automata over finitely generated completely simple or completely 0-simple permutable monoids is a semi-linear full trio. Furthermore, we investigate valence pushdown automata, and prove that they are only as powerful as (finite) valence automata. We observe that certain results proven for monoid automata can be easily lifted to the case of context-free valence grammars. 1 Introduction. A group automaton is a nondeterministic finite automaton equipped with a register which holds an element of a group. The register is initialized with the identity element of the group, and modified by applying the group operation at each step. An input string is accepted if the register is equal to the identity element at the end of the computation.
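The definition of a group automaton in the introduction translates almost directly into a simulation that tracks every reachable (state, register) pair. The sketch below is an illustration under simplifying assumptions: there are no empty-word transitions, the accepting condition also requires a final state, and the example automaton over the integers under addition, together with all names used, is hypothetical.

```python
def accepts(word, transitions, start, finals, identity, op):
    """Simulate a nondeterministic group automaton.

    transitions maps (state, symbol) to a list of (next_state, group_element).
    The register starts at `identity`, is updated with `op` at each step, and
    the word is accepted if some run ends in a final state with the register
    equal to `identity` again.
    """
    configs = {(start, identity)}
    for ch in word:
        configs = {
            (next_state, op(register, element))
            for (state, register) in configs
            for (next_state, element) in transitions.get((state, ch), ())
        }
    return any(state in finals and register == identity
               for state, register in configs)


# Hypothetical automaton over (Z, +) for {a^n b^n : n >= 1}:
# each a adds 1, each b subtracts 1, and no a may follow a b.
Z_TRANSITIONS = {
    ("p", "a"): [("p", 1)],
    ("p", "b"): [("q", -1)],
    ("q", "b"): [("q", -1)],
}


def accepts_anbn(word):
    return accepts(word, Z_TRANSITIONS, "p", {"q"}, 0, lambda x, y: x + y)


if __name__ == "__main__":
    for w in ["ab", "aabb", "aab", "abab", ""]:
        print(w, accepts_anbn(w))
```

The same `accepts` function works for any group once `identity` and `op` are supplied, provided group elements are hashable and comparable for equality.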