Chess Board and Piece Recognition

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Combinatorics on the Chessboard

Combinatorics on the Chessboard Interactive game: 1. On regular chessboard a rook is placed on a1 (bottom-left corner). Players A and B take alternating turns by moving the rook upwards or to the right by any distance (no left or down movements allowed). Player A makes the rst move, and the winner is whoever rst reaches h8 (top-right corner). Is there a winning strategy for any of the players? Solution: Player B has a winning strategy by keeping the rook on the diagonal. Knight problems based on invariance principle: A knight on a chessboard has a property that it moves by alternating through black and white squares: if it is on a white square, then after 1 move it will land on a black square, and vice versa. Sometimes this is called the chameleon property of the knight. This is related to invariance principle, and can be used in problems, such as: 2. A knight starts randomly moving from a1, and after n moves returns to a1. Prove that n is even. Solution: Note that a1 is a black square. Based on the chameleon property the knight will be on a white square after odd number of moves, and on a black square after even number of moves. Therefore, it can return to a1 only after even number of moves. 3. Is it possible to move a knight from a1 to h8 by visiting each square on the chessboard exactly once? Solution: Since there are 64 squares on the board, a knight would need 63 moves to get from a1 to h8 by visiting each square exactly once. -

A Package to Print Chessboards

chessboard: A package to print chessboards Ulrike Fischer November 1, 2020 Contents 1 Changes 1 2 Introduction 2 2.1 Bugs and errors.....................................3 2.2 Requirements......................................4 2.3 Installation........................................4 2.4 Robustness: using \chessboard in moving arguments..............4 2.5 Setting the options...................................5 2.6 Saving optionlists....................................7 2.7 Naming the board....................................8 2.8 Naming areas of the board...............................8 2.9 FEN: Forsyth-Edwards Notation...........................9 2.10 The main parts of the board..............................9 3 Setting the contents of the board 10 3.1 The maximum number of fields........................... 10 1 3.2 Filling with the package skak ............................. 11 3.3 Clearing......................................... 12 3.4 Adding single pieces.................................. 12 3.5 Adding FEN-positions................................. 13 3.6 Saving positions..................................... 15 3.7 Getting the positions of pieces............................ 16 3.8 Using saved and stored games............................ 17 3.9 Restoring the running game.............................. 17 3.10 Changing the input language............................. 18 4 The look of the board 19 4.1 Units for lengths..................................... 19 4.2 Some words about box sizes.............................. 19 4.3 Margins......................................... -

53Rd WORLD CONGRESS of CHESS COMPOSITION Crete, Greece, 16-23 October 2010

53rd WORLD CONGRESS OF CHESS COMPOSITION Crete, Greece, 16-23 October 2010 53rd World Congress of Chess Composition 34th World Chess Solving Championship Crete, Greece, 16–23 October 2010 Congress Programme Sat 16.10 Sun 17.10 Mon 18.10 Tue 19.10 Wed 20.10 Thu 21.10 Fri 22.10 Sat 23.10 ICCU Open WCSC WCSC Excursion Registration Closing Morning Solving 1st day 2nd day and Free time Session 09.30 09.30 09.30 free time 09.30 ICCU ICCU ICCU ICCU Opening Sub- Prize Giving Afternoon Session Session Elections Session committees 14.00 15.00 15.00 17.00 Arrival 14.00 Departure Captains' meeting Open Quick Open 18.00 Solving Solving Closing Lectures Fairy Evening "Machine Show Banquet 20.30 Solving Quick Gun" 21.00 19.30 20.30 Composing 21.00 20.30 WCCC 2010 website: http://www.chessfed.gr/wccc2010 CONGRESS PARTICIPANTS Ilham Aliev Azerbaijan Stephen Rothwell Germany Araz Almammadov Azerbaijan Rainer Staudte Germany Ramil Javadov Azerbaijan Axel Steinbrink Germany Agshin Masimov Azerbaijan Boris Tummes Germany Lutfiyar Rustamov Azerbaijan Arno Zude Germany Aleksandr Bulavka Belarus Paul Bancroft Great Britain Liubou Sihnevich Belarus Fiona Crow Great Britain Mikalai Sihnevich Belarus Stewart Crow Great Britain Viktor Zaitsev Belarus David Friedgood Great Britain Eddy van Beers Belgium Isabel Hardie Great Britain Marcel van Herck Belgium Sally Lewis Great Britain Andy Ooms Belgium Tony Lewis Great Britain Luc Palmans Belgium Michael McDowell Great Britain Ward Stoffelen Belgium Colin McNab Great Britain Fadil Abdurahmanović Bosnia-Hercegovina Jonathan -

Issue 16, June 2019 -...CHESSPROBLEMS.CA

...CHESSPROBLEMS.CA Contents 1 Originals 746 . ISSUE 16 (JUNE 2019) 2019 Informal Tourney....... 746 Hors Concours............ 753 2 Articles 755 Andreas Thoma: Five Pendulum Retros with Proca Anticirce.. 755 Jeff Coakley & Andrey Frolkin: Multicoded Rebuses...... 757 Arno T¨ungler:Record Breakers VIII 766 Arno T¨ungler:Pin As Pin Can... 768 Arno T¨ungler: Circe Series Tasks & ChessProblems.ca TT9 ... 770 3 ChessProblems.ca TT10 785 4 Recently Honoured Canadian Compositions 786 5 My Favourite Series-Mover 800 6 Blast from the Past III: Checkmate 1902 805 7 Last Page 808 More Chess in the Sky....... 808 Editor: Cornel Pacurar Collaborators: Elke Rehder, . Adrian Storisteanu, Arno T¨ungler Originals: [email protected] Articles: [email protected] Chess drawing by Elke Rehder, 2017 Correspondence: [email protected] [ c Elke Rehder, http://www.elke-rehder.de. Reproduced with permission.] ISSN 2292-8324 ..... ChessProblems.ca Bulletin IIssue 16I ORIGINALS 2019 Informal Tourney T418 T421 Branko Koludrovi´c T419 T420 Udo Degener ChessProblems.ca's annual Informal Tourney Arno T¨ungler Paul R˘aican Paul R˘aican Mirko Degenkolbe is open for series-movers of any type and with ¥ any fairy conditions and pieces. Hors concours compositions (any genre) are also welcome! ! Send to: [email protected]. " # # ¡ 2019 Judge: Dinu Ioan Nicula (ROU) ¥ # 2019 Tourney Participants: ¥!¢¡¥£ 1. Alberto Armeni (ITA) 2. Rom´eoBedoni (FRA) C+ (2+2)ser-s%36 C+ (2+11)ser-!F97 C+ (8+2)ser-hsF73 C+ (12+8)ser-h#47 3. Udo Degener (DEU) Circe Circe Circe 4. Mirko Degenkolbe (DEU) White Minimummer Leffie 5. Chris J. Feather (GBR) 6. -

More About Checkmate

MORE ABOUT CHECKMATE The Queen is the best piece of all for getting checkmate because it is so powerful and controls so many squares on the board. There are very many ways of getting CHECKMATE with a Queen. Let's have a look at some of them, and also some STALEMATE positions you must learn to avoid. You've already seen how a Rook can get CHECKMATE XABCDEFGHY with the help of a King. Put the Black King on the side of the 8-+k+-wQ-+( 7+-+-+-+-' board, the White King two squares away towards the 6-+K+-+-+& middle, and a Rook or a Queen on any safe square on the 5+-+-+-+-% same side of the board as the King will give CHECKMATE. 4-+-+-+-+$ In the first diagram the White Queen checks the Black King 3+-+-+-+-# while the White King, two squares away, stops the Black 2-+-+-+-+" King from escaping to b7, c7 or d7. If you move the Black 1+-+-+-+-! King to d8 it's still CHECKMATE: the Queen stops the Black xabcdefghy King moving to e7. But if you move the Black King to b8 is CHECKMATE! that CHECKMATE? No: the King can escape to a7. We call this sort of CHECKMATE the GUILLOTINE. The Queen comes down like a knife to chop off the Black King's head. But there's another sort of CHECKMATE that you can ABCDEFGH do with a King and Queen. We call this one the KISS OF 8-+k+-+-+( DEATH. Put the Black King on the side of the board, 7+-wQ-+-+-' the White Queen on the next square towards the middle 6-+K+-+-+& and the White King where it defends the Queen and you 5+-+-+-+-% 4-+-+-+-+$ get something like our next diagram. -

Caissa Nieuws

rvd v CAISSA NIEUWS -,1. 374 Met een vraagtecken priJ"ken alleen die zetten, die <!-Cl'.1- logiSchcnloop der partij werkelijk ingrijpend vemol"en. Voor zichzelf·sprekende dreigingen zijn niet vermeld. · Ah, de klassieken ... april 1999 Caissanieuws 37 4 april 1999 Colofon Inhoud Redactioneel meer is, hij zegt toe uit de doeken te doen 4. Gerard analyseert de pijn. hoe je een eindspeldatabase genereert. CaïssaNieuws is het clubblad van de Wellicht is dat iets voor Ed en Leo, maar schaakvereniging Caïssa 6. Rikpareert Predrag. Dik opgericht 1-5-1951 6. Dennis komt met alle standen. verder ook voor elke Caïssaan die zijn of it is een nummer. Met een schaak haar spel wil verbeteren. Daar zijn we wel Clublokaal: Oranjehuis 8. Derde nipt naar zege. dik Van Ostadestraat 153 vrije avond en nog wat feestdagen in benieuwd naar. 10. Zesde langs afgrond. 1073 TKAmsterdam hetD vooruitzicht is dat wel prettig. Nog aan Tot slot een citaat uit de bespreking van Telefoon - clubavond: 679 55 59 dinsda_g_ 13. Maarten heeftnotatie-biljet op genamer is het te merken dat er mensen Robert Kikkert van een merkwaardig boek Voorzitter de rug. zijn die de moeite willen nemen een bij dat weliswaar de Grünfeld-verdediging als Frans Oranje drage aan het clubblad te leveren zonder onderwerp heeft, maar ogenschijnlijk ook Oudezijds Voorburgwal 109 C 15. Tuijl vloert Vloermans. 1012 EM Amsterdam 17. Johan ziet licht aan de horizon. dat daar om gevraagd is. Zo moet het! een ongebruikelijke visieop ons multi-culti Telefoon 020 627 70 17 18. Leo biedt opwarmertje. In deze aflevering van CN zet Gerard wereldje bevat en daarbij enpassant het we Wedstrijdleider interne com12etitie nauwgezet uiteen dat het beter kanen moet reldvoedselverdelingsvraagstukaan de orde Steven Kuypers 20. -

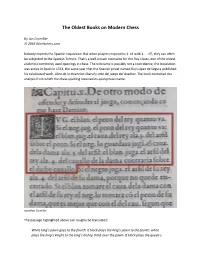

The Oldest Books on Modern Chess

The Oldest Books on Modern Chess By Jon Crumiller © 2016 Worldchess.com Nobody expects the Spanish Inquisition. But when players respond to 1. e4 with 1. … e5, they can often be subjected to the Spanish Torture. That’s a well-known nickname for the Ruy López, one of the oldest and most commonly used openings in chess. The nickname is possibly not a coincidence; the Inquisition was active in Spain in 1561, the same year that the Spanish priest named Ruy López de Segura published his celebrated work, Libro de la Invencion liberal y Arte del juego del Axedrez. The book contained the analysis from which the chess opening received its eponymous name. Jonathan Crumiller The passage highlighted above can roughly be translated: White king’s pawn goes to the fourth. If black plays the king’s pawn to the fourth: white plays the king’s knight to the king’s bishop third, over the pawn. If black plays the queen’s knight to queen’s bishop third: white plays the king’s bishop to the fourth square of the contrary queen’s knight, opposed to that knight. Or in our modern chess language: 1. e4, e5 2. Nf3, Nc6 3. Bb5. Jonathan Crumiller On the right is how the Ruy López opening would have looked four centuries ago with a standard chess set and board in Spain. This Spanish chess set is one of my oldest complete sets (along with a companion wooden set of the same era). Jonathan Crumiller Here is the Ruy López as seen with that companion set displayed on a Spanish chessboard, also from the 1600’s. -

Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25

Title: Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25 Introduction The UCS contains symbols for the game of chess in the Miscellaneous Symbols block. These are used in figurine notation, a common variation on algebraic notation in which pieces are represented in running text using the same symbols as are found in diagrams. While the symbols already encoded in Unicode are sufficient for use in the orthodox game, they are insufficient for many chess problems and variant games, which make use of extended sets. 1. Fairy chess problems The presentation of chess positions as puzzles to be solved predates the existence of the modern game, dating back to the mansūbāt composed for shatranj, the Muslim predecessor of chess. In modern chess problems, a position is provided along with a stipulation such as “white to move and mate in two”, and the solver is tasked with finding a move (called a “key”) that satisfies the stipulation regardless of a hypothetical opposing player’s moves in response. These solutions are given in the same notation as lines of play in over-the-board games: typically algebraic notation, using abbreviations for the names of pieces, or figurine algebraic notation. Problem composers have not limited themselves to the materials of the conventional game, but have experimented with different board sizes and geometries, altered rules, goals other than checkmate, and different pieces. Problems that diverge from the standard game comprise a genre called “fairy chess”. Thomas Rayner Dawson, known as the “father of fairy chess”, pop- ularized the genre in the early 20th century. -

Multilinear Algebra and Chess Endgames

Games of No Chance MSRI Publications Volume 29, 1996 Multilinear Algebra and Chess Endgames LEWIS STILLER Abstract. This article has three chief aims: (1) To show the wide utility of multilinear algebraic formalism for high-performance computing. (2) To describe an application of this formalism in the analysis of chess endgames, and results obtained thereby that would have been impossible to compute using earlier techniques, including a win requiring a record 243 moves. (3) To contribute to the study of the history of chess endgames, by focusing on the work of Friedrich Amelung (in particular his apparently lost analysis of certain six-piece endgames) and that of Theodor Molien, one of the founders of modern group representation theory and the first person to have systematically numerically analyzed a pawnless endgame. 1. Introduction Parallel and vector architectures can achieve high peak bandwidth, but it can be difficult for the programmer to design algorithms that exploit this bandwidth efficiently. Application performance can depend heavily on unique architecture features that complicate the design of portable code [Szymanski et al. 1994; Stone 1993]. The work reported here is part of a project to explore the extent to which the techniques of multilinear algebra can be used to simplify the design of high- performance parallel and vector algorithms [Johnson et al. 1991]. The approach is this: Define a set of fixed, structured matrices that encode architectural primitives • of the machine, in the sense that left-multiplication of a vector by this matrix is efficient on the target architecture. Formulate the application problem as a matrix multiplication. -

Super Human Chess Engine

SUPER HUMAN CHESS ENGINE FIDE Master / FIDE Trainer Charles Storey PGCE WORLD TOUR Young Masters Training Program SUPER HUMAN CHESS ENGINE Contents Contents .................................................................................................................................................. 1 INTRODUCTION ....................................................................................................................................... 2 Power Principles...................................................................................................................................... 4 Human Opening Book ............................................................................................................................. 5 ‘The Core’ Super Human Chess Engine 2020 ......................................................................................... 6 Acronym Algorthims that make The Storey Human Chess Engine ......................................................... 8 4Ps Prioritise Poorly Placed Pieces ................................................................................................... 10 CCTV Checks / Captures / Threats / Vulnerabilities ...................................................................... 11 CCTV 2.0 Checks / Checkmate Threats / Captures / Threats / Vulnerabilities ............................. 11 DAFiii Attack / Features / Initiative / I for tactics / Ideas (crazy) ................................................. 12 The Fruit Tree analysis process ............................................................................................................ -

Chess-Training-Guide.Pdf

Q Chess Training Guide K for Teachers and Parents Created by Grandmaster Susan Polgar U.S. Chess Hall of Fame Inductee President and Founder of the Susan Polgar Foundation Director of SPICE (Susan Polgar Institute for Chess Excellence) at Webster University FIDE Senior Chess Trainer 2006 Women’s World Chess Cup Champion Winner of 4 Women’s World Chess Championships The only World Champion in history to win the Triple-Crown (Blitz, Rapid and Classical) 12 Olympic Medals (5 Gold, 4 Silver, 3 Bronze) 3-time US Open Blitz Champion #1 ranked woman player in the United States Ranked #1 in the world at age 15 and in the top 3 for about 25 consecutive years 1st woman in history to qualify for the Men’s World Championship 1st woman in history to earn the Grandmaster title 1st woman in history to coach a Men's Division I team to 7 consecutive Final Four Championships 1st woman in history to coach the #1 ranked Men's Division I team in the nation pnlrqk KQRLNP Get Smart! Play Chess! www.ChessDailyNews.com www.twitter.com/SusanPolgar www.facebook.com/SusanPolgarChess www.instagram.com/SusanPolgarChess www.SusanPolgar.com www.SusanPolgarFoundation.org SPF Chess Training Program for Teachers © Page 1 7/2/2019 Lesson 1 Lesson goals: Excite kids about the fun game of chess Relate the cool history of chess Incorporate chess with education: Learning about India and Persia Incorporate chess with education: Learning about the chess board and its coordinates Who invented chess and why? Talk about India / Persia – connects to Geography Tell the story of “seed”. -

Yugoslavia Staunton Chess Set in Ebony & Boxwood with Mission

Read the "Yugoslavia Staunton Chess Set in Ebony & Boxwood with Mission Craft African Padauk Chess Board - 3.875\" King" for your favorite. Here you will find reasonable how to and details many special offers. This chess set package includes our Yugoslavia Staunton Chess Set in ebony and boxwood matched with our Mission Craft African Padauk and Maple Solid Wood Chess Board. The polished black ebony pieces create a beautiful contrast with the red colors of the African padauk - they look stunning together! Our Yugoslavia Staunton originates from the chess set designed for the 1950 Chess Olympiad held in Dubrovnik,Yugoslavia. This unique and handsome Staunton design has since become a favorite for chess players around the world and one of our most popular chess sets. We made a few minor changes such as adding a tapered base to enhance appearance and balance of the chess pieces while maintaining the integrity of the intended design. You\'ll love playing with this chess set whether it\'s a casual game at home or a tournament match. The king is 3.875\" tall with a 1.625\" wide base and features a traditional formee cross. The pieces are triple-weighted to produce a low-center of gravity and exceptional stability on the chess board. The pieces are padded with thick green baize for a nice cushion when picking up and moving or sliding across the chess board. The pieces are individually hand polished to beautiful luster. Our African Padauk and Maple Mission Craft Solid Wood Chess Board is simplistically beautiful and profoundly designed.