Developing Virtual Reality Applications: the Design and Evaluation of Virtual Reality Development Tools for Novice Users

Total pages: 16

File type: PDF, size: 1020Kb

Recommended publications

Three Dimensional Computer Graphics Federates for the 2012 SISO Smackdown Federation

Three Dimensional Computer Graphics Federates for the 2012 SISO Smackdown Federation. Crystal Fordyce, (843) 513-8980; Swetha Govindaiah, (256) 714-3018; Sean Muratet, (256) 417-8237; Mikel D. Petty, University of Alabama in Huntsville, 301 Sparkman Drive, Shelby Center 144, Huntsville, AL 35899, (256) 824-4368; Bradley C. Schricker, Dynetics, Inc., 1002 Explorer Blvd., Huntsville, AL 35806, (256) 964-4979; Daniel A. O’Neil, Marshall Space Flight Center, Huntsville, AL 35811, (256) 544-5405. Abstract: The Simulation Interoperability Standards Organization (SISO) Smackdown is a two-year-old annual event held at the 2012 Spring Simulation Interoperability Workshop (SIW). A primary objective of the Smackdown event is to provide college students with hands-on experience in developing distributed simulations using High Level Architecture (HLA). Participating for the second time, the University of Alabama in Huntsville (UAHuntsville) deployed four federates. Two federates simulated a communications server and a lunar communications satellite with a radio. The other two federates generated 3D computer graphics displays for the communication satellite constellation and for the surface-based lunar resupply mission. Using the Light-Weight Java Graphics Library, the satellite display federate presented a lunar-texture mapped sphere of the moon and four Telemetry Data Relay Satellites (TDRS), which received object attributes from the lunar communications satellite federate to drive their motion. The surface mission display federate was an enhanced version of the federate developed by ForwardSim, Inc. for the 2011 Smackdown simulation. Enhancements included a dead-reckoning algorithm and a visual indication of which communication satellite was in line of sight of Hadley Rille.

A Topic Model Approach to Represent and Classify American Football Plays

A Topic Model Approach to Represent and Classify American Football Plays. Jagannadan Varadarajan1, Indriyati Atmosukarto1, Shaunak Ahuja1, Bernard Ghanem1,2, Narendra Ahuja1,3. 1 Advanced Digital Sciences Center of Illinois, Singapore; 2 King Abdullah University of Science and Technology, Saudi Arabia; 3 University of Illinois at Urbana-Champaign, IL USA. Automated understanding of group activities is an important area of research with applications in many domains such as surveillance, retail, health care, and sports. Despite active research in automated activity analysis, the sports domain is extremely under-served. One particularly difficult but significant sports application is the automated labeling of plays in American Football (‘football’), a sport in which multiple players interact on a playing field during structured time segments called plays. Football plays vary according to the initial formation and trajectories of players, making the task of play classification quite challenging. [Figure: pipeline with panels Input (plays), Trajectories, Feature extraction (motion angle, time & player role), Documents (w_i: documents, y_i: labels), MedLDA modeling, and Output (play type templates and labels: run left, pass left, run right, pass right, run mid, pass mid).] We define a play as a unique combination of offense players’ trajectories as shown in Fig.

Three Dimensional Computer Graphics Federates for the 2012 Smackdown Simulation

Three Dimensional Computer Graphics Federates for the 2012 Smackdown Simulation. Crystal Fordyce, (843) 513-8980; Swetha Govindaiah, (256) 714-3018; Sean Muratet, (256) 417-8237; Daniel A. O’Neil, Marshall Space Flight Center, Huntsville, AL 35811, (256) 544-5405; Bradley C. Schricker, Dynetics, Inc., 1002 Explorer Blvd., Huntsville, AL 35806, (256) 964-4979. Abstract: The Simulation Interoperability Standards Organization (SISO) Smackdown is a two-year-old annual event held at the 2012 Spring Simulation Interoperability Workshop (SIW). A primary objective of the Smackdown event is to provide college students with hands-on experience in developing distributed simulations using High Level Architecture (HLA). Participating for the second time, the University of Alabama in Huntsville (UAHuntsville) deployed four federates. Two federates simulated a communications server and a lunar communications satellite with a radio. The other two federates generated 3D computer graphics displays for the communication satellite constellation and for the surface-based lunar resupply mission. Using the Light-Weight Java Graphics Library, the satellite display federate presented a lunar-texture mapped sphere of the moon and four Telemetry Data Relay Satellites (TDRS), which received object attributes from the lunar communications satellite federate to drive their motion. The surface mission display federate was an enhanced version of the federate developed by ForwardSim, Inc. for the 2011 Smackdown simulation. Enhancements included a dead-reckoning algorithm and a visual indication of which communication satellite was in line of sight of Hadley Rille. This paper concentrates on these two federates by describing the functions, algorithms, HLA object attributes received from other federates, development experiences, and recommendations for future participating Smackdown teams.

Automatic Annotation of American Football Video Footage for Game Strategy Analysis

https://doi.org/10.2352/ISSN.2470-1173.2021.6.IRIACV-303 © 2021, Society for Imaging Science and Technology. Automatic Annotation of American Football Video Footage for Game Strategy Analysis. Jacob Newman, Jian-Wei Lin, Dah-Jye Lee, and Jen-Jui Liu, Brigham Young University, Provo, Utah, USA. Abstract: Annotation and analysis of sports videos is a challenging task that, once accomplished, could provide various benefits to coaches, players, and spectators. In particular, American Football could benefit from such a system to provide assistance in statistics and game strategy analysis. Manual analysis of recorded American football game videos is a tedious and inefficient process. In this paper, as a first step to further our research for this unique application, we focus on locating and labeling individual football players from a single overhead image of a football play immediately before the play begins. A pre-trained deep learning network is used to detect and locate the players in the image. [...] helping coaches study and understand both how their team plays and how other teams play. This could assist them in game planning before the game or, if allowed, making decisions in real time, thereby improving the game. This paper presents a method of player detection from a single image immediately before the play starts. YOLOv3, a pre-trained deep learning network, is used to detect the visible players, followed by a ResNet architecture which labels the players. This research will be a foundation for future research in tracking key players during a video. With this tracking data, automated game analysis can be accomplished.

Using Camera Motion to Identify Types of American Football Plays

USING CAMERA MOTION TO IDENTIFY TYPES OF AMERICAN FOOTBALL PLAYS. Mihai Lazarescu, Svetha Venkatesh, School of Computing, Curtin University of Technology, GPO Box U1987, Perth 6001, W.A. ABSTRACT: This paper presents a method that uses camera motion parameters to recognise 7 types of American Football plays. The approach is based on the motion information extracted from the video and it can identify short and long pass plays, short and long running plays, quarterback sacks, punt plays and kickoff plays. This method has the advantage that it is fast and it does not require player or ball tracking. The system was trained and tested using 782 plays and the results show that the system has an overall classification accuracy of 68%. 1. INTRODUCTION: The automatic indexing and retrieval of American Football plays is a very difficult task for which there are currently no tools available. The difficulty comes from both the complexity of American Football itself as well as the image processing involved for an accurate analysis of the plays. [...] learning algorithm used to train the system. The results are described in Section 5 with the conclusions presented in Section 6. 2. PREVIOUS WORK: Motion parameters have been used in the past since they provide a simple, fast and accurate way to search multimedia databases for specific shots (for example a shot of a landscape is likely to involve a significant amount of pan, whilst a shot of an aerobatic sequence is likely to contain roll). Previous work done towards the recognition of American Football plays has generally involved methods that combine clues from several sources such as video, audio and text scripts. A method for tracking players in American Football greyscale images that makes use of context knowledge is de-

Alexey Patrushev Analysis of Software Engineering Data with Self-Organizing Maps

ALEXEY PATRUSHEV: ANALYSIS OF SOFTWARE ENGINEERING DATA WITH SELF-ORGANIZING MAPS. Master of Science thesis. Examiner: prof. Kari Systä. Examiner and topic approved by the Faculty Council of the Faculty of Computing and Electrical Engineering on 4th May 2016. ABSTRACT. Alexey Patrushev: Analysis of software engineering data with self-organizing maps. Tampere University of Technology, Master of Science Thesis, 72 pages, 17 Appendix pages, January 2016. Master’s Degree Programme in Information Technology. Major: Pervasive Systems. Examiner: Professor Kari Systä. Keywords: Self-Organizing Maps, Software Engineering Data, Machine Learning. Nowadays software developers have tools that assist them during the development and management processes of software products. These tools store large amounts of software engineering data resulting from these processes. Analysis of the data can reveal valuable information about project performance and discover useful business patterns used during development. This information can be utilized to find projects where teams have management problems and help to improve the situation. Currently existing methods in the field have applicability limitations, because they require expert knowledge for evaluation and are not capable of dealing with a large number of projects. In this thesis, we will explore the possibility of applying Machine Learning methods to the analysis of software engineering data. Machine Learning methods are capable of building a model from sample inputs and producing data-driven predictions. They have been gaining popularity over the last decades and show promising results in applications where human expertise was traditionally used. In this work, we attempt to extract and analyze software project management patterns from software engineering data stored in GitHub repositories.

Prioritizing Pull Requests

Prioritizing pull requests. Version of June 17, 2015. Erik van der Veen. THESIS submitted in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE in COMPUTER SCIENCE by Erik van der Veen, born in Voorburg, the Netherlands. Software Engineering Research Group, Department of Software Technology, Faculty EEMCS, Delft University of Technology, Delft, the Netherlands, www.ewi.tudelft.nl. Q42, Waldorpstraat 17F, 2521 CA The Hague, the Netherlands, www.q42.com. © 2014 Erik van der Veen. Cover picture: Finding the pull request that needs the most attention. Author: Erik van der Veen. Student id: 1509381. Abstract: Previous work showed that in the pull-based development model integrators face challenges with regard to prioritizing work in the face of multiple concurrent pull requests. We identified the manual prioritization heuristics applied by integrators and extracted features from these heuristics. The features are used to train a machine learning model, which is capable of predicting a pull request’s importance. The importance is then used to create a prioritized order of the pull requests. Our main contribution is the design and initial implementation of a prototype service, called PRioritizer, which automatically prioritizes pull requests. The service works like a priority inbox for pull requests, recommending the top pull requests the project owner should focus on. It keeps the pull request list up-to-date when pull requests are merged or closed. In addition, the service provides functionality that GitHub is currently lacking. We implemented pairwise pull request conflict detection and several new filter and sorting options e.g.

0X0a I Don't Know Gregor Weichbrodt FROHMANN

0x0a I Don’t Know Gregor Weichbrodt FROHMANN. Contents: I Don’t Know; About This Book; Imprint. I Don’t Know. I’m not well-versed in Literature. Sensibility – what is that? What in God’s name is An Afterword? I haven’t the faintest idea. And concerning Book design, I am fully ignorant. What is ‘A Slipcase’ supposed to mean again, and what the heck is Boriswood? The Canons of page construction – I don’t know what that is. I haven’t got a clue. How am I supposed to make sense of Traditional Chinese bookbinding, and what the hell is an Initial? Containers are a mystery to me. And what about A Post box, and what on earth is The Hollow Nickel Case? An Ammunition box – dunno. Couldn’t tell you. I’m not well-versed in Postal systems. And I don’t know what Bulk mail is or what is supposed to be special about A Catcher pouch. I don’t know what people mean by ‘Bags’. What’s the deal with The Arhuaca mochila, and what is the mystery about A Bin bag? Am I supposed to be familiar with A Carpet bag? How should I know? Cradleboard? Come again? Never heard of it. I have no idea. A Changing bag – never heard of it. I’ve never heard of Carriages. A Dogcart – what does that mean? A Ralli car? Doesn’t ring a bell. I have absolutely no idea. And what the hell is Tandem, and what is the deal with the Mail coach? I don’t know the first thing about Postal system of the United Kingdom.

Poli12-Bootstrappingsmalltalk-SCP

Bootstrapping Reflective Systems: The Case of Pharo. G. Polito1,2,∗, S. Ducasse1, L. Fabresse2, N. Bouraqadi2, B. van Ryseghem1. Abstract: Bootstrapping is a technique commonly known by its usage in language definition by the introduction of a compiler written in the same language it compiles. This process is important to understand and modify the definition of a given language using the same language, taking benefit of the abstractions and expression power it provides. A bootstrap, then, supports the evolution of a language. However, the infrastructure of reflective systems like Smalltalk includes, in addition to a compiler, an environment with several self-references. A reflective system bootstrap should consider all its infrastructural components. In this paper, we propose a definition of bootstrap for object-oriented reflective systems, we describe the architecture and components it should contain and we analyze the challenges it has to overcome. Finally, we present a reference bootstrap process for a reflective system and Hazelnut, its implementation for bootstrapping the Pharo Smalltalk-inspired system. Keywords: Object-Oriented Programming and Design, Bootstrap, Smalltalk, Software Evolution. 1. Introduction: Reflective systems are those that reason about and act upon themselves [21]. A causal connection exists between the program and its representation inside the program itself as a meta-program [16]. This reflective architecture introduces self-references: an object-oriented system is composed by objects, which are instances of classes, which are also objects, and so on. These self-references, also known as meta-circularities [6], allow the manipulation of several meta-levels on one infrastructure. Reflective systems traditionally modify their self-representation to evolve and define new abstractions.

Proceedings.Pdf

Goals and scopes
• The goal of the workshop is to create a forum around advances or experience in Smalltalk and to trigger discussions and exchanges of ideas. Participants are invited to submit research articles. We will not enforce any length restriction. However we expect papers of two kinds:
– Short position papers describing emerging ideas.
– Long research papers with deeper description of experiments and of research results.
• We welcome contributions on all aspects, theoretical as well as practical, of Smalltalk related topics such as:
– Aspect-oriented programming,
– Design patterns,
– Experience reports,
– Frameworks,
– Implementation,
– New dialects or languages implemented in Smalltalk,
– Interaction with other languages,
– Meta-programming and Meta-modeling,
– Tools
Publication
• Both submissions and final papers must be prepared using the ACM SIGPLAN 10 point format. Templates for Word and LaTeX are available at http://www.acm.org/sigs/sigplan/authorInformation.htm
• Authors of the best accepted papers will also be invited to submit extended versions for publication in a special issue of Elsevier "Science of Computer Programming: Methods of Software Design: Techniques and Applications"
• Please submit your paper through EasyChair on http://www.easychair.org/conferences/?conf=iwst2012
Program Chairs
• Loïc Lagadec, Lab-STICC, UBO, France
• Alain Plantec, Lab-STICC, UBO, France
Program Committee
• Gabriela Arevalo, Universidad Nacional de Quilmes, Argentina
• Alexandre Bergel, University of Chile
• Andrew P. Black, Portland State University, US
• Marcus

2018-3-Hr01-Ka205-060151 Agrient

Project funded by Erasmus+ / Key Action 2 ‐ Cooperation for innovation and the exchange of good practices, Strategic Partnerships for Youth. AGRIENT – Enhancing Youth Entrepreneurship Skills, Careers Guidance and Competences in Agriculture through a Game Based Virtual Reality Platform. 2018‐3‐HR01‐KA205‐060151. Work Package: IO2A1. Product/Deliverable: State of the art in Virtual reality and Virtual Worlds. Version: 1. Date: September, 2019. Type: Distribution: Project Partners. Responsible Partner: CTE. Author: CTE. Contributors: All Partners. Approved by: Date: Table of Contents: Introduction; Virtual Reality; Augmented reality; The concept of the Industry Revolution

FOSDEM 2013 Schedule

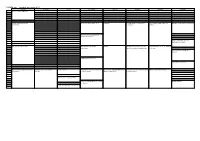

FOSDEM 2013 - Saturday 2013-02-02 (1/9) Janson K.1.105 Ferrer Chavanne Lameere H.1301 H.1302 H.1308 10:30 Welcome to FOSDEM 2013 10:45 11:00 How we made the Jenkins QEMU USB status report 2012 Rockbuild The neat guide to Fedora RPM LinuxonAndroid and SlapOS on Wayland for Application Developers community Packaging Android 11:15 11:30 CRIU: Checkpoint and Restore (mostly) In Userspace 11:45 Ubuntu Online Accounts for application developers 12:00 The Devil is in the Details Vtrill: Rbridges for Virtual PTXdist Building RPM packages from Git Emdedded distro shootout: buildroot Networking repositories with git-buildpackage vs. Debian Better software through user 12:15 research 12:30 Bringing Xen to CentOS-6 Sketching interactions 12:45 13:00 The Open Observatory of Network Porting Fedora to 64-bit ARM Coding Goûter A brief tutorial on Xen's advanced Guacamayo -- Building Multimedia Package management and creation ARM v7 State of the Body ↴ Interference systems security features Appliance with Yocto ↴ in Gentoo Linux ↴ 13:15 Spoiling and Counter-spoiling 13:30 oVirt Live Storage Migration - Under Modern CMake ↴ the Hood ↴ ZONE: towards a better news feed ↴ 13:45 FOSDEM 2013 - Saturday 2013-02-02 (2/9) H.1309 H.2213 H.2214 AW1.120 AW1.121 AW1.125 AW1.126 Guillissen 10:30 10:45 11:00 Metaphor and BDD XMPP 101 Storytelling FLOSS Welcome and Introduction The room open() process Scripting Apache OpenOffice: Welcome to the Perl d… Introductory Nutshell Programs Inheritance versus Roles (Writer, Calc, Impress) 11:15 Signal/Collect: Processing Large