Deep Deinterlacing

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Shadow Telecinetelecine

ShadowShadow TelecineTelecine HighHigh performanceperformance SolidSolid StateState DigitalDigital FilmFilm ImagingImaging TechnologyTechnology Table of contents • Introduction • Simplicity • New Scanner Design • All Digital Platform • The Film Look • Graphical Control Panel • Film Handling • Main Features • Six Sector Color Processor • Cost of ownership • Summary SHADOWSHADOTelecine W Telecine Introduction The Film Transfer market is changing const- lantly. There are a host of new DTV formats required for the North American Market and a growing trend towards data scanning as opposed to video transfer for high end compositing work. Most content today will see some form of downstream compression, so quiet, stable images are still of paramount importance. With the demand growing there is a requirement for a reliable, cost effective solution to address these applications. The Shadow Telecine uses the signal proce- sing concept of the Spirit DataCine and leve- rages technology and feature of this flagship product. This is combined with a CCD scan- ner witch fulfils the requirements for both economical as well as picture fidelity. The The Film Transfer market is evolving rapidly. There are a result is a very high performance producthost allof new DTV formats required for the North American the features required for today’s digital Marketappli- and a growing trend towards data scanning as cation but at a greatly reduced cost. opposed to video transfer for high end compositing work. Most content today will see some form of downstream Unlike other Telecine solutions availablecompression, in so quiet, stable images are still of paramount importance. With the demand growing there is a this class, the Shadow Telecine is requirementnot a for a reliable, cost effective solution to re-manufactured older analog Telecine,address nor these applications. -

Problems on Video Coding

Problems on Video Coding Guan-Ju Peng Graduate Institute of Electronics Engineering, National Taiwan University 1 Problem 1 How to display digital video designed for TV industry on a computer screen with best quality? .. Hint: computer display is 4:3, 1280x1024, 72 Hz, pro- gressive. Supposing the digital TV display is 4:3 640x480, 30Hz, Interlaced. The problem becomes how to convert the video signal from 4:3 640x480, 60Hz, Interlaced to 4:3, 1280x1024, 72 Hz, progressive. First, we convert the video stream from interlaced video to progressive signal by a suitable de-interlacing algorithm. Thus, we introduce the de-interlacing algorithms ¯rst in the following. 1.1 De-Interlacing Generally speaking, there are three kinds of methods to perform de-interlacing. 1.1.1 Field Combination Deinterlacing ² Weaving is done by adding consecutive ¯elds together. This is ¯ne when the image hasn't changed between ¯elds, but any change will result in artifacts known as "combing", when the pixels in one frame do not line up with the pixels in the other, forming a jagged edge. This technique retains full vertical resolution at the expense of half the temporal resolution. ² Blending is done by blending, or averaging consecutive ¯elds to be displayed as one frame. Combing is avoided because both of the images are on top of each other. This instead leaves an artifact known as ghosting. The image loses vertical resolution and 1 temporal resolution. This is often combined with a vertical resize so that the output has no numerical loss in vertical resolution. The problem with this is that there is a quality loss, because the image has been downsized then upsized. -

Cathode-Ray Tube Displays for Medical Imaging

DIGITAL IMAGING BASICS Cathode-Ray Tube Displays for Medical Imaging Peter A. Keller This paper will discuss the principles of cathode-ray crease the velocity of the electron beam for tube displays in medical imaging and the parameters increased light output from the screen; essential to the selection of displays for specific 4. a focusing section to bring the electron requirements. A discussion of cathode-ray tube fun- beam to a sharp focus at the screen; damentals and medical requirements is included. 9 1990bu W.B. Saunders Company. 5. a deflection system to position the electron beam to a desired location on the screen or KEY WORDS: displays, cathode ray tube, medical scan the beam in a repetitive pattern; and irnaging, high resolution. 6. a phosphor screen to convert the invisible electron beam to visible light. he cathode-ray tube (CRT) is the heart of The assembly of electrodes or elements mounted T almost every medical display and its single within the neck of the CRT is commonly known most costly component. Brightness, resolution, as the "electron gun" (Fig 2). This is a good color, contrast, life, cost, and viewer comfort are analogy, because it is the function of the electron gun to "shoot" a beam of electrons toward the all strongly influenced by the selection of a screen or target. The velocity of the electron particular CRT by the display designer. These beam is a function of the overall accelerating factors are especially important for displays used voltage applied to the tube. For a CRT operating for medical diagnosis in which patient safety and at an accelerating voltage of 20,000 V, the comfort hinge on the ability of the display to electron velocity at the screen is about present easily readable, high-resolution images 250,000,000 mph, or about 37% of the velocity of accurately and rapidly. -

LENOVO LEGION Y27gq-20 the MIGHTY GAME CHANGER

LENOVO LEGION Y27gq-20 THE MIGHTY GAME CHANGER The Lenovo Legion Y27gq-20 is an ultra-responsive gaming monitor STEP UP YOUR GAME with impressive features to add maximum thrill to your gameplay. Designed to meet the unique requirements of avid gamers, Seamless Gaming this 27-inch monitor with NVIDIA® G-SYNC™ technology, Experience 165 Hz refresh rate, and 1ms response time delivers superior No Ghosting performance every time. The NearEdgeless QHD, or Motion Blur anti-glare display, and optional monitor USB speaker with sound by Harman Kardon provide stunning Brilliant Ergonomic audio-visual quality for an optimized Design gaming experience. INSTANTANEOUS RESPONSE FOR THE ULTIMATE GAMING EXPERIENCE For time-critical games where every millisecond Technology: counts, the winning combination of the 165 Hz NVIDIA® G-SYNC™ refresh rate and 1ms extreme response time eliminates streaking, ghosting, and motion blur to give you Refresh Rate: a competitive edge. The NVIDIA® G-SYNC™ technology* 165 Hz on the Lenovo Legion Y27gq-20 dynamically matches the Response Time: refresh rate of the display to the frame rate output of the GPU. 1ms This helps eliminate screen tearing, prevent display stutter, and minimize input lag, allowing you to enjoy ultra-responsive and distraction-free gaming. *NVIDIA® G-SYNC™ can only work with NVIDIA® GeForce™ series graphics card. Users need to enable the G-SYNC™ function in NVIDIA® Control panel. SUPERIOR AUDIO-VISUAL QUALITY FOR IMMERSIVE GAMING The Y27gq-20 gaming-focused monitor, featuring a NearEdgeless QHD, anti-glare display with 2560 x 1440 resolution produces outstanding Display: visuals with vivid clarity to boost your gaming sessions. -

Viarte Remastering of SD to HD/UHD & HDR Guide

Page 1/3 Viarte SDR-to-HDR Up-conversion & Digital Remastering of SD/HD to HD/UHD Services 1. Introduction As trends move rapidly towards online content distribution and bigger and brighter progressive UHD/HDR displays, the need for high quality remastering of SD/HD and SDR to HDR up-conversion of valuable SD/HD/UHD assets becomes more relevant than ever. Various technical issues inherited in legacy content hinder the immersive viewing experience one might expect from these new HDR display technologies. In particular, interlaced content need to be properly deinterlaced, and frame rate converted in order to accommodate OTT or Blu-ray re-distribution. Equally important, film grain or various noise conditions need to be addressed, so as to avoid noise being further magnified during edge-enhanced upscaling, and to avoid further perturbing any future SDR to HDR up-conversion. Film grain should no longer be regarded as an aesthetic enhancement, but rather as a costly nuisance, as it not only degrades the viewing experience, especially on brighter HDR displays, but also significantly increases HEVC/H.264 compressed bit-rates, thereby increases online distribution and storage costs. 2. Digital Remastering and SDR to HDR Up-Conversion Process There are several steps required for a high quality SD/HD to HD/UHD remastering project. The very first step may be tape scan. The digital master forms the baseline for all further quality assessment. isovideo's SD/HD to HD/UHD digital remastering services use our proprietary, state-of-the-art award- winning Viarte technology. Viarte's proprietary motion processing technology is the best available. -

Flexibility for Alternative Content

ALTERNATIVE CONTENT DMS SCALERS More flexibility for Alternative Content Kinoton’s Markus Näther explains the technology behind the company’s DMS Scalers -Cinema projectors using Series analog component, composite, S-Video and why high-quality cinema scalers employ very II 2K DLP Cinema® technology VGA inputs for connecting PCs or laptops, DVD complex mathematical algorithms to achieve support not only true 2K content, players, satellite receivers, digital encoders, smooth transitions. Pixels to be added are but also different video formats. cable receivers and many other sources, up to interpolated from the interim values of their If it comes to alternative content, HDMI inputs for Blu-Ray players etc. Premium neighbouring pixels, and if pixels have to be D scalers even offer SDI and HD-SDI inputs for though, they quickly reach their limits – the deleted, the same principle is used to smooth range of different picture resolutions, video professional sources. the transitions between the residual pixels. rendering techniques, frame rates and refresh Perfect Image Adjustment How well a scaler accomplishes this demanding rates used on the video market is simply too The different video sources – like classic TV, task basically depends on its processing power. large. Professional media scalers can convert HDTV, DVD or Blu-Ray, just to name the most Kinoton’s premium scaler model DMS DC2 incompatible video signals into the ideal input common ones – work with different image PRO realises image format changes without signal for D-Cinema projectors, and in addition resolutions. The ideal screen resolution for any loss of sharpness, the base model HD DMS enhance the projector’s capabilities of connect- the projection of alternative content with 2K still provides an acceptable image quality for ing alternate content sources. -

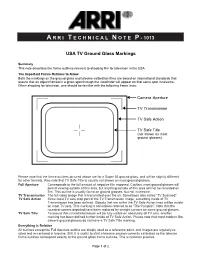

A R R I T E C H N I C a L N O T E

A R R I T E C H N I C A L N O T E P - 1013 USA TV Ground Glass Markings Summary This note describes the frame outlines relevant to shooting film for television in the USA. The Important Frame Outlines to Know Both the markings on the ground glass and telecine calibration films are based on international standards that assure that an object framed in a given spot through the viewfinder will appear on that same spot in telecine. When shooting for television, one should be familiar with the following frame lines: Camera Aperture TV Transmission TV Safe Action TV Safe Title (not shown on most ground glasses) Please note that the frame outlines pictured above are for a Super 35 ground glass, and will be slightly different for other formats. Also note that TV Safe Title is usually not shown on most ground glasses. Full Aperture Corresponds to the full amount of negative film exposed. Caution: most ground glasses will permit viewing outside of this area, but anything outside of this area will not be recorded on film. This outline is usually found on ground glasses, but not in telecine. TV Transmission The full video image that is transmitted over the air. Sometimes also called “TV Scanned”. TV Safe Action Since most TV sets crop part of the TV Transmission image, a marking inside of TV Transmission has been defined. Objects that are within the TV Safe Action lines will be visible on most TV sets. This marking is sometimes referred to as “The Pumpkin”. -

Spirit 4K® High-Performance Film Scanner with Bones and Datacine®

Product Data Sheet Spirit 4K® High-Performance Film Scanner with Bones and DataCine® Spirit 4K Film Scanner/Bones Combination Digital intermediate production – the motion picture workflow in which film is handled only once for scan- ning and then processed with a high-resolution digital clone that can be down-sampled to the appropriate out- put resolution – demands the highest resolution and the highest precision scanning. While 2K resolution is widely accepted for digital post production, there are situations when even a higher re- solution is required, such as for digital effects. As the cost of storage continues to fall and ultra-high resolu- tion display devices are introduced, 4K postproduction workflows are becoming viable and affordable. The combination of the Spirit 4K high-performance film scanner and Bones system is ahead of its time, offe- ring you the choice of 2K scanning in real time (up to 30 frames per second) and 4K scanning at up to 7.5 fps depending on the selected packing format and the receiving system’s capability. In addition, the internal spatial processor of the Spirit 4K system lets you scan in 4K and output in 2K. This oversampling mode eli- minates picture artifacts and captures the full dynamic range of film with 16-bit signal processing. And in either The Spirit 4K® from DFT Digital Film Technology is 2K or 4K scanning modes, the Spirit 4K scanner offers a high-performance, high-speed Film Scanner and unrivalled image detail, capturing that indefinable film DataCine® solution for Digital Intermediate, Commer- look to perfection. cial, Telecine, Restoration, and Archiving applications. -

Lc-60Le650u 60" Class 1080P Led Smart Tv

LC-60LE650U 60" CLASS 1080P LED SMART TV 6 SERIES LED SMART TV AQUOS 1080P LED DISPLAY Breathtaking HD images, greater brightness and contrast SMART TV With Dual-Core Processor and built-in Wi-Fi 120Hz REFRESH RATE Precision clarity during fast-motion scenes SLIM DESIGN Ultra Slim Design POWERFUL 20W AUDIO High fidelity with clear voice Big, bold and brainy - the LC-60LE650U is an LED Smart TV that delivers legendary AQUOS picture quality and unlimited content choices, seamless control, and instant connectivity through SmartCentral™. The AQUOS 1080p LED Display dazzles with advanced pixel structure for the most breathtaking HD images, a 4 million: 1 dynamic contrast ratio, and a 120Hz refresh rate for precision clarity during fast-motion scenes. A Smart TV with Dual-Core processor and built in WiFi, the LC-60LE650U lets you quickly access apps streaming movies, music, games, and websites. Using photo-alignment technology that’s precision Unlimited content, control, and instant See sharper, more electrifying action with the most crafted to let more light through in bright scenes and connectivity. AQUOS® TVs with advanced panel refresh rates available today. The shut more light out in dark scenes, the AQUOS SmartCentral™ give you more of what you 120Hz technology delivers crystal-clear images 1080p LED Display with a 4 million: 1 contrast ratio crave. From the best streaming apps, to the even during fast-motion scenes. creates a picture so real you can see the difference. easiest way to channel surf and connect your devices, it’s that easy. -

BA(Prog)III Yr 14/04/2020 Displays Interlacing and Progressive Scan

BA(prog)III yr 14/04/2020 Displays • Colored phosphors on a cathode ray tube (CRT) screen glow red, green, or blue when they are energized by an electron beam. • The intensity of the beam varies as it moves across the screen, some colors glow brighter than others. • Finely tuned magnets around the picture tube aim the electrons onto the phosphor screen, while the intensity of the beamis varied according to the video signal. This is why you needed to keep speakers (which have strong magnets in them) away from a CRT screen. • A strong external magnetic field can skew the electron beam to one area of the screen and sometimes caused a permanent blotch that cannot be fixed by degaussing—an electronic process that readjusts the magnets that guide the electrons. • If a computer displays a still image or words onto a CRT for a long time without changing, the phosphors will permanently change, and the image or words can become visible, even when the CRT is powered down. Screen savers were invented to prevent this from happening. • Flat screen displays are all-digital, using either liquid crystal display (LCD) or plasma technologies, and have replaced CRTs for computer use. • Some professional video producers and studios prefer CRTs to flat screen displays, claiming colors are brighter and more accurately reproduced. • Full integration of digital video in cameras and on computers eliminates the analog television form of video, from both the multimedia production and the delivery platform. • If your video camera generates a digital output signal, you can record your video direct-to-disk, where it is ready for editing. -

A Review and Comparison on Different Video Deinterlacing

International Journal of Research ISSN NO:2236-6124 A Review and Comparison on Different Video Deinterlacing Methodologies 1Boyapati Bharathidevi,2Kurangi Mary Sujana,3Ashok kumar Balijepalli 1,2,3 Asst.Professor,Universal College of Engg & Technology,Perecherla,Guntur,AP,India-522438 [email protected],[email protected],[email protected] Abstract— Video deinterlacing is a key technique in Interlaced videos are generally preferred in video broadcast digital video processing, particularly with the widespread and transmission systems as they reduce the amount of data to usage of LCD and plasma TVs. Interlacing is a widely used be broadcast. Transmission of interlaced videos was widely technique, for television broadcast and video recording, to popular in various television broadcasting systems such as double the perceived frame rate without increasing the NTSC [2], PAL [3], SECAM. Many broadcasting agencies bandwidth. But it presents annoying visual artifacts, such as made huge profits with interlaced videos. Video acquiring flickering and silhouette "serration," during the playback. systems on many occasions naturally acquire interlaced video Existing state-of-the-art deinterlacing methods either ignore and since this also proved be an efficient way, the popularity the temporal information to provide real-time performance of interlaced videos escalated. but lower visual quality, or estimate the motion for better deinterlacing but with a trade-off of higher computational cost. The question `to interlace or not to interlace' divides the TV and the PC communities. A proper answer requires a common understanding of what is possible nowadays in deinterlacing video signals. This paper outlines the most relevant methods, and provides a relative comparison. -

EBU Tech 3315-2006 Archiving: Experiences with TK Transfer to Digital

EBU – TECH 3315 Archiving: Experiences with telecine transfer of film to digital formats Source: P/HDTP Status: Report Geneva April 2006 1 Page intentionally left blank. This document is paginated for recto-verso printing Tech 3315 Archiving: Experiences with telecine transfer of film to digital formats Contents Introduction ......................................................................................................... 5 Decisions on Scanning Format .................................................................................... 5 Scanning tests ....................................................................................................... 6 The Results .......................................................................................................... 7 Observations of the influence of elements of film by specialists ........................................ 7 Observations on the results of the formal evaluations .................................................... 7 Overall conclusions .............................................................................................. 7 APPENDIX : Details of the Tests and Results ................................................................... 9 3 Archiving: Experiences with telecine transfer of film to digital formats Tech 3315 Page intentionally left blank. This document is paginated for recto-verso printing 4 Tech 3315 Archiving: Experiences with telecine transfer of film to digital formats Archiving: Experience with telecine transfer of film to digital formats