Topic Modelling Extraction of “Mann Ki Baat”

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Amit Shah at Parivartan Yatra Rally in Mysore

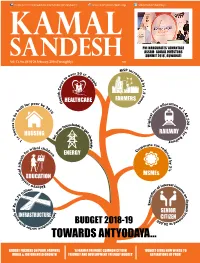

https://www.facebook.com/Kamal.Sandesh/ www.kamalsandesh.org @kamalsandeshbjp PM INAUGURATES ‘ADVANTAGE ASSAM - GLOBAL INVESTORS SUMMIT 2018’, GUWAHATI. Vol. 13, No. 04, 16-28 February, 2018 (Fortnightly), ₹20. BUDGET 2018-19: TOWARDS ANTYODAYA... HEALTHCARE, FARMERS, HOUSING, RAILWAY, ENERGY, MSMEs, EDUCATION, SENIOR CITIZEN, INFRASTRUCTURE. BUDGET FOCUSES ON POOR, FARMERS, RURAL & JOB ORIENTED GROWTH. ‘A FARMER FRIENDLY, COMMON CITIZEN FRIENDLY AND DEVELOPMENT FRIENDLY BUDGET’. ‘BUDGET GIVES NEW WINGS TO ASPIRATIONS OF POOR’. KAMAL SANDESH, 16-28 FEBRUARY, 2018. Karnataka BJP welcomes BJP National President Shri Amit Shah at Parivartan Yatra Rally in Mysore. BJP National President Shri Amit Shah hoisting the tri-colour on the 69th Republic Day celebration at BJP HQ, New Delhi. BJP National President Shri Amit Shah paying floral tributes to Sant Ravidas ji on his Jayanti at BJP H.Q. in New Delhi. Shri Amit Shah addressing the SARBANSDANI to commemorate the 350th birth anniversary of Guru Gobind Singh ji at Chandani Chowk, New Delhi. Fortnightly Magazine. Editor: Prabhat Jha. Executive Editor: Dr. Shiv Shakti Bakshi. Associate Editors: Ram Prasad Tripathy, Vikash Anand. Creative Editors: Vikas Saini, Mukesh Kumar. Phone +91(11) 23381428, FAX +91(11) 23387887. E-mail: [email protected] [email protected] Website: www.kamalsandesh.org. BUDGET FOCUSES ON POOR, FARMERS, RURAL & JOB ORIENTED GROWTH: Finance Minister Shri Arun Jaitley presented on February 1, 2018 general Budget 2018-19 in Parliament. It was the second time the budget was presented on the first of February instead of following colonial era practices of... CM SIDDARAMAIAH SYNONYMOUS WITH CORRUPTION: AMIT SHAH: BJP National President Shri Amit Shah said Karnataka CM Siddaramaiah was synonymous with corruption and added that the state government was protecting the killers of Hindu.. CONGRESS GOVERNMENT IN KARNATAKA IS ON EXIT. VAICHARIKI: Aspects of Economics. SHRADHANJALI: Chintaman Vanga / Hukum Singh.

Standing Committee on Information Technology (2016-17)

STANDING COMMITTEE ON INFORMATION TECHNOLOGY (2016-17), SIXTEENTH LOK SABHA. MINISTRY OF INFORMATION AND BROADCASTING, DEMANDS FOR GRANTS (2017-18). THIRTY-FOURTH REPORT. Presented to Lok Sabha on 09.03.2017. Laid in Rajya Sabha on 10.03.2017. LOK SABHA SECRETARIAT, NEW DELHI, March, 2017/ Phalguna, 1938 (Saka). CONTENTS: COMPOSITION OF THE COMMITTEE; ABBREVIATIONS; INTRODUCTION; REPORT, PART I: I. Introductory; II. Mandate of the Ministry of Information and Broadcasting; III. Implementation status of recommendations of the Committee contained in the Twenty-third Report on Demands for Grants (2016-17); IV. Twelfth Five Year Plan Fund Utilization; V. Budget (2016-17) Performance and Demands for Grants (2017-18); VI. Broadcasting Sector (a. Allocation to Prasar Bharati and its Schemes; b. Grant in aid to Prasar Bharati for Kisan Channel; c. Financial Performance of AIR and DD (2016-17); d. Action taken on Sam Pitroda Committee Recommendations; e. Main Secretariat Schemes under Broadcasting Sector); VII. Information Sector (a. Indian Institute of Mass Communication (IIMC); b. Directorate of Advertising and Visual Publicity (DAVP); c. Direct Contact Programme by Directorate of Field Publicity); VIII. Film Sector (a. National Museum of Indian Cinema (Film Division); b. Infrastructure Development Programmes Relating to Film sector; c. Development Communication & Dissemination of Filmic Content).

Peculiarities of Indian English As a Separate Language

Propósitos y Representaciones, Jan. 2021, Vol. 9, SPE(1), e913. ISSN 2307-7999, e-ISSN 2310-4635. Special Number: Educational practices and teacher training. http://dx.doi.org/10.20511/pyr2021.v9nSPE1.913 RESEARCH ARTICLES. Peculiarities of Indian English as a separate language (Características del inglés indio como idioma independiente). Elizaveta Georgievna Grishechko, Candidate of philological sciences, Assistant Professor in the Department of Foreign Languages, Faculty of Economics, RUDN University, Moscow, Russia. ORCID: https://orcid.org/0000-0002-0799-1471 Gaurav Sharma, Ph.D. student, Assistant Professor in the Department of Foreign Languages, Faculty of Economics, RUDN University, Moscow, Russia. ORCID: https://orcid.org/0000-0002-3627-7859 Kristina Yaroslavovna Zheleznova, Ph.D. student, Assistant Professor in the Department of Foreign Languages, Faculty of Economics, RUDN University, Moscow, Russia. ORCID: https://orcid.org/0000-0002-8053-703X Received 02-12-20, Revised 04-25-20, Accepted 01-13-21, Online 01-21-21. *Correspondence Email: [email protected] Cite as: Grishechko, E., Sharma, G., Zheleznova, K. (2021). Peculiarities of Indian English as a separate language. Propósitos y Representaciones, 9 (SPE1), e913. Doi: http://dx.doi.org/10.20511/pyr2021.v9nSPE1.913 © Universidad San Ignacio de Loyola, Vicerrectorado de Investigación, 2021. This article is distributed under license CC BY-NC-ND 4.0 International (http://creativecommons.org/licenses/by-nc-nd/4.0/). Summary: The following paper will reveal the varieties of English pronunciation in India, its features and characteristics. This research helped us to consider the history of occurrence of English in India, the influence of local languages on it, the birth of its own unique English, which is used in India now.

Routledge Handbook of Indian Cinemas the Indian New Wave

Routledge Handbook of Indian Cinemas. K. Moti Gokulsing, Wimal Dissanayake, Rohit K. Dasgupta. The Indian New Wave. Ira Bhaskar. Publisher: Routledge. Published online on: 09 Apr 2013. How to cite: Ira Bhaskar. 09 Apr 2013, The Indian New Wave, from: Routledge Handbook of Indian Cinemas, Routledge. Accessed on: 28 Sep 2021. https://www.routledgehandbooks.com/doi/10.4324/9780203556054.ch3 3 THE INDIAN NEW WAVE. Ira Bhaskar. At a rare screening of Mani Kaul’s Ashad ka ek Din (1971), as the limpid, luminescent images of K.K. Mahajan’s camera unfolded and flowed past on the screen, and the grave tones of Mallika’s monologue communicated not only her deep pain and the emptiness of her life, but a weighing down of the self, a sense of the excitement that in the 1970s had been associated with a new cinematic practice communicated itself very strongly to some in the auditorium.

Introduction to Bengali, Part I

REPORT RESUMES. ED 012 811 48 AA 000 171. INTRODUCTION TO BENGALI, PART I. BY- DIMOCK, EDWARD, JR. AND OTHERS. CHICAGO UNIV., ILL., SOUTH ASIA LANG. AND AREA CTR. REPORT NUMBER NDEA-VI-153. PUB DATE 64. EDRS PRICE MF-$1.50 HC-$16.04 401P. DESCRIPTORS- *BENGALI, GRAMMAR, PHONOLOGY, *LANGUAGE INSTRUCTION, PHONOTAPE RECORDINGS, *PATTERN DRILLS (LANGUAGE), *LANGUAGE AIDS, *SPEECH INSTRUCTION. THE MATERIALS FOR A BASIC COURSE IN SPOKEN BENGALI PRESENTED IN THIS BOOK WERE PREPARED BY REVISION OF AN EARLIER WORK DATED 1959. THE REVISION WAS BASED ON EXPERIENCE GAINED FROM 2 YEARS OF CLASSROOM WORK WITH THE INITIAL COURSE MATERIALS AND ON ADVICE AND COMMENTS RECEIVED FROM THOSE TO WHOM THE FIRST DRAFT WAS SENT FOR CRITICISM. THE AUTHORS OF THIS COURSE ACKNOWLEDGE THE BENEFITS THIS REVISION HAS GAINED FROM ANOTHER COURSE, "SPOKEN BENGALI," ALSO WRITTEN IN 1959, BY FERGUSON AND SATTERWAITE, BUT THEY POINT OUT THAT THE EMPHASIS OF THE OTHER COURSE IS DIFFERENT FROM THAT OF THE "INTRODUCTION TO BENGALI." FOR THIS COURSE, CONVERSATION AND DRILLS ARE ORIENTED MORE TOWARD CULTURAL CONCEPTS THAN TOWARD PRACTICAL SITUATIONS. THIS APPROACH AIMS AT A COMPROMISE BETWEEN PURELY STRUCTURAL AND PURELY CULTURAL ORIENTATION. TAPE RECORDINGS HAVE BEEN PREPARED OF THE MATERIALS IN THIS BOOK WITH THE EXCEPTION OF THE EXPLANATORY SECTIONS AND TRANSLATION DRILLS. THIS BOOK HAS BEEN PLANNED TO BE USED IN CONJUNCTION WITH THOSE RECORDINGS. EARLY LESSONS PLACE MUCH STRESS ON INTONATION WHICH MUST BE HEARD TO BE UNDERSTOOD. PATTERN DRILLS OF ENGLISH TO BENGALI ARE GIVEN IN THE TEXT, BUT BENGALI TO ENGLISH DRILLS WERE LEFT TO THE CLASSROOM INSTRUCTOR TO PREPARE. SUCH DRILLS WERE INCLUDED, HOWEVER, ON THE TAPES.

KPMG FICCI 2013, 2014 and 2015 – TV 16

#shootingforthestars: FICCI-KPMG Indian Media and Entertainment Industry Report 2015. kpmg.com/in ficci-frames.com We would like to thank all those who have contributed and shared their valuable domain insights in helping us put this report together. Images Courtesy: 9X Media Pvt.Ltd., Phoebus Media, Accel Animation Studios, Prime Focus Ltd., Adlabs Imagica, Redchillies VFX, Anibrain, Reliance Mediaworks Ltd., Baweja Movies, Shemaroo, Bhasinsoft, Shobiz Experential Communications Pvt.Ltd., Disney India, Showcraft Productions, DQ Limited, Star India Pvt. Ltd., Eros International Plc., Teamwork-Arts, Fox Star Studios, Technicolour India, Graphiti Multimedia Pvt.Ltd., Turner International India Ltd., Greengold Animation Pvt.Ltd, UTV Motion Pictures, KidZania, Viacom 18 Media Pvt.Ltd., Madmax, Wonderla Holidays, Maya Digital Studios, Yash Raj Films, Multiscreen Media Pvt.Ltd., Zee Entertainment Enterprises Ltd., National Film Development Corporation of India. © 2015 KPMG, an Indian Registered Partnership and a member firm of the KPMG network of independent member firms affiliated with KPMG International Cooperative (“KPMG International”), a Swiss entity. All rights reserved. Foreword: Making India the global entertainment superpower. 2014 has been a turning point for the media and entertainment industry in India in many ways.

GLOBAL HUMANITIES Year 6, Vol

GLOBAL HUMANITIES, Year 6, Vol. 8, 2021, ISSN 2199-3939. Biannual Journal. Identity and Nationhood. Editors: Frank Jacob (Nord Universitet, Norway) and Francesco Mangiapane (University of Palermo, Italy). Editorial by Frank Jacob and Francesco Mangiapane. Texts by Amrita De, Sophie Gueudet, Frank Jacob, Udi Lebel and Zeev Drori. edizioni Museo Pasqualino, direttore Rosario Perricone. Scientific Board: Jessica Achberger (University of Lusaka, Zambia), Giuditta Bassano (IULM University, Milano, Italy), Saheed Aderinto (Western Carolina University, USA), Bruce E. Bechtol, Jr. (Angelo State University, USA), Stephan Köhn (Cologne University, Germany), Dario Mangano (University of Palermo, Italy), Gianfranco Marrone (University of Palermo, Italy), Tiziana Migliore (University of Urbino, Italy), Sabine Müller (Marburg University, Germany), Rosario Perricone (University of Palermo, Italy). https://doi.org/10.53123/GH_8_5 Masculinities in Digital India: Trolls and Mediated Affect. Amrita De, SUNY Binghamton, [email protected] Abstract. This article analyzes the proliferation of post-2014 social media trolling in India assessing how a pre-planned virtually mediated affective deployment produces physical ramifications in real spaces. I first unpack Indian Prime Minister Narendra Modi’s hyper-masculine social media figuration; then study the generative impact of brand Modi Masculinity through a processual affective rendering regulated by hired social media influencers and digital media strategists.

View, He Set the Standards of Debate in Parliament

Exclusive Focus. “We have set out with a pledge, offering up body and mind; we are determined to make a sun rise, to climb higher than the sky, to build a new India, to build a new India.” - Narendra Modi. The Prime Minister’s address on the occasion of the 72nd Independence Day from the ramparts of the historic Red Fort laid out the great pace with which India has transformed over the last 4 years. Through mentioning developments of several government initiatives, the government led by PM Modi has moved significantly from ‘policy paralysis’ to a ‘reform, perform and transform’ approach. Some of the key highlights of the speech include: 1. The Constitution of India given to us by Dr. Babasaheb Ambedkar has spoken about justice for all. The recently concluded Parliament session was one devoted to social justice. The Parliament session witnessed the passage of the bill to create an OBC Commission. 2. Women officers commissioned in short service will get opportunity for permanent commission like their male counterparts. 3. The practice of Triple Talaq has caused great injustice among women. I ensure the Muslim sister that I will work to ensure justice is done to them. 4. If we had continued at the same pace at which toilets were being built in 2013, the pace at which electrification was happening in 2013, then it would have taken us decades to complete them. The demand for MSP was pending for years. Dial 1922 to hear PM Narendra Modi’s MANN KI BAAT. www.mygov.in https://transformingindia.mygov.in #Transformingindia

'Mann Ki Baat 2.0' on 30.08.2020

Press Information Bureau, Prime Minister's Office. English rendering of PM’s address in the 15th Episode of ‘Mann Ki Baat 2.0’ on 30.08.2020. Posted On: 30 AUG 2020 11:33AM by PIB Delhi. My dear countrymen, Namaskar. Generally, this period is full of festivals; fairs are held at various places; it’s the time for religious ceremonies too. During these times of Corona crisis, on the one hand people are full of exaltation and enthusiasm too; and yet in a way there’s also a discipline that touches our heart. Broadly speaking in a way, there is a feeling of responsibility amongst citizens. People are getting along with their day to day tasks, while taking care of themselves and others as well. At every event being organised in the country, the kind of patience and simplicity being witnessed this time is unprecedented. Even Ganeshotsav is being celebrated online at certain places; at most places eco-friendly Ganesh idols have been installed. Friends, if we observe very minutely, one thing will certainly draw our attention: our festivals and the environment. There has always been a deep connect between the two. On the one hand, our festivals implicitly convey the message of co-existence with the environment and nature; on the other, many festivals are celebrated precisely for protecting nature. For example, in West Champaran district of Bihar, people belonging to Thaaru tribal community have been observing a sixty-hour lockdown for centuries... in their own words, ‘Saath ghante ke Barna’. The Thaaru community has adopted BARNA as a tradition for protection of nature; that too for centuries.

Urdu Literature in Pakistan: a Site for Alternative Visions and Dissent

Christina Oesterheld. Urdu Literature in Pakistan: A Site for Alternative Visions and Dissent. Iqbal’s Idea of a Muslim Homeland: Vision and Reality. To a great extent Pakistan owes its existence to the vision of one of the greatest Urdu poets, ʿAllāma Muḥammad Iqbāl (1877–1938). Not only had Iqbāl expressed the idea of a separate homeland for South Asian Muslims, he had also formulated his vision of the ideal Muslim state and the ideal Muslim. These ideals are at the core of his poetry in Urdu and Persian. Iqbāl didn’t live to see the creation of the independent state of Pakistan and was thus unable to exert any influence on what became of his vision. Nevertheless, he has been celebrated as Pakistan’s national poet and as the spiritual father of the nation ever since the adoption of the Pakistan Resolution by the All-India Muslim League in 1940. In Fateḥ Muḥammad Malik’s words: “In Pakistan Iqbāl is universally quoted, day in and day out from the highest to the lowest. His poetry is recited from the cradle to the corridors of power, from the elementary school to the parliament house” (1999a, 90). Anybody dealing with Pakistan is sooner or later exposed to this official version of Iqbāl. Presumably much less known is the fact that soon afterwards, Pakistani writers and poets started to question the legitimacy of this appropriation of Iqbāl by representatives of the Pakistani state, by educational institutions and the official media. Several Urdu poets wrote parodies of his famous poems, not to parody Iqbāl’s poetry as such but to highlight the wide gulf between words and deeds, between the proclaimed ideals and Pakistani reality.

Reviving Public Service Broadcasting for Governance: a Case Study in India

NO. 2 VOL. 2 / JUNE 2017. http://dx.doi.org/10.222.99/arpap/2017.15 Reviving Public Service Broadcasting for Governance: A Case Study in India. MEGHANA HR. Received: 12 March 2017. Accepted: 05 April 2017. Published: 14 June 2017. Corresponding author: Meghana HR, Research Scholar under the guidance of Dr. H.K. Mariswamy, Bangalore University, India. Email: meghs.down2earth@gmail.com. ABSTRACT: In the age of digitalization, radio and television are regarded as traditional media. With the popularity of the internet, traditional media are perceived as passé. This perception holds especially for public broadcasting media. The public broadcasting media in India have faced a serious threat from the private sector ever since privatization policies were introduced in the early 1990s. Private radio stations and television channels offer an element of entertainment that public broadcasting media severely lacked. Doordarshan (DD) and All India Radio (AIR), which are part of the broadcasting media in India, have recently been trying to regain their lost sheen through more engaging programmes. One such effort is the programme ‘Mann Ki Baat’, an initiative by Prime Minister Narendra Modi. The programme aims at delivering the voice of the Prime Minister to the public and also gathers the concerns of the masses. At a time when private television and radio stations have succeeded in luring large audiences in India, a government-initiated programme, Mann Ki Baat, has made a difference in restoring the lost charm of public broadcasting media in India. This paper intends to explore the case study of the ‘Mann Ki Baat’ programme as a prolific initiative in generating revenue for public broadcasting media and also in accenting the ideas of the government.

Dr. Gurpreet Singh Lehal

CURRICULUM VITAE. Gurpreet Singh Lehal. Professor, Computer Science Department, Punjabi University, Patiala. Director, Research Centre for Punjabi Language, Literature and Culture, Punjabi University, Patiala. Degrees: B.Sc. (Maths Hons.), Punjab University, Chandigarh; M.Sc. (Maths Hons.), Punjab University, Chandigarh; M.E. (Computer Science), Thapar Institute of Engineering & Technology, Patiala; PhD (Computer Science), Punjabi University, Patiala. Employment: 1988-1995, Systems Analyst/Research Engineer, Thapar Corporate Research & Development Centre, Patiala; 1995-2000, Lecturer in Department of Computer Science and Engineering, Punjabi University, Patiala; 2000-2003, Assistant Professor in Department of Computer Science and Engineering, Thapar Institute of Engineering & Technology, Patiala; from 2003, Professor in Department of Computer Science and Engineering, Punjabi University. Administrative Experience: 1. Dean, College Development Council, Punjabi University Patiala (from 2021). 2. Director, Centre for Artificial Intelligence and Data Science (from 2018). 3. Director, Research Centre for Punjabi Language, Literature and Culture, Punjabi University Patiala (from 2004). 4. Dean, Faculty of Computing Sciences, Punjabi University Patiala (2016-2018). 5. Nominated Director, State Bank of Patiala (2014-2017). 6. Dean, Faculty of Physical Sciences, Punjabi University Patiala (2013-2015). 7. Head, Department of Computer Science, Punjabi University Patiala (2006-2009). 8. Warden, Boys Hostel No. 7, Punjabi University Patiala (1997-1999). Member of International/National Committees: Member, ICANN (Internet Corporation for Assigned Names and Numbers) India Universal Acceptance Local Initiative; Member, Panel on Character set for Indian Languages for Mobile phones, Bureau of Indian Standards; Member, ICANN (Internet Corporation for Assigned Names and Numbers) Neo-Brahmi Generation Panel for Gurmukhi Script; Member, Committee for development of Script Grammar for Punjabi, Ministry of Communications and Information Technology, Government of India.