A Deep Learning Approach to Galaxy Cluster X-Ray Masses Michelle Ntampaka1, 2, J

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Data Science Initiative 1

Data Science Initiative. Director: Sohini Ramachandran. Director of Graduate Studies: Samuel S. Watson.

Brown University's Data Science Initiative serves as a campus hub for research and education in data science. Engaging partners across campus and beyond, the DSI's mission is to facilitate and conduct both domain-driven and fundamental research in data science, increase data fluency and educate the next generation of data scientists, and ultimately explore the impact of the data revolution on culture, society, and social justice. We envision our role in the university and beyond as something to build over time, with the flexibility to meet the changing needs of Brown’s students and research community.

The Master’s Program in Data Science (Master of Science, ScM) prepares students from a wide range of disciplinary backgrounds for distinctive careers in data science.

3 credits in data and computational science, 1 credit in societal implications and opportunities, 1 elective credit to be drawn from a wide range of focused applications or deeper theoretical exploration, and 1 credit capstone experience. We also offer an option as a 5th-Year Master's Program if you are an undergraduate at Brown. This allows you to substitute maximally 2 credits with courses you have already taken.

Master of Science in Data Science. Semester I: DATA 1010 Probability, Statistics, and Machine Learning (2 credits); DATA 1030 Hands-on Data Science (1 credit); DATA 1050 Data Engineering (1 credit). Semester II: DATA 2020 Statistical Learning (1 credit); DATA 2040 Deep Learning and Special Topics in Data Science (1 credit); DATA 2080 Data and Society (1 credit).

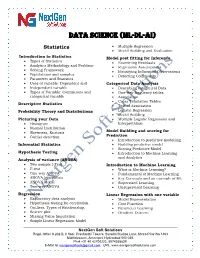

Data Science (ML-DL-Ai)

Data science (ML-DL-ai) Statistics Multiple Regression Model Building and Evaluation Introduction to Statistics Model post fitting for Inference Types of Statistics Examining Residuals Analytics Methodology and Problem- Regression Assumptions Solving Framework Identifying Influential Observations Populations and samples Detecting Collinearity Parameter and Statistics Uses of variable: Dependent and Categorical Data Analysis Independent variable Describing categorical Data Types of Variable: Continuous and One-way frequency tables categorical variable Association Cross Tabulation Tables Descriptive Statistics Test of Association Probability Theory and Distributions Logistic Regression Model Building Picturing your Data Multiple Logistic Regression and Histogram Interpretation Normal Distribution Skewness, Kurtosis Model Building and scoring for Outlier detection Prediction Introduction to predictive modelling Inferential Statistics Building predictive model Scoring Predictive Model Hypothesis Testing Introduction to Machine Learning and Analytics Analysis of variance (ANOVA) Two sample t-Test Introduction to Machine Learning F-test What is Machine Learning? One-way ANOVA Fundamental of Machine Learning ANOVA hypothesis Key Concepts and an example of ML ANOVA Model Supervised Learning Two-way ANOVA Unsupervised Learning Regression Linear Regression with one variable Exploratory data analysis Model Representation Hypothesis testing for correlation Cost Function Outliers, Types of Relationship, -

Data Science: the Big Picture Data Science with R Exploratory Data Analysis with R Data Visualization with R (3-Part)

Deep Learning: The Future of AI. @MatthewRenze #DevoxxUK. Job postings for machine learning (source: Indeed.com). Average salary by job type (USA): $108,000, $101,000, $100,000 (source: Stack Overflow 2017). What is deep learning? What can it do for me? How do I get started? Deep learning: artificial intelligence, machine learning, neural network, multiple hidden layers, hierarchical representations, makes predictions with data. Artificial intelligence: explicit programming, encoding domain knowledge, statistical patterns detection. Machine learning: a function $f(x)$ maps data to a prediction (for example, "Is cat?" gives "Yes"). Artificial neuron: inputs $x_1, x_2, x_3$, weights $\omega_0, \omega_1, \omega_2, \omega_3$, a summation $\Sigma$, an activation $\varphi$, and an output $y$, with $y_k = \varphi\left(\sum_{j=0}^{m} w_{kj} x_j\right)$. Artificial neural network: input, hidden, and output layers; forward propagation; backward propagation.

Explainable Deep Learning Models in Medical Image Analysis

Journal of Imaging Review Explainable Deep Learning Models in Medical Image Analysis Amitojdeep Singh 1,2,* , Sourya Sengupta 1,2 and Vasudevan Lakshminarayanan 1,2 1 Theoretical and Experimental Epistemology Laboratory, School of Optometry and Vision Science, University of Waterloo, Waterloo, ON N2L 3G1, Canada; [email protected] (S.S.); [email protected] (V.L.) 2 Department of Systems Design Engineering, University of Waterloo, Waterloo, ON N2L 3G1, Canada * Correspondence: [email protected] Received: 28 May 2020; Accepted: 17 June 2020; Published: 20 June 2020 Abstract: Deep learning methods have been very effective for a variety of medical diagnostic tasks and have even outperformed human experts on some of those. However, the black-box nature of the algorithms has restricted their clinical use. Recent explainability studies aim to show the features that influence the decision of a model the most. The majority of literature reviews of this area have focused on taxonomy, ethics, and the need for explanations. A review of the current applications of explainable deep learning for different medical imaging tasks is presented here. The various approaches, challenges for clinical deployment, and the areas requiring further research are discussed here from a practical standpoint of a deep learning researcher designing a system for the clinical end-users. Keywords: explainability; explainable AI; XAI; deep learning; medical imaging; diagnosis 1. Introduction Computer-aided diagnostics (CAD) using artificial intelligence (AI) provides a promising way to make the diagnosis process more efficient and available to the masses. Deep learning is the leading artificial intelligence (AI) method for a wide range of tasks including medical imaging problems. -

Reinforcement Learning Data Science Africa 2018 Abuja, Nigeria (12 Nov - 16 Nov 2018)

Reinforcement Learning Data Science Africa 2018 Abuja, Nigeria (12 Nov - 16 Nov 2018) Chika Yinka-Banjo, PhD, University of Lagos, Nigeria; Ayorkor Korsah, PhD, Ashesi University, Ghana. Outline • Introduction to machine learning • Reinforcement learning definitions • Example reinforcement learning problems • The Markov decision process • The optimal policy • Value function & Q-value function • Bellman equation • Q-learning • Building a simple Q-learning agent (coding) • Recap • Where to go from here? Introduction to machine learning • Artificial Intelligence (AI) is the study and design of intelligent agents. • An intelligent agent can perceive its environment through sensors and act on its environment through actuators. • E.g. Agent: humanoid robot • Environment: Earth? • Sensors: camera, tactile sensor, etc. • Actuators: motors, grippers, etc. • Machine learning is a subfield of Artificial Intelligence. Introduction to machine learning • Machine learning techniques learn from data without being explicitly programmed to do so. • Machine learning models enable the agent to learn from its own experience by extracting useful information from feedback from its environment. • Three types of learning feedback: • Supervised learning • Unsupervised learning • Reinforcement learning. Supervised learning • Supervised learning: the machine learning model is trained on many labelled examples of input-output pairs, • such that when presented with a novel input, the model can accurately estimate what the correct output should be. • Data (x, y): x is input data, y is label • Goal: learn a function to map x -> y • Examples include classification, regression, object detection, image captioning, etc. Unsupervised learning • Unsupervised learning: here the model extracts useful information from unlabeled and unstructured data.

An Artificial Neural Networks Primer with Financial Applications Examples in Financial Distress Predictions and Foreign Exchange Hybrid Trading System

An Artificial Neural Networks Primer with Financial Applications Examples in Financial Distress Predictions and Foreign Exchange Hybrid Trading System, by Dr Clarence N W Tan, PhD. Bachelor of Science in Electrical Engineering Computers (1986), University of Southern California, Los Angeles, California, USA; Master of Science in Industrial and Systems Engineering (1989), University of Southern California, Los Angeles, California, USA; Masters of Business Administration (1989), University of Southern California, Los Angeles, California, USA; Graduate Diploma in Applied Finance and Investment (1996), Securities Institute of Australia; Diploma in Technical Analysis (1996), Australian Technical Analysts Association; Doctor of Philosophy, Bond University (1997). URL: http://w3.to/ctan/ E-mail: [email protected] School of Information Technology, Bond University, Gold Coast, QLD 4229, Australia. Table of Contents: 1. INTRODUCTION TO ARTIFICIAL INTELLIGENCE AND ARTIFICIAL NEURAL NETWORKS; 1.1 INTRODUCTION; 1.2 ARTIFICIAL INTELLIGENCE; 1.3 ARTIFICIAL INTELLIGENCE IN FINANCE; 1.3.1 Expert System

Lecture 23: Recurrent Neural Network [SCS4049-02] Machine Learning and Data Science

Lecture 23: Recurrent Neural Network [SCS4049-02] Machine Learning and Data Science. Seongsik Park ([email protected]), AI Department, Dongguk University.

Tentative schedule (week: topic, dates Wed / Mon). Week 1: Machine Learning Introduction & Basic Mathematics (09.02 / 09.07). Week 2: Python Practice I & Regression (09.09 / 09.14). Week 3: AI Department Seminar I & Clustering I (09.16 / 09.21). Week 4: Clustering II & Classification I (09.23 / 09.28). Week 5: Classification II (Chuseok holiday / 10.05). Week 6: Python Practice II & Support Vector Machine I (10.07 / 10.12). Week 7: Support Vector Machine II & Decision Tree and Ensemble Learning (10.14 / 10.19). Week 8: Mid-Term Practice & Mid-Term Exam (10.21 / 10.26). Week 9: No class & Dimensional Reduction I (10.28 / 11.02). Week 10: Dimensional Reduction II & Neural Networks and Back Propagation I (11.04 / 11.09). Week 11: Neural Networks and Back Propagation II & III (11.11 / 11.16). Week 12: AI Department Seminar II & Convolutional Neural Network (11.18 / 11.23). Week 13: Python Practice III & Recurrent Neural Network (11.25 / 11.30). Week 14: Model Optimization & Autoencoders (12.02 / 12.07). Week 15: Final exam (no class / 12.14).

Introduction of recurrent neural network (RNN) • Rumelhart et al., Learning Internal Representations by Error Propagation (1986) • Specialized for processing sequences such as $\{x^{(1)}, \dots, x^{(t-1)}, x^{(t)}, x^{(t+1)}, \dots, x^{(N)}\}$ • RNNs can process long sequences, including sequences of variable length • Parameter sharing: parameters are shared across the entire sequence • Can't CNNs handle sequences? • 1-D convolution along the time axis ⇒ a time-delay neural network is also possible, but it is shallow • RNNs allow deep structures • Data type • In general, $x_t$ is time-series (temporal) data • Here $t = 1, 2, \dots, N$ is the time step, or the position within the sequence • The total length $N$ is variable

Recurrent Neurons. Up to now we have mostly looked at feedforward neural networks, where the activations flow only in one direction, from the input layer to the output layer (except for a few networks in Appendix E).

Budgeted Reinforcement Learning in Continuous State Space

Budgeted Reinforcement Learning in Continuous State Space. Nicolas Carrara∗ (SequeL team, INRIA Lille – Nord Europe†, [email protected]); Edouard Leurent∗ (SequeL team, INRIA Lille – Nord Europe†; Renault Group, France, [email protected]); Romain Laroche (Microsoft Research, Montreal, Canada, [email protected]); Tanguy Urvoy (Orange Labs, Lannion, France, [email protected]); Odalric-Ambrym Maillard (SequeL team, INRIA Lille – Nord Europe, [email protected]); Olivier Pietquin (Google Research - Brain Team; SequeL team, INRIA Lille – Nord Europe†, [email protected]).

Abstract. A Budgeted Markov Decision Process (BMDP) is an extension of a Markov Decision Process to critical applications requiring safety constraints. It relies on a notion of risk implemented in the shape of a cost signal constrained to lie below an (adjustable) threshold. So far, BMDPs could only be solved in the case of finite state spaces with known dynamics. This work extends the state-of-the-art to continuous spaces environments and unknown dynamics. We show that the solution to a BMDP is a fixed point of a novel Budgeted Bellman Optimality operator. This observation allows us to introduce natural extensions of Deep Reinforcement Learning algorithms to address large-scale BMDPs. We validate our approach on two simulated applications: spoken dialogue and autonomous driving.

1 Introduction. Reinforcement Learning (RL) is a general framework for decision-making under uncertainty. It frames the learning objective as the optimal control of a Markov Decision Process $(\mathcal{S}, \mathcal{A}, P, R_r, \gamma)$ with measurable state space $\mathcal{S}$, discrete actions $\mathcal{A}$, unknown rewards $R_r \in \mathbb{R}^{\mathcal{S} \times \mathcal{A}}$, and unknown dynamics $P \in \mathcal{M}(\mathcal{S})^{\mathcal{S} \times \mathcal{A}}$, where $\mathcal{M}(\mathcal{X})$ denotes the probability measures over a set $\mathcal{X}$.

Jes Ford Senior Data Scientist

Jes Ford, Senior Data Scientist.

Contact: [email protected]; linkedin.com/in/jesford; 206.446.6874; website: jesford.github.io; GitHub: @jesford.

Profile: Experienced Data Scientist with a passion for applied machine learning and open source software. I bring a practical product driven mindset, an obsession with best practices, and a demonstrated ability to collaborate effectively across teams to achieve goals. I am a regular conference speaker at events including PyCon, PluralsightLIVE, and VisInPractice.

Experience: 2018–current, Recursion, Salt Lake City, UT. Senior Data Scientist, 11/2019–current; Data Scientist, 4/2018–11/2019. • Trained, optimized, and benchmarked deep neural networks (CNNs and graph NNs) to solve problems in drug discovery. • Technical lead on teams that productionized the first machine learning models at Recursion, responsible for directing a small team of data scientists, and providing regular updates to VPs. • Model interpretability including saliency maps, GradCAM, DeepDream. • Pioneered use of MCMC for Bayesian parameter fitting of experimental data, and developed new visualizations to inform biologists. • Active Learning for improving class imbalance & minimizing model bias. • Owned creation of cleaned & standardized labelsets from noisy data, enabling comparison of ML models across data science teams. • Designed & built custom Google Cloud database for storing ML models, metrics, labels, and predictions; created resources for data science users. • Led data science team to adopt software best practices for reproducible research and clean production quality code.

Tech skills: Python (pandas, numpy, scipy, pytorch, keras, xgboost, scikit-learn, jupyter, seaborn, matplotlib, prefect, dash, plotly); Git & GitHub; Bash; SQL; C/C++; Travis-CI; AWS & GCP; Sphinx; HTML/CSS.

The Essentials of Data Analytics and Machine Learning

Introduction The Essentials of Data Analytics and Machine Learning [A guide for anyone who wants to learn practical machine learning using R] Author: Dr. Mike Ashcroft Editor: Ali Syed This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/4.0/. ® 2016 Dr. Michael Ashcroft and Persontyle Limited Essentials of Data Analytics and Machine Learning 1 INTRODUCTION Module 1 Practical introduction to machine learning and predictive models, and it is intended to serve as a fundamental resource for advanced data scientists. You will develop an applied understanding of the principles of machine learning and be able to develop practical solutions using predictive models. Introduction Machine Learning for Data Science and Analytics This is a guide on practical machine learning, and it is intended to serve as a fundamental resource for advanced data scientists. But what does that mean? One problem in explaining such a sentence is that the domain of modern data analytics is plagued by a large number of near-synonyms, most colored by assumptions, associations and prejudices. Data science, data analytics, statistics, machine learning, data mining, information mining, pattern recognition, artificial intelligence, etc. Let us define our terms! By data science we understand the general modern data analytics area. It is dominated by the application of advanced statistical techniques to copious data using powerful computers. The datasets worked with may be large or small, but the ability to get data on any and all topics is a defining feature of the age that would have made the statisticians of previous eras green with jealousy.

Democratizing Data Science and Predictive Analytics

IDG Quick Poll * DATA MINING Democratizing Data Science and Predictive Analytics. New research identifies business initiatives to extend the benefits of data mining, sciences, machine learning, and predictive analytics, and the challenges that companies are facing with these plans.

BUSINESS INSIGHTS AND DECISION-MAKING have been supercharged in recent years by the combination of huge data collections and sophisticated “big data” analytics tools. All too often, however, cutting-edge data mining and business intelligence (BI) capabilities remain sequestered within organizations. Many companies have found these data analysis tools too costly, too complex, and/or too specialized to distribute to all of the potential users throughout their various business units. Instead, data mining and BI have been restricted to small teams of business analysts and finance professionals, who use the tools for broad strategic purposes that often overlook more tactical uses and needs. There are signs that this segregation is changing.

in part, because 78% of respondents say they are struggling to find the right data mining strategy or solution. Much of the difficulty in doing so stems from the wide range of data mining and BI tools available, and the many use cases to which they can be applied.

The complex data mining landscape. The data mining field is nothing if not diverse. Survey respondents identify nearly 30 different data mining use cases, with the average respondent selecting about 10 that were relevant to them. In fact, about half of the respondents identified five use cases: Analyze risk/risk management (cited by 51%); Cybersecurity (event detection, analysis,

Sidebar: A new survey into data mining practices and requirements has found that 92% of respondents want to deploy

Optimal Path Routing Using Reinforcement Learning

OPTIMAL PATH ROUTING USING REINFORCEMENT LEARNING Rajasekhar Nannapaneni Sr Principal Engineer, Solutions Architect Dell EMC [email protected] Knowledge Sharing Article © 2020 Dell Inc. or its subsidiaries. The Dell Technologies Proven Professional Certification program validates a wide range of skills and competencies across multiple technologies and products. From Associate, entry-level courses to Expert-level, experience-based exams, all professionals in or looking to begin a career in IT benefit from industry-leading training and certification paths from one of the world’s most trusted technology partners. Proven Professional certifications include: • Cloud • Converged/Hyperconverged Infrastructure • Data Protection • Data Science • Networking • Security • Servers • Storage • Enterprise Architect. Courses are offered to meet different learning styles and schedules, including self-paced On Demand, remote-based Virtual Instructor-Led and in-person Classrooms. Whether you are an experienced IT professional or just getting started, Dell Technologies Proven Professional certifications are designed to clearly signal proficiency to colleagues and employers. Learn more at www.dell.com/certification Abstract Optimal path management is key when applied to disk I/O or network I/O. The efficiency of a storage or a network system depends on optimal routing of I/O. To obtain an optimal path for an I/O between source and target nodes, an effective path finding mechanism among a set of given nodes is desired. In this article, a novel optimal path routing algorithm is developed using reinforcement learning techniques from AI. Reinforcement learning considers the given topology of nodes as the environment and leverages the given latency or distance between the nodes to determine the shortest path.