Convolutional Neural Networks (CNN) for Data Classification


Recommended publications


Data Science Initiative 1

Data Science Initiative. Director: Sohini Ramachandran. Director of Graduate Studies: Samuel S. Watson.

Brown University's Data Science Initiative (DSI) serves as a campus hub for research and education in data science. Engaging partners across campus and beyond, the DSI's mission is to facilitate and conduct both domain-driven and fundamental research in data science, increase data fluency and educate the next generation of data scientists, and ultimately explore the impact of the data revolution on culture, society, and social justice. We envision our role in the university and beyond as something to build over time, with the flexibility to meet the changing needs of Brown's students and research community. The Master's Program in Data Science (Master of Science, ScM) prepares students from a wide range of disciplinary backgrounds for distinctive careers in data science.

3 credits in data and computational science, 1 credit in societal implications and opportunities, 1 elective credit to be drawn from a wide range of focused applications or deeper theoretical exploration, and 1 credit capstone experience. We also offer an option as a fifth-year Master's program if you are an undergraduate at Brown. This allows you to substitute at most 2 credits with courses you have already taken.

Master of Science in Data Science
Semester I: DATA 1010 Probability, Statistics, and Machine Learning (2 credits); DATA 1030 Hands-on Data Science (1 credit); DATA 1050 Data Engineering (1 credit).
Semester II: DATA 2020 Statistical Learning (1 credit); DATA 2040 Deep Learning and Special Topics in Data Science (1 credit); DATA 2080 Data and Society (1 credit).

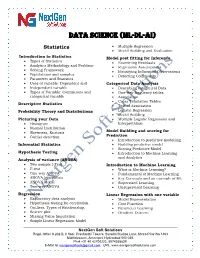

Data Science (ML-DL-Ai)

Data science (ML-DL-AI)

Statistics: Introduction to Statistics; Types of Statistics; Analytics Methodology and Problem-Solving Framework; Populations and samples; Parameter and Statistics; Uses of variable: Dependent and Independent variable; Types of Variable: Continuous and categorical variable; Descriptive Statistics; Probability Theory and Distributions; Picturing your Data; Histogram; Normal Distribution; Skewness, Kurtosis; Outlier detection; Inferential Statistics; Hypothesis Testing; Analysis of variance (ANOVA); Two sample t-Test; F-test; One-way ANOVA; ANOVA hypothesis; ANOVA Model; Two-way ANOVA; Regression; Exploratory data analysis; Hypothesis testing for correlation; Outliers, Types of Relationship,

Multiple Regression: Model Building and Evaluation; Model post fitting for Inference; Examining Residuals; Regression Assumptions; Identifying Influential Observations; Detecting Collinearity; Categorical Data Analysis; Describing categorical Data; One-way frequency tables; Association; Cross Tabulation Tables; Test of Association; Logistic Regression; Model Building; Multiple Logistic Regression and Interpretation; Model Building and scoring for Prediction; Introduction to predictive modelling; Building predictive model; Scoring Predictive Model; Introduction to Machine Learning and Analytics; Introduction to Machine Learning; What is Machine Learning?; Fundamental of Machine Learning; Key Concepts and an example of ML; Supervised Learning; Unsupervised Learning; Linear Regression with one variable; Model Representation; Cost Function

Data Science: the Big Picture Data Science with R Exploratory Data Analysis with R Data Visualization with R (3-Part)

Deep Learning: The Future of AI. @MatthewRenze #DevoxxUK

[Image labels: Human, Cat, Dog, Car]

Job Postings for Machine Learning (source: Indeed.com). Average Salary by Job Type (USA): $108,000, $101,000, $100,000 (source: Stack Overflow 2017).

What is deep learning? What can it do for me? How do I get started?

What is deep learning? Deep learning: artificial intelligence; machine learning; neural network; multiple hidden layers; hierarchical representations; makes predictions with data.

[Diagram: Artificial Intelligence, Machine Learning, Deep Learning]

Artificial intelligence: explicit programming; encoding domain knowledge; statistical patterns detection.

Machine learning: [Diagram: Artificial Intelligence, Machine Learning, Statistics]. f(x): Data, Function, Prediction. Example: Cat, Dog. Is cat? Yes.

Artificial neuron: inputs x1, x2, x3; weights ω0, ω1, ω2, ω3; summation Σ; activation φ; output y.

$$y_k = \varphi\left(\sum_{j=0}^{m} w_{kj} x_j\right)$$

Artificial neural network: input, hidden, and output layers; forward propagation; backward propagation.
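The artificial neuron formula in the slides above, y_k = φ(Σ w_kj x_j) for j = 0..m, can be sketched in a few lines of Python. This is a minimal illustration, not code from the talk: the function names are hypothetical, the sigmoid is just one possible choice of φ, and the sketch assumes the common convention that the j = 0 term is a bias weight paired with a constant input x_0 = 1.

```python
import math


def sigmoid(z):
    """One possible activation function (an assumption; the slides only name it φ)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(weights, inputs, activation=sigmoid):
    """Compute y = φ(sum_j w_j * x_j) for a single neuron.

    weights[0] is treated as the bias weight w_0 and is paired with a
    constant input x_0 = 1 (assumed convention); weights[1:] pair with inputs.
    """
    if len(weights) != len(inputs) + 1:
        raise ValueError("expected one weight per input plus one bias weight")
    x = [1.0] + list(inputs)                      # prepend x_0 = 1
    z = sum(w * xi for w, xi in zip(weights, x))  # weighted sum (the Σ node)
    return activation(z)                          # apply φ


if __name__ == "__main__":
    # Three inputs x1..x3 and four weights ω0..ω3, as in the slide diagram.
    print(neuron_output([0.1, 0.4, -0.2, 0.3], [1.0, 2.0, 3.0]))
```

Passing a different `activation` (for example, the identity function) shows that the weighted sum and the non-linearity are separate steps, which matches the Σ and φ nodes in the diagram.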

Explainable Deep Learning Models in Medical Image Analysis

Journal of Imaging Review Explainable Deep Learning Models in Medical Image Analysis Amitojdeep Singh 1,2,* , Sourya Sengupta 1,2 and Vasudevan Lakshminarayanan 1,2 1 Theoretical and Experimental Epistemology Laboratory, School of Optometry and Vision Science, University of Waterloo, Waterloo, ON N2L 3G1, Canada; [email protected] (S.S.); [email protected] (V.L.) 2 Department of Systems Design Engineering, University of Waterloo, Waterloo, ON N2L 3G1, Canada * Correspondence: [email protected] Received: 28 May 2020; Accepted: 17 June 2020; Published: 20 June 2020 Abstract: Deep learning methods have been very effective for a variety of medical diagnostic tasks and have even outperformed human experts on some of those. However, the black-box nature of the algorithms has restricted their clinical use. Recent explainability studies aim to show the features that influence the decision of a model the most. The majority of literature reviews of this area have focused on taxonomy, ethics, and the need for explanations. A review of the current applications of explainable deep learning for different medical imaging tasks is presented here. The various approaches, challenges for clinical deployment, and the areas requiring further research are discussed here from a practical standpoint of a deep learning researcher designing a system for the clinical end-users. Keywords: explainability; explainable AI; XAI; deep learning; medical imaging; diagnosis 1. Introduction Computer-aided diagnostics (CAD) using artificial intelligence (AI) provides a promising way to make the diagnosis process more efficient and available to the masses. Deep learning is the leading artificial intelligence (AI) method for a wide range of tasks including medical imaging problems. -

Architects of Intelligence: The Truth About AI from the People Building It

Martin Ford. Architects of Intelligence: The Truth About AI from the People Building It. For Xiaoxiao, Elaine, Colin, and Tristan.

Copyright © 2018 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

Acquisition Editors: Ben Renow-Clarke; Project Editor: Radhika Atitkar; Content Development Editor: Alex Sorrentino; Proofreader: Safis Editing; Presentation Designer: Sandip Tadge; Cover Designer: Clare Bowyer; Production Editor: Amit Ramadas; Marketing Manager: Rajveer Samra; Editorial Director: Dominic Shakeshaft.

First published: November 2018. Production reference: 2201118. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78913-151-2. www.packt.com

Contents: Introduction; A Brief Introduction to the Vocabulary of Artificial Intelligence; How AI Systems Learn; Yoshua Bengio; Stuart J.

Reinforcement Learning Data Science Africa 2018 Abuja, Nigeria (12 Nov - 16 Nov 2018)

Reinforcement Learning. Data Science Africa 2018, Abuja, Nigeria (12 Nov - 16 Nov 2018). Chika Yinka-Banjo, PhD, University of Lagos, Nigeria; Ayorkor Korsah, PhD, Ashesi University, Ghana.

Outline
• Introduction to Machine learning
• Reinforcement learning definitions
• Example reinforcement learning problems
• The Markov decision process
• The optimal policy
• Value function & Q-value function
• Bellman Equation
• Q-learning
• Building a simple Q-learning agent (coding)
• Recap
• Where to go from here?

Introduction to Machine learning
• Artificial Intelligence (AI) is the study and design of intelligent agents.
• An intelligent agent can perceive its environment through sensors and act on its environment through actuators.
• E.g. Agent: humanoid robot
• Environment: Earth?
• Sensors: camera, tactile sensor, etc.
• Actuators: motors, grippers, etc.
• Machine learning is a subfield of Artificial Intelligence.
• Machine learning techniques learn from data without being explicitly programmed to do so.
• Machine learning models enable the agent to learn from its own experience by extracting useful information from feedback from its environment.
• Three types of learning feedback: supervised learning, unsupervised learning, reinforcement learning.

Supervised learning
• Supervised learning: the machine learning model is trained on many labelled examples of input-output pairs, so that when presented with a novel input, the model can accurately estimate what the correct output should be.
• Data (x, y): x is the input data, y is the label.
• Goal: learn a function to map x -> y.
• Examples include classification, regression, object detection, image captioning, etc.

Unsupervised learning
• Unsupervised learning: here the model extracts useful information from unlabeled and unstructured data.

Keeping AI Legal

Vanderbilt Journal of Entertainment & Technology Law Volume 19 Issue 1 Issue 1 - Fall 2016 Article 5 2016 Keeping AI Legal Amitai Etzioni Oren Etzioni Follow this and additional works at: https://scholarship.law.vanderbilt.edu/jetlaw Part of the Computer Law Commons, and the Science and Technology Law Commons Recommended Citation Amitai Etzioni and Oren Etzioni, Keeping AI Legal, 19 Vanderbilt Journal of Entertainment and Technology Law 133 (2020) Available at: https://scholarship.law.vanderbilt.edu/jetlaw/vol19/iss1/5 This Article is brought to you for free and open access by Scholarship@Vanderbilt Law. It has been accepted for inclusion in Vanderbilt Journal of Entertainment & Technology Law by an authorized editor of Scholarship@Vanderbilt Law. For more information, please contact [email protected]. Keeping AI Legal Amitai Etzioni* and Oren Etzioni** ABSTRACT AI programs make numerous decisions on their own, lack transparency, and may change frequently. Hence, unassisted human agents, such as auditors, accountants, inspectors, and police, cannot ensure that AI-guided instruments will abide by the law. This Article suggests that human agents need the assistance of AI oversight programs that analyze and oversee operational AI programs. This Article asks whether operational AI programs should be programmed to enable human users to override them; without that, such a move would undermine the legal order. This Article also points out that AI operational programs provide high surveillance capacities and, therefore, are essential for protecting individual rights in the cyber age. This Article closes by discussing the argument that AI-guided instruments, like robots, lead to endangering much more than the legal order, that they may turn on their makers, or even destroy humanity.

AI Ignition Ignite Your AI Curiosity with Oren Etzioni AI for the Common Good

AI Ignition Ignite your AI curiosity with Oren Etzioni AI for the common good From Deloitte’s AI Institute, this is AI Ignition—a monthly chat about the human side of artificial intelligence with your host, Beena Ammanath. We will take a deep dive into the past, present, and future of AI, machine learning, neural networks, and other cutting-edge technologies. Here is your host, Beena. Beena Ammanath (Beena): Hello, my name is Beena Ammanath. I am the executive director of the Deloitte AI Institute, and today on AI Ignition we have Oren Etzioni, a professor, an entrepreneur, and the CEO of Allen Institute for AI. Oren has helped pioneer meta search, online comparison shopping, machine reading, and open information extraction. He was also named Seattle’s Geek of the Year in 2013. Welcome to the show, Oren. I am so excited to have you on our AI Ignition show today. How are you doing? Oren Etzioni (Oren): I am doing as well as anyone can be doing in these times of COVID and counting down the days till I get a vaccine. Beena: Yeah, and how have you been keeping yourself busy during the pandemic? Oren: Well, we are fortunate that AI is basically software with a little sideline into robotics, and so despite working from home, we have been able to stay productive and engaged. It’s just a little bit less fun because one of the things I like to say about AI is that it’s still 99% human intelligence, and so obviously the human interactions are stilted. -

An Artificial Neural Networks Primer with Financial Applications Examples in Financial Distress Predictions and Foreign Exchange Hybrid Trading System

'An Artificial Neural Networks Primer with Financial Applications Examples in Financial Distress Predictions and Foreign Exchange Hybrid Trading System' by Dr Clarence N W Tan, PhD

Bachelor of Science in Electrical Engineering Computers (1986), University of Southern California, Los Angeles, California, USA
Master of Science in Industrial and Systems Engineering (1989), University of Southern California, Los Angeles, California, USA
Masters of Business Administration (1989), University of Southern California, Los Angeles, California, USA
Graduate Diploma in Applied Finance and Investment (1996), Securities Institute of Australia
Diploma in Technical Analysis (1996), Australian Technical Analysts Association
Doctor of Philosophy, Bond University (1997)

URL: http://w3.to/ctan/ E-mail: [email protected] School of Information Technology, Bond University, Gold Coast, QLD 4229, Australia

Table of Contents
1. INTRODUCTION TO ARTIFICIAL INTELLIGENCE AND ARTIFICIAL NEURAL NETWORKS
1.1 INTRODUCTION
1.2 ARTIFICIAL INTELLIGENCE
1.3 ARTIFICIAL INTELLIGENCE IN FINANCE
1.3.1 Expert System


Lecture 23: Recurrent Neural Network [SCS4049-02] Machine Learning and Data Science

Lecture 23: Recurrent Neural Network. [SCS4049-02] Machine Learning and Data Science. Seongsik Park ([email protected]), AI Department, Dongguk University.

Tentative schedule (week: topic, dates Wed / Mon)
1: Machine Learning Introduction & Basic Mathematics (09.02 / 09.07)
2: Python Practice I & Regression (09.09 / 09.14)
3: AI Department Seminar I & Clustering I (09.16 / 09.21)
4: Clustering II & Classification I (09.23 / 09.28)
5: Classification II (Chuseok holiday / 10.05)
6: Python Practice II & Support Vector Machine I (10.07 / 10.12)
7: Support Vector Machine II & Decision Tree and Ensemble Learning (10.14 / 10.19)
8: Mid-Term Practice & Mid-Term Exam (10.21 / 10.26)
9: No class & Dimensional Reduction I (10.28 / 11.02)
10: Dimensional Reduction II & Neural networks and Back Propagation I (11.04 / 11.09)
11: Neural networks and Back Propagation II & III (11.11 / 11.16)
12: AI Department Seminar II & Convolutional Neural Network (11.18 / 11.23)
13: Python Practice III & Recurrent Neural network (11.25 / 11.30)
14: Model Optimization & Autoencoders (12.02 / 12.07)
15: Final exam (no class / 12.14)

Introduction of recurrent neural network (RNN)
• Rumelhart et al., Learning Internal Representations by Error Propagation (1986)
• Specialized for processing sequences such as $\{x^{(1)}, \ldots, x^{(t-1)}, x^{(t)}, x^{(t+1)}, \ldots, x^{(N)}\}$
• RNNs can process long sequences, including sequences of variable length
• Parameter sharing: the same parameters are shared across the entire sequence
• Can't CNNs handle sequences?
• A 1-D convolution along the time axis (a time-delay neural network) is also possible, but it is shallow
• RNNs allow deep structures
• Data type
• In general, $x_t$ is time-series (temporal) data
• Here $t = 1, 2, \ldots, N$ is the time step, or the position within the sequence
• The total length $N$ is variable

Recurrent Neurons. Up to now we have mostly looked at feedforward neural networks, where the activations flow only in one direction, from the input layer to the output layer (except for a few networks in Appendix E).
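The parameter-sharing and variable-length points in the RNN slide above can be illustrated with a very small sketch. This is not code from the lecture: it assumes a scalar Elman-style update h_t = tanh(w_xh * x_t + w_hh * h_{t-1} + b_h), and the names `rnn_forward`, `w_xh`, `w_hh`, and `b_h` are hypothetical. The same three parameters are reused at every time step, so the function works for a sequence of any length N.

```python
import math


def rnn_forward(xs, w_xh, w_hh, b_h, h0=0.0):
    """Run a scalar recurrent cell over the sequence xs and return all hidden states.

    Assumed update rule (not taken from the slides):
        h_t = tanh(w_xh * x_t + w_hh * h_{t-1} + b_h)
    The parameters w_xh, w_hh, b_h are shared by every time step.
    """
    h = h0
    states = []
    for x_t in xs:  # one iteration per time step t = 1..N
        h = math.tanh(w_xh * x_t + w_hh * h + b_h)
        states.append(h)
    return states


if __name__ == "__main__":
    params = dict(w_xh=0.8, w_hh=0.3, b_h=0.1)
    # The same parameters handle sequences of different lengths.
    print(rnn_forward([0.5, -1.0, 2.0], **params))
    print(rnn_forward([0.5, -1.0, 2.0, 0.0, 1.5], **params))
```

Because each hidden state depends only on the inputs seen so far, the states for a prefix of a sequence match the first states of the full sequence. A feedforward network has no such carried-over state.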

Oren Etzioni, Phd: CEO of Allen Institute for AI

Behind the Tech Kevin Scott Podcast EP-26: Oren Etzioni, PhD: CEO of Allen Institute for AI [MUSIC] OREN ETZIONI: Whose responsibility is it? The responsibility and liability has to ultimately rest with a person. You can’t say, “Hey, you know, look, my car ran you over, it’s an AI car, I don’t know what it did, it’s not my fault, right?” You as the driver or maybe it’s the manufacturer if there’s some malfunction, but people have to be responsible for the behavior of the machines. [MUSIC] KEVIN SCOTT: Hi, everyone. Welcome to Behind the Tech. I'm your host, Kevin Scott, Chief Technology Officer for Microsoft. In this podcast, we're going to get behind the tech. We'll talk with some of the people who have made our modern tech world possible and understand what motivated them to create what they did. So, join me to maybe learn a little bit about the history of computing and get a few behind-the-scenes insights into what's happening today. Stick around. [MUSIC] CHRISTINA WARREN: Hello, and welcome to Behind the Tech. I’m Christina Warren, senior cloud advocate at Microsoft. KEVIN SCOTT: And I’m Kevin Scott. CHRISTINA WARREN: Today on the show, our guest is Oren Etzioni. Oren is a professor, entrepreneur, and is the chief executive officer for the Allen Institute for AI. So, Kevin, I’m guessing that you guys have already crossed paths in your professional pursuits. KEVIN SCOTT: Yeah, I’ve been lucky enough to know Oren for the past several years.

Budgeted Reinforcement Learning in Continuous State Space

Budgeted Reinforcement Learning in Continuous State Space

Nicolas Carrara∗ (SequeL team, INRIA Lille – Nord Europe†); Edouard Leurent∗ (SequeL team, INRIA Lille – Nord Europe†; Renault Group, France); Romain Laroche (Microsoft Research, Montreal, Canada); Tanguy Urvoy (Orange Labs, Lannion, France); Odalric-Ambrym Maillard (SequeL team, INRIA Lille – Nord Europe); Olivier Pietquin (Google Research - Brain Team; SequeL team, INRIA Lille – Nord Europe†)

Abstract. A Budgeted Markov Decision Process (BMDP) is an extension of a Markov Decision Process to critical applications requiring safety constraints. It relies on a notion of risk implemented in the shape of a cost signal constrained to lie below an adjustable threshold. So far, BMDPs could only be solved in the case of finite state spaces with known dynamics. This work extends the state-of-the-art to continuous spaces environments and unknown dynamics. We show that the solution to a BMDP is a fixed point of a novel Budgeted Bellman Optimality operator. This observation allows us to introduce natural extensions of Deep Reinforcement Learning algorithms to address large-scale BMDPs. We validate our approach on two simulated applications: spoken dialogue and autonomous driving.

1 Introduction. Reinforcement Learning (RL) is a general framework for decision-making under uncertainty. It frames the learning objective as the optimal control of a Markov Decision Process $(\mathcal{S}, \mathcal{A}, P, R_r, \gamma)$ with measurable state space $\mathcal{S}$, discrete actions $\mathcal{A}$, unknown rewards $R_r \in \mathbb{R}^{\mathcal{S} \times \mathcal{A}}$, and unknown dynamics $P \in \mathcal{M}(\mathcal{S})^{\mathcal{S} \times \mathcal{A}}$, where $\mathcal{M}(\mathcal{X})$ denotes the probability measures over a set $\mathcal{X}$.