Collective Cookbook: Best Practices in Visual Archiving Between News Organizations and Individual Collections

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


View Annual Report

2008 Annual Report ABOUT A. H. BELO CORPORATION A. H. Belo Corporation (NYSE: AHC) headquartered in Dallas, Texas, is a distinguished newspaper publishing and local news and information company that owns and operates four daily newspapers and a diverse group of Web sites. A. H. Belo publishes The Dallas Morning News, Texas’ leading newspaper and winner of eight Pulitzer Prizes since 1986; The Providence Journal, the oldest continuously-published daily newspaper in the U.S. and winner of four Pulitzer Prizes; The Press-Enterprise (Riverside, CA), serving southern California’s Inland Empire region and winner of one Pulitzer Prize; and the Denton Record-Chronicle. The Company publishes various specialty publications targeting niche audiences, and its partnerships and/or investments include the Yahoo! Newspaper Consortium and Classified Ventures, owner of cars.com. A. H. Belo also owns direct mail and commercial printing businesses. Dear Fellow Shareholders: A. H. Belo Corporation’s first year as a stand-alone publicly-held company was most notable for the dramatic differences in economic conditions versus what we anticipated when the Company was spun off from Belo Corp. on February 8, 2008. Planning for the spin-off, which began in May 2007, did not contemplate any scenario even remotely similar to what A. H. Belo and every other advertising-based company encountered as 2008 unfolded. I am proud of the ways in which the Company’s management and employees responded to these very difficult, unpredictable changes as we aggressively implemented strategies developed along with the Board of Directors. A. H. Belo’s strategy and the initiatives referenced above are detailed in a series of letters I have sent to shareholders and the Company’s employees since the spin-off, most recently prior to the release of the Company’s year-end financial results on February 17. -

PRESCRIPTION for PERIL How Insurance Fraud Finances Theft and Abuse of Addictive Prescription Drugs

PRESCRIPTION FOR PERIL How Insurance Fraud Finances Theft and Abuse of Addictive Prescription Drugs About the coalition... Founded in 1993, the Coalition Against Insurance Fraud is a national alliance of consumer groups, insurance companies and government agencies fighting all forms of insurance fraud through advocacy, public outreach and research. Visit www.InsuranceFraud.org. Table of Contents About this Report . 3 Executive Summary . 4 Introduction . 8 Impact on Insurers & Society . 12 Gathering Storm: Causes of Diversion Epidemic . 14 “Perfect Storm of Abuse” . 21 Insurance Impact and Risk Exposure . 32 Workers Compensation: High Impact . 45 Combating Drug Diversion . 47 Conclusion: Cost of Inaction . 55 Endnotes . 56 Appendix . 65 About this Report This report examines largely unreported and elusive aspects of the crime known as drug diversion: the role insurance fraud plays in financing this crime, and the high cost to health insurers and others. These victims include health and workers compensation insurers, employers, pharmacy benefit managers (PBMs) and taxpayer health insurance programs such as Medicare and Medicaid. -

News for Dallas, Texas | Arts & Entertainment

Dallas Morning News | News for Dallas, Texas | Arts & Entertainment History in the remaking BOOKS: 'Da Vinci Code' spurs debate about Christianity 06:04 PM CDT on Sunday, April 23, 2006 By CHARLES EALY / The Dallas Morning News The Da Vinci Code has become more than a record-breaking best-seller. It has spurred an unprecedented level of speculation about the history of Christianity. In fact, four Da Vinci imitators, all of them thrillers involving supposed conspiracy theories, are climbing the national hardcover best-seller charts. The big winners are Steve Berry's The Templar Legacy (No. 6), Javier Sierra's The Secret Supper (No. 8) and Raymond Khoury's The Last Templar (No. 15). Meanwhile, Kate Mosse's Labyrinth has been nibbling at the top 15, while The Da Vinci Code itself is No. 3 on the hardback list and No. 1 in paperback. And that's just in fiction. -

On Press Online

ON PRESS ONLINE Annual Report 1999 The Financial Chairman’s Belo Belo Belo Operating Corporate Financial Company Highlights Letter Properties Interactive Companies Information Information 1 2 3 8 12 16 30 31 Belo is one of the nation’s largest diversified media companies with a superior group of television broadcasting, newspaper publishing, cable news and interactive media assets. Belo enters the 21st Century with more than 157 years of experience in providing quality news and information to viewers and readers, and a long-standing commitment to community service and journalistic excellence. the company Belo is distinctively positioned as an industry leader in an ever-changing media environment. Committed to the standards of excellence that have shaped the Company’s achievements, Belo is focused on the future and the technological advancements that continue to make ours the most exciting business in the world today. 1 financial highlights Year ended December 31 (in thousands, except per share amounts) Revenues 1999 1998 1997 Broadcasting $ 598,637 (a) $ 593,426 $ 522,560 Newspaper Publishing 816,976 (b) 784,327 693,777 Interactive Media 6,520 3,214 718 Other 11,849 10,736 17,149 Net operating revenues $1,433,982 $1,391,703 $1,234,204 Earnings Broadcasting $ 143,200 (a) $ 143,751 (d) $ 136,715 Newspaper Publishing 176,985 (b) 138,289 (e) 154,612 Interactive Media (8,908) (2,778) (1,584) Other (7,726) (5,212) (9,237) Corporate Expenses (39,056) (40,965) (39,704) Earnings from operations $ 264,495 $ 233,085 $ 240,802 Operating Cash Flow -

District Department of Transportation Highway

DISTRICT DEPARTMENT OF TRANSPORTATION HIGHWAY SAFETY ANNUAL REPORT Anthony A. Williams Mayor Dan Tangherlini Carole A. Lewis, Chief Director Transportation Safety TABLE OF CONTENTS PLANNING & ADMINISTRATION 3 OCCUPANT PROTECTION DISTRICT DEPARTMENT OF TRANSPORTATION 10 METROPOLITAN POLICE DEPARTMENT 10 ASSOCIATES FOR RENEWED EDUCATION 17 GEORGE WASHINGTON UNIVERSITY 24 BICYCLE SAFETY WASHINGTON AREA BICYCLE ASSOCIATION 26 ALCOHOL COUNTERMEASURES WRAP 29 METROPOLITAN POLICE DEPARTMENT 39 YOUTH & ALCOHOL 41 POLICE TRAFFIC SERVICES, RED LIGHT ENFORCEMENT 43 PEDESTRIAN SAFETY METROPOLITAN POLICE DEPARTMENT 47 ROADWAY OPERATIONS PATROL/INCIDENT MANAGEMENT 52 CAMPAIGN SUMMARY REPORTS 58 2 PLANNING AND ADMINISTRATION DISTRICT DEPARTMENT OF TRANSPORTATION SECTION I: OVERVIEW INFORMATION The Highway Safety Office (Transportation Safety Division) is the focal point for highway safety issues in the District of Columbia. Along with the support of the Mayor’s Representative (Director, District Department of Transportation) the TSD provides leadership by developing, promoting, and coordinating programs; influencing public and private policy; and increasing public awareness of highway safety. Our partnerships include the Metropolitan Police Department, DC, Department of Motor Vehicles, Courts, and the University of the District of Columbia, hospitals, and private citizen organizations. The number of people killed in traffic crashes in the District of Columbia increased by four in 2005. This increase was due, in part, to speeding and to drivers disobeying traffic signals. There were 49 traffic fatalities during 2005, up from 45 in 2004. The Planning and Administration program area includes those activities and costs necessary for the overall management and operations of the District of Columbia’s Highway Safety Office. 
The Chief of the Transportation Safety Division is responsible for the DC Highway Safety Program, and participates in activities that impact DDOT’s highway safety program and policies. -

The Internet and Homegrown Jihadist Terrorism Assessing U.S. Detection Techniques

Calhoun: The NPS Institutional Archive DSpace Repository Theses and Dissertations 1. Thesis and Dissertation Collection, all items 2010-12 The internet and homegrown jihadist terrorism assessing U.S. detection techniques Banez, Justin D. Monterey, California. Naval Postgraduate School http://hdl.handle.net/10945/5027 Downloaded from NPS Archive: Calhoun NAVAL POSTGRADUATE SCHOOL MONTEREY, CALIFORNIA THESIS THE INTERNET AND HOMEGROWN JIHADIST TERRORISM: ASSESSING U.S. DETECTION TECHNIQUES by Justin D. Bañez December 2010 Thesis Advisor: Erik J. Dahl Second Reader: Sean F. Everton Approved for public release; distribution is unlimited THIS PAGE INTENTIONALLY LEFT BLANK REPORT DOCUMENTATION PAGE Form Approved OMB No. 0704-0188 Public reporting burden for this collection of information is estimated to average 1 hour per response, including the time for reviewing instruction, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden, to Washington headquarters Services, Directorate for Information Operations and Reports, 1215 Jefferson Davis Highway, Suite 1204, Arlington, VA 22202-4302, and to the Office of Management and Budget, Paperwork Reduction Project (0704-0188) Washington DC 20503. 1. AGENCY USE ONLY (Leave blank) 2. REPORT DATE 3. REPORT TYPE AND DATES COVERED December 2010 Master’s Thesis 4. TITLE AND SUBTITLE The Internet and Homegrown Jihadist Terrorism: 5. FUNDING NUMBERS Assessing U.S. Detection Techniques 6. AUTHOR(S) Justin D. Bañez 7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES) 8. PERFORMING ORGANIZATION Naval Postgraduate School REPORT NUMBER Monterey, CA 93943-5000 9. -

USA National

USA National Hartselle Enquirer Alabama Independent, The Newspapers Alexander Islander, The City Outlook Andalusia Star Jacksonville News News Anniston Star Lamar Leader Birmingham News Latino News Birmingham Post-Herald Ledger, The Cullman Times, The Daily Marion Times-Standard Home, The Midsouth Newspapers Daily Mountain Eagle Millbrook News Monroe Decatur Daily Dothan Journal, The Montgomery Eagle Enterprise Ledger, Independent Moundville The Florence Times Daily Times Gadsden Times National Inner City, The Huntsville Times North Jefferson News One Mobile Register Voice Montgomery Advertiser Onlooker, The News Courier, The Opelika- Opp News, The Auburn News Scottsboro Over the Mountain Journal Daily Sentinel Selma Times- Pelican, The Journal Times Daily, The Pickens County Herald Troy Messenger Q S T Publications Tuscaloosa News Red Bay News Valley Times-News, The Samson Ledger Weeklies Abbeville Sand Mountain Reporter, The Herald Advertiser Gleam, South Alabamian, The Southern The Atmore Advance Star, The Auburn Plainsman Speakin' Out News St. Baldwin Times, The Clair News-Aegis St. Clair BirminghamWeekly Times Tallassee Tribune, Blount Countian, The The Boone Newspapers Inc. The Bulletin Centreville Press Cherokee The Randolph Leader County Herald Choctaw Thomasville Times Tri Advocate, The City Ledger Tuskegee Clanton Advertiser News, The Union Clarke County Democrat Springs Herald Cleburne News Vernon Lamar Democrat Conecuh Countian, The Washington County News Corner News Weekly Post, The County Reaper West Alabama Gazette Courier -

7021699273.Pdf

Attachment B FOR PUBLIC INSPECTION News Channels Carried by Top 14 MVPDs1 Carried by Callsign Network Description Programming Genre Resolution Language Comcast AAJTK AAJ TAK News SD Other N ABCNEWS ABC News Now News SD English N ALJZ ALJZ - Al Jazeera (Arabic) News SD Other N ALJZENG Al Jazeera English News SD English N ARABYIA Al Arabiya (International) News SD Other N ARYNEWS ARY News News SD Other N BANDN Band News News SD Other N BAY9 Bay News 9 Local News AN English N BAY9DT Bay News 9 DT Local News SD English N BAY9ES Bay 9 News in Espanol News SD Other N BBCARAB BBC Arabic News SD Other N BBCWLD BBC WORLD News SD English Y BCAT56 BCAT56 News Public Affairs SD English N BHMANGO Brighthouse Manatee Government Access News Public Affairs SD English N BLOOM Bloomberg Business Television News-Business SD English Y BLOOMHD Bloom Business Television HD News HD English Y BONTV Blue Ocean Network News SD English N BRONXGA Bronxville Government Access News-Public Affairs AN English N CABLENO CABLENOTICIAS News SD Spanish Y CALCHAN California Channel News-Public Affairs SD English Y CBB Community Bulletin Board News SD English Y CCTVNWS CCTV News News SD Other Y CFLN Central Florida News 13 News AN/SD English N CFLNHD Central Florida News 13 HD News HD English N CLTV Chicagoland Television News Local News SD English Y CN100 Comcast 100 News-Public Affairs SD English Y CN2 Cable News 2 News AN/SD English N CNBC CNBC News-Business SD English Y CNBCHD CNBC HD News-Business HD English Y CNBCWLD CNBC World News-Business SD English Y CNN Cable News -

TV Reception by Channel Low Power TV Stations and Translators

TV Reception By Channel Low Power TV Stations and Translators California - Colorado - Connecticut - Delaware - District of Columbia HD Channels underlined, with bold faced italic print Highlighted with LIGHT BLUE background. SD 16:9 Widescreen Channels with Regular print LT GRAY Updated February 2015 SPANISH Language channels in RED NOTES: CP = Construction Permit App = Application + = proposed new facility Mileage given from TV transmitter for protected coverage service under average conditions at least 50% of the time. d Notation after "Miles" indicates that the coverage pattern is directional, and overall numbers are approximate. Actual coverage will depend upon terrain between the transmitter and receive location, as well as any local obstructions. Distant reception can be enhanced with elevated antenna locations, as well as specialized antennas and preamplifiers. Compiled by MIKE KOHL at GLOBAL COMMUNICATIONS in Plain, Wisconsin Please E-Mail any corrections to: [email protected] We appreciate any information found by local observation of live signals. CALIFORNIA TV Reception By Channel Full & Low Power Stations & Translators Redding DIG Range CH Call Network Community (Transmitter) Lat-N Long-W Miles Digital Subchannels 5 K05ET-D NBC Alturas-Likely (Likely Mountain) 41 09 19 120 33 45 36 2.1 KOTI-NBC 2.2 This TV 20 K20DE-D PBS Alturas-Likely (Likely Mountain) 41 09 18 120 33 46 21-d 9.1 KIXE-PBS 9.2 Create 9.3 World 7 KASC-CA Azteca Am Atascadero (SW) (Tassajera Peak) 35 26 47 120 42 58 analog 7 KASC-Azteca Am 39 + KASC-CD Azteca -

Michael Donnadio

LISA T. WEGMANN [email protected] Plano, Texas 75093 (214) 679-0406 DIRECTOR OF SALES AND MARKETING Account Retention and Revenue Growth/ Client Relations/ Consultative Selling/ Negotiations / Staff Development – Recruiting, Coaching, Performance Management/ Sales Processes/ Digital Strategy /Digital Marketing – SEM/SEO/Display/Video Consistently meet or exceed revenue goals, frequently achieving top ranking and share Capturing new business and capitalizing on niche new business opportunities Motivating and training teams of diverse sales professionals Taking new products and concepts to market, rapidly securing new business Sales Leadership Training: PSS, PSC, Paradigm, and ES&A Education: BBA in Management from the University of Texas at Austin SELECTED ACCOMPLISHMENTS Doubled sales. Took over underperforming Time Warner Cable Media sales team. Met with each individual to identify his or her core strengths. Selected advertiser verticals that matched each rep’s interests and experience. Applied in- depth research to secure large health care, financial, and QSR contracts. Drove morale up, while doubling billing in just a few years. Established startup station as “The place to work”. Took over as DOS at TXCN. Crafted sales Standard Operating Procedures. Hired traffic, research and sales support staff. Individually trained each of the green AEs into solution- oriented sales consultants. Built market reputation as premier service provider, overcoming the mere 33% market cable penetration to exceed all sales goals. Built independent station into market competitor. WFAA entered into a Joint Sales Agreement (JSA) with an underperforming independent TV station (KFWD). Appointed to establish JSA sales program and team. Recruited and trained five new AEs. Leveraged personal business relationships to secure immediate advertising dollars. -

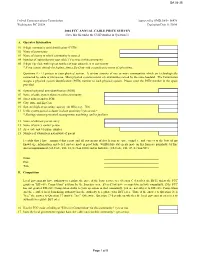

2004 FCC ANNUAL CABLE PRICE SURVEY (Save This File Under the CUID Number in Question 1)

DA 04-35 Federal Communications Commission Approved by OMB 3060 - 06476 Washington, DC 20554 Expiration Date 11/30/06 2004 FCC ANNUAL CABLE PRICE SURVEY (Save this file under the CUID number in Question 1) A. Operator Information 01 6-digit community unit identification (CUID) 02 Name of community 03 Name of county in which community is situated 04 Number of subscribers to your cable TV service in this community 05 5-digit Zip Code with highest number of your subscribers in community * If you cannot identify the highest, then a Zip Code with a significant portion of subscribers. Questions 6 - 11 pertain to your physical system. A system consists of one or more communities which are technologically connected by cable or microwave. Most physical systems consist of communities served by the same headend. The Commission assigns a physical system identification (PSID) number to each physical system. Please enter the PSID number in the space provided. 06 System's physical unit identification (PSID) 07 Name of cable system that serves this community 08 Street address and/or POB 09 City, state, and Zip Code 10 System's highest operating capacity (in MHz, e.g., 750) 11 Is this system part of a cluster in close proximity? (yes or no) * * Sharing common personnel, management, marketing, and/or facilities. 12 Name of ultimate parent entity 13 Name of survey contact person 14 Area code and telephone number 15 Number of subscribers nationwide of parent I certify that I have examined this report and all statements of fact herein are true, complete, and correct to the best of my knowledge, information, and belief, and are made in good faith. -

No. 11-10697 in the UNITED STATES COURT of APPEALS for the FIFTH CIRCUIT LARRY RANDALL POWELL; LAWRENCE WILLIAM DEORE; PAULA F

No. 11-10697 IN THE UNITED STATES COURT OF APPEALS FOR THE FIFTH CIRCUIT LARRY RANDALL POWELL; LAWRENCE WILLIAM DEORE; PAULA F. WATSON; GARY VAN WEST; RAUL PREZAS REYES; TIMOTHY ARTHUR O'LEARY; JAN MICHAEL HUBBARD; MICHAEL S. COONS; JOHN PAUL CHAMLESS; IRA HADNOT ALEXANDER; DEBORAH SUE VOORHEES; LINSTON ROBERT LOFLEY; KAREN PATTERSON; LINDA JONES; GARY STRATTON; EWINA H. SCHUMACHER; PAULETTE LADACH; STEPHEN WAYNE YOUNT, Plaintiffs-Appellants v. THE DALLAS MORNING NEWS, LP; BELO CORPORATION; BELO BENEFITS ADMINISTRATIVE COMMITTEE, as plan administrator for the G.B. Dealey Retirement Pension Plan and the Belo Savings Plan, Defendants-Appellees ________________________ ON APPEAL FROM THE UNITED STATES DISTRICT COURT FOR THE NORTHERN DISTRICT OF TEXAS ________________________ BRIEF AMICUS CURIAE OF AARP IN SUPPORT OF PLAINTIFFS-APPELLANTS, SUPPORTING REVERSAL ________________________ Mary Ellen Signorille Melvin Radowitz AARP Foundation Litigation AARP 601 E Street, NW 601 E Street, NW Washington, DC 20049 Washington, DC 20049 (202) 434-2060 ATTORNEYS FOR AMICUS CURIAE AARP CERTIFICATE OF INTERESTED PERSONS (1) Larry Powell et al v. The Dallas Morning News, LP et al, No. 11-10697 (2) The undersigned counsel of record certify that the following listed persons and entities as described in the fourth sentence of Rule 28.2.1 have an interest on the outcome of this case. These representations are made in order that the judges of this court may evaluate possible disqualifications or recusal. Appellants: Larry Randall Powell Lawrence William DeOre Paula F. Watson Gary Van West Raul Prezas Reyes Timothy Arthur O’Leary Jan Michael Hubbard Michael S. Coons John Paul Chamless Ira Hadnot Alexander Deborah Sue Voorhees Linston Robert Lofley Karen Patterson Linda Jones Gary Stratton Ewina H.