Encoding Arguments

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Randomness and Computation

Randomness and Computation. Rod Downey, School of Mathematics and Statistics, Victoria University, PO Box 600, Wellington, New Zealand. [email protected]

Abstract. This article examines work seeking to understand randomness using computational tools. The focus here will be how these studies interact with classical mathematics, and progress in the recent decade. A few representative and easier proofs are given, but mainly we will refer to the literature. The article could be seen as a companion to, as well as focusing on developments since, the paper “Calibrating Randomness” from 2006, which focused more on how randomness calibrations correlated to computational ones.

1 Introduction. The great Russian mathematician Andrey Kolmogorov appears several times in this paper. First, around 1930, Kolmogorov and others founded the theory of probability, basing it on measure theory. Kolmogorov’s foundation does not seek to give any meaning to the notion of an individual object, such as a single real number or binary string, being random, but rather studies the expected values of random variables. As we learn at school, all strings of length $n$ have the same probability of $2^{-n}$ for a fair coin. A set consisting of a single real has probability zero. Thus there is no meaning we can ascribe to randomness of a single object. Yet we have a persistent intuition that certain strings of coin tosses are less random than others. The goal of the theory of algorithmic randomness is to give meaning to randomness content for individual objects. Quite aside from the intrinsic mathematical interest, the utility of this theory is that using such objects instead of distributions might be significantly simpler and might give alternative insight into what randomness might mean in mathematics, and perhaps in nature.

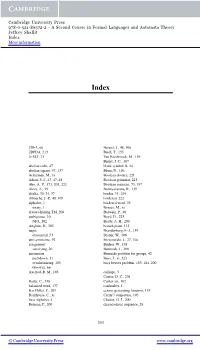

A Second Course in Formal Languages and Automata Theory Jeffrey Shallit Index More Information

Cambridge University Press, 978-0-521-86572-2, A Second Course in Formal Languages and Automata Theory, Jeffrey Shallit. Index. © Cambridge University Press, www.cambridge.org

Index: 2DFA, 66; 2DPDA, 213; 3-SAT, 21; abelian cube, 47; abelian square, 47, 137; Ackerman, M., xi; Adian, S. I., 43, 47, 48; Aho, A. V., 173, 201, 223; Alces, A., 29; alfalfa, 30, 34, 37; Allouche, J.-P., 48, 105; alphabet, 1 (unary, 1); always-halting TM, 209; ambiguous, 10 (NFA, 102); Angluin, D., 105; angst (existential, 54); antisymmetric, 92; assignment (satisfying, 20); automaton (pushdown, 11; synchronizing, 105; two-way, 66); Axelrod, R. M., 105; Bader, C., 138; balanced word, 137; Bar-Hillel, Y., 201; Barkhouse, C., xi; base alphabet, 4; Berman, P., 200; Berstel, J., 48, 106; Biedl, T., 135; Van Biesbrouck, M., 139; Birget, J.-C., 107; blank symbol, B, 14; Blum, N., 106; Boolean closure, 221; Boolean grammar, 223; Boolean matrices, 73, 197; Boonyavatana, R., 139; border, 35, 104; bordered, 222; bordered word, 35; Borges, M., xi; Borwein, P., 48; Boyd, D., 223; Brady, A. H., 200; branch point, 113; Brandenburg, F.-J., 139; Brauer, W., 106; Brzozowski, J., 27, 106; Bucher, W., 138; Buntrock, J., 200; Burnside problem for groups, 42; Buss, J., xi, 223; busy beaver problem, 183, 184, 200; calliope, 3; Cantor, D. C., 201; Cantor set, 102; cardinality, 1; census generating function, 133; Cerny’s conjecture, 105; Chaitin, G. J., 200; characteristic sequence, 28; chess, …

Kolmogorov Complexity of Graphs John Hearn Harvey Mudd College

Claremont Colleges, Scholarship @ Claremont. HMC Senior Theses, HMC Student Scholarship, 2006. Kolmogorov Complexity of Graphs. John Hearn, Harvey Mudd College.

Recommended Citation: Hearn, John, "Kolmogorov Complexity of Graphs" (2006). HMC Senior Theses. 182. https://scholarship.claremont.edu/hmc_theses/182

This Open Access Senior Thesis is brought to you for free and open access by the HMC Student Scholarship at Scholarship @ Claremont. It has been accepted for inclusion in HMC Senior Theses by an authorized administrator of Scholarship @ Claremont. For more information, please contact [email protected].

Applications of Kolmogorov Complexity to Graphs. John Hearn. Ran Libeskind-Hadas, Advisor. Michael Orrison, Reader. May, 2006. Department of Mathematics.

Copyright © 2006 John Hearn. The author grants Harvey Mudd College the nonexclusive right to make this work available for noncommercial, educational purposes, provided that this copyright statement appears on the reproduced materials and notice is given that the copying is by permission of the author. To disseminate otherwise or to republish requires written permission from the author.

Abstract. Kolmogorov complexity is a theory based on the premise that the complexity of a binary string can be measured by its compressibility; that is, a string’s complexity is the length of the shortest program that produces that string. We explore applications of this measure to graph theory.

Contents. Abstract; Acknowledgments; 1 Introductory Material: 1.1 Definitions and Notation, 1.2 The Invariance Theorem, 1.3 The Incompressibility Theorem; 2 Graph Complexity and the Incompressibility Method: 2.1 Complexity of Labeled Graphs, 2.2 The Incompressibility Method

Compressing Information Kolmogorov Complexity Optimal Decompression

Compressing information. Almost everybody now is familiar with compressing/decompressing programs such as zip, gzip, compress, arj, etc. A compressing program can be applied to any file and produces the “compressed version” of that file. If we are lucky, the compressed version is much shorter than the original one. However, no information is lost: the decompression program can be applied to the compressed version to get the original file.

[Question: A software company advertises a compressing program and claims that this program can compress any sufficiently long file to at most 90% of its original size. Would you buy this program?]

How does compression work? A compression program tries to find some regularities in a file which allow it to give a description of the file which is shorter than the file itself; the decompression program reconstructs the file using this description.

Optimal decompression algorithm. The definition of $K_U$ depends on $U$. For the trivial decompression algorithm $U(y) = y$ we have $K_U(x) = |x|$. One can try to find better decompression algorithms, where “better” means “giving smaller complexities”. However, the number of short descriptions is limited: there are fewer than $2^n$ strings of length less than $n$. Therefore, for any fixed decompression algorithm the number of words whose complexity is less than $n$ does not exceed $2^n - 1$. One may conclude that there is no “optimal” decompression algorithm because we can assign short descriptions to some strings only by taking them away from other strings. However, Kolmogorov made a simple but crucial observation: there is an asymptotically optimal decompression algorithm.

Definition 1. An algorithm $U$ is asymptotically not …
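The counting step in this excerpt can be written out explicitly. The following is a sketch, assuming descriptions are binary strings and writing $K_U$ for complexity with respect to a fixed decompression algorithm $U$, as in the excerpt:

```latex
% Number of binary strings (candidate descriptions) of length less than n:
\[
  \#\{\, y \in \{0,1\}^{*} : |y| < n \,\}
  \;=\; \sum_{i=0}^{n-1} 2^{i}
  \;=\; 2^{n} - 1 .
\]
% U maps each description to at most one word, so at most that many
% words can have complexity below n:
\[
  \#\{\, x : K_U(x) < n \,\} \;\le\; 2^{n} - 1 \;<\; 2^{n} .
\]
```

Since there are $2^n$ binary strings of length exactly $n$, at least one of them has $K_U(x) \ge n$, whatever $U$ is. The same count suggests an answer to the bracketed question: no lossless program can shorten every sufficiently long file.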

The Folklore of Sorting Algorithms

IJCSI International Journal of Computer Science Issues, Vol. 4, No. 2, 2009, p. 25. ISSN (Online): 1694-0784, ISSN (Print): 1694-0814. The Folklore of Sorting Algorithms. Dr. Santosh Khamitkar (1), Parag Bhalchandra (2), Sakharam Lokhande (2), Nilesh Deshmukh (2). School of Computational Sciences, Swami Ramanand Teerth Marathwada University, Nanded (MS) 431605, India. Email: (1) [email protected], (2) [email protected]

Abstract. The objective of this paper is to review the folklore knowledge seen in research work devoted to the synthesis, optimization, and effectiveness of various sorting algorithms. We will examine sorting algorithms along folklore lines and try to discover the tradeoffs between folklore and theorems. Finally, the folklore knowledge on complexity values of the sorting algorithms will be considered, verified, and subsequently converged into theorems.

Key words: Folklore, Algorithm analysis, Sorting algorithm, Computational Complexity notations.

1. Introduction. Folklore is the traditional beliefs, legends, and customs current among people. [...] progress. Every researcher who attempted optimization in the past stuck to his or her own observations regarding sorting experiments and produced his or her own results. Since these results were specific to the software and hardware environment therein, we found an abundance of complexity analysis which could possibly be thought of as folklore knowledge. We tried to review this folklore knowledge in past research work and came to the conclusion that it was mainly devoted to the synthesis, optimization, and effectiveness in working styles of various sorting algorithms. Our work does not merely comment on earlier work; rather, we present the knowledge embedded in sorting folklore from a different and interesting view so that students, teachers, and researchers can easily understand it. Synthesis, in terms of folklore and theorems, hereupon […]

Analysis of Sorting Algorithms by Kolmogorov Complexity (A Survey)

Analysis of Sorting Algorithms by Kolmogorov Complexity (A Survey). Paul Vitányi. December 18, 2003.

Abstract. Recently, many results on the computational complexity of sorting algorithms were obtained using Kolmogorov complexity (the incompressibility method). Especially, the usually hard average-case analysis is amenable to this method. Here we survey such results about Bubblesort, Heapsort, Shellsort, Dobosiewicz-sort, Shakersort, and sorting with stacks and queues in sequential or parallel mode. Especially in the case of Shellsort the uses of Kolmogorov complexity surprisingly easily resolved problems that had stayed open for a long time despite strenuous attacks.

1 Introduction. We survey recent results in the analysis of sorting algorithms using a new technical tool: the incompressibility method based on Kolmogorov complexity. Complementing approaches such as the counting method and the probabilistic method, the new method is especially suited for the average-case analysis of algorithms and machine models, whereas average-case analysis is usually more difficult than worst-case analysis using the traditional methods. Obviously, the results described can be obtained using other proof methods: all true provable statements must be provable from the axioms of mathematics by the inference methods of mathematics. The question is whether a particular proof method facilitates and guides the proving effort. The following examples make clear that thinking in terms of coding and the incompressibility method suggests simple proofs that resolve long-standing open problems. A survey of the use of the incompressibility method in combinatorics, computational complexity, and the analysis of algorithms is [16] Chapter 6, and other recent work is [2, 15].

New Applications of the Incompressibility Method (Extended Abstract)

New Applications of the Incompressibility Method (Extended Abstract). Harry Buhrman¹, Tao Jiang², Ming Li³, and Paul Vitányi¹.

¹ CWI, Kruislaan 413, 1098 SJ Amsterdam, The Netherlands, {buhrman, paulv}@cwi.nl. Supported in part via NeuroCOLT II ESPRIT Working Group.
² Dept of Computing and Software, McMaster University, Hamilton, Ontario L8S 4K1, Canada, jiang@cas.mcmaster.ca. Supported in part by NSERC and CITO grants.
³ Dept of Computer Science, University of Waterloo, Waterloo, Ontario N2L 3G1, Canada, mli@math.uwaterloo.ca. Supported in part by NSERC and CITO grants and Steacie Fellowship.

Abstract. The incompressibility method is an elementary yet powerful proof technique based on Kolmogorov complexity [13]. We show that it is particularly suited to obtain average-case computational complexity lower bounds. Such lower bounds have been difficult to obtain in the past by other methods. In this paper we present four new results and also give four new proofs of known results to demonstrate the power and elegance of the new method.

1 Introduction. The incompressibility of individual random objects yields a simple but powerful proof technique: the incompressibility method. This method is a general purpose tool that can be used to prove lower bounds on computational problems, to obtain combinatorial properties of concrete objects, and to analyze the average complexity of an algorithm. Since the early 1980s, the incompressibility method has been successfully used to solve many well-known questions that had been open for a long time and to supply new simplified proofs for known results. Here we demonstrate how easily the incompressibility method can be used in the particular case of obtaining average-case computational complexity lower bounds.

COMPUTABILITY and RANDOMNESS 1. Historical Roots

COMPUTABILITY AND RANDOMNESS. ROD DOWNEY AND DENIS R. HIRSCHFELDT.

1. Historical roots. 1.1. Von Mises. Around 1930, Kolmogorov and others founded the theory of probability, basing it on measure theory. Probability theory is concerned with the distribution of outcomes in sample spaces. It does not seek to give any meaning to the notion of an individual object, such as a single real number or binary string, being random, but rather studies the expected values of random variables. How could a binary string representing a sequence of $n$ coin tosses be random, when all strings of length $n$ have the same probability of $2^{-n}$ for a fair coin? Less well known than the work of Kolmogorov are early attempts to answer this kind of question by providing notions of randomness for individual objects. The modern theory of algorithmic randomness realizes this goal. One way to develop this theory is based on the idea that an object is random if it passes all relevant “randomness tests”. For example, by the law of large numbers, for a random real $X$, we would expect the number of 1's in the binary expansion of $X$ to have limiting frequency $\frac{1}{2}$. (That is, writing $X(j)$ for the $j$th bit of this expansion, we would expect to have $\lim_{n \to \infty} \frac{|\{j < n : X(j) = 1\}|}{n} = \frac{1}{2}$.) Indeed, we would expect $X$ to be normal to base 2, meaning that for any binary string $\sigma$ of length $k$, the occurrences of $\sigma$ in the binary expansion of $X$ should have limiting frequency $2^{-k}$.
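The frequency condition in this excerpt can be illustrated on a finite prefix. The sketch below is only an illustration: the function name `block_frequency` and the sample string are assumptions, not taken from the excerpt. It also counts overlapping occurrences, which is one common convention. A finite prefix can never establish a limiting frequency, so this shows what is being measured rather than testing randomness.

```python
def block_frequency(bits: str, sigma: str) -> float:
    """Fraction of start positions in `bits` at which the block `sigma` occurs.

    Overlapping occurrences are counted. For a sequence that is normal to
    base 2, this fraction tends to 2 ** -len(sigma) as the prefix grows.
    """
    k = len(sigma)
    positions = len(bits) - k + 1  # number of places a block of length k can start
    if k == 0 or positions <= 0:
        raise ValueError("sigma must be non-empty and no longer than bits")
    hits = sum(1 for i in range(positions) if bits.startswith(sigma, i))
    return hits / positions


# Hypothetical 16-bit prefix, chosen only for illustration.
sample = "0110100110010110"
ones = block_frequency(sample, "1")        # 8 ones out of 16 positions
double_ones = block_frequency(sample, "11")  # 3 hits out of 15 positions
```

Here the single-bit frequency is exactly $\frac{1}{2}$, while the block `11` occurs with frequency $\frac{3}{15}$ rather than $2^{-2}$. Balanced bit counts alone therefore do not imply the stronger block condition.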

Around Kolmogorov Complexity: Basic Notions and Results

Around Kolmogorov complexity: basic notions and results. Alexander Shen (LIRMM (Montpellier), on leave from IITP RAS (Moscow), [email protected]). arXiv:1504.04955v1 [cs.IT] 20 Apr 2015.

Abstract. Algorithmic information theory studies description complexity and randomness and is now a well known field of theoretical computer science and mathematical logic. There are several textbooks and monographs devoted to this theory [4, 1, 5, 2, 7] where one can find the detailed exposition of many difficult results as well as historical references. However, it seems that a short survey of its basic notions and main results relating these notions to each other is missing. This report attempts to fill this gap and covers the basic notions of algorithmic information theory: Kolmogorov complexity (plain, conditional, prefix), Solomonoff universal a priori probability, notions of randomness (Martin-Löf randomness, Mises–Church randomness), effective Hausdorff dimension. We prove their basic properties (symmetry of information, connection between a priori probability and prefix complexity, criterion of randomness in terms of complexity, complexity characterization for effective dimension) and show some applications (incompressibility method in computational complexity theory, incompleteness theorems). It is based on the lecture notes of a course at Uppsala University given by the author [6].

1 Compressing information. Everybody is familiar with compressing/decompressing programs such as zip, gzip, compress, arj, etc. A compressing program can be applied to an arbitrary file and produces a “compressed version” of that file. If we are lucky, the compressed version is much shorter than the original one. However, no information is lost: the decompression program can be applied to the compressed version to get the original file. How is it possible? A compression program tries to find some regularities in a file which allow it to give a description of the file that is shorter than the file itself; the decompression program then reconstructs the file using this description.
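A small experiment shows the "regularities" this excerpt mentions. The sketch below uses Python's standard `zlib` module as a stand-in for the compressors named above. The helper names and the two sample inputs are assumptions chosen for illustration. The compressed size is only a computable upper-bound proxy for description length, not Kolmogorov complexity itself, which is uncomputable.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed form of `data` (level 9)."""
    return len(zlib.compress(data, 9))


def round_trips(data: bytes) -> bool:
    """Check that no information is lost: decompression restores the input."""
    return zlib.decompress(zlib.compress(data, 9)) == data


# A highly regular input: the same byte repeated 4096 times.
regular = b"1" * 4096

# A pseudo-random input of the same length (fixed seed, so runs are repeatable).
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(4096))

regular_size = compressed_size(regular)  # far smaller than 4096
noise_size = compressed_size(noise)      # about as large as the input itself
```

The regular input shrinks to a few dozen bytes because "repeat this byte" is a short description. The pseudo-random input has no regularity that zlib can exploit, so its compressed form is about as long as the original.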

Kolmogorov Complexity

CISC 876: Kolmogorov Complexity. Neil Conway. March 27, 2007.

Outline. 1 Introduction; 2 Basic Properties: Definition, Incompressibility and Randomness; 3 Variants of K-Complexity: Prefix Complexity, Resource-Bounded K-Complexity; 4 Applications: Incompressibility Method, Gödel’s Incompleteness Theorem; 5 Summary.

Complexity of Objects. Example: Which of these is more complex? (1) 1111111111111111 (2) 1101010100011101. Intuition: The first has a simple description: “print 1 16 times”. There is no (obvious) description for the second string that is essentially shorter than listing its digits. Kolmogorov complexity formalizes this intuitive notion of complexity.

[Math.PR] 10 Oct 2001

Randomness. Paul Vitányi (1944– ), CWI and Universiteit van Amsterdam. arXiv:math/0110086v2 [math.PR] 10 Oct 2001. Partially supported by the European Union through NeuroCOLT ESPRIT Working Group Nr. 8556, and by NWO through NFI Project ALADDIN under Contract number NF 62-376. Address: CWI, Kruislaan 413, 1098 SJ Amsterdam, The Netherlands. Email: [email protected]

Abstract. Here we present in a single essay a combination and completion of the several aspects of the problem of randomness of individual objects which of necessity occur scattered in our text [10]. The reader can consult different arrangements of parts of the material in [7, 20].

Contents. 1 Introduction: 1.1 Occam’s Razor Revisited, 1.2 Lacuna of Classical Probability Theory, 1.3 Lacuna of Information Theory; 2 Randomness as Unpredictability: 2.1 Von Mises’ Collectives, 2.2 Wald-Church Place Selection; 3 Randomness as Incompressibility: 3.1 Kolmogorov Complexity, 3.2 Complexity Oscillations, 3.3 Relation with Unpredictability, 3.4 Kolmogorov-Loveland Place Selection; 4 Randomness as Membership of All Large Majorities: 4.1 Typicality, 4.2 Randomness in Martin-Löf’s Sense, 4.3 Random Finite Sequences, 4.4 Random Infinite Sequences, 4.5 Randomness of Individual Sequences Resolved; 5 Applications: 5.1 Prediction, 5.2 Gödel’s incompleteness result, 5.3 Lower bounds, 5.4 Statistical Properties of Finite Sequences, 5.5 Chaos and Predictability.

1 Introduction. Pierre-Simon Laplace (1749–1827) has pointed out the following reason why intuitively a regular outcome of a random event is unlikely. “We arrange in our thought all possible events in various classes; and we regard as extraordinary those classes which include a very small number.

Analysis of Sorting Algorithms by Kolmogorov Complexity (A Survey)

BOLYAI SOCIETY MATHEMATICAL STUDIES, 16. Entropy, Search, Complexity, pp. 209–232. Analysis of Sorting Algorithms by Kolmogorov Complexity (A Survey). PAUL VITÁNYI. Supported in part via NeuroCOLT II ESPRIT Working Group.

Recently, many results on the computational complexity of sorting algorithms were obtained using Kolmogorov complexity (the incompressibility method). Especially, the usually hard average-case analysis is amenable to this method. Here we survey such results about Bubblesort, Heapsort, Shellsort, Dobosiewicz-sort, Shakersort, and sorting with stacks and queues in sequential or parallel mode. Especially in the case of Shellsort the uses of Kolmogorov complexity surprisingly easily resolved problems that had stayed open for a long time despite strenuous attacks.

1. Introduction. We survey recent results in the analysis of sorting algorithms using a new technical tool: the incompressibility method based on Kolmogorov complexity. Complementing approaches such as the counting method and the probabilistic method, the new method is especially suited for the average-case analysis of algorithms and machine models, whereas average-case analysis is usually more difficult than worst-case analysis using the traditional methods. Obviously, the results described can be obtained using other proof methods: all true provable statements must be provable from the axioms of mathematics by the inference methods of mathematics. The question is whether a particular proof method facilitates and guides the proving effort. The following examples make clear that thinking in terms of coding and the incompressibility method suggests simple proofs that resolve long-standing open problems. A survey of the use of the incompressibility method in combinatorics, computational complexity, and the analysis of algorithms is [16] Chapter 6, and other recent work is [2, 15].