Healing the Fragmentation to Realise the Full Potential of The

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Validating RDF Data

Series ISSN: 2160-4711. Series Editors: Ying Ding, Indiana University; Paul Groth, Elsevier Labs. Validating RDF Data. Jose Emilio Labra Gayo, University of Oviedo; Eric Prud’hommeaux, W3C/MIT and Micelio; Iovka Boneva, University of Lille; Dimitris Kontokostas, University of Leipzig. This book describes two technologies for RDF validation: Shape Expressions (ShEx) and Shapes Constraint Language (SHACL), the rationales for their designs, a comparison of the two, and some example applications. RDF and Linked Data have broad applicability across many fields, from aircraft manufacturing to zoology. Requirements for detecting bad data differ across communities, fields, and tasks, but nearly all involve some form of data validation. This book introduces data validation and describes its practical use in day-to-day data exchange. The Semantic Web offers a bold, new take on how to organize, distribute, index, and share data. Using Web addresses (URIs) as identifiers for data elements enables the construction of distributed databases on a global scale. Like the Web, the Semantic Web is heralded as an information revolution, and also like the Web, it is encumbered by data quality issues. The quality of Semantic Web data is compromised by the lack of resources for data curation, for maintenance, and for developing globally applicable data models. At the enterprise scale, these problems have conventional solutions. Master data management provides an enterprise-wide vocabulary, while constraint languages capture and enforce data structures. Filling a need long recognized by Semantic Web users, shapes languages provide models and vocabularies for expressing such structural constraints.

Deciding SHACL Shape Containment Through Description Logics Reasoning

Deciding SHACL Shape Containment through Description Logics Reasoning. Martin Leinberger (1), Philipp Seifer (2), Tjitze Rienstra (1), Ralf Lämmel (2), and Steffen Staab (3, 4). (1) Inst. for Web Science and Technologies, University of Koblenz-Landau, Germany; (2) The Software Languages Team, University of Koblenz-Landau, Germany; (3) Institute for Parallel and Distributed Systems, University of Stuttgart, Germany; (4) Web and Internet Science Research Group, University of Southampton, England. Abstract. The Shapes Constraint Language (SHACL) allows for formalizing constraints over RDF data graphs. A shape groups a set of constraints that may be fulfilled by nodes in the RDF graph. We investigate the problem of containment between SHACL shapes. One shape is contained in a second shape if every graph node meeting the constraints of the first shape also meets the constraints of the second. To decide shape containment, we map SHACL shape graphs into description logic axioms such that shape containment can be answered by description logic reasoning. We identify several, increasingly tight syntactic restrictions of SHACL for which this approach becomes sound and complete. 1 Introduction. RDF has been designed as a flexible, semi-structured data format. To ensure data quality and to allow for restricting its large flexibility in specific domains, the W3C has standardized the Shapes Constraint Language (SHACL). A set of SHACL shapes are represented in a shape graph. A shape graph represents constraints that only a subset of all possible RDF data graphs conform to. A SHACL processor may validate whether a given RDF data graph conforms to a given SHACL shape graph. A shape graph and a data graph that act as a running example are presented in Fig.
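The containment definition in the abstract above (every node meeting the first shape also meets the second) can be illustrated with a toy sketch. This is not the paper's description logic translation and does not use any SHACL library: the triple encoding, the `min_count` shape (loosely analogous to a minimum-cardinality constraint), and all function names are assumptions made for illustration. Because containment quantifies over all graphs, a check on one graph can only refute containment, never prove it.

```python
from typing import Callable, Set, Tuple

Triple = Tuple[str, str, str]          # (subject, predicate, object), hypothetical encoding
Graph = Set[Triple]
Shape = Callable[[Graph, str], bool]   # a shape is modelled as a predicate on (graph, node)


def nodes(graph: Graph) -> Set[str]:
    """All subjects and objects that occur in the graph."""
    return {s for s, _, _ in graph} | {o for _, _, o in graph}


def min_count(prop: str, n: int) -> Shape:
    """Toy shape: the node has at least n outgoing edges labelled prop."""
    def check(graph: Graph, node: str) -> bool:
        return sum(1 for s, p, _ in graph if s == node and p == prop) >= n
    return check


def conforming(graph: Graph, shape: Shape) -> Set[str]:
    """Nodes of this graph that meet the shape."""
    return {n for n in nodes(graph) if shape(graph, n)}


def refutes_containment(graph: Graph, first: Shape, second: Shape) -> bool:
    """True if this graph holds a node meeting `first` but not `second`.

    A True result is a counterexample to "first is contained in second".
    A False result proves nothing, since other graphs may still contain one.
    """
    return not conforming(graph, first) <= conforming(graph, second)


graph: Graph = {
    ("alice", "knows", "bob"),
    ("alice", "knows", "carol"),
    ("bob", "knows", "alice"),
}
at_least_two = min_count("knows", 2)
at_least_one = min_count("knows", 1)

print(refutes_containment(graph, at_least_two, at_least_one))
print(refutes_containment(graph, at_least_one, at_least_two))
```

In this sketch the second call finds a counterexample (a node with exactly one `knows` edge), while the first finds none on this particular graph. Deciding containment in general requires reasoning over all possible graphs, which is the problem the paper addresses.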

RDFa in XHTML: Syntax and Processing

RDFa in XHTML: Syntax and Processing. A collection of attributes and processing rules for extending XHTML to support RDF. W3C Recommendation 14 October 2008. This version: http://www.w3.org/TR/2008/REC-rdfa-syntax-20081014 Latest version: http://www.w3.org/TR/rdfa-syntax Previous version: http://www.w3.org/TR/2008/PR-rdfa-syntax-20080904 Diff from previous version: rdfa-syntax-diff.html Editors: Ben Adida, Creative Commons [email protected]; Mark Birbeck, webBackplane [email protected]; Shane McCarron, Applied Testing and Technology, Inc. [email protected]; Steven Pemberton, CWI. Please refer to the errata for this document, which may include some normative corrections. This document is also available in these non-normative formats: PostScript version, PDF version, ZIP archive, and Gzip’d TAR archive. The English version of this specification is the only normative version. Non-normative translations may also be available. Copyright © 2007-2008 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply. Abstract. The current Web is primarily made up of an enormous number of documents that have been created using HTML. These documents contain significant amounts of structured data, which is largely unavailable to tools and applications.
When publishers can express this data more completely, and when tools can read it, a new world of user functionality becomes available, letting users transfer structured data between applications and web sites, and allowing browsing applications to improve the user experience: an event on a web page can be directly imported into a user’s desktop calendar; a license on a document can be detected so that users can be informed of their rights automatically; a photo’s creator, camera setting information, resolution, location and topic can be published as easily as the original photo itself, enabling structured search and sharing.

Using Rule-Based Reasoning for RDF Validation

Using Rule-Based Reasoning for RDF Validation. Dörthe Arndt, Ben De Meester, Anastasia Dimou, Ruben Verborgh, and Erik Mannens. Ghent University - imec - IDLab, Sint-Pietersnieuwstraat 41, B-9000 Ghent, Belgium [email protected] Abstract. The success of the Semantic Web highly depends on its ingredients. If we want to fully realize the vision of a machine-readable Web, it is crucial that Linked Data are actually useful for machines consuming them. On this background it is not surprising that (Linked) Data validation is an ongoing research topic in the community. However, most approaches so far either do not consider reasoning, and thereby miss the chance of detecting implicit constraint violations, or they base themselves on a combination of different formalisms, e.g., Description Logics combined with SPARQL. In this paper, we propose using Rule-Based Web Logics for RDF validation focusing on the concepts needed to support the most common validation constraints, such as Scoped Negation As Failure (SNAF), and the predicates defined in the Rule Interchange Format (RIF). We prove the feasibility of the approach by providing an implementation in Notation3 Logic. As such, we show that rule logic can cover both validation and reasoning if it is expressive enough. Keywords: N3, RDF Validation, Rule-Based Reasoning. 1 Introduction. The amount of publicly available Linked Open Data (LOD) sets is constantly growing, however, the diversity of the data employed in applications is mostly very limited: only a handful of RDF data is used frequently [27]. One of the reasons for this is that the datasets' quality and consistency varies significantly, ranging from expensively curated to relatively low quality data [33], and thus need to be validated carefully before use.

Bibliography of Erik Wilde

Erik Wilde's Bibliography (dretbiblio). http://github.com/dret/biblio (August 29, 2018)
References
[1] AFIPS Fall Joint Computer Conference, San Francisco, California, December 1968.
[2] Seventeenth IEEE Conference on Computer Communication Networks, Washington, D.C., 1978.
[3] ACM SIGACT-SIGMOD Symposium on Principles of Database Systems, Los Angeles, California, March 1982. ACM Press.
[4] First Conference on Computer-Supported Cooperative Work, 1986.
[5] 1987 ACM Conference on Hypertext, Chapel Hill, North Carolina, November 1987. ACM Press.
[6] 18th IEEE International Symposium on Fault-Tolerant Computing, Tokyo, Japan, 1988. IEEE Computer Society Press.
[7] Conference on Computer-Supported Cooperative Work, Portland, Oregon, 1988. ACM Press.
[8] Conference on Office Information Systems, Palo Alto, California, March 1988.
[9] 1989 ACM Conference on Hypertext, Pittsburgh, Pennsylvania, November 1989. ACM Press.
[10] UNIX - The Legend Evolves. Summer 1990 UKUUG Conference, Buntingford, UK, 1990. UKUUG.
[11] Fourth ACM Symposium on User Interface Software and Technology, Hilton Head, South Carolina, November 1991.
[12] GLOBECOM'91 Conference, Phoenix, Arizona, 1991. IEEE Computer Society Press.
[13] IEEE INFOCOM '91 Conference on Computer Communications, Bal Harbour, Florida, 1991. IEEE Computer Society Press.
[14] IEEE International Conference on Communications, Denver, Colorado, June 1991.
[15] International Workshop on CSCW, Berlin, Germany, April 1991.
[16] Third ACM Conference on Hypertext, San Antonio, Texas, December 1991. ACM Press.
[17] 11th Symposium on Reliable Distributed Systems, Houston, Texas, 1992. IEEE Computer Society Press.
[18] 3rd Joint European Networking Conference, Innsbruck, Austria, May 1992.
[19] Fourth ACM Conference on Hypertext, Milano, Italy, November 1992. ACM Press.
[20] GLOBECOM'92 Conference, Orlando, Florida, December 1992. IEEE Computer Society Press.
[21] IEEE INFOCOM '92 Conference on Computer Communications, Florence, Italy, 1992.

rdfs:frbr – Towards an Implementation Model for Library Catalogs Using Semantic Web Technology

rdfs:frbr – Towards an Implementation Model for Library Catalogs Using Semantic Web Technology. Stefan Gradmann. SUMMARY. The paper sets out from a few basic observations (bibliographic information is still mostly part of the ‘hidden Web,’ library automation methods still have a low WWW-transparency, and take-up of FRBR has been rather slow) and continues taking a closer look at Semantic Web technology components. This results in a proposal for implementing FRBR as RDF-Schema and of RDF-based library catalogues built on such an approach. The contribution concludes with a discussion of selected strategic benefits resulting from such an approach. [Article copies available for a fee from The Haworth Document Delivery Service: 1-800-HAWORTH. E-mail address: <[email protected]> Website: <http://www.HaworthPress.com> © 2005 by The Haworth Press, Inc. All rights reserved.] Stefan Gradmann, PhD, is Head, Hamburg University “Virtual Campus Library” Unit, which is part of the computing center and has a mission of providing information management services to the university as a whole, including e-publication services and open access to electronic scientific information resources. Address correspondence to: Stefan Gradmann, Virtuelle Campusbibliothek Regionales Rechenzentrum der Universität Hamburg, Schlüterstrasse 70, D-20146 Hamburg, Germany (E-mail: [email protected]). [Haworth co-indexing entry note]: “rdfs:frbr–Towards an Implementation Model for Library Catalogs Using Semantic Web Technology.” Gradmann, Stefan. Co-published simultaneously in Cataloging & Classification Quarterly (The Haworth Information Press, an imprint of The Haworth Press, Inc.) Vol. 39, No. 3/4, 2005, pp. 63-75; and: Functional Requirements for Bibliographic Records (FRBR): Hype or Cure-All? (ed: Patrick Le Boeuf) The Haworth Information Press, an imprint of The Haworth Press, Inc., 2005, pp.

SHACL Satisfiability and Containment

SHACL Satisfiability and Containment. Paolo Pareti (1), George Konstantinidis (1), Fabio Mogavero (2), and Timothy J. Norman (1). (1) University of Southampton, Southampton, United Kingdom {pp1v17,g.konstantinidis,t.j.norman}@soton.ac.uk (2) Università degli Studi di Napoli Federico II, Napoli, Italy [email protected] Abstract. The Shapes Constraint Language (SHACL) is a recent W3C recommendation language for validating RDF data. Specifically, SHACL documents are collections of constraints that enforce particular shapes on an RDF graph. Previous work on the topic has provided theoretical and practical results for the validation problem, but did not consider the standard decision problems of satisfiability and containment, which are crucial for verifying the feasibility of the constraints and important for design and optimization purposes. In this paper, we undertake a thorough study of different features of non-recursive SHACL by providing a translation to a new first-order language, called SCL, that precisely captures the semantics of SHACL w.r.t. satisfiability and containment. We study the interaction of SHACL features in this logic and provide the detailed map of decidability and complexity results of the aforementioned decision problems for different SHACL sublanguages. Notably, we prove that both problems are undecidable for the full language, but we present decidable combinations of interesting features. 1 Introduction. The Shapes Constraint Language (SHACL) has been recently introduced as a W3C recommendation language for the validation of RDF graphs, and it has already been adopted by mainstream tools and triplestores. A SHACL document is a collection of shapes which define particular constraints and specify which nodes in a graph should be validated against these constraints.


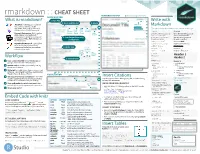

R Markdown Cheat Sheet I

R Markdown Cheat Sheet (rmarkdown 0.2.50, updated 8/14; learn more at rmarkdown.rstudio.com)

1. Workflow. R Markdown is a format for writing reproducible, dynamic reports with R. Use it to embed R code and results into slideshows, pdfs, html documents, Word files and more. To make a report:
i. Open - Open a file that uses the .Rmd extension.
ii. Write - Write content with the easy to use R Markdown syntax.
iii. Embed - Embed R code that creates output to include in the report.
iv. Render - Replace R code with its output and transform the report into a slideshow, pdf, html or ms Word file.

[Figure: an .Rmd file containing the text "A report. A plot:" and an R code chunk that calls hist(co2) is rendered to Microsoft Word, Reveal.js, ioslides, and Beamer output.]

2. Open File. Start by saving a text file with the extension .Rmd, or open an RStudio Rmd template:
• In the menu bar, click File ▶ New File ▶ R Markdown…
• A window will open. Select the class of output you would like to make with your .Rmd file
• Select the specific type of output to make with the radio buttons (you can change this later)
• Click OK

3. Markdown. Next, write your report in plain text. Use markdown syntax to describe how to format text in the final report. Syntax shown on the sheet:
• Plain text
• End a line with two spaces to start a new paragraph.
• *italics* and _italics_
• **bold** and __bold__
• superscript^2^
• ~~strikethrough~~
• [link](www.rstudio.com)
• Headers, from # Header 1 through ###### Header 6

4.

CBOR (RFC 7049) Concise Binary Object Representation

CBOR (RFC 7049): Concise Binary Object Representation. Carsten Bormann, 2015-11-01

CBOR: Agenda
• What is it, and when might I want it?
• How does it work?
• How do I work with it?

History of Data Formats (slide stolen from Douglas Crockford)
• Ad Hoc
• Database Model
• Document Model
• Programming Language Model

Further slide titles: Box notation, TLV; XML, XSD

JSON (slide stolen from Douglas Crockford)
• JavaScript Object Notation
• Minimal
• Textual
• Subset of JavaScript

Values
• Strings
• Numbers
• Booleans
• Objects
• Arrays
• null

Array
["Sunday", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday"]
[ [0, -1, 0], [1, 0, 0], [0, 0, 1] ]

Object, Object Map
{ "name": "Jack B. Nimble", "at large": true, "grade": "A", "format": { "type": "rect", "width": 1920, "height": 1080, "interlace": false, "framerate": 24 } }

JSON limitations
• No binary data (byte strings)
• Numbers are in decimal, some parsing required
• Format requires copying:
  • Escaping for strings
  • Base64 for binary
• No extensibility (e.g., date format?)
• Interoperability issues
  • I-JSON further reduces functionality (RFC 7493)

BSON and friends
• Lots of “binary JSON” proposals
• Often optimized for data at rest, not protocol use (BSON ➔ MongoDB)
• Most are more complex than JSON

Why a new binary object format?
• Different design goals from current formats – stated up front in the document
• Extremely small code size – for work on constrained node networks
• Reasonably compact data size – but no compression or even bit-fiddling
• Useful to any protocol or application that likes the design goals

Concise Binary Object Representation (CBOR): “Sea Boar”

Design goals (1 of 2) 1.
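The "JSON limitations" slide above notes that JSON has no byte strings and that binary data must be copied through Base64. A minimal sketch of that limitation, using only the Python standard library, might look like the following; the record layout and the helper names `encode_record` and `decode_record` are hypothetical and chosen only for illustration.

```python
import base64
import json

payload = bytes([0x00, 0xFF, 0x10, 0x80])  # arbitrary binary data


def encode_record(name: str, blob: bytes) -> str:
    """Serialize a record to JSON, carrying the bytes as Base64 text."""
    return json.dumps({"name": name, "blob": base64.b64encode(blob).decode("ascii")})


def decode_record(text: str):
    """Parse the JSON text and turn the Base64 field back into bytes."""
    record = json.loads(text)
    return record["name"], base64.b64decode(record["blob"])


# The standard json module refuses raw bytes, since JSON has no byte-string type.
try:
    json.dumps({"blob": payload})
    raw_bytes_accepted = True
except TypeError:
    raw_bytes_accepted = False

text = encode_record("sample", payload)
name, blob = decode_record(text)

print(raw_bytes_accepted)
print(blob == payload)
```

The Base64 detour costs an extra copy in each direction and inflates every 3 bytes to 4 characters, which is the overhead a format with native byte strings avoids.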

rmarkdown : : CHEAT SHEET

rmarkdown : : CHEAT SHEET

What is rmarkdown?
• .Rmd files · Develop your code and ideas side-by-side in a single document. Run code as individual chunks or as an entire document.
• Dynamic Documents · Knit together plots, tables, and results with narrative text. Render to a variety of formats like HTML, PDF, MS Word, or MS Powerpoint.
• Reproducible Research · Upload, link to, or attach your report to share. Anyone can read or run your code to reproduce your work.

[Figure: annotated RStudio source editor and rendered output. Numbered callouts: 1. New File; 2. Embed Code; 3. Write Text; 4. Set Output Format(s) and Options; 5. Save and Render; 6. Share (publish to rpubs.com, shinyapps.io, RStudio Connect). Other labels: find in document, file path to output document, set preview location, insert code chunk, go to code chunk, run code chunk(s), show outline, reload document, run all previous chunks, modify chunk options, run current chunk.]

Write with Markdown
The syntax on the left renders as the output on the right.
• Plain text. → Plain text.
• End a line with two spaces to start a new paragraph. → End a line with two spaces to start a new paragraph.
• Also end with a backslash\ to make a new line. → Also end with a backslash to make a new line.
• *italics* and **bold** → italics and bold
• superscript^2^/subscript~2~ → superscript2/subscript2
• ~~strikethrough~~ → strikethrough
• escaped: \* \_ \\ → escaped: * _ \
• endash: --, emdash: --- → endash: –, emdash: —
• # Header 1 → Header 1
• ## Header 2 → Header 2
• ...
• ###### Header 6 → Header 6

Workflow
1. Open a new .Rmd file in the RStudio IDE by going to File > New File > R Markdown.

conda-build Documentation, Release 3.21.5+15.g174ed200.dirty

conda-build Documentation, Release 3.21.5+15.g174ed200.dirty. Anaconda, Inc. Sep 27, 2021

CONTENTS
1 Installing and updating conda-build
2 Concepts
3 User guide
4 Resources
5 Release notes
Index

Conda-build contains commands and tools to use conda to build your own packages. It also provides helpful tools to constrain or pin versions in recipes. Building a conda package requires installing conda-build and creating a conda recipe. You then use the conda build command to build the conda package from the conda recipe. You can build conda packages from a variety of source code projects, most notably Python. For help packing a Python project, see the Setuptools documentation. OPTIONAL: If you are planning to upload your packages to Anaconda Cloud, you will need an Anaconda Cloud account and client.

CHAPTER ONE: INSTALLING AND UPDATING CONDA-BUILD

To enable building conda packages:
• install conda
• install conda-build
• update conda and conda-build

1.1 Installing conda-build
To install conda-build, in your terminal window or an Anaconda Prompt, run:
    conda install conda-build

1.2 Updating conda and conda-build
Keep your versions of conda and conda-build up to date to take advantage of bug fixes and new features. To update conda and conda-build, in your terminal window or an Anaconda Prompt, run:
    conda update conda
    conda update conda-build
For release notes, see the conda-build GitHub page.