Iot Malicious Traffic Classification Using Machine Learning

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Internet Infrastructure Review Vol.34

Internet Infrastructure Review Mar.2017 Vol. 34 Infrastructure Security Ursnif (Gozi) Anti-Analysis Techniques and Methods for Bypassing Them Technology Trends The Current State of Library OSes Internet Infrastructure Review March 2017 Vol.34 Executive Summary ............................................................................................................................ 3 1. Infrastructure Security .................................................................................................................. 4 1.1 Introduction ..................................................................................................................................... 4 1.2 Incident Summary ........................................................................................................................... 4 1.3 Incident Survey ...............................................................................................................................11 1.3.1 DDoS Attacks ...................................................................................................................................11 1.3.2 Malware Activities ......................................................................................................................... 13 1.3.3 SQL Injection Attacks ..................................................................................................................... 17 1.3.4 Website Alterations ....................................................................................................................... -

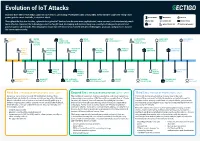

Evolution of Iot Attacks

Evolution of IoT Attacks By 2025, there will be 41.6 billion connected IoT devices, generating 79 zettabytes (ZB) of data (IDC). Every Internet-connected “thing,” from power grids to smart doorbells, is at risk of attack. BLUETOOTH INDUSTRIAL MEDICAL Throughout the last few decades, cyberattacks against IoT devices have become more sophisticated, more common, and unfortunately, much BOTNET GENERIC IOT SMART HOME more effective. However, the technology sector has fought back, developing and implementing new security technologies to prevent and CAR INFRASTRUCTURE WATCH/WEARABLE protect against cyberattacks. This infographic shows how the industry has evolved with new technologies, protocols, and processes to raise the bar on cybersecurity. STUXNET WATER BMW MIRAI FITBIT SAUDI PETROL SILEX LINUX DARK NEXUS VIRUS UTILITY CONNECTED BOTNET VULNERABILITY CHEMICAL MALWARE BOTNET SYSTEM DRIVE SYSTEM PLANT ATTACK (SCADA) PUERTO UNIVERSITY UKRAINIAN HAJIME AMNESIA AMAZON PHILIPS HUE RICO OF MICHIGAN POWER VIGILANTE BOTNET RING HACK LIGHTBULB SMART TRAFFIC GRID BOTNET METERS LIGHTS MEDTRONIC GERMAN TESLA CCTV PERSIRAI FANCY SWEYNTOOTH INSULIN STEEL MODEL S BOTNET BOTNET BEAR VS. FAMILY PUMPS MILL HACK REMOTE SPORTS HACK HACKABLE HEART BASHLITE FIAT CHRYSLER NYADROP SELF- REAPER THINKPHP TWO MILLION KAIJI MONITORS BOTNET REMOTE CONTROL UPDATING MALWARE BOTNET EXPLOITATION TAKEOVER MALWARE First Era | THE AGE OF EXPLORATION | 2005 - 2009 Second Era | THE AGE OF EXPLOITATION | 2011 - 2019 Third Era | THE AGE OF PROTECTION | 2020 Security is not a priority for early IoT/embedded devices. Most The number of connected devices is exploding, and cloud connectivity Connected devices are ubiquitous in every area of life, from cyberattacks are limited to malware and viruses impacting Windows- is becoming commonplace. -

Reviewing Traffic Classification

Reviewing Traffic Classification Silvio Valenti1,4, Dario Rossi1, Alberto Dainotti2, Antonio Pescape`2, Alessandro Finamore3, Marco Mellia3 1 Telecom ParisTech, France – [email protected] 2 Universita` di Napoli Federico II, Italy – [email protected] 3 Politecnico di Torino, Italy – [email protected] 4 Current affiliation: Google, Inc. Abstract. Traffic classification has received increasing attention in the last years. It aims at offering the ability to automatically recognize the application that has generated a given stream of packets from the direct and passive observation of the individual packets, or stream of packets, flowing in the network. This ability is instrumental to a number of activities that are of extreme interest to carriers, Internet service providers and network administrators in general. Indeed, traffic classification is the basic block that is required to enable any traffic management operations, from differentiating traffic pricing and treatment (e.g., policing, shap- ing, etc.), to security operations (e.g., firewalling, filtering, anomaly detection, etc.). Up to few years ago, almost any Internet application was using well-known transport- layer protocol ports that easily allowed its identification. More recently, the num- ber of applications using random or non-standard ports has dramatically increased (e.g. Skype, BitTorrent, VPNs, etc.). Moreover, often network applications are configured to use well-known protocol ports assigned to other applications (e.g. TCP port 80 originally reserved for Web traffic) attempting to disguise their pres- ence. For these reasons, and for the importance of correctly classifying traffic flows, novel approaches based respectively on packet inspection, statistical and machine learning techniques, and behavioral methods have been investigated and are be- coming standard practice. -

An Adaptive Multi-Layer Botnet Detection Technique Using Machine Learning Classifiers

applied sciences Article An Adaptive Multi-Layer Botnet Detection Technique Using Machine Learning Classifiers Riaz Ullah Khan 1,* , Xiaosong Zhang 1, Rajesh Kumar 1 , Abubakar Sharif 1, Noorbakhsh Amiri Golilarz 1 and Mamoun Alazab 2 1 Center of Cyber Security, School of Computer Science & Engineering, University of Electronic Science and Technology of China, Chengdu 611731, China; [email protected] (X.Z.); [email protected] (R.K.); [email protected] (A.S.); [email protected] (N.A.G.) 2 College of Engineering, IT and Environment, Charles Darwin University, Casuarina 0810, Australia; [email protected] * Correspondence: [email protected]; Tel.: +86-155-2076-3595 Received: 19 March 2019; Accepted: 24 April 2019; Published: 11 June 2019 Abstract: In recent years, the botnets have been the most common threats to network security since it exploits multiple malicious codes like a worm, Trojans, Rootkit, etc. The botnets have been used to carry phishing links, to perform attacks and provide malicious services on the internet. It is challenging to identify Peer-to-peer (P2P) botnets as compared to Internet Relay Chat (IRC), Hypertext Transfer Protocol (HTTP) and other types of botnets because P2P traffic has typical features of the centralization and distribution. To resolve the issues of P2P botnet identification, we propose an effective multi-layer traffic classification method by applying machine learning classifiers on features of network traffic. Our work presents a framework based on decision trees which effectively detects P2P botnets. A decision tree algorithm is applied for feature selection to extract the most relevant features and ignore the irrelevant features. -

Identifying and Characterizing Bashlite and Mirai C&C Servers

Identifying and Characterizing Bashlite and Mirai C&C Servers Gabriel Bastos∗, Artur Marzano∗, Osvaldo Fonseca∗, Elverton Fazzion∗y, Cristine Hoepersz, Klaus Steding-Jessenz, Marcelo H. P. C. Chavesz, Italo´ Cunha∗, Dorgival Guedes∗, Wagner Meira Jr.∗ ∗Department of Computer Science – Universidade Federal de Minas Gerais (UFMG) yDepartment of Computing – Universidade Federal de Sao˜ Joao˜ del-Rei (UFSJ) zCERT.br - Brazilian National Computer Emergency Response Team NIC.br - Brazilian Network Information Center Abstract—IoT devices are often a vector for assembling massive which contributes to the rise of a large number of variants. botnets, as a consequence of being broadly available, having Variants exploit other vulnerabilities, include new forms of limited security protections, and significant challenges in deploy- attack, and use different mechanisms to circumvent existing ing software upgrades. Such botnets are usually controlled by centralized Command-and-Control (C&C) servers, which need forms of defense. The increasing number of variants of these to be identified and taken down to mitigate threats. In this paper malwares makes the analysis and reverse engineering process we propose a framework to infer C&C server IP addresses using expensive, hindering the development of countermeasures and four heuristics. Our heuristics employ static and dynamic analysis mitigations. To address the growing number of threats, security to automatically extract information from malware binaries. We analysts and researchers need tools to automate the collection, use active measurements to validate inferences, and demonstrate the efficacy of our framework by identifying and characterizing analysis, and reverse engineering of malware. C&C servers for 62% of 1050 malware binaries collected using The C&C server is a core component of botnets, responsible 47 honeypots. -

Identifying and Measuring Internet Traffic: Techniques and Considerations

Identifying and Measuring Internet Traffic: Techniques and Considerations An Industry Whitepaper Contents Executive Summary Accurate traffic identification and insightful measurements form Executive Summary ................................... 1 the foundation of network business intelligence and network Introduction to Internet Traffic Classification ... 2 policy control. Without identifying and measuring the traffic flowing on their networks, CSPs are unable to craft new Traffic Identification .............................. 2 subscriber services, optimize shared resource utilization, and Traffic Categories ............................... 3 ensure correct billing and charging. Data Extraction and Measurement .............. 4 First and foremost, CSPs must understand their use cases, as Techniques .......................................... 5 these determine tolerance for accuracy. It is likely less of a Considerations for Traffic Classification .......... 7 problem if reports show information that is wrong by a small margin, but it can be catastrophic if subscriber billing/charging Information Requirements ........................ 7 is incorrect or management policies are applied to the wrong Traffic Classification Capabilities ............... 7 traffic. Completeness .................................... 8 Many techniques exist to identify traffic and extract additional False Positives and False Negatives .......... 9 information or measure quantities, ranging from relatively Additional Data and Measurements ......... 10 simple to extremely -

Machine Learning for Identifying Botnet Network Traffic

Aalborg Universitet Machine learning for identifying botnet network traffic Stevanovic, Matija; Pedersen, Jens Myrup Publication date: 2013 Document Version Accepted author manuscript, peer reviewed version Link to publication from Aalborg University Citation for published version (APA): Stevanovic, M., & Pedersen, J. M. (2013). Machine learning for identifying botnet network traffic. General rights Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. ? Users may download and print one copy of any publication from the public portal for the purpose of private study or research. ? You may not further distribute the material or use it for any profit-making activity or commercial gain ? You may freely distribute the URL identifying the publication in the public portal ? Take down policy If you believe that this document breaches copyright please contact us at [email protected] providing details, and we will remove access to the work immediately and investigate your claim. Downloaded from vbn.aau.dk on: September 23, 2021 Machine learning for identifying botnet network traffic (Technical report) Matija Stevanovic and Jens Myrup Pedersen Networking and Security Section, Department of Electronic Systems Aalborg University, DK-9220 Aalborg East, Denmark Email: {mst, jens}@es.aau.dk Abstract—During the last decade, a great scientific effort ment, improving it’s mechanisms of propagation, malicious has been invested in the development of methods that could activity, and resilience to take-down efforts. -

Designing an Effective Network Forensic Framework for The

Designing an effective network forensic framework for the investigation of botnets in the Internet of Things Nickolaos Koroniotis A thesis submitted in fulfilment of the requirements for the degree of Doctor of Philosophy School of Engineering and Information Technology The University of New South Wales Australia March 2020 COPYRIGHT STATEMENT ‘I hereby grant the University of New South Wales or its agents a non-exclusive licence to archive and to make available (including to members of the public) my thesis or dissertation in whole or part in the University libraries in all forms of media, now or here after known. I acknowledge that I retain all intellectual property rights which subsist in my thesis or dissertation, such as copyright and patent rights, subject to applicable law. I also retain the right to use all or part of my thesis or dissertation in future works (such as articles or books).’ ‘For any substantial portions of copyright material used in this thesis, written permission for use has been obtained, or the copyright material is removed from the final public version of the thesis.’ Signed ……………………………………………........................... Date …………………………………………….............................. AUTHENTICITY STATEMENT ‘I certify that the Library deposit digital copy is a direct equivalent of the final officially approved version of my thesis.’ Signed ……………………………………………........................... Date …………………………………………….............................. 1 Thesis Dissertation Sheet Surname/Family Name : Koroniotis Given Name/s : Nickolaos Abbreviation for degree : PhD as give in the University calendar Faculty : UNSW Canberra at ADFA School : UC Engineering & Info Tech Thesis Title : Designing an effective network forensic framework for the investigation of botnets in the Internet of Things 2 Abstract 350 words maximum: The emergence of the Internet of Things (IoT), has heralded a new attack surface, where attackers exploit the security weaknesses inherent in smart things. -

Techniques for Detecting Compromised Iot Devices

MSc System and network Engineering Research Project 1 Techniques for detecting compromised IoT devices Project Report Ivo van der Elzen Jeroen van Heugten February 12, 2017 Abstract The amount of connected Internet of Things (IoT) devices is growing, expected to hit 50 billion devices in 2020. Many of those devices lack proper security. This has led to malware families designed to infect IoT devices and use them in Distributed Denial of Service (DDoS) attacks. In this paper we do an in-depth analysis of two families of IoT malware to determine common properties which can be used for detection. Focusing on the ISP-level, we evaluate commonly available detection techniques and apply the results from our analysis to detect IoT malware activity in an ISP network. Applying our detection method to a real-world data set we find indications for a Mirai malware infection. Using generic honeypots we gain new insight in IoT malware behavior. 1 Introduction ple of days after the attacks, the source code for the malware (dubbed \Mirai") was pub- September 20th 2016: A record setting Dis- lished [9] [10]. tributed Denial of Service (DDoS) attack of over 660 Gbps is launched on the website krebsonse- With the rise of these IoT based DDoS at- curity.com of infosec journalist Brian Krebs [1]. tacks [11] it has become clear that this problem A few days later, web hosting company OVH will only become bigger in the future, unless reports they have been hit with a DDoS of over IoT device vendors accept responsibility and 1 Tbps [2]. -

Securing Your Home Routers: Understanding Attacks and Defense Strategies of the Modem’S Serial Number As a Password

Securing Your Home Routers Understanding Attacks and Defense Strategies Joey Costoya, Ryan Flores, Lion Gu, and Fernando Mercês Trend Micro Forward-Looking Threat Research (FTR) Team A TrendLabsSM Research Paper TREND MICRO LEGAL DISCLAIMER The information provided herein is for general information Contents and educational purposes only. It is not intended and should not be construed to constitute legal advice. The information contained herein may not be applicable to all situations and may not reflect the most current situation. 4 Nothing contained herein should be relied on or acted upon without the benefit of legal advice based on the particular facts and circumstances presented and nothing Entry Points: How Threats herein should be construed otherwise. Trend Micro reserves the right to modify the contents of this document Can Infiltrate Your Home at any time without prior notice. Router Translations of any material into other languages are intended solely as a convenience. Translation accuracy is not guaranteed nor implied. If any questions arise related to the accuracy of a translation, please refer to 10 the original language official version of the document. Any discrepancies or differences created in the translation are not binding and have no legal effect for compliance or Postcompromise: Threats enforcement purposes. to Home Routers Although Trend Micro uses reasonable efforts to include accurate and up-to-date information herein, Trend Micro makes no warranties or representations of any kind as to its accuracy, currency, or completeness. You agree that access to and use of and reliance on this document 17 and the content thereof is at your own risk. -

Early Detection of Mirai-Like Iot Bots in Large-Scale Networks Through Sub-Sampled Packet Traffic Analysis

Early Detection Of Mirai-Like IoT Bots In Large-Scale Networks Through Sub-Sampled Packet Traffic Analysis Ayush Kumar and Teng Joon Lim Department of Electrical and Computer Engineering, National University of Singapore, Singapore 119077 [email protected], [email protected] Abstract. The widespread adoption of Internet of Things has led to many secu- rity issues. Recently, there have been malware attacks on IoT devices, the most prominent one being that of Mirai. IoT devices such as IP cameras, DVRs and routers were compromised by the Mirai malware and later large-scale DDoS at- tacks were propagated using those infected devices (bots) in October 2016. In this research, we develop a network-based algorithm which can be used to detect IoT bots infected by Mirai or similar malware in large-scale networks (e.g. ISP network). The algorithm particularly targets bots scanning the network for vul- nerable devices since the typical scanning phase for botnets lasts for months and the bots can be detected much before they are involved in an actual attack. We analyze the unique signatures of the Mirai malware to identify its presence in an IoT device. The prospective deployment of our bot detection solution is discussed next along with the countermeasures which can be taken post detection. Further, to optimize the usage of computational resources, we use a two-dimensional (2D) packet sampling approach, wherein we sample the packets transmitted by IoT devices both across time and across the devices. Leveraging the Mirai signatures identified and the 2D packet sampling approach, a bot detection algorithm is pro- posed. -

Honor Among Thieves: Towards Understanding the Dynamics and Interdependencies in Iot Botnets

Honor Among Thieves: Towards Understanding the Dynamics and Interdependencies in IoT Botnets Jinchun Choi1;2, Ahmed Abusnaina1, Afsah Anwar1, An Wang3, Songqing Chen4, DaeHun Nyang2, Aziz Mohaisen1 1University of Central Florida 2Inha University 3Case Western Reserve University 4George Mason University Abstract—In this paper, we analyze the Internet of Things (IoT) affected. The same botnet was able to take down the Internet Linux malware binaries to understand the dependencies among of the African nation of Liberia [14]. malware. Towards this end, we use static analysis to extract Competition and coordination among botnets are not well endpoints that malware communicates with, and classify such endpoints into targets and dropzones (equivalent to Command explored, although recent reports have highlighted the potential and Control). In total, we extracted 1,457 unique dropzone of such competition as demonstrated in adversarial behaviors IP addresses that target 294 unique IP addresses and 1,018 of Gafgyt towards competing botnets [9]. Understanding a masked target IP addresses. We highlight various characteristics phenomenon in behavior is important for multiple reasons. of those dropzones and targets, including spatial, network, and First, such an analysis would highlight the competition and organizational affinities. Towards the analysis of dropzones’ interdependencies and dynamics, we identify dropzones chains. alliances among IoT botnets (or malactors; i.e., those who Overall, we identify 56 unique chains, which unveil coordination are behind the botnet), which could shed light on cybercrime (and possible attacks) among different malware families. Further economics. Second, understanding such a competition would analysis of chains with higher node counts reveals centralization. necessarily require appropriate analysis modalities, and such We suggest a centrality-based defense and monitoring mechanism a competition signifies those modalities, even when they are to limit the propagation and impact of malware.