Hyperhypernetworks for the Design of Antenna Arrays

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Low-Profile Wideband Antennas Based on Tightly Coupled Dipole

Low-Profile Wideband Antennas Based on Tightly Coupled Dipole and Patch Elements. Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By Erdinc Irci, B.S., M.S. Graduate Program in Electrical and Computer Engineering, The Ohio State University, 2011. Dissertation Committee: John L. Volakis, Advisor; Kubilay Sertel, Co-advisor; Robert J. Burkholder; Fernando L. Teixeira. © Copyright by Erdinc Irci 2011. Abstract: There is strong interest in combining many antenna functionalities within a single, wideband aperture. However, size restrictions and conformal installation requirements are major obstacles to this goal (in terms of gain and bandwidth). Of particular importance is bandwidth, which, as is well known, decreases when the antenna is placed closer to the ground plane. Hence, recent efforts on EBG and AMC ground planes were aimed at mitigating this deterioration for low-profile antennas. In this dissertation, we propose a new class of tightly coupled arrays (TCAs) which exhibit substantially broader bandwidth than a single patch antenna of the same size. The enhancement is due to the cancellation of the ground plane inductance by the capacitance of the TCA aperture. This concept of reactive impedance cancellation was motivated by the ultrawideband (UWB) current sheet array (CSA) introduced by Munk in 2003. We demonstrate that UWB operation as broad as 7:1 can be achieved for an aperture as thin as λ/17 at the lowest frequency. This represents 40% greater wideband performance and a 35% thinner profile compared to the CSA. Much of the dissertation’s focus is on adapting the conformal TCA concept to small and very low-profile finite arrays. -

Patch Antenna Array for RF Energy Harvesting Systems in 2.4 Ghz WLAN Frequency Band

Published by: International Journal of Engineering Research & Technology (IJERT), http://www.ijert.org, ISSN: 2278-0181, Vol. 9 Issue 05, May-2020. Patch Antenna Array for RF Energy Harvesting Systems in 2.4 GHz WLAN Frequency Band. Akash Kumar Gupta, Department of ECE, Raghu Institute of Technology, Visakhapatnam, India. V. Praveen, V. Swetha Sri, CH. Nagavinay Kumar, B. Kishore, UG-Student, Department of ECE, Raghu Institute of Technology, Visakhapatnam, India.

Abstract: In present days, technology has been emerging with significant importance, mainly in wireless local area networks, and energy harvesting is playing a key role in this. RF energy harvesting is the collection of small amounts of ambient energy to power wireless devices. In particular, radio frequency (RF) energy has key attributes that make it very attractive for low-power consumer electronics and wireless sensor networks (WSNs). Commercial RF transmitting stations such as Wi-Fi, or radar signals, may provide ambient RF energy. Throughout this paper, a specific emphasis is on RFEH as a green technology that is suitable for solving power supply problems of WLAN nodes in difficult environments or locations that cannot be reached. For RF harvesting, RF signals are extracted using antennas. In this work to

harvesting topologies that operate low-power devices. It has three stages: receiving antenna, energy conversion into DC output, and utilization of the output in low-power applications.

II. RADIO FREQUENCY ENERGY HARVESTING FOR MICROSTRIP ANTENNA. A microstrip patch antenna is a metallic patch fabricated on a dielectric substrate. The microstrip patch antenna is a layered structure with the ground plane as the bottom layer, the dielectric substrate as the middle layer, and the radiating patch as the top layer. -

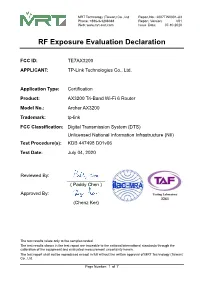

RF Exposure Evaluation Declaration

MRT Technology (Taiwan) Co., Ltd. Report No.: 2007TW0001-U3. Phone: +886-3-3288388. Report Version: V01. Web: www.mrt-cert.com. Issue Date: 07-10-2020.

RF Exposure Evaluation Declaration. FCC ID: TE7AX3200. Applicant: TP-Link Technologies Co., Ltd. Application Type: Certification. Product: AX3200 Tri-Band Wi-Fi 6 Router. Model No.: Archer AX3200. Trademark: tp-link. FCC Classification: Digital Transmission System (DTS), Unlicensed National Information Infrastructure (NII). Test Procedure(s): KDB 447498 D01v06. Test Date: July 04, 2020. Reviewed By: (Paddy Chen). Approved By: (Chenz Ker).

The test results relate only to the samples tested. The test results shown in the test report are traceable to the national/international standards through the calibration of the equipment and evaluated measurement uncertainty herein. The test report shall not be reproduced except in full without the written approval of MRT Technology (Taiwan) Co., Ltd. Page Number: 1 of 7.

Revision History

| Report No. | Version | Description | Issue Date | Note |
|---|---|---|---|---|
| 2007TW0001-U3 | Rev. 01 | Initial report | 07-10-2020 | Valid |

1. PRODUCT INFORMATION

1.1. Equipment Description. Product Name: AX3200 Tri-Band Wi-Fi 6 Router. Model No.: Archer AX3200. Brand Name: tp-link. Wi-Fi Specification: 802.11a/b/g/n/ac/ax.

1.2. Description of Available Antennas

| Antenna Type | Frequency Band (MHz) | TX Paths | Number of Spatial Streams | Max Antenna Gain (dBi) | Beamforming Directional Gain (dBi) | CDD Directional Gain for Power (dBi) | CDD Directional Gain for PSD (dBi) |
|---|---|---|---|---|---|---|---|
| Monopole Antenna | 2412 ~ 2462 | 2 | 1 | 3.52 | 6.53 | 3.52 | 6.53 |
| Monopole Antenna | 5150 ~ 5250 | 2 | 1 | 3.54 | 6.55 | 3.54 | 6.55 |
| Monopole Antenna | 5725 ~ 5850 | 4 | 1 | 3.20 | 9.22 | 3.20 | 9.22 |

Note: 1. -
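The directional gains listed in the antenna description above are numerically consistent with adding 10·log10(TX paths / spatial streams) to the antenna gain. That relation is inferred here from the listed numbers and is an assumption, not something the excerpt states; the function name `directional_gain_dbi` is hypothetical. A minimal Python sketch of the arithmetic:

```python
import math


def directional_gain_dbi(antenna_gain_dbi, tx_paths, spatial_streams=1):
    """Antenna gain plus an assumed array term of 10*log10(paths / streams)."""
    return antenna_gain_dbi + 10 * math.log10(tx_paths / spatial_streams)


# (frequency band in MHz, antenna gain in dBi, TX paths) as listed in the excerpt
rows = [
    ("2412 ~ 2462", 3.52, 2),
    ("5150 ~ 5250", 3.54, 2),
    ("5725 ~ 5850", 3.20, 4),
]

results = {band: round(directional_gain_dbi(gain, paths), 2) for band, gain, paths in rows}

for band, value in results.items():
    print(f"{band} MHz: {value:.2f} dBi")
```

Under this assumption the three bands evaluate to 6.53, 6.55 and 9.22 dBi, which matches the directional gain figures in the excerpt.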

Review of Recent Phased Arrays for Millimeter-Wave Wireless Communication

sensors Review Review of Recent Phased Arrays for Millimeter-Wave Wireless Communication Aqeel Hussain Naqvi and Sungjoon Lim * School of Electrical and Electronics Engineering, College of Engineering, Chung-Ang University, 221, Heukseok-Dong, Dongjak-Gu, Seoul 156-756, Korea; [email protected] * Correspondence: [email protected]; Tel.: +82-2-820-5827; Fax: +82-2-812-7431 Received: 27 July 2018; Accepted: 18 September 2018; Published: 21 September 2018 Abstract: Owing to the rapid growth in wireless data traffic, millimeter-wave (mm-wave) communications have shown tremendous promise and are considered an attractive technique in fifth-generation (5G) wireless communication systems. However, to design robust communication systems, it is important to understand the channel dynamics with respect to space and time at these frequencies. Millimeter-wave signals are highly susceptible to blocking, and they have communication limitations owing to their poor signal attenuation compared with microwave signals. Therefore, by employing highly directional antennas, co-channel interference to or from other systems can be alleviated using line-of-sight (LOS) propagation. Because of the ability to shape, switch, or scan the propagating beam, phased arrays play an important role in advanced wireless communication systems. Beam-switching, beam-scanning, and multibeam arrays can be realized at mm-wave frequencies using analog or digital system architectures. This review article presents state-of-the-art phased arrays for mm-wave mobile terminals (MSs) and base stations (BSs), with an emphasis on beamforming arrays. We also discuss challenges and strategies used to address unfavorable path loss and blockage issues related to mm-wave applications, which sets future directions. -

Millimeter-Wave Evolution for 5G Cellular Networks

Millimeter-wave Evolution for 5G Cellular Networks. Kei SAKAGUCHI†a), Gia Khanh TRAN††, Hidekazu SHIMODAIRA††, Shinobu NANBA†††, Toshiaki SAKURAI††††, Koji TAKINAMI†††††, Isabelle SIAUD*, Emilio Calvanese STRINATI**, Antonio CAPONE***, Ingolf KARLS****, Reza AREFI****, and Thomas HAUSTEIN*****. SUMMARY: Triggered by the explosion of mobile traffic, 5G (5th Generation) cellular network requires evolution to increase the system rate 1000 times higher than the current systems in 10 years. Motivated by this common problem, there are several studies to integrate mm-wave access into current cellular networks as multi-band heterogeneous networks to exploit the ultra-wideband aspect of the mm-wave band. The authors of this paper have proposed comprehensive architecture of cellular networks with mm-wave access, where mm-wave small cell basestations and a conventional macro basestation are connected to Centralized-RAN (C-RAN) to effectively operate the system by enabling power efficient seamless handover as well as centralized resource control including dynamic cell structuring to match the limited coverage of mm-wave access with high traffic user locations via user-plane/control-plane splitting. In this paper, to prove the effectiveness of the proposed 5G cellular networks with mm-wave access, system level simulation is conducted by introducing an expected future traffic model, a measurement based mm-wave propagation model, and a centralized cell association algorithm by exploiting the C-RAN architecture. The numerical results show the effectiveness of the proposed network to realize 1000 times higher system rate than the current network in 10 years which is not achieved by the small cells using commonly considered 3.5 GHz band. -

Notes on the Extended Aperture Log-Periodic Array Part 1: the Extended Element and the Standard LPDA

Notes on the Extended Aperture Log-Periodic Array Part 1: The Extended Element and the Standard LPDA L. B. Cebik, W4RNL In the R.S.G.B. Bulletin for July, 1961, F. J. H. Charman, G6CJ, resurrected a 1938 idea for antenna elements developed by E. C. Cork of E.M.I. Electronics. The elements are variously called loaded or extended wire elements. Charman increased the length of a center-fed wire to 1-λ while still obtaining a bi-directional pattern and a usable feedpoint impedance. He inserted capacitances between the center ½-λ section and the outer sections, thereby changing the current distribution. After a brief flurry of HF and VHF antenna ideas, the technique fell into obscurity, although the basic concept is related to certain collinear designs that use an inductance and a space between sections to obtain the correct phasing. I am indebted to Roger Paskvan, WA0IUJ, for sending me the relevant RSGB materials on the Charman element. In the 1970s, the element reappeared in a new garb as integral to the 1973 U.S. patent for “Extended Aperture Log-Periodic and Quasi-Log-Periodic Antennas and Arrays” received by Robert L. Tanner, founder and technical director of TCI, Inc. (U.S. patent 3,765,022, Oct. 9, 1973) The ideas found their way into TCI’s Model 510 and Model 512 5-30-MHz extended aperture log-periodic dipole arrays (EALPDAs). The arrays may have been in the TCI book since Tanner’s filing date (1971) or shortly thereafter, although TCI had previously produced other versions of the log-periodic dipole array (LPDA). -

Output SNR Improvement in Array Processing Architectures of WCDMA Systems by Low Side Lobe Beamforming

Output SNR Improvement in Array Processing Architectures of WCDMA Systems by Low Side Lobe Beamforming. * Rajesh Khanna & Rajiv Saxena, Department of Electronics & Communication Engineering, Thapar Institute of Engineering & Technology, Patiala. * Principal, Rustamji Institute of Technology, BSF Academy, Tekanpur.

ABSTRACT: In wideband direct sequence code division multiple access (WCDMA), the same frequency spectrum is shared through all cells simultaneously, as opposed to TDMA, which is used for most 2nd generation systems. In a WCDMA system all transmitted signals turn out to be disturbing factors to all other users in the system in the form of interference limiting system capacity. To suppress the amount of interference, fast and reliable interference controlling algorithms must be employed in next generation systems. In this paper it is shown that antenna arrays with steerable low side lobes can reduce interference in WCDMA and can increase the system capacity and output signal to noise ratio of the array processing architecture.

wanted signal. This is done by placing the nulls in the pattern in the corresponding known directions of these interferers and simultaneously steering the main beam in the direction of the desired signal. This method of beam forming is known as Null Beam Forming [1]. Adaptive arrays, often referred to as “Smart antennas”, were suggested in the early 1960’s and proved to be useful to cancel the directional interfering signals, improving the performance of cellular wireless communications systems [3]. An adaptive array with optimum weight vector can be used for null beamforming. The flexibility of array weighting to get the desired array pattern can be exploited to cancel directional sources operating even at the same frequency -

Wide-Angle Scanning Wide-Band Phased Array Antennas

Wide-angle scanning wide-band phased array antennas. ANDERS ELLGARDT. Doctoral Thesis, Stockholm, Sweden 2009. TRITA-EE 2009:017, ISSN 1653-5146, ISBN 978-91-7415-291-3. KTH School of Electrical Engineering, SE-100 44 Stockholm, SWEDEN. Academic dissertation which, with the permission of KTH Royal Institute of Technology, is presented for public examination for the degree of Doctor of Technology on Friday, 8 May 2009 at 13:15 in hall F3, KTH Royal Institute of Technology, Lindstedtsvägen 26, Stockholm. © Anders Ellgardt, 2009. Printed by: Universitetsservice US AB. Abstract: This thesis considers problems related to the design and the analysis of wide-angle scanning phased arrays. The goals of the thesis are the design and analysis of antenna elements suitable for wide-angle scanning array antennas, and the study of scan blindness effects and edge effects for this type of antenna. Wide-angle scanning arrays are useful in radar applications, and the designs considered in the thesis are intended for an airborne radar antenna. After a study of the wide-angle scanning limits of three candidate elements, the tapered-slot was chosen for the proposed application. A tapered-slot antenna element was designed by using the infinite array approach, and the resulting element is capable of scanning out to 60° from broadside in all scan planes for a bandwidth of 2.5:1 and an active reflection coefficient less than -10 dB. This design was implemented on an experimental antenna consisting of 256 elements. The predicted performance of the antenna was then verified by measuring the coupling coefficients and the embedded element patterns, and the measurements agreed well with the numerical predictions. -

Realization of a Planar Low-Profile Broadband Phased Array Antenna

REALIZATION OF A PLANAR LOW-PROFILE BROADBAND PHASED ARRAY ANTENNA. DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By Justin A. Kasemodel, M.S., B.S. Graduate Program in Electrical and Computer Engineering, The Ohio State University, 2010. Dissertation Committee: John L. Volakis, Co-Adviser; Chi-Chih Chen, Co-Adviser; Joel T. Johnson. ABSTRACT: With space at a premium, there is strong interest to develop a single ultra wideband (UWB) conformal phased array aperture capable of supporting communications, electronic warfare and radar functions. However, typical wideband designs transform into narrowband or multiband apertures when placed over a ground plane. Therefore, it is not surprising that considerable attention has been devoted to electromagnetic bandgap (EBG) surfaces to mitigate the ground plane's destructive interference. However, EBGs and other periodic ground planes are narrowband and not suited for wideband applications. As a result, developing low-cost planar phased array apertures, which are concurrently broadband and low-profile over a ground plane, remains a challenge. The array design presented herein is based on the infinite current sheet array (CSA) concept and uses tightly coupled dipole elements for wideband conformal operation. An important aspect of tightly coupled dipole arrays (TCDAs) is the capacitive coupling that enables the following: (1) allows field propagation to neighboring elements, (2) reduces dipole resonant frequency, (3) cancels ground plane inductance, yielding a low-profile, ultra wideband phased array aperture without using lossy materials or EBGs on the ground plane. -

Self-Interference Cancellation Via Beamforming in an Integrated Full Duplex Circulator-Receiver Phased Array

Self-Interference Cancellation via Beamforming in an Integrated Full Duplex Circulator-Receiver Phased Array. Mahmood Baraani Dastjerdi, Tingjun Chen, Negar Reiskarimian, Gil Zussman, and Harish Krishnaswamy. Department of Electrical Engineering, Columbia University, New York, NY, 10027, USA.

Abstract: This paper describes how phased array beamforming can be exploited to achieve wideband self-interference cancellation (SIC). This SIC is gained with no additional power consumption while minimizing link budget (transmitter (TX) and receiver (RX) array gain) penalty by repurposing spatial degrees of freedom. Unlike prior works that rely only on digital transmit beamforming, this work takes advantage of analog/RF beamforming capability that can be easily embedded within an integrated circulator-receiver array. This enables (i) obtaining SIC through beamforming on both TX and RX sides, thus increasing the number of degrees of freedom (DoF) that can be used to obtain SIC and form the desired beams, while (ii) sharing the antenna array between TX and RX. A 750MHz 65nm CMOS scalable 4-element full-duplex circulator-receiver array is demonstrated in conjunction with a TX phased array implemented using discrete components.

TABLE I: Link budget calculations.

| Metric | Calculation | Value |
|---|---|---|
| Frequency (f) | | 730MHz |
| # of ANT Elements (N) | | 8 |
| TX Power per Element (PTX) | | 1dBm |
| TX Array Gain (AGTX) | N² − 3dB | 15dB |
| TX ANT Gain (GTX) | | 6dBi |
| Bandwidth (BW) | | 16.26MHz |
| RX Noise Figure (NF) | | 5dB |
| RX Array Gain (AGRX) | N² − 3dB | 15dB |
| RX ANT Gain (GRX) | | 6dBi |
| RX Array Noise Floor referred to the ANT Input (Nfloor) | kT · BW · NF · N | −88dBm |
| Required SNR | | 20dB |
| RX Array Sensitivity referred to the ANT Input (Psense) | Nfloor · SNR | −68dBm |
| Implementation Losses (IL) | | 10dB |

λ 4π (PTXAGTXGTX · -
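The link budget entries in the excerpt above can be cross-checked with a few lines of decibel arithmetic. The following Python sketch assumes a reference temperature of 290 K for the kT term and reads the garbled bandwidth entry as 16.26 MHz; neither is confirmed by the excerpt, and the variable names are illustrative only.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23
TEMPERATURE_K = 290.0  # assumed reference temperature; the excerpt only writes "kT"


def to_db(ratio):
    """Convert a linear power ratio to decibels."""
    return 10 * math.log10(ratio)


n_elements = 8
bandwidth_hz = 16.26e6   # assumed reading of the bandwidth entry
noise_figure_db = 5.0
required_snr_db = 20.0

# Array gain: N^2 expressed in dB, minus the 3 dB listed in the table.
array_gain_db = to_db(n_elements ** 2) - 3.0

# Thermal noise density kT in dBm/Hz (the +30 converts dBW to dBm).
kt_dbm_per_hz = to_db(BOLTZMANN_J_PER_K * TEMPERATURE_K) + 30.0

# Noise floor: the product kT * BW * NF * N becomes a sum in dB.
noise_floor_dbm = (kt_dbm_per_hz + to_db(bandwidth_hz)
                   + noise_figure_db + to_db(n_elements))

# Sensitivity: the product Nfloor * SNR becomes a sum in dB.
sensitivity_dbm = noise_floor_dbm + required_snr_db

print(f"array gain  : {array_gain_db:.1f} dB")
print(f"noise floor : {noise_floor_dbm:.1f} dBm")
print(f"sensitivity : {sensitivity_dbm:.1f} dBm")
```

Under these assumptions the computed values round to the 15 dB, −88 dBm and −68 dBm listed in the link budget.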

The Field Day Special Antenna

Try the "FD Special" Antenna

Looking for an antenna that's simple, inexpensive, lightweight and easy to install? Here's one that fits the description. By Roy W. Lewallen,* W7EL

While the Field Day "juices" were flowing last year, my Field Day partners and I decided to become more competitive without compromising our general philosophy: Use all homemade gear, pack it in to the site, and don't let operating interfere with watching the scenery. Since most of our gear already ran the accepted QRP power limit of 10-W dc input and had been designed to provide high output efficiency, the antenna seemed like the reasonable point of attack (our high quality superheterodyne receivers have not been the limiting item). The constraints dictated that an antenna be lightweight, portable and easy to put up. It should also have substantial gain. Because of our West Coast location, the front-to-back ratio was not a concern. But having a reasonably wide lobe toward the East was. We desired a low SWR because of the relatively high loss of our RG-58/U and/or RG-174/U feed line.

want the nuisance of establishing a decent ground system which most vertical arrays require. The theoretical gain and front-to-back ratio of 2-element arrays with 1/8-wavelength spacing between the elements are shown in Figs. 1 and 2. Note the lower curve of Fig. 1. It shows the effect of losses on the gain (losses don't affect the front-to-back ratio, and change only the scaling of the pattern). Fig. 3 shows the patterns of arrays with 135, 160 and 180-degree relative spacing. All are drawn to the same scale. The 1/8-wavelength-

[Figure: plot with horizontal axis "Angle between element currents (degrees)" and a curve labeled "TL loss/element".] -

Chapter 5: Antenna Arrays Antennas and Propagation

Antennas and Propagation, Chapter 5: Antenna Arrays

5 Antenna Arrays. Advantage: combine multiple antennas; more flexibility in transmitting / receiving signals. Spatial filtering. Beamforming: excite elements coherently (phase/amp shifts); steer main lobes and nulls. Super-resolution methods: non-linear techniques; allow very high resolution for direction finding. (Antennas and Propagation, Slide 2, Chapter 4)

5 Antenna Arrays (2). Diversity: redundant signals on multiple antennas; reduce effects due to channel fading. Spatial multiplexing (MIMO): different information on multiple antennas; increase system throughput (capacity). (Slide 3)

General Array. Assume we have N elements; pattern of ith antenna; total pattern. Identical antenna elements: element factor, array factor, "pattern multiplication". (Slide 4)

Uniform Linear Array (ULA). Place N elements on the z-axis with uniform spacing Δ. (Slide 5)

Uniform Excitation. Apply equal amplitude to elements (different phases only). Recall: (Slide 6)

Uniform Excitation (2). Note: sin(Nx)/sin(x) behaves like N sinc(x). Maximum occurs for θ = θ0. If we center array about z = 0, and normalize. Result: steers a beam in direction θ = θ0 that has amplitude N^(1/2) compared to single element ("array gain"). Normalize input power with additional elements. For θ = θ0, sin(Nx)/sin(x) goes to N. (Slide 7)

Uniform Excitation: Examples. Example: N = 8, Δ = λ/2. (Slide 8)
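The uniform-excitation behavior outlined in the slides above can be illustrated with a short Python sketch. It assumes elements on the z-axis with a progressive phase shift that steers the beam to polar angle θ0, so that the per-element phase is ψ = kΔ(cos θ − cos θ0); this convention, the function names, and the default values (taken from the N = 8, Δ = λ/2 example) are assumptions, since the slide equations did not survive extraction.

```python
import cmath
import math


def array_factor(theta, n=8, spacing_wl=0.5, theta0=math.pi / 2):
    """Normalized |AF| of an n-element uniform linear array on the z-axis.

    theta, theta0: polar angles from the z-axis in radians.
    spacing_wl: element spacing in wavelengths.
    """
    psi = 2 * math.pi * spacing_wl * (math.cos(theta) - math.cos(theta0))
    total = sum(cmath.exp(1j * m * psi) for m in range(n))
    return abs(total) / n


def array_factor_closed_form(theta, n=8, spacing_wl=0.5, theta0=math.pi / 2):
    """Same quantity via |sin(n*psi/2) / (n*sin(psi/2))|."""
    psi = 2 * math.pi * spacing_wl * (math.cos(theta) - math.cos(theta0))
    denominator = math.sin(psi / 2)
    if abs(denominator) < 1e-12:
        return 1.0  # limit at the main beam, where the ratio tends to n
    return abs(math.sin(n * psi / 2) / (n * denominator))


def peak_amplitude_with_normalized_power(n):
    """Peak amplitude relative to one element when total input power is fixed.

    Each element is driven with amplitude 1/sqrt(n), and the n contributions
    add coherently at the beam peak.
    """
    return n * (1 / math.sqrt(n))


for degrees in (90, 80, 60, 30):
    value = array_factor(math.radians(degrees))
    print(f"theta = {degrees:3d} deg  |AF| = {value:.3f}")
```

With the defaults the main beam points broadside (θ = 90°), and `peak_amplitude_with_normalized_power(8)` evaluates to the square root of 8, in line with the N^(1/2) array gain mentioned in the slides.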