OpenGL ES 1.0 (for C++) • M3G (aka JSR-184, for Java)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


High End Visualization with Scalable Display System

HIGH END VISUALIZATION WITH SCALABLE DISPLAY SYSTEM

Dinesh M. Sarode*, Bose S.K.*, Dhekne P.S.*, Venkata P.P.K.*, Computer Division, Bhabha Atomic Research Centre, Mumbai, India

Abstract

Today we can have huge datasets resulting from computer simulations (CFD, physics, chemistry etc) and sensor measurements (medical, seismic and satellite). There is exponential growth in computational requirements in scientific research. Modern parallel computers and Grid are providing the required computational power for the simulation runs. The rich visualization is essential in interpreting the large, dynamic data generated from these simulation runs. The visualization process maps these datasets onto graphical representations and then generates the pixel representation. The large number of pixels shows the picture in greater details and interaction with it enables the greater insight on the part of user in understanding the data more quickly, picking out small anomalies that could [...]

[...] display, then the large number of pixels shows the picture in greater details and interaction with it enables the greater insight in understanding the data. However, the memory constraints, lack of the rendering power and the display resolution offered by even the most powerful graphics workstation makes the visualization of this magnitude difficult or impossible.

While the cost-performance ratio for the component based on semiconductor technologies doubling in every 18 months or beyond that for graphics accelerator cards, the display resolution is lagging far behind. The resolutions of the displays have been increasing at an annual rate of 5% for the last two decades. The ability to scale the components: graphics accelerator and display by combining them is the most cost-effective way to meet the ever-increasing demands for high resolution.

THINC: a Virtual and Remote Display Architecture for Desktop Computing and Mobile Devices

THINC: A Virtual and Remote Display Architecture for Desktop Computing and Mobile Devices

Ricardo A. Baratto

Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences, COLUMBIA UNIVERSITY, 2011

© 2011 Ricardo A. Baratto. This work may be used in accordance with Creative Commons, Attribution-NonCommercial-NoDerivs License. For more information about that license, see http://creativecommons.org/licenses/by-nc-nd/3.0/. For other uses, please contact the author.

ABSTRACT

THINC is a new virtual and remote display architecture for desktop computing. It has been designed to address the limitations and performance shortcomings of existing remote display technology, and to provide a building block around which novel desktop architectures can be built. THINC is architected around the notion of a virtual display device driver, a software-only component that behaves like a traditional device driver, but instead of managing specific hardware, enables desktop input and output to be intercepted, manipulated, and redirected at will. On top of this architecture, THINC introduces a simple, low-level, device-independent representation of display changes, and a number of novel optimizations and techniques to perform efficient interception and redirection of display output.

This dissertation presents the design and implementation of THINC. It also introduces a number of novel systems which build upon THINC's architecture to provide new and improved desktop computing services. The contributions of this dissertation are as follows:

• A high performance remote display system for LAN and WAN environments.

Wearable Mixed Reality System in Less Than 1 Pound

Eurographics Symposium on Virtual Environments (2006), Roger Hubbold and Ming Lin (Editors)

Wearable Mixed Reality System In Less Than 1 Pound

Achille Peternier, Frédéric Vexo and Daniel Thalmann, Virtual Reality Laboratory (VRLab), École Polytechnique Fédérale de Lausanne (EPFL), 1015 Lausanne, Switzerland

Abstract

We have designed a wearable Mixed Reality (MR) framework which allows to real-time render game-like 3D scenes on see-through head-mounted displays (see through HMDs) and to localize the user position within a known internet wireless area. Our equipment weights less than 1 Pound (0.45 Kilos). The information visualized on the mobile device could be sent on-demand from a remote server and realtime rendered onboard. We present our PDA-based platform as a valid alternative to use in wearable MR contexts under less mobility and encumbering constraints: our approach eliminates the typical backpack with a laptop, a GPS antenna and a heavy HMD usually required in this cases. A discussion about our results and user experiences with our approach using a handheld for 3D rendering is presented as well.

1. Introduction

The goal of wearable Mixed Reality is to give more information to users by mixing it with the real world in the less invasive way. Users need to move freely and comfortably when wear such systems, in order to improve their expe- [...]

[...] also few minutes to put on or remove the whole system. Additionally, a second person is required to help him/her installing the framework for the first time. Gleue and Daehne pointed the encumbering, even if limited, of their platform and the need of a skilled technician for the maintenance of their system [GD01].

Order Independent Transparency in OpenGL 4.x

Order Independent Transparency In OpenGL 4.x

Christoph Kubisch – [email protected]

TRANSPARENT EFFECTS

- Photorealism: glass, transmissive materials; participating media (smoke...); simplification of hair rendering
- Scientific visualization: reveal obscured objects; show data in layers

THE CHALLENGE

- Blending operator is not commutative
- Front to back, back to front: sorting objects not sufficient; sorting triangles not sufficient
- Very costly, also many state changes
- Need to sort "fragments"

RENDERING APPROACHES

- OpenGL 4.x allows various one- or two-pass variants
- Previous high quality approaches: Stochastic Transparency [Enderton et al.]; Depth Peeling [Everitt]
- Caveat: multiple scene passes required
- (Figure labels: 3 peel layers; peak ~84 layers; model courtesy of PTC)

RECORD & SORT

- Render opaque: depth-buffer rejects occluded fragments, using `layout (early_fragment_tests) in;`
- Render transparent: record color + depth as `uvec2(packUnorm4x8(color), floatBitsToUint(gl_FragCoord.z));`
- Resolve transparent: fullscreen sort & blend per pixel

RESOLVE

- Fullscreen pass
- Not efficient to globally sort all fragments per pixel
- Sort K nearest correctly via register array
- Blend fullscreen on top of framebuffer
- Slide pseudocode: `uvec2 fragments[K]; // encodes color and depth`, then `n = load(fragments); sort(fragments, n); vec4 color = vec4(0); for (i < n) { blend(color, fragments[i]); } gl_FragColor = color;`

TAIL HANDLING

- Tail handling: discard fragments > K, or blend below sorted and hope error is not obvious [Salvi et al.]
- Many close low alpha values are problematic
- May not be frame-coherent (flicker) if blend is not primitive-ordered
- (Figure labels: K = 4; K = 4; K = 16; Tailblend)

RECORD TECHNIQUES

- Unbounded: record all fragments that fit in scratch buffer; find & sort K closest later
- Pro: fast record
- Con: slow resolve
- Con: out of memory issues

HOW TO STORE

[...]
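The record and resolve steps listed in this excerpt can be illustrated on the CPU. The following is a minimal Python sketch, not the presenter's implementation: `pack_unorm4x8` and `float_bits_to_uint` mimic the GLSL built-ins named on the slide, while `record`, `resolve`, the back-to-front "over" blend, and the choice to simply discard fragments beyond the K nearest are assumptions made for illustration.

```python
import struct

def pack_unorm4x8(rgba):
    """Pack four floats in [0, 1] into one 32-bit word, 8 bits per channel.
    The first component lands in the least significant byte, as in GLSL."""
    r, g, b, a = (int(round(min(max(c, 0.0), 1.0) * 255.0)) for c in rgba)
    return r | (g << 8) | (b << 16) | (a << 24)

def unpack_unorm4x8(word):
    """Inverse of pack_unorm4x8: returns (r, g, b, a) as floats in [0, 1]."""
    return tuple(((word >> shift) & 0xFF) / 255.0 for shift in (0, 8, 16, 24))

def float_bits_to_uint(value):
    """Reinterpret a 32-bit float as an unsigned integer."""
    return struct.unpack("<I", struct.pack("<f", value))[0]

def record(color, depth):
    """Hypothetical helper: one stored fragment as (packed color, depth bits)."""
    return (pack_unorm4x8(color), float_bits_to_uint(depth))

def resolve(fragments, background, k):
    """Hypothetical per-pixel resolve: keep the k nearest fragments and blend.

    Depth bits of non-negative floats sort in the same order as the floats,
    so the integer key can be compared directly. Fragments beyond the k
    nearest are discarded (one of the tail-handling options on the slide).
    """
    nearest = sorted(fragments, key=lambda frag: frag[1])[:k]
    r, g, b = background
    # Blend back to front with the standard "over" operator (an assumption;
    # the slide only names a generic blend step).
    for packed, _depth_bits in reversed(nearest):
        sr, sg, sb, sa = unpack_unorm4x8(packed)
        r = sr * sa + r * (1.0 - sa)
        g = sg * sa + g * (1.0 - sa)
        b = sb * sa + b * (1.0 - sa)
    return (r, g, b)

# Two half-transparent fragments submitted in arbitrary order.
red_near = record((1.0, 0.0, 0.0, 0.5), 0.25)
blue_far = record((0.0, 0.0, 1.0, 0.5), 0.75)
pixel = resolve([blue_far, red_near], background=(0.0, 0.0, 0.0), k=4)
```

Because the fragments are sorted per pixel before blending, the result does not depend on submission order, which is the property the "Need to sort fragments" bullet asks for.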

Best Practice for Mobile

If this doesn’t look familiar, you’re in the wrong conference.

This section of the course is about the ways that mobile graphics hardware works, and how to work with this hardware to get the best performance. The focus here is on the Vulkan API because the explicit nature exposes the hardware, but the principles apply to other programming models. There are ways of getting better mobile efficiency by reducing what you’re drawing: running at lower frame rate or resolution, only redrawing what and when you need to, etc.; we’ve addressed those in previous years and you can find some content on the course web site, but this time the focus is on high-performance 3D rendering. Those other techniques still apply, but this talk is about how to keep the graphics moving and assuming you’re already doing what you can to reduce your workload.

Mobile graphics processing units are usually fundamentally different from classical desktop designs.

* Mobile GPUs mostly (but not exclusively) use tiling, desktop GPUs tend to use immediate-mode renderers.
* They use system RAM rather than dedicated local memory and a bus connection to the GPU.
* They have multiple cores (but not so much hyperthreading), some of which may be optimised for low power rather than performance.
* Vulkan and similar APIs make these features more visible to the developer.

Here, we’re mostly using Vulkan for examples, but the architectural behaviour applies to other APIs.

Let’s start with the tiled renderer. There are many approaches to tiling, even within a company.

Power Optimizations for Graphics Processors

POWER OPTIMIZATIONS FOR GRAPHICS PROCESSORS

by B.V.N. SILPA, Department of Computer Science and Engineering

Submitted in fulfillment of the requirements of the degree of Doctor of Philosophy to the Indian Institute of Technology Delhi, March 2011

Certificate

This is to certify that the thesis titled Power optimizations for graphics processors being submitted by B V N Silpa for the award of Doctor of Philosophy in Computer Science & Engg. is a record of bona fide work carried out by her under my guidance and supervision at the Department of Computer Science & Engineering, Indian Institute of Technology Delhi. The work presented in this thesis has not been submitted elsewhere, either in part or full, for the award of any other degree or diploma.

Preeti Ranjan Panda, Professor, Dept. of Computer Science & Engg., Indian Institute of Technology Delhi

Acknowledgment

It is with immense gratitude that I acknowledge the support and help of my Professor Preeti Ranjan Panda in guiding me through this thesis. I would like to thank Professors M. Balakrishnan, Anshul Kumar, G.S. Visweswaran and Kolin Paul for their valuable feedback, suggestions and help in all respects. I am indebted to my dear friend G Krishnaiah for being my constant support and an impartial critique. I would like to thank Neeraj Goel, Anant Vishnoi and Aryabartta Sahu for their technical and moral support. I would also like to thank the staff of Philips, FPGA, Intel laboratories and IIT Delhi for their help.

A Hybrid Client-Server Based Technique for Navigation in Large Terrains Using Mobile Devices

A Hybrid Client-Server Based Technique for Navigation in Large Terrains Using Mobile Devices

José M. Noguera, Rafael J. Segura, Carlos J. Ogáyar, Grupo de Gráficos y Geomática, Universidad de Jaén

Robert Joan-Arinyo, Grup d’Informàtica a l’Enginyeria, Universitat Politècnica de Catalunya

January 29, 2010

Abstract

We describe a hybrid client-server technique for remote adaptive streaming and rendering of large terrains in resource-limited mobile devices. The technique has been designed to achieve an interactive rendering performance on a mobile device connected to a low-bandwidth wireless network. The rendering workload is split between the client and the server. The terrain area close to the viewer is rendered in real-time by the client using a hierarchical multiresolution scheme. The terrain located far from the viewer is portrayed as view-dependent impostors, rendered by the server on demand and, then sent to the client. The hybrid technique provides tools to dynamically balance the rendering workload according to the resources available at the client side and to the saturation of the network and server. A prototype has been built and an exhaustive set of experiments covering several platforms, wireless networks and a wide range of viewer velocities has been conducted. Results show that the approach is feasible, effective and robust.

Keywords: Terrain navigation, Terrain rendering, Mobile computing, 3D graphics, Software Portability, Adaptive streaming.

1 Introduction

Nowadays, mobile devices with computational capabilities like mobile phones and Personal Digital Assistants (PDA) are ubiquitous. Following the general framework depicted in Figure 1, they are used as interactive guides to real environments, offer features such as Global Positioning Systems (GPS), access to location-based data and, visualization of maps and, terrain rendering.

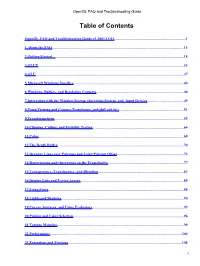

OpenGL FAQ and Troubleshooting Guide

OpenGL FAQ and Troubleshooting Guide

Table of Contents

OpenGL FAQ and Troubleshooting Guide v1.2001.11.01

1 About the FAQ

2 Getting Started

3 GLUT

4 GLU

5 Microsoft Windows Specifics

6 Windows, Buffers, and Rendering Contexts

7 Interacting with the Window System, Operating System, and Input Devices

8 Using Viewing and Camera Transforms, and gluLookAt()

9 Transformations

10 Clipping, Culling,

Ray Tracing on Programmable Graphics Hardware

Ray Tracing on Programmable Graphics Hardware

Timothy J. Purcell, Ian Buck, William R. Mark, Pat Hanrahan, Stanford University

Abstract

Recently a breakthrough has occurred in graphics hardware: fixed function pipelines have been replaced with programmable vertex and fragment processors. In the near future, the graphics pipeline is likely to evolve into a general programmable stream processor capable of more than simply feed-forward triangle rendering. In this paper, we evaluate these trends in programmability of the graphics pipeline and explain how ray tracing can be mapped to graphics hardware. Using our simulator, we analyze the performance of a ray casting implementation on next generation programmable graphics hardware. In addition, we compare the performance difference between non-branching programmable hardware using a multipass implementation and an architecture that supports branching.

[...]

In this paper, we describe an alternative approach to real-time ray tracing that has the potential to out perform CPU-based algorithms without requiring fundamentally new hardware: using commodity programmable graphics hardware to implement ray tracing. Graphics hardware has recently evolved from a fixed-function graphics pipeline optimized for rendering texture-mapped triangles to a graphics pipeline with programmable vertex and fragment stages. In the near-term (next year or two) the graphics processor (GPU) fragment program stage will likely be generalized to include floating point computation and a complete, orthogonal instruction set. These capabilities are being demanded by programmers using the current hardware. As we will show, these capabilities are also sufficient for us to write a complete ray tracer for this hardware. As the programmable stages become more general, the hardware can [...]

Neural Network FAQ, Part 1 of 7

Neural Network FAQ, part 1 of 7: Introduction Archive-name: ai-faq/neural-nets/part1 Last-modified: 2002-05-17 URL: ftp://ftp.sas.com/pub/neural/FAQ.html Maintainer: [email protected] (Warren S. Sarle) Copyright 1997, 1998, 1999, 2000, 2001, 2002 by Warren S. Sarle, Cary, NC, USA. --------------------------------------------------------------- Additions, corrections, or improvements are always welcome. Anybody who is willing to contribute any information, please email me; if it is relevant, I will incorporate it. The monthly posting departs around the 28th of every month. --------------------------------------------------------------- This is the first of seven parts of a monthly posting to the Usenet newsgroup comp.ai.neural-nets (as well as comp.answers and news.answers, where it should be findable at any time). Its purpose is to provide basic information for individuals who are new to the field of neural networks or who are just beginning to read this group. It will help to avoid lengthy discussion of questions that often arise for beginners. SO, PLEASE, SEARCH THIS POSTING FIRST IF YOU HAVE A QUESTION and DON'T POST ANSWERS TO FAQs: POINT THE ASKER TO THIS POSTING The latest version of the FAQ is available as a hypertext document, readable by any WWW (World Wide Web) browser such as Netscape, under the URL: ftp://ftp.sas.com/pub/neural/FAQ.html. If you are reading the version of the FAQ posted in comp.ai.neural-nets, be sure to view it with a monospace font such as Courier. If you view it with a proportional font, tables and formulas will be mangled. -

Computer Hardware and Servicing

GOVERNMENT OF TAMILNADU, DIRECTORATE OF TECHNICAL EDUCATION, CHENNAI – 600 025

STATE PROJECT COORDINATION UNIT

Diploma in Computer Engineering, Course Code: 1052, M – Scheme

e-TEXTBOOK on Computer Hardware and Servicing for VI Semester Diploma in Computer Engineering

Convener for Computer Engineering Discipline: Tmt. A. Ghousia Jabeen, Principal, TPEVR Government Polytechnic College, Vellore – 632202

Team Members for Computer Hardware and Servicing:

Mr. M. Suresh Babu, HOD / Computer Engineering, N.P.A. Centenary Polytechnic College, Kotagiri – 643217

Mr. H. Ganesh, Lecturer (SG) / Computer Engineering, N.P.A. Centenary Polytechnic College, Kotagiri – 643217

Dr. S. Sharmila, HOD / IT, P.S.G. Polytechnic College, Coimbatore – 641001

Validated by Dr. S. Brindha, HOD / Computer Networks, PSG Polytechnic College, Coimbatore – 641001

CONTENTS

Unit 1: MOTHERBOARD COMPONENTS

Unit 2: MEMORY AND I/O DEVICES

Unit 3: DISPLAY, POWER SUPPLY AND BIOS

Unit 4: MAINTENANCE AND TROUBLE SHOOTING OF DESKTOP & LAPTOP COMPUTERS

Unit 5: MOBILE PHONE SERVICING

UNIT 1: MOTHERBOARD COMPONENTS

Learning Objectives: Learner should be able to

➢ Acquire the skills of motherboard and its components

➢ Explain the basic concepts of processor.

➢ Differentiate the types of processor technology

➢ Describe the concepts of chipsets

➢ Differentiate the features of PCI, AGP, USB and processor bus

Introduction: To troubleshoot the PC effectively, a student must be familiar about the components and its features. This chapter focuses the motherboard and its components. Motherboard is an important component of the PC. The architecture and the construction of the motherboard are described. This chapter deals the various types of processors and its features.

Interactive Computer Graphics Stanford CS248, Winter 2021 You Are Almost Done!

Lecture 18: Parallelizing and Optimizing Rasterization

Interactive Computer Graphics, Stanford CS248, Winter 2021

You are almost done!

▪ Wed night deadline: exam redo (no late days)

▪ Thursday night deadline: final project writeup and final project video. It should demonstrate that your algorithms “work”, but hopefully you can get creative and have some fun with it!

▪ Friday: relax and party

Image slides: Cyberpunk 2077; Ghost of Tsushima; Forza Motorsport 7

NVIDIA V100 GPU: 80 streaming multiprocessors (SMs)

▪ L2 cache (6 MB)

▪ 900 GB/sec (4096 bit interface)

▪ GPU memory (HBM) (16 GB)

V100 GPU parallelism

▪ 1.245 GHz clock

▪ 80 SM processor cores per chip

▪ 64 parallel multiply-add units per SM

▪ 80 x 64 = 5,120 fp32 mul-add ALUs = 12.7 TFLOPs *

▪ Up to 163,840 fragments being processed at a time on the chip!

* mul-add counted as 2 flops

RTX 3090 GPU: hardware units for rasterization

RTX 3090 GPU: hardware units for texture mapping

RTX 3090 GPU: hardware units for ray tracing

For the rest of the lecture, I’m going to focus on mapping rasterization workloads to modern mobile GPUs

all Q.
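The V100 throughput figure quoted in this excerpt follows from the clock rate, the SM count, and the per-SM unit count. A short Python sketch of that arithmetic is given here as a check; the variable names are made up for illustration, and the per-SM and per-ALU ratios are simply derived from the slide's own numbers rather than taken from any vendor specification.

```python
# Figures as quoted on the slide.
CLOCK_HZ = 1.245e9               # 1.245 GHz clock
SM_COUNT = 80                    # SM processor cores per chip
MUL_ADD_UNITS_PER_SM = 64        # parallel multiply-add units per SM
FLOPS_PER_MUL_ADD = 2            # footnote: mul-add counted as 2 flops
FRAGMENTS_IN_FLIGHT = 163_840    # fragments processed at a time on the chip

# Total fp32 multiply-add ALUs on the chip.
alus = SM_COUNT * MUL_ADD_UNITS_PER_SM

# Peak throughput: every ALU retires one mul-add (2 flops) per clock.
peak_tflops = alus * FLOPS_PER_MUL_ADD * CLOCK_HZ / 1e12

# Derived ratios: how many in-flight fragments each SM and each ALU covers.
fragments_per_sm = FRAGMENTS_IN_FLIGHT // SM_COUNT
fragments_per_alu = FRAGMENTS_IN_FLIGHT // alus

print(f"{alus} ALUs, {peak_tflops:.1f} TFLOPs peak")
print(f"{fragments_per_sm} fragments per SM, {fragments_per_alu} per ALU")
```

The ratio of in-flight fragments to ALUs is well above one, which is consistent with the slide's emphasis on keeping far more work resident than can execute in a single clock.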