Statistical Modelling of Citation Exchange Between Statistics Journals


Recommended publications

Alberto Abadie

ALBERTO ABADIE Office Address Massachusetts Institute of Technology Department of Economics 50 Memorial Drive Building E52, Room 546 Cambridge, MA 02142 E-mail: [email protected] Academic Positions Massachusetts Institute of Technology Cambridge, MA Professor of Economics, 2016-present IDSS Associate Director, 2016-present Harvard University Cambridge, MA Professor of Public Policy, 2005-2016 Visiting Professor of Economics, 2013-2014 Associate Professor of Public Policy, 2004-2005 Assistant Professor of Public Policy, 1999-2004 University of Chicago Chicago, IL Visiting Assistant Professor of Economics, 2002-2003 National Bureau of Economic Research (NBER) Cambridge, MA Research Associate (Labor Studies), 2009-present Faculty Research Fellow (Labor Studies), 2002-2009 Non-Academic Positions Amazon.com, Inc. Seattle, WA Academic Research Consultant, 2020-present Education Massachusetts Institute of Technology Cambridge, MA Ph.D. in Economics, 1995-1999 Thesis title: "Semiparametric Instrumental Variable Methods for Causal Response Models." Centro de Estudios Monetarios y Financieros (CEMFI) Madrid, Spain M.A. in Economics, 1993-1995 Masters Thesis title: "Changes in Spanish Labor Income Structure during the 1980's: A Quantile Regression Approach." Universidad del País Vasco Bilbao, Spain B.A. in Economics, 1987-1992 Specialization Areas: Mathematical Economics and Econometrics. Honors and Awards Elected Fellow of the Econometric Society, 2016. NSF grant SES-1756692, "A General Synthetic Control Framework of Estimation and Inference," 2018-2021. NSF grant SES-0961707, "A General Theory of Matching Estimation," with G. Imbens, 2010-2012. NSF grant SES-0617810, "The Economic Impact of Terrorism: Lessons from the Real Estate Office Markets of New York and Chicago," with S. Dermisi, 2006-2008. -

Miguel De Carvalho

School of Mathematics Miguel de Carvalho Contact M. de Carvalho T: +44 (0) 0131 650 5054 Information The University of Edinburgh B: [email protected] School of Mathematics : mb.carvalho Edinburgh EH9 3FD, UK +: www.maths.ed.ac.uk/ mdecarv Personal Born September 20, 1980 in Montijo, Lisbon. Details Portuguese and EU citizenship. Interests Applied Statistics, Biostatistics, Econometrics, Risk Analysis, Statistics of Extremes. Education Universidade de Lisboa, Portugal Habilitation in Probability and Statistics, 2019 Thesis: Statistical Modeling of Extremes Universidade Nova de Lisboa, Portugal PhD in Mathematics with emphasis on Statistics, 2009 Thesis: Extremum Estimators and Stochastic Optimization Advisors: Manuel Esquível and Tiago Mexia Advisors of Advisors: Jean-Pierre Kahane and Tiago de Oliveira. Nova School of Business and Economics (Triple Accreditation), Portugal MSc in Economics, 2009 Thesis: Mean Regression for Censored Length-Biased Data Advisors: José A. F. Machado and Pedro Portugal Advisors of Advisors: Roger Koenker and John Addison. Universidade Nova de Lisboa, Portugal 'Licenciatura' in Mathematics, 2004 Professional Probation Period: Statistics Portugal (Instituto Nacional de Estatística). Awards & Honours ISBA (International Society for Bayesian Analysis) Lindley Award, 2019. TWAS (Academy of Sciences for the Developing World) Young Scientist Prize, 2015. International Statistical Institute Elected Member, 2014. American Statistical Association Young Researcher Award, Section on Risk Analysis, 2011. National Institute of Statistical Sciences | American Statistical Association Honorary Mention as a Finalist NISS/ASA Best y-BIS Paper Award, 2010. Portuguese Statistical Society (Sociedade Portuguesa de Estatística) Young Researcher Award, 2009. International Association for Statistical Computing ERS IASC Young Researcher Award, 2008. Applied Statistical Modeling Publications 1. -

Kshitij Khare

Kshitij Khare Basic Information Mailing Address: Telephone Numbers: Internet: Department of Statistics Office: (352) 273-2985 E-mail: [email protected]fl.edu 103 Griffin Floyd Hall FAX: (352) 392-5175 Web: http://www.stat.ufl.edu/~kdkhare/ University of Florida Gainesville, FL 32611 Education PhD in Statistics, 2009, Stanford University (Advisor: Persi Diaconis) Masters in Mathematical Finance, 2009, Stanford University Masters in Statistics, 2004, Indian Statistical Institute, India Bachelors in Statistics, 2002, Indian Statistical Institute, India Academic Appointments University of Florida: Associate Professor of Statistics, 2015-present University of Florida: Assistant Professor of Statistics, 2009-2015 Stanford University: Research/Teaching Assistant, Department of Statistics, 2004-2009 Research Interests High-dimensional covariance/network estimation using graphical models High-dimensional inference for vector autoregressive models Markov chain Monte Carlo methods Publications Core Statistics Research Ghosh, S., Khare, K. and Michailidis, G. (2019). "High dimensional posterior consistency in Bayesian vector autoregressive models", Journal of the American Statistical Association 114, 735-748. Khare, K., Oh, S., Rahman, S. and Rajaratnam, B. (2019). "A scalable sparse Cholesky based approach for learning high-dimensional covariance matrices in ordered data", Machine Learning 108, 2061-2086. Cao, X., Khare, K. and Ghosh, M. (2019). "High-dimensional posterior consistency for hierarchical non-local priors in regression", Bayesian Analysis 15, 241-262. Chakraborty, S. and Khare, K. (2019). "Consistent estimation of the spectrum of trace class data augmentation algorithms", Bernoulli 25, 3832-3863. Cao, X., Khare, K. and Ghosh, M. (2019). "Posterior graph selection and estimation consistency for high-dimensional Bayesian DAG models", Annals of Statistics 47, 319-348. -

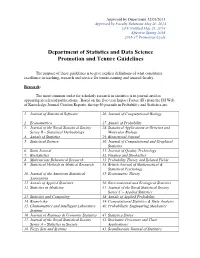

Department of Statistics and Data Science Promotion and Tenure Guidelines

Approved by Department 12/05/2013 Approved by Faculty Relations May 20, 2014 UFF Notified May 21, 2014 Effective Spring 2016 2016-17 Promotion Cycle Department of Statistics and Data Science Promotion and Tenure Guidelines The purpose of these guidelines is to give explicit definitions of what constitutes excellence in teaching, research and service for tenure-earning and tenured faculty. Research: The most common outlet for scholarly research in statistics is in journal articles appearing in refereed publications. Based on the five-year Impact Factor (IF) from the ISI Web of Knowledge Journal Citation Reports, the top 50 journals in Probability and Statistics are:

1. Journal of Statistical Software
2. Econometrica
3. Journal of the Royal Statistical Society Series B – Statistical Methodology
4. Annals of Statistics
5. Statistical Science
6. Stata Journal
7. Biostatistics
8. Multivariate Behavioral Research
9. Statistical Methods in Medical Research
10. Journal of the American Statistical Association
11. Annals of Applied Statistics
12. Statistics in Medicine
13. Statistics and Computing
14. Biometrika
15. Chemometrics and Intelligent Laboratory Systems
16. Journal of Business & Economic Statistics

26. Journal of Computational Biology
27. Annals of Probability
28. Statistical Applications in Genetics and Molecular Biology
29. Biometrical Journal
30. Journal of Computational and Graphical Statistics
31. Journal of Quality Technology
32. Finance and Stochastics
33. Probability Theory and Related Fields
34. British Journal of Mathematical & Statistical Psychology
35. Econometric Theory
36. Environmental and Ecological Statistics
37. Journal of the Royal Statistical Society Series C – Applied Statistics
38. Annals of Applied Probability
39. Computational Statistics & Data Analysis
40. Probabilistic Engineering Mechanics
41. -

Area13 ‐ Riviste Di Classe A

Area13 ‐ Riviste di classe A SETTORE CONCORSUALE / TITOLO 13/A1‐A2‐A3‐A4‐A5 ACADEMY OF MANAGEMENT ANNALS ACADEMY OF MANAGEMENT JOURNAL ACADEMY OF MANAGEMENT LEARNING & EDUCATION ACADEMY OF MANAGEMENT PERSPECTIVES ACADEMY OF MANAGEMENT REVIEW ACCOUNTING REVIEW ACCOUNTING, AUDITING & ACCOUNTABILITY JOURNAL ACCOUNTING, ORGANIZATIONS AND SOCIETY ADMINISTRATIVE SCIENCE QUARTERLY ADVANCES IN APPLIED PROBABILITY AGEING AND SOCIETY AMERICAN ECONOMIC JOURNAL. APPLIED ECONOMICS AMERICAN ECONOMIC JOURNAL. ECONOMIC POLICY AMERICAN ECONOMIC JOURNAL: MACROECONOMICS AMERICAN ECONOMIC JOURNAL: MICROECONOMICS AMERICAN JOURNAL OF AGRICULTURAL ECONOMICS AMERICAN POLITICAL SCIENCE REVIEW AMERICAN REVIEW OF PUBLIC ADMINISTRATION ANNALES DE L'INSTITUT HENRI POINCARE‐PROBABILITES ET STATISTIQUES ANNALS OF PROBABILITY ANNALS OF STATISTICS ANNALS OF TOURISM RESEARCH ANNU. REV. FINANC. ECON. APPLIED FINANCIAL ECONOMICS APPLIED PSYCHOLOGICAL MEASUREMENT ASIA PACIFIC JOURNAL OF MANAGEMENT AUDITING BAYESIAN ANALYSIS BERNOULLI BIOMETRICS BIOMETRIKA BIOSTATISTICS BRITISH JOURNAL OF INDUSTRIAL RELATIONS BRITISH JOURNAL OF MANAGEMENT BRITISH JOURNAL OF MATHEMATICAL & STATISTICAL PSYCHOLOGY BROOKINGS PAPERS ON ECONOMIC ACTIVITY BUSINESS ETHICS QUARTERLY BUSINESS HISTORY REVIEW BUSINESS HORIZONS BUSINESS PROCESS MANAGEMENT JOURNAL BUSINESS STRATEGY AND THE ENVIRONMENT CALIFORNIA MANAGEMENT REVIEW CAMBRIDGE JOURNAL OF ECONOMICS CANADIAN JOURNAL OF ECONOMICS CANADIAN JOURNAL OF FOREST RESEARCH CANADIAN JOURNAL OF STATISTICS‐REVUE CANADIENNE DE STATISTIQUE CHAOS CHAOS, SOLITONS -

Rank Full Journal Title Journal Impact Factor 1 Journal of Statistical

Journal Data Filtered By: Selected JCR Year: 2019 Selected Editions: SCIE Selected Categories: 'STATISTICS & PROBABILITY' Selected Category Scheme: WoS

| Rank | Full Journal Title | Total Cites | Journal Impact Factor | Eigenfactor Score |
|---|---|---|---|---|
| 1 | Journal of Statistical Software | 25,372 | 13.642 | 0.053040 |
| 2 | Annual Review of Statistics and Its Application | 515 | 5.095 | 0.004250 |
| 3 | ECONOMETRICA | 35,846 | 3.992 | 0.040750 |
| 4 | JOURNAL OF THE AMERICAN STATISTICAL ASSOCIATION | 36,843 | 3.989 | 0.032370 |
| 5 | JOURNAL OF THE ROYAL STATISTICAL SOCIETY SERIES B-STATISTICAL METHODOLOGY | 25,492 | 3.965 | 0.018040 |
| 6 | STATISTICAL SCIENCE | 6,545 | 3.583 | 0.007500 |
| 7 | R Journal | 1,811 | 3.312 | 0.007320 |
| 8 | FUZZY SETS AND SYSTEMS | 17,605 | 3.305 | 0.008740 |
| 9 | BIOSTATISTICS | 4,048 | 3.098 | 0.006780 |
| 10 | STATISTICS AND COMPUTING | 4,519 | 3.035 | 0.011050 |
| 11 | IEEE-ACM Transactions on Computational Biology and Bioinformatics | 3,542 | 3.015 | 0.006930 |
| 12 | JOURNAL OF BUSINESS & ECONOMIC STATISTICS | 5,921 | 2.935 | 0.008680 |
| 13 | CHEMOMETRICS AND INTELLIGENT LABORATORY SYSTEMS | 9,421 | 2.895 | 0.007790 |
| 14 | MULTIVARIATE BEHAVIORAL RESEARCH | 7,112 | 2.750 | 0.007880 |
| 15 | INTERNATIONAL STATISTICAL REVIEW | 1,807 | 2.740 | 0.002560 |
| 16 | Bayesian Analysis | 2,000 | 2.696 | 0.006600 |
| 17 | ANNALS OF STATISTICS | 21,466 | 2.650 | 0.027080 |
| 18 | PROBABILISTIC ENGINEERING MECHANICS | 2,689 | 2.411 | 0.002430 |
| 19 | BRITISH JOURNAL OF MATHEMATICAL & STATISTICAL PSYCHOLOGY | 1,965 | 2.388 | 0.003480 |
| 20 | ANNALS OF PROBABILITY | 5,892 | 2.377 | 0.017230 |
| 21 | STOCHASTIC ENVIRONMENTAL RESEARCH AND RISK ASSESSMENT | 4,272 | 2.351 | 0.006810 |
| 22 | JOURNAL OF COMPUTATIONAL AND GRAPHICAL STATISTICS | 4,369 | 2.319 | 0.008900 |

STATISTICAL METHODS IN -

1 Thirty-Five Years of Journal of Econometrics Takeshi Amemiya

Thirty-Five Years of Journal of Econometrics Takeshi Amemiya I. Introduction I am pleased to announce that Elsevier has agreed to sponsor the Amemiya lecture series for the Journal of Econometrics to promote econometrics research in developing countries. It is my honor to give the first lecture of the series. This idea was proposed by Cheng Hsiao, who believes that despite the tremendous advancement of econometric methodology in the last two or three decades, it does not seem to have had much impact on scholars in the third world countries. At the same time, the research interests of scholars in the third world countries, which naturally reflect their unique situation, have not attracted the attention of scholars in developed countries. This makes the submissions from developing countries difficult to go through the refereeing process of the Journal. There is, however, tremendous interest in the subject and scholars and students in the developing countries are eager to learn. For example, when Yongmiao Hong, associate editor of the Journal, organized a summer econometrics workshop in 2006 in Xiamen, China, with the sponsorship of the Journal of Econometrics, Chinese Ministry of Education, and Chinese Academy of Sciences, it attracted 610 applicants, although in the end only 230 were allowed to enroll due to space constraints. We hope that through this lecture series, we can enhance the interactions between scholars in the third world countries and scholars in the developed countries. See Table A, which classifies published articles according to the countries in which the authors resided during 1981-1999, and Table B, the same data during 2000-2007. -

Ten Things We Should Know About Time Series

KIER DISCUSSION PAPER SERIES KYOTO INSTITUTE OF ECONOMIC RESEARCH Discussion Paper No.714 "Great Expectatrics: Great Papers, Great Journals, Great Econometrics" Michael McAleer August 2010 KYOTO UNIVERSITY KYOTO, JAPAN Great Expectatrics: Great Papers, Great Journals, Great Econometrics* Chia-Lin Chang Department of Applied Economics National Chung Hsing University Taichung, Taiwan Michael McAleer Econometric Institute Erasmus School of Economics Erasmus University Rotterdam and Tinbergen Institute The Netherlands and Institute of Economic Research Kyoto University Japan Les Oxley Department of Economics and Finance University of Canterbury New Zealand Revised: August 2010 * The authors wish to thank Dennis Fok, Philip Hans Franses and Jan Magnus for helpful discussions. For financial support, the first author acknowledges the National Science Council, Taiwan; the second author acknowledges the Australian Research Council, National Science Council, Taiwan, and the Japan Society for the Promotion of Science; and the third author acknowledges the Royal Society of New Zealand, Marsden Fund. Abstract The paper discusses alternative Research Assessment Measures (RAM), with an emphasis on the Thomson Reuters ISI Web of Science database (hereafter ISI). The various ISI RAM that are calculated annually or updated daily are defined and analysed, including the classic 2-year impact factor (2YIF), 5-year impact factor (5YIF), Immediacy (or zero-year impact factor (0YIF)), Eigenfactor score, Article Influence, C3PO (Citation Performance Per Paper Online), h-index, Zinfluence, and PI-BETA (Papers Ignored - By Even The Authors). The ISI RAM data are analysed for 8 leading econometrics journals and 4 leading statistics journals. The application to econometrics can be used as a template for other areas in economics, for other scientific disciplines, and as a benchmark for newer journals in a range of disciplines. -

Mathematical Statistics in 20Th-Century America

HISTORIA MATHEMATICA 23 (1996), 7–30 ARTICLE NO. 0002 Drawing the Boundaries: Mathematical Statistics in 20th-Century America PATTI W. HUNTER Department of Mathematics, University of Virginia, Charlottesville, Virginia 22903-3199 Statistics in Europe and in the United States acquired the status of a separate field only in the 20th century. Until then, it had provided a quantitative tool for analyzing data in various sciences. In the early 1930s, the founding of the Annals of Mathematical Statistics and of the Institute of Mathematical Statistics served to institutionalize the profession of mathematical statistics in the United States, and helped to distinguish it as a discipline independent from the social sciences which had previously supported it. This paper examines the American mathematical statisticians' attempts to delineate the boundaries of their profession, focusing on their association with the social sciences, on the founding of the Annals and of the IMS, and on the profession's continuing advancement during World War II. © 1996 Academic Press, Inc. Il n'était qu'au 20ème siècle que la statistique est devenue une discipline distincte. Antérieurement, elle a servi d'outil quantitatif pour l'analyse des données dans les sciences diverses. Dans les 1930s, l'établissement des Annals of Mathematical Statistics et de l'Institute of Mathematical Statistics a amené l'institutionalisation d'une profession "statistique mathématique" et a servi à distinguer cette profession des sciences sociales. Ici, j'analyse les tentatives des statisticiens mathématiques américains d'établir les frontières de leur discipline en concentrant sur leurs associations avec les sciences sociales, le fondement des Annals et de l'IMS, et le progrès de la profession pendant la deuxième guerre mondiale. -

Douglas William Nychka

Douglas William Nychka Education 1978 B.A. Mathematics and Physics, Duke University 1983 Ph.D. Statistics, University of Wisconsin - Madison Honors and Awards 1978 Summa cum laude, Duke University 1978 Julia Dale Mathematics Award Duke University 2003 Fellow, American Statistical Association 2004 Jerry Sacks Award for Multidisciplinary Research 2013 Distinguished Achievement Award ENVR Section American Statistical Association 2013 Achievement Award, International Statistics and Climatology Meeting 2015 Fellow, Institute of Mathematical Statistics Professional Appointments 8/18 - present Professor, Department of Applied Mathematics and Statistics, Colorado School of Mines, Golden, CO 8/97 - 8/18 National Center for Atmospheric Research, Boulder, CO. Visiting Scientist (8/97-7/99), Senior Scientist (8/99 - present), Project leader (8/99 - 9/04) Geophysical Statistics Project, Director (10/04 - 2017), Institute for Mathematics Applied to Geosciences (IMAGe) Senior Scientist Emeritus (8/18 - present) 7/83 - 6/99 Department of Statistics, North Carolina State University, Raleigh, NC Assistant (7/83 - 6/89), Associate (7/89 - 6/94) and Full Professor (7/94 - 7/99), 1/94 - 7/03 National Institute of Statistical Sciences, Research Triangle Park, NC, Senior Fellow (1/94 -7/99) and Trustee (2000 - 2003) 6/93 and 6/08 Isaac Newton Institute for the Mathematical Sciences, Cambridge, England, Visiting Scholar 5/88 - 6/88, 6/92 Statistics Group, University of Bath, Bath, England, Visiting Faculty 7/89, 8/90 - 12/90 Operations Research and Industrial -

HHS Public Access Author Manuscript

HHS Public Access Author manuscript Biometrika. Author manuscript; available in PMC 2016 June 01. Published in final edited form as: Biometrika. 2015 June ; 102(2): 281–294. doi:10.1093/biomet/asv011. On random-effects meta-analysis D. ZENG and D. Y. LIN Department of Biostatistics, CB #7420, University of North Carolina, Chapel Hill, North Carolina 27599, U.S.A D. ZENG: [email protected]; D. Y. LIN: [email protected] Summary Meta-analysis is widely used to compare and combine the results of multiple independent studies. To account for between-study heterogeneity, investigators often employ random-effects models, under which the effect sizes of interest are assumed to follow a normal distribution. It is common to estimate the mean effect size by a weighted linear combination of study-specific estimators, with the weight for each study being inversely proportional to the sum of the variance of the effect-size estimator and the estimated variance component of the random-effects distribution. Because the estimator of the variance component involved in the weights is random and correlated with study-specific effect-size estimators, the commonly adopted asymptotic normal approximation to the meta-analysis estimator is grossly inaccurate unless the number of studies is large. When individual participant data are available, one can also estimate the mean effect size by maximizing the joint likelihood. We establish the asymptotic properties of the meta-analysis estimator and the joint maximum likelihood estimator when the number of studies is either fixed or increases at a slower rate than the study sizes and we discover a surprising result: the former estimator is always at least as efficient as the latter. -
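The weighting rule described in the summary above can be sketched in a few lines. This is only an illustration, not the authors' code: the function name `random_effects_mean` and its arguments are hypothetical, and the between-study variance component `tau2` is passed in as a known number rather than estimated from the data, so the sketch does not reproduce the randomness of the estimated variance component that the summary is concerned with.

```python
def random_effects_mean(estimates, variances, tau2):
    """Weighted mean of study-specific effect estimates.

    Each study's weight is inversely proportional to the sum of its own
    estimator variance and the between-study variance component tau2.
    tau2 is assumed to be supplied by the caller (hypothetical input).
    """
    if len(estimates) != len(variances) or not estimates:
        raise ValueError("need one variance per estimate, and at least one study")
    raw_weights = [1.0 / (v + tau2) for v in variances]
    total = sum(raw_weights)
    weights = [w / total for w in raw_weights]  # normalised to sum to 1
    mean = sum(w * y for w, y in zip(weights, estimates))
    return mean, weights


# Two illustrative studies with made-up numbers.
mean, weights = random_effects_mean([0.2, 0.5], [0.01, 0.04], tau2=0.01)
```

With these made-up inputs the raw weights are 1/0.02 and 1/0.05, so the more precise study receives the larger share of the combined estimate.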

Professor Robert Kohn

Professor Robert Kohn Curriculum Vitae Education Ph.D. Econometrics, Australian National University. Master of Economics, Australian National University. Academic Experience 2003 to present. Professor, Australian School of Business. Joint appointment between School of Economics and School of Banking and Finance. 1987 to 2003 Professor, Australian Graduate School of Management, University of New South Wales 1985-1987 Associate Professor, Australian Graduate School of Management, University of NSW 1982-1986 Associate Professor of Statistics, Graduate School of Business, University of Chicago 1979-1982 Assistant Professor in Statistics, Graduate School of Business, University of Chicago Related Academic Experience 1991-2002 Head, Statistics and Operations Group, Australian Graduate School of Management, University New South Wales 1995-January 2002. Director, PhD Program Australian Graduate School of Management 1996-1998 Director of Research, Australian Graduate School of Management 1998-2002 Associate Dean for Research, Australian Graduate School of Management 1998 to 2002 Member of the Committee on Research, University of New South Wales 1992 to 2002 Member, Higher Degree Committee, Australian Graduate School of Management 1991 to 2002 Member, Executive Committee, Australian Graduate School of Management. Awards Fellow of the Journal of Econometrics 2000 Fellow of the Institute of Mathematical Statistics 2002 Fellow of the American Statistical Association 2005 Scientia Professor, University of New South Wales, 2007-2012 Fellow Academy Social Sciences of Australia 2007 Associate Editor. 1996- Australia and New Zealand Journal of Statistics Associate Editor 1999-2001. Journal of the American Statistical Association.
Associate Editor 2004- Bayesian Analysis Journal Associate Editor 2006 -2007 Sankhya Professional Affiliations Member of American Statistical Association, Econometric Society, Institute of Mathematical Statistics, Royal Statistical Society and Australian Statistical Society.