Secure Live Migration of SGX Enclaves on Untrusted Cloud

Recommended publications

-

Press Statement

PRESS STATEMENT Textile CEOs Urge Candidates to Elaborate More Fully on Plans to Stop U.S. Manufacturing Job Loss and to Boost Competitiveness CONTACT: Lloyd Wood, Dir. of Membership and Media Outreach (202) 452-0866 or [email protected] For Immediate Release May 1, 2008 North Carolina textile industry CEOs urged candidates for public office to put forth more detailed plans to stop U.S. manufacturing job loss and to boost competitiveness at telephone conference call media event held on May 1. Allen Gant, CEO of Glen Raven Inc. of Glen Raven, North Carolina said, “America cannot have a prosperous future without a healthy domestic manufacturing sector. That’s why all candidates must more fully spell out where they stand on such key manufacturing issues such as stopping job loss and boosting the competitiveness of the U.S. government’s manufacturing policy.” Illustrating the challenges facing North Carolina’s economy, the American Manufacturing Trade Action Coalition (AMTAC) released a comprehensive report that showed a severe decline in manufacturing investment in North Carolina and that the state has been losing high-wage jobs and replacing them with low-wage jobs. It also broke out manufacturing job gain/loss by sector for North Carolina’s twelve largest metropolitan statistical areas (MSAs). Reacting to the report, Gant continued, "North Carolina can’t keep trading good manufacturing jobs for lower paying service sector jobs and expect to grow its economy as fast as it needs to grow.” “Americans haven't stopped buying manufactured goods; they just aren’t buying as many from North Carolina as before because of trade cheats like China. -

TSC002 Product Sheets Final

TTP-243M Series Desktop Thermal Transfer Bar Code Printer Economical Price. Reliable Performance. Corporate Headquarters ™ The TSC TTP-243M Series is the ideal printer to satisfy your on-demand Taiwan Semiconductor Co. Ltd. printing needs and is the price leader in its class. The steel enclosure and 2F, No. 8, Alley 16, Lane 235, Pao-Chiao Rd., Hsin-Tien City durable mechanism are designed to endure heavy use in industrial Taipei, Taiwan environments and keep your replacement costs down. Tel: 886-2-2917-4145 Fax: 886-2-2915-9741 We back every TTP-243M Series printer with a 2-year warranty, giving you the E-mail: [email protected] Web Site: www.tscprinters.com confidence of receiving reliable performance day in and day out. With print speeds up to 100mm per second (4.0 ips) and a large 2MB DRAM memory North/South America capacity, the TTP-243M Series offers the speed and efficiency you need to Sales Office TSC America Inc. keep running. The TTP-243M Series features include: 125 Mercury Circle • Up to 1MB flash memory and 2MB DRAM memory Pomona, CA 91768, USA • Up to 100mm (4.0") per second printing speeds Tel: 1-909-468-0100 Fax: 1-909-468-0101 • LCD control panel (132 x 32 pixels) E-mail: [email protected] • Real-Time-Clock Web site: www.tscprinters.com • LabelView XLT+™ and Windows Drivers China Sales Office • 2-year warranty Tianjin Everwell Technology Co. Ltd. • Options include stand-alone printer controller (KP-200 Keyboard), No. 165, Huang Hai Rd. rotary cutter, and expandable Flash memory cartridges. -

HU5930/10 Philips Air Washer

Philips Air Washer Humidifcation Rate:500ml/h* Breathe the difference Particle CADR:140m3/h* 0.02µm Particle Filtration* NanoCloud humidification, NanoProtect Purification Living Room (70 m2)* Breathe healthier air with the Philips NanoClean. Protect you from allergens and assure you with comfortable and healthier air, thanks to supreme NanoCloud humidification technology and efficient Nano Protect purification performance. Supreme & hygienically safe humidification • Supreme NanoCloud humidification performance • 4 precise humidification level settings • 4 different fan speed modes • Energy efficient Nano Protect purification • Nano Protect filter effectively remove 0.02 µm* particle • Healthy air protect alert HU5930/10 Digital • Numerical display with Smart Sensor • 1~8 hour digital timer setting and light off operation Simplicity •Child Lock • Easy cleaning Air Washer HU5930/10 Humidifcation Rate:500ml/h* Particle CADR:140m3/h*, 0.02µm Particle Filtration*, Living Room (70 m2)* Highlights Specifications Supreme NanoCloud capacity filtration. High purification performance up Design and finishing Triple protection against bacteria and mold. Philips to CADR 140m3/h*. • Color of control panel: Matte black NanoCloud technology is certified hygienically safe. • Control panel type: Touch Screen It is proven to release 99%* less bacteria into the air Healthy air protect alert • Fan speed indicators: Speed 1, 2, 3, Auto compared to ultrasonic humidifiers, protecting your Healthy air protect alert lets you know promptly • Material of main body: Plastic long term health with healthier clean air that's when it is time to replace the filter and wick. If the • Color(s): Cloud white virtually free of pathogens and molds. Philips filter or wick is not replaced promptly, the appliance NanoCloud's invisible mist of water does not create stops functioning to avoid running with no effect. -

Reflections Voices of English Learners

Reflections Voices of English Learners Third Edition, 2020 Reflections: Voices of English Learners • 1 OUR MISSION The Literacy Council of Frederick County teaches adults the reading and other English language skills they need to provide for their families and to contribute to the well-being and prosperity of our community. OUR VALUES • Sustaining our organization through responsible financial stewardship, sound management, and community engagement. • Teaching a parent in order to educate a family. Educating our students in a learner-centered format with compassion, confidentiality, and respect. • Producing a safer, healthier, economically stronger, and more vibrant community through adult literacy. • Sustaining, empowering, and energizing a passionate, strong dedicated volunteer base. OUR VISION A community where literacy is attainable for everyone. frederickliteracy.org The Literacy Council of Frederick County is a registered 501(c)(3) charitable organization. 2 • Reflections: Voices of English Learners FOREWORD The Literacy Council of Frederick County (LCFC) is publishing its third annual Reflections: Voices of English Learners. This is a collection of various stories that our students have written with pride. The LCFC started in 1963 to teach adults reading and other English language skills that they need to provide for their families and contribute to the well-being and prosperity of our community. We have helped 335 students in the past year with one-to-one tutoring, specialty classes, workplace classes for hospitality and restaurant employees, and English learning for parents of grade school students at several Frederick County Public Schools. As we each reflect on the additional stresses we have felt during the past six months, we realize that multiple things have shifted in our own lives. -

"Running Man" to "Mission X": Variety Shows As Cultural Representation of Local Identities

From "Running Man" to "Mission X": Variety Shows as Cultural Representation of Local Identities Nurul Laili Nadhifah, Universitas Brawijaya, Indonesia The Asian Conference on Media, Communication & Film 2018 Official Conference Proceedings Abstract Running Man, as one of Korean successful variety shows, never fails to advertise parts of Korean culture like food, K-Pop, cultural values, traditional games, history, even historical sites. With quite a huge amount of fans not only from South Korea but also from other countries as well, Running Man also occasionally encourages interactions between the hosts and their fans in some games, indirectly introducing Korean culture to all over the world, resulting in the increase of consumption on Korean culture’s products. This reality show concept is, then, adapted into Indonesian variety show called Mission X. Quite different from Running Man, Mission X has its own ways in introducing Indonesia and its culture. This study aims to analyze the way Running Man and Mission X represent themselves as the platforms of advertising their local identities by applying Fiske’s vertical intertextuality and cultural identity theories. Drawing on data collected from the shows as the main texts and SNS as secondary texts, while data of fans’ reactions are gathered by doing random interview online. This research, through comparative studies, intents to explain the similarities and differences of cultural representations shown on those shows. The findings show different aspects of local culture displayed by those variety shows caused different methods of representing them. While Running Man introducing different aspects and values of culture like food or history through games and certain themes, Mission X tends to introduce cultural aspects more through display of setting. -

A Shared Recommendation List for Summer Leisure Time Chinese/Taiwanese-American Films and Books

A Shared Recommendation List for Summer Leisure Time Chinese/Taiwanese-American films and books FILM LIST [In random order] Documentary Film: Minding the Gap (2018) Director: Bing Liu With: Keire Johnson, Zack Mulligan, Bing Liu Three childhood friends united in their love for skateboarding grow up in a small Illinois town in front of our eyes in filmmaker Bing Liu’s Oscar-nominated documentary, with searing observations on race, class and masculinity. Read a review: https://www.nytimes.com/2018/08/16/movies/minding-the-gap-review- documentary.html Feature Film: Chan is Missing Feature Film: Saving Face (2004) Director: Alice Wu Cast: Michelle Krusiec, Joan Chen, Lynn Chen A young surgeon keeps her sexual identity a secret from her mom, who has secrets of her own. A hallmark of lesbian cinema and a beloved comedy of Chinese American women negotiating family honor. Feature Film: Better Luck Tomorrow (2002) Director: Justin Lin Cast: Parry Shen, Jason Tobin, Sung Kang, Roger Fan “Fast & Furious” franchise helmer Justin Lin made his solo directing debut with this Sundance hit, a high school crime drama about Asian American overachievers breaking bad that sent the model minority myth spinning. It remains a milestone for ferociously defiant Asian American storytelling. Read a Review: https://www.theatlantic.com/entertainment/archive/2018/08/how-better-luck- tomorrow-argued-for-its-existence-15-years-ago/568045/ Films that will be screened in Chinese Classes: Chinese 101 The Farewell ALC 325 Chinese Cinema: Ideology and the Box office The Joy -

Salon Niksic Catalogue Download

1st Circular Exhibition of Photography "JADRAN CIRCUIT 2016" Salon Niksic FIAP 2016/261 PSA 2016-092 1st Circular Exhibition of Photography "JADRAN CIRCUIT 2016" Salon Niksic FIAP 2016/261 PSA 2016-092 1st Circular Exhibition of Photography "JADRAN CIRCUIT 2016" Salon Niksic FIAP 2016/261 PSA 2016-092 SECTIONS: A) WOMAN (color/monochrome digital) FIAP and PSA PID Color B) OPEN COLOR (color digital) FIAP and PSA PID Color C) OPEN MONOCHROME (monochrome digital) FIAP and PSA PID Monochrome JURU MEMBERS Hadži Miodrag MILADINOVIĆ Borislav MILOVANOVIĆ Zoran MOJSIN EFIAP, MF FSJ, Serbia EFIAP, F1 FSS, Serbia EFIAP, F1 FSS, Serbia Vuko Punosevic, Salon chairman www.photoclubcattaro.com [email protected] Niksic, 08.05.2016. 1st Circular Exhibition of Photography "JADRAN CIRCUIT 2016" Salon Niksic FIAP 2016/261 PSA 2016-092 FIAP Blue badge for The Best author: Trond M. Skaret, Norway Awards Theme A) WOMAN FIAP Gold medal - Kai Lon Tang, Macau, Rainy Mood PSA Gold medal - Trond M. Skaret, Norway, Three, but four DPA Gold medal - Marco Garabello, Italy, Sguardo SALON Gold medal - Stefan Nagy, Austria, Geometrie DPA Silver medal - Andris Apsenieks, Latvia, Winter is coming SALON Silver medal - Lajos Nagy, Romania, The smile DPA Bronze medal - Graeme Watson, Australia, Car Park Coffee SALON Bronze medal - Aida Abregova, Russian Federation, Sadness of memories FIAP HM - Atle Sveen, Norway, Victoria FIAP HM - Hakon Gronning, Norway, Flame in green bath DPA HM - Frank Hausdoerfer, Germany, Lights DPA HM - Hong Li, China, Yi woman8 DPA HM - Peng Li, China, Mother and Kid SALON HM - Carlos Rozensztroch, Argentina, Seduced SALON HM - Jianqiang Chen, China, belief SALON HM - The Eng Loe Djatinegoro, Indonesia, TALL SISTERS Awards Theme B) OPEN COLOR FIAP Gold medal - Atle Sveen, Norway, Raphaella III PSA Gold medal - Trond M. -

Analysis of Pressure Characteristics of Tube-Contained Raw Material Pipeline Hydraulic Transportation Under Different Discharges

International Conference on Electrical, Mechanical and Industrial Engineering (ICEMIE 2016) Analysis of Pressure Characteristics of Tube-contained Raw Material Pipeline Hydraulic Transportation under Different Discharges Jun Wang1,2, Xihuan Sun1,* and Yongye Li1 1College of Water Resource Science and Engineering, Taiyuan University of Technology, Taiyuan 030024, China 2Shanxi Digital Water Center, Taiyuan 030002, China *Corresponding author Abstract—Tube-contained raw material pipeline hydraulic material products - the piped hydraulic transportation of transportation is a new energy-saving and environmentally tube-contained raw material. -friendly technique of piped hydraulic transportation. In the paper, some pressure characteristics of tube-contained raw Tube-contained raw material pipeline hydraulic material pipeline hydraulic transportation under different transportation is a new technique. In this technique, the liquid discharges were mainly researched. The results show that there is used as a carrier, and bulk material (solid or liquid) is filled was a direct proportional linear relationship between the and sealed in a cylinder-shaped carriage. It is a transportation discharge and the average velocity of the piped carriage. mode which uses the closed pipeline to transport material Additionally, it was discovered that the fluidal pressure change of [2-4]. each cross section change in the pipe while the piped carriage was moving were closely connected with discharges. These Compared with the traditional piped hydraulic conclusions -

SUMMER / 2013 Wang Anyi Yi Sha Bai Hua Bi Feiyu Li Hao Zang Di Jiang Yun Lu Nei Yang Li Speed Photo by Qiu Lei

PATHLIGHT NEW CHINESE WRITING SUMMER / 2013 Wang Anyi Yi Sha Bai Hua Bi Feiyu Li Hao Zang Di Jiang Yun Lu Nei Yang Li Speed Photo by Qiu Lei PATHLIGHT / SUMMER - 2013 1 Photo by Wang Yan PATHLIGHT SUMMER / 2013 Summer 2013 ISBN 978-7-119-08377-3 © Foreign Languages Press Co. Ltd, Beijing, China, 2013 Published by Foreign Languages Press Co. Ltd. 24 Baiwanzhuang Road, Beijing 100037, China http://www.flp.com.cn E-mail: [email protected] Distributed by China International Book Trading Corporation 35 Chegongzhuang Xilu, Beijing 100044, China P.O. Box 399, Beijing, China Printed in the People’s Republic of China CONTENTS Fiction 004 Wang Anyi _ In the Belly of the Fog _ 4 Tradition and Rebellion: A Conversation with Wang Anyi _ 12 Bi Feiyu _ The Deluge _ 18 A Professional Interest in Suffering: A Conversation with Bi Feiyu _ 36 Jiang Yun _ The Red Detachment of Women _ 46 Li Hao _ The General _ 58 Lu Nei _ Keep Running, Little Brother _ 66 A Yi _ Two Lives _ 88 Ren Xiaowen _ I Am Fish _ 100 Su Cici _ The Zebra That Didn’t Exist _ 110 Sheng Tie _ The Train Was Clean and Cool _ 122 Poetry 132 Bai Hua _ Small Town Tale, Village, 1977, Mock Nursery Rhyme, The Illusion of Life, Thoughts Arising from the Pig _ 132 Yang Li _ Fated, An Old Poem, The White Horse, Courthouse (I) _ 138 Yi Sha _ Rhythm is What Matters, Memories Evoked by Reality, Notes on Mt. -

An Efficient Virtual Machine Consolidation Scheme For

sensors Article An Efficient Virtual Machine Consolidation Scheme for Multimedia Cloud Computing Guangjie Han 1,*, Wenhui Que 1, Gangyong Jia 2 and Lei Shu 3 1 Department of Information and Communication Systems, Hohai University, 200 North Jinling Road, Changzhou 213022, China; [email protected] 2 Department of Computer Science, Hangzhou Dianzi University, Hangzhou 310018, China; [email protected] 3 Guangdong Petrochemical Equipment Fault Diagnosis Key Laboratory, Guangdong University of Petrochemical Technology, Guangdong 525000, China; [email protected] * Correspondence: [email protected]; Tel.: +86-519-8519-1841; Fax.: +86-519-8519-1838 Academic Editor: Antonio Jara Received: 7 January 2016; Accepted: 14 February 2016; Published: 18 February 2016 Abstract: Cloud computing has innovated the IT industry in recent years, as it can delivery subscription-based services to users in the pay-as-you-go model. Meanwhile, multimedia cloud computing is emerging based on cloud computing to provide a variety of media services on the Internet. However, with the growing popularity of multimedia cloud computing, its large energy consumption cannot only contribute to greenhouse gas emissions, but also result in the rising of cloud users’ costs. Therefore, the multimedia cloud providers should try to minimize its energy consumption as much as possible while satisfying the consumers’ resource requirements and guaranteeing quality of service (QoS). In this paper, we have proposed a remaining utilization-aware (RUA) algorithm for virtual machine (VM) placement, and a power-aware algorithm (PA) is proposed to find proper hosts to shut down for energy saving. These two algorithms have been combined and applied to cloud data centers for completing the process of VM consolidation. -

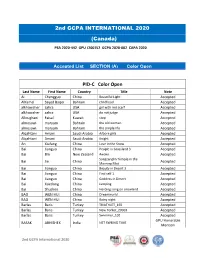

Acceped List

2nd GCPA INTERNATIONAL 2020 (Canada) PSA 2020-442 GPU L200152 GCPA 2020-002 CAPA 2020 Accepted List SECTION (A) Color Open PID-C Color Open Last Name First Name Country Title Note Ai Chengguo China Beautiful Light Accepted AlKamel Sayed Baqer Bahrain childhood Accepted alkhawaher zahra USA girl with red scarf Accepted alkhawaher zahra USA do not judge Accepted Alloughani Faisal Kuwait stop Accepted almosawi maryam Bahrain the old woman Accepted almosawi maryam Bahrain the simple life Accepted Alqahtani Amani Saudi Arabia Arbore girls Accepted Alqahtani Amani Saudi Arabia Knight Accepted An Xiufang China Love in the Snow Accepted Bai Jianguo China People in Grassland 3 Accepted Bai Bin New Zealand Awake Accepted Songzanglin Temple in the Bai Jie China Accepted Morning Mist Bai Jianguo China Beauty in Desert 3 Accepted Bai Jianguo China Find self 1 Accepted Bai Jianguo China Goddess in Desert Accepted Bai Xuedong China Jumping Accepted Bai Shuzhen China Herding song on snowland Accepted BAO WEN HUI China Dreamworld Accepted BAO WEN HUI China Rainy night Accepted Barlas Baris Turkey TRIATHLET_165 Accepted Barlas Baris Turkey New Yorker_20003 Accepted Barlas Baris Turkey Swimmer_102 Accepted GPU Honorable BASAK ABHISHEK India NET SWEING TIME Mention 2nd GCPA International 2020 GCPA Bronze BASAK ABHISHEK India NIGHT QUEEN Medal BASAK ABHISHEK India QUEEN IN RED 1 Accepted BASAK ABHISHEK India MY FREEDOM Accepted bin zhang China The sea of fantasy CAPA Silver Medal Buesching Gunter Germany Stroller Accepted Buesching Gunter Germany On Top 2 -

World Bank Document

Certificate No.: Guohuanpingzheng Jiazi No. 1703 Public Disclosure Authorized Environmental Impact Assessment For Harbin Cold Weather Smart Public Transportation System Project (Public Transport Corridors Component) Restructuring Stage Public Disclosure Authorized Entrusted by Public Disclosure Authorized Harbin Transportation Bureau Prepared by Heilongjiang Xingye Environmental Protection Technology Co., Ltd. Revised by HJI Group (USA) Public Disclosure Authorized July 2019 Project Name: Harbin Cold Weather Smart Public Transportation System Project (Public Transport Corridors Component) Project No.: HKYBGS - (2013) 008 Entrusted by: Harbin Transportation Bureau Prepared by: Heilongjiang Xingye Environmental Protection Technology Co., Ltd. Legal Representative: Chi Xiaode EIA Certificate: Guohuanpingzheng Jiazi No. 1703 Task Team Leaders: Sun Baini A17030081000 He Chenyan A17030009 Technical Reviewer: Guan Kezhi A17030023 Prepared by No. of Prepared by Duty Title Registration/Qualification Signature Certificate Sun Baini Report preparation Senior engineer A17030081000 PREFACE Urbanization and motorization have led to constant increase in gasoline consumption in China, and energy will become an important factor affecting China's social and economic development in the future. Over a long time, China's urban public transport system has been underdeveloped, and unable to meet the high-quality daily travelling demand of urban residents. The prominent contradictions between supply and demand in urban transport are shown by the incomplete capacity of urban public transport system, the failure of urban public transport system development in keeping simultaneous with urban development, the single structure of urban public transport system, the lack of advanced transport planning concepts, and the lack of public transport-oriented planning experience for most cities. 
The project is intended to optimize Harbin's urban public transport system, to improve social and economic development, and to alleviate residents' travel difficulties in cold weather.