The Evocative Power of Vocal Staging in Recorded Rock Music and Other

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

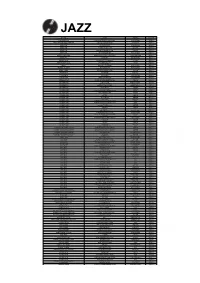

Jazz Lines Publications Fall Catalog 2009

Jazz lines PubLications faLL CataLog 2009 Vocal and Instrumental Big Band and Small Group Arrangements from Original Manuscripts & Accurate Transcriptions Jazz Lines Publications PO Box 1236 Saratoga Springs NY 12866 USA www.ejazzlines.com [email protected] 518-587-1102 518-587-2325 (Fax) KEY: I=Instrumental; FV=Female Vocal; MV=Male Vocal; FVQ=Female Vocal Quartet; FVT= Femal Vocal Trio PERFORMER / TITLE CAT # DESCRIPTION STYLE PRICE FORMAT ARRANGER Here is the extended version of I've Got a Gal in Kalamazoo, made famous by the Glenn Miller Orchestra in the film Orchestra Wives. This chart differs significantly from the studio recorded version, and has a full chorus band intro, an interlude leading to the vocals, an extra band bridge into a vocal reprise, plus an added 24 bar band section to close. At five and a half minutes long, it's a (I'VE GOT A GAL IN) VOCAL / SWING - LL-2100 showstopper. The arrangement is scored for male vocalist plus a backing group of 5 - ideally girl, 3 tenors and baritone, and in the GLENN MILLER $ 65.00 MV/FVQ DIFF KALAMAZOO Saxes Alto 2 and Tenor 1 both double Clarinets. The Tenor solo is written on the 2nd Tenor part and also cross-cued on the male vocal part. The vocal whistling in the interlude is cued on the piano part, and we have written out the opening Trumpet solo in full. Trumpets 1-4: Eb6, Bb5, Bb5, Bb5; Trombones 1-4: Bb4, Ab4, Ab4, F4; Male Vocal: Db3 - Db4 (8 steps): Vocal key: Db to Gb. -

Saison Du Théâtre De La Licorne Scène Conventionnée D'intérêt National Art, Enfance, Jeunesse

CANNES 2020/21 UNE PROGRAMMATION MAIRIE DE CANNES SAISON DU THÉÂTRE DE LA LICORNE SCÈNE CONVENTIONNÉE D'INTÉRÊT NATIONAL ART, ENFANCE, JEUNESSE cannes.com / 1 ÉDITO LES RENCONTRESDE CANNES 2020 LITTÉRAIRES 19, 20 et 21 novembre CINÉMATOGRAPHIQUES 23 au 29 novembre ARTISTIQUES 2 et 3 décembre DÉBATS 4, 5 et 6 décembre Mairi d Cannes - Communicatio - Août 2020 - Août - Communicatio d Cannes Mairi 15x21 rencontresdecannes.indd 1 19/08/2020 14:57 ÉDITO LES LA LICORNE MET EN SCÈNE LE SPECTACLE VIVANT POUR LES FAMILLES ET FÊTE LA CULTURE DE PROXIMITÉ RENCONTRESDE Le Théâtre municipal de La Licorne ouvre Les spectacles présentés cette saison 2020 ses portes à une nouvelle saison artistique 2020-2021 sont autant d’occasions de et culturelle de proximité, qualitative, rencontres pour les familles et la jeunesse CANNES préparée et proposée par la Mairie de de notre ville qui nous permettent de nous Cannes et ses partenaires. élever au-delà de notre condition, de nous arracher à notre humeur du moment, Aller au théâtre est un acte volontaire, de construire des souvenirs qui nous l’expression d’un désir, d’un esprit curieux marqueront. LITTÉRAIRES de découverte, le choix d’accéder à un univers nouveau. Jules Renard écrivait dans son Journal : 19, 20 et 21 novembre « Nous voulons de la vie au théâtre, et du Au théâtre, comme au cinéma, on rit, on théâtre dans la vie ». C’est cette polarité pleure, on applaudit la performance. C’est que nous vous proposons de partager tout tout un public, porté par la magie de ce qui au long de cette année, en compagnie des CINÉMATOGRAPHIQUES se passe sur scène, qui communie à une artistes professionnels, qui se produiront émotion collective. -

Québec.Ca/Alertecovid

OBTENEZ L'INTÉGRALITÉ DU JOURNAL EN LIGNE! ~ WWW.PONTIACJOURNAL.COM ~ GET THE ENTIRE JOURNAL ONLINE! Elite BARRIÈRES, MANGEOIRES, CHUTES GATES, FEEDERS, Alain Guérette M.Sc., BAA CHUTES Courtier immobilier inc. 819.775.1666 www.mandrfeeds.com [email protected] Micksburg 613-735-3689 Astrid van der Linden Pembroke 613-732-2843 Courtier immobilier Shawville 819-647-2814 819.712.0635 OCTOBER 21 OCTOBRE 2020 ~ VOL. 34 #20 [email protected] Michaela termine 3e en finale de La Voix Marie Gionet Tout le Pontiac a suivi le parcours de Michaela Cahill à la populaire émission La Voix alors que l’artiste a ouvert ses ailes pour partir à la conquête de la province! Quatre juges se sont retournées aux auditions à l’aveugle, suivis des duels, chants de bataille, quarts de finale, demi-finales, finale; un parcours complet attendait l'artiste originaire de Fort-Coulonge. Pour une première fois dans l’histoire de l’émission, la finale était 100% féminine et l’aventure de Cahill s’est terminée le 18 octobre. Malgré le soutien de la population, l’artiste locale n’a finalement pas pu remporter la dernière étape de la compétition. L’émission lui a donné la chance de travailler aux côtés de talentueux artistes, de Ginette Reno à Tyler Shaw, sans bien sûr oublier qu’elle a pu tout au long de l’aventure compter sur les conseils de son coach, Marc Dupré. Cette expérience lui aura aussi permis de se retrouver sur l’album La Voix chante. Pour Mme Cahill, La Voix était l’occasion de prouver à ses filles qu’il n’est jamais trop tard pour accomplir ses rêves. -

2020-2021 Season Changes

CONTACT Amanda J. Ely FOR IMMEDIATE RELEASE Director of Audience Development [email protected] 757-627-9545 ext. 3322 VIRGINIA OPERA REVISES SCHEDULE OF 2020–2021 SEASON PRODUCTIONS: OPENER RIGOLETTO CANCELLED; REVISED TRIO OF PERFORMANCE OFFERINGS TO BEGIN FEBRUARY 2021 WITH DOUBLE BILL LA VOIX HUMAINE/GIANNI SCHICCHI TO REPLACE COMPANY DEBUT OF COLD MOUNTAIN; MOZART’S THE MARRIAGE OF FIGARO AS SCHEDULED; AND THE PIRATES OF PENZANCE RESCHEDULED TO APRIL 2021 Virginia Opera makes major changes to offerings and events in continuing response to effects of COVID-19 Hampton Roads, Richmond, Fairfax, VA (June 30, 2020)—Today, Virginia Opera, The Official Opera Company of the Commonwealth of Virginia, announces an overhaul of the company’s previously announced main stage opera schedule for the 2020-2021 “Love is a Battlefield” season due to ongoing effects and circumstances surrounding the COVID-19 pandemic. A number of revisions affecting every facet of the company’s operations both on and off stage were required, including debuting the 2020–2021 Season offerings in February 2021, with an attenuated three-production statewide schedule between February 5, and April 25, 2021. Giuseppe Verdi’s Rigoletto, formerly the company’s lead-off October 2020 production, will not be performed; Gilbert and Sullivan’s The Pirates of Penzance will be rescheduled from November 2020 to April 2021; and the VO season will now begin in February 2021 with the change of a double bill featuring Francis Poulenc’s La Voix Humaine and Giacomo Puccini’s Gianni Schicchi performed in place of Jennifer Higdon and Gene Scheer’s Cold Mountain. -

La Voix Humaine: a Technology Time Warp

University of Kentucky UKnowledge Theses and Dissertations--Music Music 2016 La Voix humaine: A Technology Time Warp Whitney Myers University of Kentucky, [email protected] Digital Object Identifier: http://dx.doi.org/10.13023/ETD.2016.332 Right click to open a feedback form in a new tab to let us know how this document benefits ou.y Recommended Citation Myers, Whitney, "La Voix humaine: A Technology Time Warp" (2016). Theses and Dissertations--Music. 70. https://uknowledge.uky.edu/music_etds/70 This Doctoral Dissertation is brought to you for free and open access by the Music at UKnowledge. It has been accepted for inclusion in Theses and Dissertations--Music by an authorized administrator of UKnowledge. For more information, please contact [email protected]. STUDENT AGREEMENT: I represent that my thesis or dissertation and abstract are my original work. Proper attribution has been given to all outside sources. I understand that I am solely responsible for obtaining any needed copyright permissions. I have obtained needed written permission statement(s) from the owner(s) of each third-party copyrighted matter to be included in my work, allowing electronic distribution (if such use is not permitted by the fair use doctrine) which will be submitted to UKnowledge as Additional File. I hereby grant to The University of Kentucky and its agents the irrevocable, non-exclusive, and royalty-free license to archive and make accessible my work in whole or in part in all forms of media, now or hereafter known. I agree that the document mentioned above may be made available immediately for worldwide access unless an embargo applies. -

THE VOICE KIDS » Saison 6 APPELS a CANDIDATURES PREAMBULE

« THE VOICE, LA PLUS BELLE VOIX » Saison 8 ET « THE VOICE KIDS » Saison 6 APPELS A CANDIDATURES PREAMBULE La société ITV STUDIOS FRANCE, société par actions simplifiée au capital de 868.000 euros, immatriculée au registre du commerce et des sociétés de Nanterre sous le N° 519 024 442 dont le siège social est situé 12 Boulevard des Iles- 92130 Issy Les Moulineaux (ci-après dénommée « la Société ») envisage de produire et réaliser deux concours télévisés consistant en une compétition de chant provisoirement ou définitivement intitulés, - « THE VOICE, LA PLUS BELLE VOIX » Saison 8, - « THE VOICE KIDS » Saison 6, (ci-après ensemble dénommés « les Concours », ou, individuellement « l’un des Concours », « THE VOICE LA PLUS BELLE VOIX » ou « THE VOICE KIDS »), destinés, notamment, en cas d’aboutissement du projet, à être diffusés sur les chaînes de télévision du Groupe TF1 (ci-après dénommée « le Diffuseur ») ainsi que sur tout réseau de communication électronique et/ou services de médias audiovisuels à la demande, sur des supports de téléphonie fixe et mobile, par voie d'exploitation radiophonique, etc. A cette occasion, la Société lance deux appels à candidatures (ci-après dénommés ensemble « Appels à Candidatures » et individuellement « l’Appel à Candidature ») à destination des personnes physiques désireuses de participer aux Concours. Le présent règlement a vocation à régir les Appels à Candidatures de ces deux Concours dans l’hypothèse où ces derniers seraient effectivement organisés, produits, réalisés et diffusés. L’inscription à l’un de ces deux Appels à Candidatures emporte l’acceptation sans réserve des termes du présent règlement par les participants majeurs, par les participants mineurs et le cas échéant leurs représentants légaux. -

Jovanotti È Un Personaggio Colorato, Vitale, Prezioso Di Spunti E Sollecitazioni Anche Per Chi Non Dovesse Immedesimarsi Del Tutto Nel Suo Linguaggio

5 Prefazione L’idea della Zanichelli di affidare un robusto Dizionario del Pop-Rock a due dei no- stri più appassionati e colti critici musicali, Enzo Gentile e Alberto Tonti, ripercor- rendo decenni e decenni di storia della musica leggera nelle sue varie forme ed evo- luzioni, è un segnale importante per diversi motivi. Primo fra tutti quello di ferma- re una memoria storica che ci aiuta a comprendere l’evoluzione della società dal do- poguerra ad oggi. Sì, perché la Musica è la colonna sonora del tempo, degli umori degli anni, degli ideali di un periodo, della malinconia, degli entusiasmi o della rab- bia della società nel suo procedere. Voglio dire che si può perfettamente compren- dere lo stato d’animo di un popolo attraverso la colonna sonora che lo sta accom- pagnando in quel preciso momento storico. La Musica, dalla metà degli anni ’50 in poi, attraverso straordinari artisti ha suggerito mode, ha capovolto comportamen- ti. È stata la bandiera, grazie a molti brani, di lotte politiche, di battaglie civili, di poesie di protesta, di confessioni dolenti ma anche di esaltazione collettiva. Blues, Soul, Pop, Rock, Techno e tanti altri stili, fino ad arrivare alle più interessanti ricer- che sonore che partono da Brian Eno fino a Sylvian, Fripp e Scott Walker, sono le spericolate mutazioni di un’arte in perenne trasformazione che ascolta le pulsazio- ni della vita che corre. Per questo credo che un dizionario serio del Pop-Rock sia stato non solo un atto culturale indispensabile, ma un risarcimento, grazie alla cul- tura dei due autori e dei loro sensibili collaboratori, per tanti artisti a torto consi- derati minori e qui finalmente rivalutati e segnalati per il loro talento non ricono- sciuto tempestivamente. -

Jazzflits 01 09 2003 - 01 09 2021

1 19de JAARGANG, NR. 361 16 AUGUSTUS 2021 IN DIT NUMMER: 1 BERICHTEN 5 JAZZ OP DE PLAAT Eve Beuvens, Steve Cole, Flat Earth Society Orchestra, Ben van de Dungen, Gerry Gibbs e.a. EN VERDER (ONDER MEER): 10 Lee Morgan (Erik Marcel Frans) 16 Europe Jazz Media Chart Augustus 18 J AAR JAZZFLITS 01 09 2003 - 01 09 2021 NR. 362 KOMT 13 SEPT. UIT ONAFHANKELIJK JAZZPERIODIEK SINDS 2003 BERICHTEN CORONA-EDITIE FESTIVAL GENT JAZZ TREKT 20.000 BEZOEKERS In de tien dagen dat Gent Jazz ‘Back to Live’ duurde (van 9 tot en met 18 juli), passeerden zo’n twintigdui- zend mensen de festivalkassa. Van- wege de coronacrisis was op het laatste moment een speciale editie van het jaarlijkse zomerfestival in elkaar gezet met veel Belgische mu- sici. Maar ook de pianisten Monty Alexander, Tigran Hamasyan en ac- cordeonist Richard Galliano kwamen naar Gent. Festivaldirecteur Ber- trand Flamang sprak na afloop van een succesvol evenement. Cassandra Wilson tijdens het North Sea Jazz Festival 2015. (Foto: Joke Schot) Flamang: “In mei leek het er nog op dat deze editie niet zou doorgaan. Maar op CASSANDRA WILSON, STANLEY CLARKE twee maanden tijd zijn we erin geslaagd EN BILLY HART BENOEMD TOT NEA JAZZ MASTER om een coronaveilig evenement op po- ten te zetten.” Bezoekers konden zich Drummer Billy Hart, Bassist Stanley Clarke en zangeres niet vrij bewegen. Ze zaten per bubbel Cassandra Wilson zijn Benoemd tot NEA Jazz Master aan stoelen en tafels onder een giganti- 2022. Saxofonist Donald Harrison ontvangt de A.B. Spell- man NEA Jazz Masters Fellowship for Jazz Advocacy. -

Die 1950Er 321 Titel, 12,9 Std., 1,15 GB

Seite 1 von 13 -Die 1950er 321 Titel, 12,9 Std., 1,15 GB Name Dauer Album Künstler 1 Sing baby sing (ft Valente Caterina) 2:58 Alexander Peter 2 It's simply grand 2:00 Rockin' Rollin' High School Vol.4 - Sampler - 1983 (LP) Allen Milton 3 Youthful lover 2:20 Rockin' Rollin' High School Vol.6 - Sampler - 1983 (LP) Allen Milton 4 In the mood 2:49 Andrew Sisters The Andrew Sisters 5 Rum and Coca Cola 3:08 Oldies The Andrew Sisters 6 Boogie woogie bugle boy 2:44 Songs Of World War 2 The Andrew Sisters 7 Three little fishies (itty bitty pool) 2:37 The Andrew Sisters 8 Lonely boy 2:32 Jukebox RTL - Comp JJJJ (CD1-VBR) Anka Paul 9 Diana 2:18 Jukebox RTL - Comp JJJJ (CD1-VBR) Anka Paul 10 Put your head on my shoulder 2:39 Million Sellers (The Fifties) Vol.03 - Comp 1992 (CD-VBR) Anka Paul 11 High society calypso 2:12 As Time Goes By - Disc 2 Armstrong Louis 12 Hello Dolly 2:26 Wonderful World Of Musical - Comp 1999 (CD2-VBR) Armstrong Louis 13 Onkel Satchmo's lullaby 2:26 Das waren Schlager 59/60 - Comp (LP) Armstrong Louis & die kleine Gabriele 14 Just ask your heart 2:28 Billboard Top 100 - 1959 Avalon Frankie 15 Jim Dandy 2:12 Baker LaVern 16 Ice cream 3:27 Oldies But Goldies (LP OrangeGrün 1980) Barber Chris 17 Petite fleur 2:43 40 Hits From The 50s & 60s - Sampler (LP2) Barber Chris 18 Kiss me honey honey kiss me 2:23 Beyond the sea Bassey Shirley 19 Banana boat song 3:03 Rock Party Volume 3 - Comp JJJJ (CD-VBR) Belafonte Harry 20 Rainy night in Georgia 3:50 Best Of Soul Classics - Comp 2005 (CD3-VBR) Benton Brook 21 Rock and roll music 2:31 The Great Twenty-Eight Berry Chuck 22 Sweet little sixteen 3:01 The Rolling Stone Magazines 500 Greatest Songs Of All Time Berry Chuck 23 Brown eyed handsome man 2:16 The Rolling Stone Magazines 500 Greatest Songs Of All Time Berry Chuck -Die 1950er Seite 2 von 13 Name Dauer Album Künstler 24 Johnny B. -

Order Form Full

JAZZ ARTIST TITLE LABEL RETAIL ADDERLEY, CANNONBALL SOMETHIN' ELSE BLUE NOTE RM112.00 ARMSTRONG, LOUIS LOUIS ARMSTRONG PLAYS W.C. HANDY PURE PLEASURE RM188.00 ARMSTRONG, LOUIS & DUKE ELLINGTON THE GREAT REUNION (180 GR) PARLOPHONE RM124.00 AYLER, ALBERT LIVE IN FRANCE JULY 25, 1970 B13 RM136.00 BAKER, CHET DAYBREAK (180 GR) STEEPLECHASE RM139.00 BAKER, CHET IT COULD HAPPEN TO YOU RIVERSIDE RM119.00 BAKER, CHET SINGS & STRINGS VINYL PASSION RM146.00 BAKER, CHET THE LYRICAL TRUMPET OF CHET JAZZ WAX RM134.00 BAKER, CHET WITH STRINGS (180 GR) MUSIC ON VINYL RM155.00 BERRY, OVERTON T.O.B.E. + LIVE AT THE DOUBLET LIGHT 1/T ATTIC RM124.00 BIG BAD VOODOO DADDY BIG BAD VOODOO DADDY (PURPLE VINYL) LONESTAR RECORDS RM115.00 BLAKEY, ART 3 BLIND MICE UNITED ARTISTS RM95.00 BROETZMANN, PETER FULL BLAST JAZZWERKSTATT RM95.00 BRUBECK, DAVE THE ESSENTIAL DAVE BRUBECK COLUMBIA RM146.00 BRUBECK, DAVE - OCTET DAVE BRUBECK OCTET FANTASY RM119.00 BRUBECK, DAVE - QUARTET BRUBECK TIME DOXY RM125.00 BRUUT! MAD PACK (180 GR WHITE) MUSIC ON VINYL RM149.00 BUCKSHOT LEFONQUE MUSIC EVOLUTION MUSIC ON VINYL RM147.00 BURRELL, KENNY MIDNIGHT BLUE (MONO) (200 GR) CLASSIC RECORDS RM147.00 BURRELL, KENNY WEAVER OF DREAMS (180 GR) WAX TIME RM138.00 BYRD, DONALD BLACK BYRD BLUE NOTE RM112.00 CHERRY, DON MU (FIRST PART) (180 GR) BYG ACTUEL RM95.00 CLAYTON, BUCK HOW HI THE FI PURE PLEASURE RM188.00 COLE, NAT KING PENTHOUSE SERENADE PURE PLEASURE RM157.00 COLEMAN, ORNETTE AT THE TOWN HALL, DECEMBER 1962 WAX LOVE RM107.00 COLTRANE, ALICE JOURNEY IN SATCHIDANANDA (180 GR) IMPULSE -

Italy's Image As a Tourism Destination in the Chinese Leisure Traveler

International Journal of Marketing Studies; Vol. 9, No. 5; 2017 ISSN 1918-719X E-ISSN 1918-7203 Published by Canadian Center of Science and Education Italy’s Image as a Tourism Destination in the Chinese Leisure Traveler Market Silvia Gravili1 & Pierfelice Rosato1 1 Department of Management and Economics, University of Salento, Lecce, Italy Correspondence: Silvia Gravili, Department of Management and Economics, University of Salento, Complesso Ecotekne, via per Monteroni, Lecce, Italy. Tel: 39-0832-298-622. E-mail: [email protected] Received: September 8, 2017 Accepted: September 25, 2017 Online Published: September 30, 2017 doi:10.5539/ijms.v9n5p28 URL: http://doi.org/10.5539/ijms.v9n5p28 Abstract This study aims to measure the image of Italy as a tourism destination on the Chinese leisure traveller market. To this end, Echtner & Ritchie’s model (1991) is applied; however, compared to its original formulation, it is implemented with greater consideration of the experiential dimension and potential travel constraints affecting the perception of the range of tourism goods and services on offer. This enables not just a denotative, but also and above all a connotative measurement of the image, thereby reducing the risk of ambiguity in the interpretation of the most significant attributes that emerged during the analysis. Adopting a hypothetical segmentation of the market in question, it was also possible to the elements that act as encouraging and discouraging factors regarding a holiday in Italy are highlight, identifying tailor-made approaches to the construction and promotion/commercialisation of tourism products designed to be attractive to the specific segment of interest. -

Weekly

May WEEKLY SUN MON Tur INFO THU FRISAT no, 1 6 7 23 45 v4<..\k, 9 10 1112 13 14 15 $3.00 As?,9030. 8810 85 16 17 18 19 20 21 22 $2.80 plus .20 GST A 2_4 2330 243125 26 27 28 29 Volume 57 No. 14 -512 13 AA22. 2303 Week Ending April 17, 1993 .k2't '1,2. 282 25 No. 1 ALBUM ARE YOU GONNA GO MY WAY Lenny Kravitz TELL ME WHAT YOU DREAM Restless Heart WHO IS IT Michael Jackson SOMEBODY LOVE ME Michael W. Smith LOOKING THROUGH ERIC CLAPTON PATIENT EYES Unplugged PM Dawn Reprise - CDW-45024-P I FEEL YOU Depeche Mode LIVING ON THE EDGE Aerosmith YOU BRING ON THE SUN Londonbeat RUNNING ON FAITH CAN'T DO A THING Eric Clapton (To Stop Me) Chris Isaak to LOOK ME IN THE EYES oc Vivienne Williams IF YOU BELIEVE IN ME thebeANts April Wine coVapti FLIRTING WITH A HEARTACHE Ckade Dan Hill LOITA LOVE TO GIVE 0etex s Daniel Lanois oll.v-oceve,o-c1e, DON'T WALK AWAY Jade NOTHIN' MY LOVE CAN'T FIX DEPECHE MODE 461100'. Joey Lawrence ; Songs Of Faith And Devotion c.,25seNps CANDY EVERYBODY WANTS 10,000 FLYING THE CULT Blue Rodeo Pure Cult BIG TIME DWIGHT YOAKAM I PUT A SPELL ON YOU LEONARD COHEN This Time Bryan Ferry The Future ALBUM PICK DANIEL LANOIS HARBOR LIGHTS ALADDIN . For The Beauty Of Wynona Bruce Hornsby Soundtrack HOTHOUSE FLOWERS COUNTRY Songs From The Rain ADDS HIT PICK JUST AS I AM Ricky Van Shelton No.