SGI Management Center System Administrators Guide.Book

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Example Grant Materials

Do not redistribute except under terms noted within. Citation: Brown, Travis, Jennifer Guiliano, and Trevor Muñoz. "Active OCR: Tightening the Loop in Human Computing for OCR Correction" National Endowment for the Humanities, Grant Submission, University of Maryland, College Park, MD, 2011. Licensing: This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License. Collaborating Sites: University of Maryland, Maryland Institute for Technology in the Humanities. Team members (Maryland Institute for Technology in the Humanities): Travis Brown, Paul Evans, Jennifer Guiliano, Trevor Muñoz, Kirsten Keister. Acknowledgments: Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the collaborating institutions or the National Endowment for the Humanities.

Active OCR: A Level II Start Up Grant. Enhancing the humanities through innovation: Over the past several years, many large archives (such as the National Library of Australia and the National Library of Finland) have attempted to improve the quality of their digitized text collections by inviting website visitors to assist with the correction of transcription errors. In the case of print collections, an optical character recognition (OCR) system is typically used to create an initial transcription of the text from scanned page images. While the accuracy of OCR engines such as Tesseract and ABBYY FineReader is constantly improving, these systems often perform poorly when confronted with historical typefaces and orthographic conventions. Traditional forms of manual correction are expensive even at a small scale. Engaging web volunteers, a process often called crowdsourcing, is one way for archives to correct their texts at a lower cost and on a larger scale, while also developing a user community.

Keeping Old Computers Alive for Deeper Understanding of Computer Architecture

Hisanobu Tomari and Kei Hiraki, Grad. School of Information Science and Technology, The University of Tokyo, Tokyo, Japan.

Abstract: Computer architectures, as they are seen by students, are getting more and more monolithic: few years ago a student had access to x86 processor on his or her laptop, SPARC server in the backyard, MIPS and PowerPC on large SMP system, and Alpha on calculation server. Today, only architectures that students experience writing program on are x86_64 and possibly ARM. On one hand, this simplifies their learning, but on the other hand, this makes it harder to discover options that are available in designing an instruction set architecture. In this paper, we introduce our undergraduate course that teaches computer architecture design and evaluation that uses historic computers to make more processor architectures accessible to students. The collection of more than 270 old computers that were marketed in 1979 to 2014 are used in the class.

[...] computers retrospectively. Working hardware is a requisite for a software execution environment that reproduces behavior of socially and culturally important computers. This paper presents our information science undergraduate course for teaching concepts and methodology of computer architecture. In this class, historic computer systems are used by students to learn different design concepts and performance results. Students learn the different instruction sets by programming on a number of working systems. This gives them an opportunity to learn what characteristics are shared among popular instruction set, and what are special [...]

SGI ProPack™ 3 to SGI ProPack™ 4 for Linux® Migration Guide

SGI ProPack™ 3 to SGI ProPack™ 4 for Linux® Migration Guide, 007–4719–003. COPYRIGHT © 2005, 2006, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND: The software described in this document is "commercial computer software" provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS: Silicon Graphics, SGI, the SGI logo and Altix are registered trademarks and SGI ProPack for Linux is a trademark of Silicon Graphics, Inc., in the United States and/or other countries worldwide. Intel is a registered trademark of Intel Corporation, in the United States and other countries. Linux is a registered trademark of Linus Torvalds, used with permission by Silicon Graphics, Inc. Novell is a registered trademark, and SUSE is a trademark of Novell, Inc., in the United States and other countries. All other trademarks mentioned herein are the property of their respective owners. Record of Revision: Version 001, February 2005: Original publication. Supports the SGI ProPack 4 for Linux release. Version 002, August 2005: Supports the SGI ProPack 4 for Linux Service Pack 2 release.

Silicon Graphics® Scalable Graphics Capture PCI-X Option User's Guide

Silicon Graphics® Scalable Graphics Capture PCI-X Option User's Guide, 007-4663-002. CONTRIBUTORS: Written by Mark Schwenden, Steven Levine. Illustrated by Chrystie Danzer. Engineering contributions by Michael Brown, Dick Brownell, Andrew James, Jeff Hane, Dave North, Keith Rich, and Tiffany To. COPYRIGHT © 2004, 2005, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND: The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the USA government or any contractor thereto, it is acquired as "commercial computer software" subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is Silicon Graphics, Inc., 1500 Crittenden Lane, Mountain View, CA 94043. TRADEMARKS AND ATTRIBUTIONS: Silicon Graphics, SGI, the SGI logo, Onyx, OpenML, OpenGL Reality Center, and IRIX are registered trademarks and Onyx4, NUMAlink, Silicon Graphics Prism and UltimateVision are trademarks of Silicon Graphics, Inc., in the United States and/or other countries worldwide. Record of Revision: Version 001, March 2004: Initial version. Version 002, April 2005: Support for Silicon Graphics Prism Visualization System added. Contents: Related Publications; Obtaining Publications.

Alpana-Sgiprism-Gh05.Pdf

Silicon Graphics, Inc. Silicon Graphics Prism™: A Platform for Scalable Graphics. Presented by: Alpana Kaulgud, Engineering Director, Visual Systems; Bruno Stefanizzi, Applications Engineering. SGI Proprietary.

Overview of Talk: Goals for Scalable Graphics; Scalable Architecture for Silicon Graphics Prism; Case Study; Call to Action and Future Directions.

Goals. Traditional Computational Problems (CFD, Crash, Energy, Crypto, etc.): determine problem set size; size compute server to solve problem in needed timeframe. Small Problems: 1 – 16 CPUs. Bigger Problem: 16 – 64 CPUs. Large Problem: 64 – 1024 CPUs. Scientific Challenge: 1024 CPUs or more. Applications scale to use all computational resources (CPU, memory, I/O) to reduce time to solution. Traditional Visualization Problems (Media, CAD, Energy, Biomedical, etc.): determine problem set size; reduce problem until it fits on a single GPU. Small Problems: 1 GPU. Bigger Problem: 1 GPU. Large Problem: 1 GPU. Scientific Challenge: up to 16 GPUs. Images courtesy of Pratt and Whitney Canada and Landmark Graphics.

Goals. Use the appropriate resource for each algorithm in the workflow to reduce "time to solution": CPUs for computation/visualization; GPUs for visualization/computation; FPGAs for algorithm acceleration. Scalability Dimensions: Display; Data; Render (Geometry/Fill); Number of User/Input Devices. Single System Image: Ease of Use.

MSC Patran® 2006

MSC Patran® 2006 Installation and Operations Guide Corporate MSC.Software Corporation 2 MacArthur Place Santa Ana, CA 92707 USA Telephone: (800) 345-2078 Fax: (714) 784-4056 Europe MSC.Software GmbH Am Moosfeld 13 81829 Munich, Germany Telephone: (49) (89) 43 19 87 0 Fax: (49) (89) 43 61 71 6 Asia Pacific MSC.Software Japan Ltd. Shinjuku First West 8F 23-7 Nishi Shinjuku 1-Chome, Shinjuku-Ku Tokyo 160-0023, JAPAN Telephone: (81) (3)-6911-1200 Fax: (81) (3)-6911-1201 Worldwide Web www.mscsoftware.com Disclaimer This documentation, as well as the software described in it, is furnished under license and may be used only in accordance with the terms of such license. MSC.Software Corporation reserves the right to make changes in specifications and other information contained in this document without prior notice. The concepts, methods, and examples presented in this text are for illustrative and educational purposes only, and are not intended to be exhaustive or to apply to any particular engineering problem or design. MSC.Software Corporation assumes no liability or responsibility to any person or company for direct or indirect damages resulting from the use of any information contained herein. User Documentation: Copyright 2006 MSC.Software Corporation. Printed in U.S.A. All Rights Reserved. This notice shall be marked on any reproduction of this documentation, in whole or in part. Any reproduction or distribution of this document, in whole or in part, without the prior written consent of MSC.Software Corporation is prohibited. The software described herein may contain certain third-party software that is protected by copyright and licensed from MSC.Software suppliers. -

Diplomarbeit: Interaktives Raytracing auf Hochleistungsrechnern (Diploma Thesis: Interactive Ray Tracing on High-Performance Computers)

Technische Universität Dresden, Faculty of Computer Science, Institute of Software and Multimedia Technology, Chair of Computer Graphics and Visualization, Prof. Dr. Stefan Gumhold. Diploma thesis submitted for the academic degree of Diplom-Informatiker: Interactive Ray Tracing on High-Performance Computers. Daniel Grafe (born 16 August 1980 in Ostercappeln). Supervisors: Prof. Dr. Stefan Gumhold, Dr. Matthias Müller. Dresden, 11 November 2007.

Task description: Ray tracing makes it possible to render realistically lit scenes. Hard and soft shadows, specular reflections, and refraction at transparent surfaces can all be reproduced, and arbitrary reflection properties can be defined. Ray tracing is exceptionally well suited to parallel computation. In this diploma thesis, a parallel ray tracer is to be developed and integrated into an interactive application. Specifically, the following subtasks are to be completed:
• Literature survey of existing parallelizations of ray tracing.
• Development of a new ray tracing approach, or modification of an existing one, for computing images in parallel. Scene graphs with the usual basic primitives, transformation nodes, material properties, and light sources are to be supported.
• Creation or import of example scenes with differing complexity characteristics.
• Development of an application that allows walking through a scene in front of the Powerwall of the CGV chair with stereoscopic projection. For [...]

Arquitecturas orientadas a la realidad virtual (Architectures Oriented Toward Virtual Reality)

Topic 2. Architectures oriented toward VR. Virtual Reality Techniques and Devices, Master in Computer Graphics, Games and Virtual Reality. Pablo Toharia, Marcos García, Miguel Ángel A. Otaduy. Bibliography: "Virtual Reality Technology", Wiley-Interscience (Second Edition), Grigore C. Burdea & Philippe Coiffet (only for virtual reality architectures; obsolete with regard to graphics architectures).

A simple architecture: CPU, GPU. A "simple" architecture? Multi-core CPU (IBM Cell, Intel Larrabee…), programmable GPU (NVIDIA Tesla S870). Multiple peripherals: tiled display wall, CAVE for virtual reality, graphics + haptic system. Globalization: networked games, Second Life. Example. Introduction.

Virtual engine: the key component. Its task: reading the input/output devices; reading the database; updating the state (database); writing to the output devices.

Virtual engine, real time: it is impossible to predict users' actions, so the virtual world is created and destroyed in real time. Requirements imposed by human factors: visual refresh at 25-30 Hz; haptic refresh at 500-1000 Hz. Latency is as important as refresh rate. Latency is the time elapsed from when the user performs an action until a response is received. Total latency = sensor latency + bus delay + computation of the new state + bus delay + output device latency. Visual latency should not exceed 100 ms.

Virtual engine: VR engines therefore require low latency, fast graphics generation, haptics, audio refresh, and so on, and must be supported by an architecture that is very powerful from a computational standpoint. Architectures have traditionally been built around the rendering pipeline.

Túlélőkészlet (Survival Kit)

Magazine cover, WWW.CHIPONLINE.HU: Computing & Communication. Tests, trends, technologies. Volume XVIII, issue 1, January 2006. 1495 Ft, 1047 Ft with subscription. 3 CDs, 30 full versions.

Cover lines: SGI Reality Center: on-site report. Digital television: bold plans up to 2015. Win a RADEON X800XL graphics card (page 88)! USB survival kit for every task. They said it! Status report from the trendsetters: we quizzed the IT gurus; Gates, Jobs, and Dell on the future. Dubious information: scandal around Wikipedia. Blurry images? Inspiration from the web. WYGIWYS: what you get is what you see.

Topics: Communication: web development 2005. Software: OmniPage 15 Pro. Current: GPGPU. Electronic book. Braille display. WLAN routers. Office 12 innovations. Mid-range graphics cards. Hard-disk MP3 players. DV camera roundup. X-Fi card family.

On the CDs: MAYA PLE 7 (gift, full version, 3D editor); SGI RC; Macromedia Fireworks 8 (image editor, 30-day full version); NOVA PDF (full version); Photoshop CS2 HU (30-day full version); ACE Backup 2 (backup, full version). Utility selection: Acoustica v3.3 B290, Cobian Backup 7.4.0.323, DVD2one, EchoVNC 1.4, Electrical Control Techniques Simulator 1.0.2, Euler 3D v2.0.4.

News: Braille displays for the blind. We tested: Windows Mobile 2005. Hardware tests: mid-range graphics cards. ScanSoft OmniPage 15 Professional. Practice: tips for tuning Nero; fine-tuning the BIOS; practical extensions for IE.

SGI Propack™ 4 for Linux® Start Here

SGI ProPack™ 4 for Linux® Start Here 007-4727-003 CONTRIBUTORS Written by Terry Schultz Production by Terry Schultz COPYRIGHT © 2005, 2006, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The software described in this document is “commercial computer software” provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, Altix, IRIX, OpenGL, Origin, Onyx, Onyx2, and XFS are registered trademarks and NUMAflex, NUMAlink, OpenGL Multipipe, OpenGL Performer, OpenGL Vizserver, OpenGL Volumizer, OpenMP, Performance Co-Pilot, RASC, SGI ProPack, SGIconsole, SHMEM, Silicon Graphics Prism, and Supportfolio are trademarks of Silicon Graphics, Inc., in the United States and/or other countries worldwide. FLEXlm is a registered trademark of Macrovision Corporation. Java is a registered trademark of Sun Microsystems, Inc. in the United States and/or other countries. KAP/Pro Toolset and VTune are trademarks and Intel, Itanium, and Pentium are registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. Linux is a registered trademark of Linus Torvalds, used with permission by Silicon Graphics, Inc. -

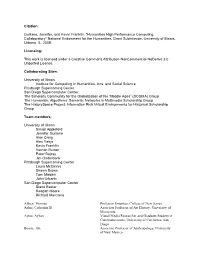

Humanities High Performance Computing Collaboratory 2008

Citation: Guiliano, Jennifer, and Kevin Franklin. "Humanities High Performance Computing Collaboratory" National Endowment for the Humanities, Grant Submission, University of Illinois, Urbana, IL, 2008. Licensing: This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License. Collaborating Sites: University of Illinois Institute for Computing in Humanities, Arts, and Social Science; Pittsburgh Supercomputing Center; San Diego Supercomputer Center; The Scholarly Community for the Globalization of the "Middle Ages" (SCGMA) Group; The Humanistic Algorithms: Semantic Networks in Multimedia Scholarship Group; The HistorySpace Project: Information Rich Virtual Environments for Historical Scholarship Group. Team members: University of Illinois: Simon Appleford, Jennifer Guiliano, Alan Craig, Alex Yahja, Kevin Franklin, Vernon Burton, Peter Bajcsy, Jim Onderdonk. Pittsburgh Supercomputing Center: Laura McGinnis, Shawn Brown, Tom Maiden, John Urbanic. San Diego Supercomputer Center: Diane Baxter, Reagan Moore, Richard Marciano.

Allsen, Thomas: Professor Emeritus, College of New Jersey. Asher, Catherine B.: Associate Professor of Art History, University of Minnesota. Aytes, Ayhan: Visual Media Researcher and Graduate Student in Communications, University of California, San Diego. Boone, Jim: Associate Professor of Anthropology, University of New Mexico. Goldberg, David Theo: Director, University of California Humanities Research Institute (UCHRI); Professor of Comparative Literature and Criminology, Law, and Society at the University of California, Irvine. Hart, Roger: Assistant Professor of History, University of Texas at Austin. Heng, Geraldine: Director of Medieval Studies, Associate Professor of English; Holder of the Perceval Endowment in Medieval Romance, Historiography, and Culture at the University of Texas at Austin. Ilnitchi, Gabriela: Assistant Professor of Musicology, University of Minnesota. Kea, Ray A.: Professor of History, University of California, Riverside. Klieman, Kairn A.

Obtaining Maximum Performance on Silicon Graphics Prism™ Visualization Systems

This document (007-4751-001) provides guidance about how to get the best performance from a Silicon Graphics Prism Visualization system. It covers the following topics: Rendering; Graphics Memory Usage; Environment Variables; XFree86™ Configuration; Window Manager Considerations; Platform Parameters; Miscellaneous Issues.

Rendering: The Silicon Graphics Prism graphics subsystem has two or more graphics pipes, each with a single GPU and 256 MB of local memory. Each graphics pipe has its own full-speed AGP8x interface, supplying bus bandwidths of up to 2.1 GB/s and providing the connection between host-system main memory and local graphics memory. All graphics data is sent over this AGP8x bus to the graphics pipe, where it is rendered and then displayed.

Programming Model: The use of a "retained-mode" OpenGL programming model will usually provide the best graphics performance on a Prism graphics system. This retained-mode model is in contrast to the immediate-mode model widely used on previous SGI graphics systems. A retained-mode OpenGL programming model is one in which the geometry or pixel data resides in the local memory of the graphics pipe, rather than in the main memory of the host system. OpenGL commands that create objects (e.g., display lists, vertex buffer objects, vertex array objects, or texture objects) are retained-mode commands. All of these OpenGL commands return an identifier that allows the association of the object to the data that is resident on the graphics pipe.
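
The bandwidth figure and the case for retained mode can be checked with a little arithmetic. The sketch below is illustrative only and is not taken from the SGI guide. It assumes the standard AGP8x parameters (32-bit bus, 66.67 MHz base clock, eight transfers per clock) and a hypothetical mesh of one million vertices at 32 bytes each, and estimates how long the bus would be busy if an immediate-mode application resent that geometry on every frame.

```python
# Back-of-the-envelope estimate of AGP8x bus cost for immediate-mode rendering.
# Assumptions (not from the SGI guide): standard AGP8x signalling parameters
# and a hypothetical mesh size chosen purely for illustration.

AGP_BASE_CLOCK_HZ = 200e6 / 3   # 66.67 MHz AGP base clock
TRANSFERS_PER_CLOCK = 8         # "8x" signalling
BUS_WIDTH_BYTES = 4             # 32-bit data path


def agp8x_peak_bytes_per_s() -> float:
    """Theoretical peak AGP8x bandwidth in bytes per second."""
    return AGP_BASE_CLOCK_HZ * TRANSFERS_PER_CLOCK * BUS_WIDTH_BYTES


def bus_seconds(n_vertices: int, bytes_per_vertex: int, bandwidth: float) -> float:
    """Time the bus is busy moving the given geometry once, at peak bandwidth."""
    return n_vertices * bytes_per_vertex / bandwidth


peak = agp8x_peak_bytes_per_s()

# Hypothetical mesh: 1,000,000 vertices, 32 bytes each (position, normal, texcoord).
N_VERTICES = 1_000_000
BYTES_PER_VERTEX = 32

# Immediate mode: the geometry crosses the bus on every frame.
immediate_per_frame_s = bus_seconds(N_VERTICES, BYTES_PER_VERTEX, peak)

print(f"AGP8x peak bandwidth: {peak / 1e9:.2f} GB/s")
print(f"Immediate mode, bus time per frame: {immediate_per_frame_s * 1e3:.1f} ms")
```

Under these assumptions the peak works out to about 2.13 GB/s, consistent with the 2.1 GB/s quoted above, and resending the mesh costs roughly 15 ms of bus time per frame before any rendering happens. In a retained-mode design the same transfer is paid once, when the object is created, and later frames reference the data already resident in the pipe's local memory.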