Shot Quality Model



Recommended publications


"Developing a Team-First Attitude"

"Developing a Team-First Attitude"
Dr. Wayne Halliwell, University of Montreal
Presentation at the 2005 International Coaching Conference, June 29 – July 2, 2005, Vierumaki, Finland
Design by Chantal Lalande

"You don't coach hockey you coach people"

First Who …… Then What
"Get the right people on the bus. Get the wrong people off the bus. Get the right people in the right seats." Jim Collins, "Good to Great"

"Simple is better" Jacques Lemaire, NHL Stanley Cup Winner as Player and Coach, NHL Hall of Fame Member

HOW GOOD CAN I BE? HOW GOOD CAN WE BE?

"The ultimate test of a great team is results" Patrick Lencioni, "The Five Dysfunctions of a Team"

"Get it done" Raymond Bourque, NHL Stanley Cup Champion, Colorado Avalanche - 2001

"Talent wins games, discipline and teamwork wins championships" Larry Robinson, Head Coach, New Jersey Devils, NHL Champions - 2003

"Building a Team-First attitude is based on common sense"

Work together. Grow together. Win together. Claude Julien, Head Coach, Montreal Canadiens

10 Traits of Great Teams
1. Great work ethic
2. Great discipline
3. Relentless intensity
4. Great leadership
5. Relentless preparation
6. Great team chemistry
7. Great commitment / buy-in
8. Tremendous team trust
9. Great resilience
10. Great team pride

"The most important trait of a great team is ………. Great Goaltending!"

The G.A.G.G. Rule: "Get a Great Goalie"

"Keys to Great Goaltending"
1. Have fun
2. Be the guy – exude confidence
3. Compete
Sean Burke, NHL goalie – 17 years, Team Canada – 9 times

TEAM IDENTITY, TEAM DISCIPLINE, TEAM COHESION, TEAM CHEMISTRY, TEAM-BUILDING, TEAM TRUST, TEAM CONFIDENCE, TEAMWORK, TEAM SPIRIT, TEAM-FIRST

"There is no I in TEAM"
"There is an I in TEAM": I = Individual, I = Input, I = Ice time, I = Ink

Together Everyone Achieves More

"One finger can't lift a pebble" Phil Jackson, Head Coach, L.A. -

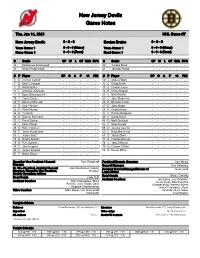

New Jersey Devils Game Notes

New Jersey Devils Game Notes Thu, Jan 14, 2021 NHL Game #7 New Jersey Devils 0 - 0 - 0 Boston Bruins 0 - 0 - 0 Team Game: 1 0 - 0 - 0 (Home) Team Game: 1 0 - 0 - 0 (Home) Home Game: 1 0 - 0 - 0 (Road) Road Game: 1 0 - 0 - 0 (Road) # Goalie GP W L OT GAA SV% # Goalie GP W L OT GAA SV% 29 Mackenzie Blackwood - - - - - - 40 Tuukka Rask - - - - - - 41 Scott Wedgewood - - - - - - 41 Jaroslav Halak - - - - - - # P Player GP G A P +/- PIM # P Player GP G A P +/- PIM 5 D Connor Carrick - - - - - - 10 L Anders Bjork - - - - - - 7 D Matt Tennyson - - - - - - 12 C Craig Smith - - - - - - 8 D Will Butcher - - - - - - 13 C Charlie Coyle - - - - - - 11 L Andreas Johnsson - - - - - - 14 R Chris Wagner - - - - - - 17 F Egor Sharangovich - - - - - - 21 L Nick Ritchie - - - - - - 19 C Travis Zajac - - - - - - 23 C Jack Studnicka - - - - - - 20 F Michael McLeod - - - - - - 25 D Brandon Carlo - - - - - - 21 R Kyle Palmieri - - - - - - 27 D John Moore - - - - - - 22 D Ryan Murray - - - - - - 28 R Ondrej Kase - - - - - - 24 D Ty Smith - - - - - - 37 C Patrice Bergeron - - - - - - 28 D Damon Severson - - - - - - 46 C David Krejci - - - - - - 37 C Pavel Zacha - - - - - - 48 D Matt Grzelcyk - - - - - - 44 L Miles Wood - - - - - - 52 C Sean Kuraly - - - - - - 45 D Sami Vatanen - - - - - - 55 D Jeremy Lauzon - - - - - - 59 F Janne Kuokkanen - - - - - - 63 L Brad Marchand - - - - - - 63 L Jesper Bratt - - - - - - 67 D Jakub Zboril - - - - - - 70 D Dmitry Kulikov - - - - - - 73 D Charlie McAvoy - - - - - - 76 D P.K. Subban - - - - - - 74 L Jake DeBrusk - - - - - - 86 C Jack Hughes - - - - - - 75 D Connor Clifton - - - - - - 90 F Jesper Boqvist - - - - - - 86 D Kevan Miller - - - - - - 97 F Nikita Gusev - - - - - - Executive Vice President / General Tom Fitzgerald President/Alternate Governor: Cam Neely Manager General Manager: Don Sweeney Sr. -

Goal Prevention 2004: A Review of Goaltending and Team Defense Including a Study of the Quality of a Hockey Team’s Shots Allowed

Goal Prevention 2004: a review of goaltending and team defense including a study of the quality of a hockey team’s shots allowed

Copyright Alan Ryder 2004

Introduction

I recently completed an assessment of shot quality in the NHL for the 2002-03 season (http://www.HockeyAnalytics.com/Research.htm). That study revealed that the quality of shots allowed varied significantly from team to team and was not well correlated with the number of shots allowed on goal. The consequence of that study was an improved ability to assess the goal prevention performance of teams and their goaltenders. This paper applies the same methods to the analysis of the 2003-04 season, focusing more on the results than the method.

Shot Quality

In summary, the approach used to assess the quality of shots allowed by a team is:

1. Collect, from NHL game event logs, the relevant data on each shot.
2. Analyze the goal probabilities for each shooting circumstance. In my analysis I separated certain “” from “” shots and studied the probability of a goal given the shot type, the shot’s distance and the on-ice situation (power play vs other).
3. Build a model of goal probabilities that relies on the measured circumstance.
4. Apply the model to the shot data for the defensive team in question for the season. For each shot, determine its goal probability.
5. Determine Expected Goals: EG = the sum of the goal probabilities for each shot.
6. Neutralize the variation in the number of shots on goal by calculating Normalized Expected Goals (NEG) = EG × League Average Shots / Shots
7. -
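Steps 5 and 6 of the summary reduce to a sum and a rescaling, which can be sketched in a few lines of Python. This is only an illustration of the two formulas as stated, not the paper's implementation: the function names are hypothetical, and the per-shot goal probabilities are taken as given inputs because the preview does not show the fitted model that produces them.

```python
from typing import Sequence


def expected_goals(goal_probabilities: Sequence[float]) -> float:
    """Step 5: EG is the sum of the goal probabilities of every shot allowed."""
    return sum(goal_probabilities)


def normalized_expected_goals(
    eg: float, shots: int, league_average_shots: float
) -> float:
    """Step 6: NEG = EG x League Average Shots / Shots.

    Rescales EG to a league-average shot count so that teams can be
    compared on shot quality alone, independent of shot volume.
    """
    if shots <= 0:
        raise ValueError("shots must be positive")
    return eg * league_average_shots / shots


if __name__ == "__main__":
    # Made-up probabilities for illustration only; real values would come
    # from the goal-probability model built in steps 2 and 3.
    probs = [0.05, 0.10, 0.25, 0.10]
    eg = expected_goals(probs)
    neg = normalized_expected_goals(eg, shots=len(probs), league_average_shots=5.0)
    print(f"EG = {eg:.3f}, NEG = {neg:.3f}")
```

Because NEG divides by the team's own shot count, two teams that allow the same average quality per shot get the same NEG regardless of how many shots they allow.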

2021 NHL Awards Presented by Bridgestone Information Guide

2021 NHL AWARDS PRESENTED BY BRIDGESTONE INFORMATION GUIDE

TABLE OF CONTENTS

2021 NHL Award Winners and Finalists

Regular-Season Awards
Art Ross Trophy
Bill Masterton Memorial Trophy
Calder Memorial Trophy
Frank J. Selke Trophy
Hart Memorial Trophy
Jack Adams Award
James Norris Memorial Trophy
Jim Gregory General Manager of the Year Award -

Curiosityguidetohockey LA.Pdf

The Art of Hockey – A Fandom In Four Parts (www.curiosityguides.com)

LACEY ARTEMIS

I first got into hockey back in the mid 90s. My dad watched a few different sports, but hockey was the one that caught my attention, and it was only as an adult that I really figured out why. The first hockey player to really catch my attention was Trevor Kidd, who was drafted by, and started his career with, the Calgary Flames of the NHL. Despite being drafted in the first round, 11th overall, Kidd was not the calibre of goalie that Calgary’s scouts and management thought and hoped he would be. In fact, the mention of his name to hockey fans these days yields one of two responses: a sigh/groan/shaking of head, or a “who?” He was chosen ahead of someone you might actually know, someone who just retired last year (2015). In the same draft, only 9 spots after Kidd, Martin Brodeur was drafted by the New Jersey Devils. Brodeur would go on to become a legendary goaltender, holding the records for both most career regular season wins (691) and shut-outs (125) by a goaltender. Both are records that will probably never be surpassed, as few goalies nowadays have careers as long as Brodeur’s: 23 seasons, over half of which he played in 70 or more of the 82 regular season games. So, why did Kidd catch my attention and become my favourite goalie and not the legendary Brodeur? The main reason was his pads. -

Eli Wilson Bio

Eli Wilson Bio

Eli Wilson has elevated the games of current National Hockey League goaltenders Carey Price, Ray Emery, Tim Thomas, Tuukka Rask, Brian Elliott, Jason LaBarbera, and Devan Dubnyk. Over the course of his coaching career, Eli has worked with 25 NHL goaltenders in camps, clinics, private sessions or a team environment. He has established himself as one of the premier goaltending coaches in the world. His relentless work ethic, vivacious personality, knowledge of the goaltending position and tenacious approach to the game have allowed Eli to build an impressive track record.

Wilson had unprecedented success for four seasons with the Western Hockey League’s Medicine Hat Tigers. While there, Wilson’s goaltenders set new franchise records only to break them again two years later. During Eli’s tenure, the Tigers won two Championships, and in both of those runs the Medicine Hat goalies were named playoff MVPs. Eli's success as a goaltending coach is best demonstrated by the number of goaltenders he has developed who have gone on to win prestigious awards, including Stanley Cups, Conn Smythe Trophies, Vezina Trophies, World Junior Gold Medals, AHL Championships, AHL Playoff MVPs, WHL Goaltender of the Year, OHL Goaltender of the Year and CHL Goaltender of the Year.

In 2004, Eli founded the largest goaltending school in Western Canada, World Pro Goaltending. Known as Western Canada’s elite training centre for goaltenders, World Pro teaches a cutting-edge compact butterfly style combined with read-and-react skills that focus on making goaltenders more efficient by simplifying their game. -

2020 Arizona Coyotes Postseason Guide Table of Contents Arizona Coyotes

2020 ARIZONA COYOTES POSTSEASON GUIDE

TABLE OF CONTENTS

ARIZONA COYOTES
Team Directory
Media Information

SEASON REVIEW
Division Standings
League Standings
Individual Scoring
Goalie Summary
Category Leaders
Faceoff & Goals Report
Real Time Stats
Time on Ice
Season Notes
Team Game by Game
Player Game by Game
Player Misc. Stats
Individual Milestones

QUALIFYING ROUND REVIEW
Individual Scoring Summary
Goalie Summary
Team Summary
Real Time Stats
Faceoff Leaders
Time On Ice
Qualifying Round Series Notes -

1989-90 Topps Hockey 198 Cards

1989-90 Topps Hockey 198 cards 1 Mario Lemieux 51 Glen Wesley 101 Shawn Burr 151 Marc Habscheid RC 2 Ulf Dahlen 52 Dirk Graham 102 John MacLean 152 Dan Quinn 3 Terry Carkner RC 53 G. Carbonneau 103 Tom Fergus 153 Stephane Richer 4 Tony McKegney 54 T. Sandstrom 104 Mike Krushelnyski 154 Doug Bodger 5 Denis Savard 55 Rod Langway 105 Gary Nylund 155 Ron Hextall 6 Derek King RC 56 P. Sundstrom 106 Dave Andreychuk 156 Wayne Gretzky 7 Lanny McDonald 57 Michel Goulet 107 Bernie Federko 157 Steve Tuttle RC 8 John Tonelli 58 Dave Taylor 108 Gary Suter 158 Charlie Huddy 9 Tom Kurvers 59 Phil Housley 109 Dave Gagner 159 Dave Christian 10 Dave Archibald 60 Pat LaFontaine 110 Ray Bourque 160 Andy Moog 11 P. Sidorkiewicz RC 61 Kirk McLean RC 111 Geoff Courtnall RC 161 Tony Granato RC 12 Esa Tikkanen 62 Ken Linseman 112 Doug Wilson 162 Sylvain Cote RC 13 Dave Barr 63 R. Cunneyworth 113 Joe Sakic RC 163 Mike Vernon 14 Brent Sutter 64 Tony Hrkac 114 John Vanbiesbrouck 164 Steve Chiasson RC 15 Cam Neely 65 Mark Messier 115 Dave Poulin 165 Mike Ridley 16 C. Johansson RC 66 Carey Wilson 116 Rick Meagher 166 Kelly Hrudey 17 Patrick Roy 67 Steve Leach RC 117 Kirk Muller 167 Bobby Carpenter 18 Dale DeGray RC 68 Christian Ruuttu 118 Mats Naslund 168 Zarley Zalapski RC 19 Phil Bourque RC 69 Dave Ellett 119 Ray Sheppard 169 Derek Laxdal RC 20 Kevin Dineen 70 Ray Ferraro 120 Jeff Norton RC 170 Clint Malarchuk 21 Mike Bullard 71 Colin Patterson RC 121 Randy Burridge 171 Kelly Kisio -

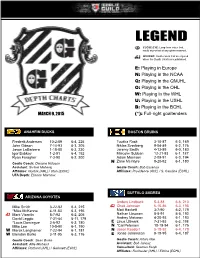

LEGEND CLOSE EYE: Long-Term Value That N Could Skyrocket at Any Given Moment

LEGEND CLOSE EYE: Long-term value that N could skyrocket at any given moment. INJURED: Goaltenders that are injured h when the Depth Charts are published. E: Playing in Europe N: Playing in the NCAA Q: Playing in the QMJHL O: Playing in the OHL W: Playing in the WHL U: Playing in the USHL B: Playing in the BCHL MARCH 9, 2015 (*): Full-right goaltenders ANAHEIM DUCKS BOSTON BRUINS Frederik Andersen 10-2-89 6-4, 225 Tuukka Rask 3-10-87 6-3, 169 John Gibson 7-14-93 6-3, 205 Niklas Svedberg 9-04-89 6-2, 176 Jason LaBarbera 1-18-80 6-3, 230 Jeremy Smith 4-13-89 6-0, 180 Igor Bobkov 1-2-91 6-4, 192 Malcolm Subban 12-21-93 6-1, 187 Ryan Faragher 7-3-90 6-2, 200 Adam Morrison 2-09-91 6-3, 194 Zane McIntyre 8-20-92 6-1, 180 Goalie Coach: Dwayne Roloson N Assistant: Sudsie Maharaj Goalie Coach: Bob Essensa Affiliates: Norfolk (AHL) / Utah (ECHL) Affiliates: Providence (AHL) / S. Carolina (ECHL) UFA Depth: Etienne Marcoux BUFFALO SABRES ARIZONA COYOTES Anders Lindback 5-3-88 6-6, 210 Mike Smith 3-22-82 6-4, 215 h Chad Johnson 6-10-86 6-3, 195 *Mike McKenna 4-11-83 6-3, 195 Matt Hackett 3-7-90 6-2, 179 h Mark Visentin 8-7-92 6-2, 205 Nathan Lieuwen 8-8-91 6-5, 192 David Leggio 7-31-84 5-11, 179 Andrey Makarov 4-20-93 6-1, 193 *Louis Domingue 3-6-92 6-3, 180 E Linus Ullmark 7-31-93 6-3, 198 Mike Lee 10-5-90 6-1, 190 N *Cal Petersen 10-19-94 6-1, 175 W Marek Langhamer 7-22-94 6-1, 181 N Jason Kasdorf 5-18-92 6-4, 179 W Brendan Burke 3-11-95 6-3, 176 E Jonas Johansson 9-19-95 6-4, 187 Goalie Coach: Sean Burke Goalie Coach: Arturs Irbe Assistant: Alfie -

Carolina Hurricanes 1, Tampa Bay Lightning 0 (OT)

CAROLINA HURRICANES 1, TAMPA BAY LIGHTNING 0 (OT)
Postgame Notes – January 28, 2021

CAROLINA HURRICANES SCORING NOTES
Per Time Goal Scorer Assists STR Score
4 1:12 Martin Necas (1) Jordan Staal (1), Dougie Hamilton (3) EV
-- Dougie Hamilton recorded an assist tonight to extend his assist streak to three games (1/16-1/28: 3a).
-- Martin Necas scored his first career overtime goal tonight.

TAMPA BAY LIGHTNING SCORING NOTES
Per Time Goal Scorer Assists STR Score
No scoring

MRAZZLE DAZZLE
Petr Mrazek stopped all 32 shots he faced tonight to record his 23rd career shutout, ninth with Carolina and second in three starts this season. He became the second different goaltender in franchise history to record a shutout in the team’s home opener, joining Sean Burke (10/7/95 vs. NYR, 10/5/96 vs. PHX). Mrazek is the first goaltender to shut out the Lightning since Devan Dubnyk (MIN) accomplished the feat on 3/7/19.

FEELS LIKE THE FIRST TIME
Steven Lorentz made his NHL/Hurricanes debut tonight. Drafted by Carolina in the seventh round (186th overall) of the 2015 NHL Draft, he has recorded 55 points (26g, 29a) in 93 career AHL games with Charlotte. Sheldon Rempal and Max McCormick also made their Hurricanes debuts tonight. Rempal played seven NHL games with Los Angeles in 2018-19, while McCormick skated in 71 NHL games with Ottawa from 2015-18, tallying 10 points (4g, 6a).

SPECIAL TEAMS
- Carolina went 0-for-4 on the power play tonight and is now 3-for-16 (18.8%) on the man-advantage this season. -

2019-20 Philadelphia Flyers Directory

2019-20 PHILADELPHIA FLYERS DIRECTORY

PHILADELPHIA FLYERS
Wells Fargo Center | 3601 South Broad Street | Philadelphia, PA 19148
Phone 215-465-4500 | PR FAX 215-218-7837 | www.philadelphiaflyers.com

EXECUTIVE MANAGEMENT
Chairman/CEO, Comcast-Spectacor: Dave Scott
President, Hockey Operations & General Manager: Chuck Fletcher
President, Business Operations: Valerie Camillo
Governor: Dave Scott
Alternate Governors: Valerie Camillo, Chuck Fletcher, Phil Weinberg
Senior Advisors: Bill Barber, Bob Clarke, Paul Holmgren
Executive Assistants: Janine Gussin, Frani Scheidly, Tammi Zlatkowski

HOCKEY CLUB PERSONNEL
Vice President/Assistant General Manager: Brent -

Introduction Theo Fleury, Warren Babe, Chris Joseph We Would Like to Welcome You to the Spring Edition of the Team Canada Alumni Association Newsletter

TEAM CANADA NEWSLETTER ALUMNI ASSOCIATION SPRING 2009 Dave Draper, Dave Chambers, Greg Hawgood, Rob Domaio, Mike Murray, Marc Laniel, Hervé Lord Jodi Hull, Todd Nicholson Introduction Theo Fleury, Warren Babe, Chris Joseph We would like to welcome you to the spring edition of the Team Canada Alumni Association newsletter. We were very pleased with the feedback we received regarding the inaugural edition and THE TEAM have incorporated as much of your input as possible into our second publication. Our goal is to create a communication medium that our alumni can be proud of, by continuously improving our CANADA ALUMNI newsletter to best meet the needs of our membership. ■ ASSOCIATION TABLE OF CONTENTS Where We Want To Be – Our Vision: Team OUR REGULAR FEATURES HIGHLIGHTS INSIDE THIS ISSUE Canada Alumni – Coming Together, Reaching Out. WHERE ARE THEY Now? Working with our Alumni Membership Get caught up with Sean Burke (Page 3) (Page 2) Why We Want To Go There – Our Mission: To and Todd Strueby (Page 4) engage, encourage, and enable Team Canada Hockey Canada Skills Camps reach coast alumni to maintain a lifelong relationship with ALUMNI EVENTS to coast (Page 6) Hockey Canada and our game. TCAA launches in Ottawa during 2009 IIHF World Junior Championship (Page 5) Olympic hopefuls in Calgary from August Who We Will Be Along the Way – Our Values: 24 to 28 for orientation camp (Page 7) WHAt’s NEW AT HOCKEY CANADA We are committed to honouring Canada’s inter- Updates Season of Champions begins as national hockey heritage, assisting with the we