Using GNU Make to Manage the Workflow of Data Analysis Projects

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Emacspeak — the Complete Audio Desktop User Manual

Emacspeak: The Complete Audio Desktop User Manual. T. V. Raman. Last Updated: 19 November 2016. Copyright © 1994-2016 T. V. Raman. All Rights Reserved. Permission is granted to make and distribute verbatim copies of this manual without charge provided the copyright notice and this permission notice are preserved on all copies. Short Contents: Emacspeak; 1 Copyright; 2 Announcing Emacspeak Manual 2nd Edition As An Open Source Project; 3 Background; 4 Introduction; 5 Installation Instructions; 6 Basic Usage; 7 The Emacspeak Audio Desktop; 8 Voice Lock; 9 Using Online Help With Emacspeak; 10 Emacs Packages; 11 Running Terminal Based Applications; 12 Emacspeak Commands And Options; 13 Emacspeak Keyboard Commands; 14 TTS Servers; 15 Acknowledgments; 16 Concept Index; 17 Key Index.

Easyaccess: Enhanced SQL Command Line Interpreter for Astronomical Surveys

easyaccess: Enhanced SQL command line interpreter for astronomical surveys. Matias Carrasco Kind¹, Alex Drlica-Wagner², Audrey Koziol¹, and Don Petravick¹. ¹ National Center for Supercomputing Applications, University of Illinois at Urbana-Champaign, 1205 W Clark St, Urbana, IL USA 61801. ² Fermi National Accelerator Laboratory, P. O. Box 500, Batavia, IL 60510, USA. DOI: 00.00000/joss.00000. Submitted: 00 January 0000. Published: 00 January 0000. License: Authors of papers retain copyright and release the work under a Creative Commons Attribution 4.0 International License (CC-BY). Summary: easyaccess is an enhanced command line interpreter and Python package created to facilitate access to astronomical catalogs stored in SQL databases. It provides a custom interface with custom commands and was specifically designed to access data from the Dark Energy Survey Oracle database, although it can easily be extended to another survey or SQL database. The package was written entirely in Python and supports customized addition of commands and functionalities. Visit https://github.com/mgckind/easyaccess to view installation instructions, tutorials, and the Python source code for easyaccess. The Dark Energy Survey: The Dark Energy Survey (DES) (DES Collaboration 2005; DES Collaboration 2016) is an international, collaborative effort of over 500 scientists from 26 institutions in seven countries. The primary goals of DES are to reveal the nature of the mysterious dark energy and dark matter by mapping hundreds of millions of galaxies, detecting thousands of supernovae, and finding patterns in the large-scale structure of the Universe. Survey operations began on August 31, 2013 and will conclude in early 2019.

A Practical Guide for Improving Transparency and Reproducibility in Neuroimaging Research

bioRxiv preprint first posted online Feb. 12, 2016; doi: http://dx.doi.org/10.1101/039354. The copyright holder for this preprint (which was not peer-reviewed) is the author/funder. It is made available under a CC-BY 4.0 International license. A practical guide for improving transparency and reproducibility in neuroimaging research. Krzysztof J. Gorgolewski and Russell A. Poldrack, Department of Psychology, Stanford University. Abstract: Recent years have seen an increase in alarming signals regarding the lack of replicability in neuroscience, psychology, and other related fields. To avoid a widespread crisis in neuroimaging research and consequent loss of credibility in the public eye, we need to improve how we do science. This article aims to be a practical guide for researchers at any stage of their careers that will help them make their research more reproducible and transparent while minimizing the additional effort that this might require. The guide covers three major topics in open science (data, code, and publications) and offers practical advice as well as highlighting advantages of adopting more open research practices that go beyond improved transparency and reproducibility. Introduction: The question of how the brain creates the mind has captivated humankind for thousands of years. With recent advances in human in vivo brain imaging, we now have effective tools to peek into biological underpinnings of mind and behavior. Even though we are no longer constrained just to philosophical thought experiments and behavioral observations (which undoubtedly are extremely useful), the question at hand has not gotten any easier. These powerful new tools have largely demonstrated just how complex the biological bases of behavior actually are.

Reproducible Reports with Knitr and R Markdown

Reproducible Reports with knitr and R Markdown (46 slides). https://dl.dropboxusercontent.com/u/15335397/slides/2014-UPe... Yihui Xie, RStudio. 11/22/2014 @ UPenn, The Warren Center. An appetizer: Run the app below (your web browser may request access to your microphone). http://bit.ly/upenn-r-voice install.packages("shiny") Or just use this: https://yihui.shinyapps.io/voice/ Overview and Introduction. I know you click, click, Ctrl+C and Ctrl+V. But imagine you hear these words after you finished a project: Please do that again! (sorry we made a mistake in the data, want to change a parameter, and yada yada) http://nooooooooooooooo.com Basic ideas of dynamic documents: code + narratives = report, i.e. computing languages + authoring languages. We built a linear regression model.

Emacs Speaks Statistics (ESS): a Multi-Platform, Multi-Package Intelligent Environment for Statistical Analysis

Emacs Speaks Statistics (ESS): A multi-platform, multi-package intelligent environment for statistical analysis. A.J. Rossini, Richard M. Heiberger, Rodney A. Sparapani, Martin Mächler, Kurt Hornik ∗. Date: 2003/10/22 17:34:04. Revision: 1.255. Abstract: Computer programming is an important component of statistics research and data analysis. This skill is necessary for using sophisticated statistical packages as well as for writing custom software for data analysis. Emacs Speaks Statistics (ESS) provides an intelligent and consistent interface between the user and software. ESS interfaces with SAS, S-PLUS, R, and other statistics packages under the Unix, Microsoft Windows, and Apple Mac operating systems. ESS extends the Emacs text editor and uses its many features to streamline the creation and use of statistical software. ESS understands the syntax for each data analysis language it works with and provides consistent display and editing features across packages. ESS assists in the interactive or batch execution by the statistics packages of statements written in their languages. Some statistics packages can be run as a subprocess of Emacs, allowing the user to work directly from the editor and thereby retain a consistent and constant look-and-feel. We discuss how ESS works and how it increases statistical programming efficiency. Keywords: Data Analysis, Programming, S, SAS, S-PLUS, R, XLISPSTAT, STATA, BUGS, Open Source Software, Cross-platform User Interface. ∗ A.J. Rossini is Research Assistant Professor in the Department of Biostatistics, University of Washington and Joint Assistant Member at the Fred Hutchinson Cancer Research Center, Seattle, WA, USA mailto:[email protected]; Richard M.

A Command-Line Interface for Analysis of Molecular Dynamics Simulations

taurenmd: A command-line interface for analysis of Molecular Dynamics simulations. João M.C. Teixeira¹ ². ¹ Previous, Biomolecular NMR Laboratory, Organic Chemistry Section, Inorganic and Organic Chemistry Department, University of Barcelona, Baldiri Reixac 10-12, Barcelona 08028, Spain. ² Current, Program in Molecular Medicine, Hospital for Sick Children, Toronto, Ontario M5G 0A4, Canada. DOI: 10.21105/joss.02175. Editor: Richard Gowers. Reviewers: @amritagos, @luthaf. Submitted: 03 March 2020. Published: 02 June 2020. License: Authors of papers retain copyright and release the work under a Creative Commons Attribution 4.0 International License (CC BY 4.0). Summary: Molecular dynamics (MD) simulations of biological molecules have evolved drastically since its application was first demonstrated four decades ago (McCammon, Gelin, & Karplus, 1977) and, nowadays, simulation of systems comprising millions of atoms is possible due to the latest advances in computation and data storage capacity – and the scientific community’s interest is growing (Hospital, Battistini, Soliva, Gelpí, & Orozco, 2019). Academic groups develop most of the MD methods and software for MD data handling and analysis. The MD analysis libraries developed solely for the latter scope nicely address the needs of manipulating raw data and calculating structural parameters, such as: MDAnalysis (Gowers et al., 2016; Michaud-Agrawal, Denning, Woolf, & Beckstein, 2011); MDTraj (McGibbon et al., 2015); LOOS (Romo, Leioatts, & Grossfield, 2014); and PyTraj (Hai Nguyen, 2016; Roe & Cheatham, 2013), each with its advantages and drawbacks inherent to their implementation strategies. This diversity enriches the field with a panoply of strategies that the community can utilize.
The MD analysis software libraries widely distributed and adopted by the community share two main characteristics: 1) they are written in pure Python (Rossum, 1995), or provide a Python interface; and 2) they are libraries: highly versatile and powerful pieces of software that,

An Introduction to ESS + XEmacs for Windows Users of R

An Introduction to ESS + XEmacs for Windows Users of R. John Fox∗, McMaster University. Revised: 23 January 2005. 1 Why Use ESS + XEmacs? Emacs is a powerful and widely used programmer’s editor. One of the principal attractions of Emacs is its programmability: Emacs can be adapted to provide customized support for programming languages, including such features as delimiter (e.g., parenthesis) matching, syntax highlighting, debugging, and version control. The ESS (“Emacs Speaks Statistics”) package provides such customization for several common statistical computing packages and programming environments, including the S family of languages (R and various versions of S-PLUS), SAS, and Stata, among others. The current document introduces the use of ESS with R running under Microsoft Windows. For some Unix/Linux users, Emacs is more a way of life than an editor: It is possible to do almost everything from within Emacs, including, of course, programming, but also writing documents in mark-up languages such as HTML and LaTeX; reading and writing email; interacting with the Unix shell; web browsing; and so on. I expect that this kind of generalized use of Emacs will not be attractive to most Windows users, who will prefer to use familiar, and specialized, tools for most of these tasks. There are several versions of the Emacs editor, the two most common of which are GNU Emacs, a product of the Free Software Foundation, and XEmacs, an offshoot of GNU Emacs. Both of these implementations are free and are available for most computing platforms, including Windows. In my opinion, based only on limited experience, XEmacs offers certain advantages to Windows users, such as easier installation, and so I will explain the use of ESS under this version of the Emacs editor.¹ As I am writing this, there are several Windows programming editors that have been customized for use with R.

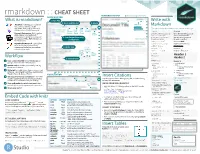

R Markdown Cheat Sheet I

R Markdown Cheat Sheet (learn more at rmarkdown.rstudio.com; rmarkdown 0.2.50, updated 8/14). 1. Workflow: R Markdown is a format for writing reproducible, dynamic reports with R. Use it to embed R code and results into slideshows, pdfs, html documents, Word files and more. To make a report: i. Open: open a file that uses the .Rmd extension. ii. Write: write content with the easy to use R Markdown syntax. iii. Embed: embed R code that creates output to include in the report. iv. Render: replace R code with its output and transform the report into a slideshow, pdf, html or ms Word file. [Figure: an .Rmd report containing the chunk {r} hist(co2), shown alongside the output formats Microsoft Word, Reveal.js, ioslides, and Beamer.] 2. Open File: Start by saving a text file with the extension .Rmd, or open an RStudio Rmd template. • In the menu bar, click File ▶ New File ▶ R Markdown… • A window will open. Select the class of output you would like to make with your .Rmd file • Select the specific type of output to make with the radio buttons (you can change this later) • Click OK. 3. Markdown: Next, write your report in plain text. Use markdown syntax to describe how to format text in the final report. Syntax: Plain text. End a line with two spaces to start a new paragraph. *italics* and _italics_. **bold** and __bold__. superscript^2^. ~~strikethrough~~. [link](www.rstudio.com). # Header 1, ## Header 2, ### Header 3, #### Header 4, ##### Header 5, ###### Header 6. 4.

ED 060 620: Introduction to Computer Programming Languages

DOCUMENT RESUME. ED 060 620; EM 009 626. AUTHOR: Bork, Alfred M. TITLE: Introduction to Computer Programming Languages. INSTITUTION: California Univ., Irvine. Physics Computer Development Project. SPONS AGENCY: National Science Foundation, Washington, D.C. PUB DATE: Dec 71. NOTE: 5p. JOURNAL CIT: JCST; P 12-16 December 1971. EDRS PRICE: MF-$0.65 HC-$3.29. DESCRIPTORS: Computer Assisted Instruction; Computer Science Education; *Guides; *Programing; *Programing Languages. ABSTRACT: A brief introduction to computer programing explains the basic grammar of computer language as well as fundamental computer techniques. What constitutes a computer program is made clear, then three simple kinds of statements basic to the computational computer are defined: assignment statements, input-output statements, and branching statements. A short description of several available computer languages is given along with an explanation of how the newcomer would make use of basic computer software. Finally, five different versions of a simple program (for solving the harmonic oscillator numerically) are given with comparison. (RB) U.S. DEPARTMENT OF HEALTH, EDUCATION & WELFARE, OFFICE OF EDUCATION. THIS DOCUMENT HAS BEEN REPRODUCED EXACTLY AS RECEIVED FROM THE PERSON OR ORGANIZATION ORIGINATING IT. POINTS OF VIEW OR OPINIONS STATED DO NOT NECESSARILY REPRESENT OFFICIAL OFFICE OF EDUCATION POSITION OR POLICY. By Alfred M. Bork. The digital computer is a powerful, calculating, logical device. ... and what the programmer wants it to do. For convenience, ...

HGC: a Fast Hierarchical Graph-Based Clustering Method

Package ‘HGC’. September 27, 2021. Type: Package. Title: A fast hierarchical graph-based clustering method. Version: 1.1.3. Description: HGC (short for Hierarchical Graph-based Clustering) is an R package for conducting hierarchical clustering on large-scale single-cell RNA-seq (scRNA-seq) data. The key idea is to construct a dendrogram of cells on their shared nearest neighbor (SNN) graph. HGC provides functions for building graphs and for conducting hierarchical clustering on the graph. The users with old R version could visit https://github.com/XuegongLab/HGC/tree/HGC4oldRVersion to get HGC package built for R 3.6. License: GPL-3. Encoding: UTF-8. SystemRequirements: C++11. Depends: R (>= 4.1.0). Imports: Rcpp (>= 1.0.0), RcppEigen (>= 0.3.2.0), Matrix, RANN, ape, dendextend, ggplot2, mclust, patchwork, dplyr, grDevices, methods, stats. LinkingTo: Rcpp, RcppEigen. Suggests: BiocStyle, rmarkdown, knitr, testthat (>= 3.0.0). VignetteBuilder: knitr. biocViews: SingleCell, Software, Clustering, RNASeq, GraphAndNetwork, DNASeq. Config/testthat/edition: 3. NeedsCompilation: yes. git_url: https://git.bioconductor.org/packages/HGC. git_branch: master. git_last_commit: 61622e7. git_last_commit_date: 2021-07-06. Date/Publication: 2021-09-27. Author: Zou Ziheng [aut], Hua Kui [aut], XGlab [cre, cph]. Maintainer: XGlab <[email protected]>. R topics documented: CKNN.Construction, FindClusteringTree, HGC.dendrogram, HGC.parameter, HGC.PlotARIs, HGC.PlotDendrogram, HGC.PlotParameter, KNN.Construction, MST.Construction, PMST.Construction, Pollen, RNN.Construction, SNN.Construction, Index. CKNN.Construction: Building Unweighted Continuous K Nearest Neighbor Graph. Description: This function builds a Continuous K Nearest Neighbor (CKNN) graph in the input feature space using Euclidean distance metric.

rmarkdown : : CHEAT SHEET

rmarkdown : : CHEAT SHEET. What is rmarkdown? .Rmd files · Develop your code and ideas side-by-side in a single document. Run code as individual chunks or as an entire document. Dynamic Documents · Knit together plots, tables, and results with narrative text. Render to a variety of formats like HTML, PDF, MS Word, or MS Powerpoint. Reproducible Research · Upload, link to, or attach your report to share. Anyone can read or run your code to reproduce your work. [Figure: SOURCE EDITOR and RENDERED OUTPUT panels, annotated with the steps 1. New File, 2. Embed Code, 3. Write Text, 4. Set Output Format(s) and Options, 5. Save and Render, 6. Share (publish to rpubs.com, shinyapps.io, RStudio Connect).] Write with Markdown: The syntax on the left renders as the output on the right. Plain text. End a line with two spaces to start a new paragraph. Also end with a backslash\ to make a new line. *italics* and **bold**. superscript^2^/subscript~2~. ~~strikethrough~~. escaped: \* \_ \\. endash: --, emdash: ---. # Header 1, ## Header 2, ... ###### Header 6. Workflow: 1. Open a new .Rmd file in the RStudio IDE by going to File > New File > R Markdown.

Statistics with Free and Open-Source Software

Statistics with Free and Open-Source Software. Wolfgang Viechtbauer, Maastricht University, http://www.wvbauer.com. Free and Open-Source Software • the four essential freedoms according to the FSF: • to run the program as you wish, for any purpose • to study how the program works, and change it so it does your computing as you wish • to redistribute copies so you can help your neighbor • to distribute copies of your modified versions to others • access to the source code is a precondition for this • think of ‘free’ as in ‘free speech’, not as in ‘free beer’ • maybe the better term is: ‘libre’. General Purpose Statistical Software • proprietary (the big ones): SPSS, SAS/JMP, Stata, Statistica, Minitab, MATLAB, Excel, … • FOSS (a selection): R, Python (NumPy/SciPy, statsmodels, pandas, …), PSPP, SOFA, Octave, LibreOffice Calc, Julia, … Popularity of Statistical Software • difficult to define/measure (job ads, articles, books, blogs/posts, surveys, forum activity, …) • maybe the most comprehensive comparison: http://r4stats.com/articles/popularity/ • for programming languages in general: TIOBE Index, PYPL, GitHut, Language Popularity Index, RedMonk Rankings, IEEE Spectrum, … • note that users of certain software may be heavily biased in their opinion. What is R? • R is a system for data manipulation, statistical and numerical analysis, and graphical display • simply put: a statistical programming language • freely available under the GNU General Public License (GPL) → open-source. History of S and R • … it began May 5, 1976 at: