A Practical Guide for Improving Transparency and Reproducibility in Neuroimaging Research Krzysztof J

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Management of Large Sets of Image Data Capture, Databases, Image Processing, Storage, Visualization Karol Kozak

Management of large sets of image data: Capture, Databases, Image Processing, Storage, Visualization. Karol Kozak. 1st edition © 2014 Karol Kozak & bookboon.com, ISBN 978-87-403-0726-9. Contents: 1 Digital image; 2 History of digital imaging; 3 Amount of produced images – is it danger?; 4 Digital image and privacy; 5 Digital cameras; 5.1 Methods of image capture; 6 Image formats; 7 Image Metadata – data about data; 8 Interactive visualization (IV); 9 Basic of image processing; 10 Image Processing software; 11 Image management and image databases; 12 Operating system (os) and images; 13 Graphics processing unit (GPU); 14 Storage and archive; 15 Images in different disciplines; 15.1 Microscopy; 15.2 Medical imaging; 15.3 Astronomical images; 15.4 Industrial imaging; 16 Selection of best digital images; References.

Modern Programming Languages CS508 Virtual University of Pakistan

Modern Programming Languages (CS508), Virtual University of Pakistan. © Copyright Virtual University of Pakistan. Table of contents: Course Objectives; Introduction and Historical Background (Lecture 1-8); Language Evaluation Criterion; An Introduction to SNOBOL (Lecture 9-12); Ada Programming Language: An Introduction (Lecture 13-17); LISP Programming Language: An Introduction (Lecture 18-21); PROLOG - Programming in Logic (Lecture 22-26); Java Programming Language (Lecture 27-30); C# Programming Language (Lecture 31-34); PHP – Personal Home Page PHP: Hypertext Preprocessor (Lecture 35-37); Modern Programming Languages-JavaScript

TANGO Device

School on TANGO Controls system: Basics of TANGO. Lorenzo Pivetta, Claudio Scafuri, Graziano Scalamera. http://www.tango-controls.org. School on TANGO Control System, Trieste, 4-8th July 2016. Prerequisites: to better understand the training, a background in the following topics is desirable: ● Programming language ● Object oriented programming ● Linux/UNIX operating system ● Networking ● Control systems. Outline: 1 - What is TANGO?; 2 - TANGO architecture; Language/OS/Compilers; Device hierarchy; CORBA and ZeroMQ; TANGO domains; TANGO device and device server; TANGO Database; Communication models; 3 - TANGO configuration/tools; Multicast; Jive; Polling; Starter/Astor; Events; Pogo; Alarms; TANGO installation; Groups; Client basics; TANGO ACL; Logging system; Historical DataBase; 4 – Examples; Test device. What is TANGO? In short: a control system framework, based on CORBA and ZMQ, with a centralized config. database. It is a software bus for distributed objects and provides a unified interface to all equipment, hiding how it is connected/managed. [Diagram: clients (CLI/GUI, scientific workspaces, native client applications, industrial SCADA, archiving system) reach device servers through C++, Java and Python bindings over the TANGO software bus.]

Reproducible Reports with Knitr and R Markdown

Reproducible Reports with knitr and R Markdown https://dl.dropboxusercontent.com/u/15335397/slides/2014-UPe... Yihui Xie, RStudio. 11/22/2014 @ UPenn, The Warren Center. An appetizer: run the app below (your web browser may request access to your microphone). http://bit.ly/upenn-r-voice install.packages("shiny") Or just use this: https://yihui.shinyapps.io/voice/ Overview and Introduction. I know you click, click, Ctrl+C and Ctrl+V. But imagine you hear these words after you finished a project: Please do that again! (sorry we made a mistake in the data, want to change a parameter, and yada yada) http://nooooooooooooooo.com Basic ideas of dynamic documents · code + narratives = report · i.e. computing languages + authoring languages. We built a linear regression model.

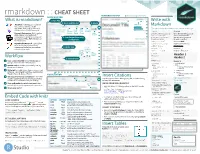

R Markdown Cheat Sheet I

R Markdown Cheat Sheet (learn more at rmarkdown.rstudio.com; rmarkdown 0.2.50, updated 8/14). 1. Workflow: R Markdown is a format for writing reproducible, dynamic reports with R. Use it to embed R code and results into slideshows, pdfs, html documents, Word files and more. To make a report: i. Open - Open a file that uses the .Rmd extension. ii. Write - Write content with the easy to use R Markdown syntax. iii. Embed - Embed R code that creates output to include in the report. iv. Render - Replace R code with its output and transform the report into a slideshow, pdf, html or ms Word file. 2. Open File: Start by saving a text file with the extension .Rmd, or open an RStudio Rmd template. • In the menu bar, click File ▶ New File ▶ R Markdown… • A window will open. Select the class of output you would like to make with your .Rmd file • Select the specific type of output to make with the radio buttons (you can change this later) • Click OK. 3. Markdown: Next, write your report in plain text. Use markdown syntax to describe how to format text in the final report. Syntax: Plain text; End a line with two spaces to start a new paragraph; *italics* and _italics_; **bold** and __bold__; superscript^2^; ~~strikethrough~~; [link](www.rstudio.com); # Header 1; ## Header 2; ### Header 3; #### Header 4; ##### Header 5; ###### Header 6.

Automated Regional Behavioral Analysis for Human Brain Images

METHODS ARTICLE, published: 28 August 2012, NEUROINFORMATICS, doi: 10.3389/fninf.2012.00023. Automated regional behavioral analysis for human brain images. Jack L. Lancaster 1*, Angela R. Laird 1, Simon B. Eickhoff 2,3, Michael J. Martinez 1, P. Mickle Fox 1 and Peter T. Fox 1. 1 Research Imaging Institute, The University of Texas Health Science Center at San Antonio, San Antonio, TX, USA; 2 Institute of Neuroscience and Medicine (INM-1), Research Center, Jülich, Germany; 3 Institute for Clinical Neuroscience and Medical Psychology, Heinrich-Heine University, Düsseldorf, Germany. Abstract: Behavioral categories of functional imaging experiments along with standardized brain coordinates of associated activations were used to develop a method to automate regional behavioral analysis of human brain images. Behavioral and coordinate data were taken from the BrainMap database (http://www.brainmap.org/), which documents over 20 years of published functional brain imaging studies. A brain region of interest (ROI) for behavioral analysis can be defined in functional images, anatomical images or brain atlases, if images are spatially normalized to MNI or Talairach standards. Results of behavioral analysis are presented for each of BrainMap’s 51 behavioral sub-domains spanning five behavioral domains (Action, Cognition, Emotion, Interoception, and Perception). For each behavioral sub-domain the fraction of coordinates falling within the ROI was computed and compared with the fraction expected if coordinates for the behavior were not clustered, i.e., uniformly distributed. Edited by: Robert W. Williams, University of Tennessee Health Science Center, USA. Reviewed by: Glenn D. Rosen, Beth Israel Deaconess Medical Center, USA; Khyobeni Mozhui, University of Tennessee Health Science Center, USA. *Correspondence: Jack L. Lancaster, Research Imaging Institute, The University of Texas Health Science Center at San

HGC: a Fast Hierarchical Graph-Based Clustering Method

Package ‘HGC’, September 27, 2021. Type: Package. Title: A fast hierarchical graph-based clustering method. Version: 1.1.3. Description: HGC (short for Hierarchical Graph-based Clustering) is an R package for conducting hierarchical clustering on large-scale single-cell RNA-seq (scRNA-seq) data. The key idea is to construct a dendrogram of cells on their shared nearest neighbor (SNN) graph. HGC provides functions for building graphs and for conducting hierarchical clustering on the graph. Users with an older R version can visit https://github.com/XuegongLab/HGC/tree/HGC4oldRVersion to get the HGC package built for R 3.6. License: GPL-3. Encoding: UTF-8. SystemRequirements: C++11. Depends: R (>= 4.1.0). Imports: Rcpp (>= 1.0.0), RcppEigen (>= 0.3.2.0), Matrix, RANN, ape, dendextend, ggplot2, mclust, patchwork, dplyr, grDevices, methods, stats. LinkingTo: Rcpp, RcppEigen. Suggests: BiocStyle, rmarkdown, knitr, testthat (>= 3.0.0). VignetteBuilder: knitr. biocViews: SingleCell, Software, Clustering, RNASeq, GraphAndNetwork, DNASeq. Config/testthat/edition: 3. NeedsCompilation: yes. git_url: https://git.bioconductor.org/packages/HGC. git_branch: master. git_last_commit: 61622e7. git_last_commit_date: 2021-07-06. Date/Publication: 2021-09-27. Author: Zou Ziheng [aut], Hua Kui [aut], XGlab [cre, cph]. Maintainer: XGlab <[email protected]>. R topics documented: CKNN.Construction, FindClusteringTree, HGC.dendrogram, HGC.parameter, HGC.PlotARIs, HGC.PlotDendrogram, HGC.PlotParameter, KNN.Construction, MST.Construction, PMST.Construction, Pollen, RNN.Construction, SNN.Construction, Index. CKNN.Construction: Building Unweighted Continuous K Nearest Neighbor Graph. Description: This function builds a Continuous K Nearest Neighbor (CKNN) graph in the input feature space using the Euclidean distance metric.

rmarkdown :: Cheat Sheet

rmarkdown :: CHEAT SHEET. What is rmarkdown? .Rmd files · Develop your code and ideas side-by-side in a single document. Run code as individual chunks or as an entire document. Dynamic Documents · Knit together plots, tables, and results with narrative text. Render to a variety of formats like HTML, PDF, MS Word, or MS Powerpoint. Reproducible Research · Upload, link to, or attach your report to share. Anyone can read or run your code to reproduce your work. Source editor steps: 1. New File; 2. Embed Code; 3. Write Text; 4. Set Output Format(s) and Options; 5. Save and Render; 6. Share (publish to rpubs.com, shinyapps.io, RStudio Connect). Write with Markdown: the syntax on the left renders as the output on the right. Plain text. End a line with two spaces to start a new paragraph. Also end with a backslash\ to make a new line. *italics* and **bold**; superscript^2^/subscript~2~; ~~strikethrough~~; escaped: \* \_ \\; endash: --, emdash: ---; # Header 1; ## Header 2; ...; ###### Header 6. Workflow: 1. Open a new .Rmd file in the RStudio IDE by going to File > New File > R Markdown.

Statistics with Free and Open-Source Software

Statistics with Free and Open-Source Software. Wolfgang Viechtbauer, Maastricht University, http://www.wvbauer.com. Free and Open-Source Software • the four essential freedoms according to the FSF: • to run the program as you wish, for any purpose • to study how the program works, and change it so it does your computing as you wish • to redistribute copies so you can help your neighbor • to distribute copies of your modified versions to others • access to the source code is a precondition for this • think of ‘free’ as in ‘free speech’, not as in ‘free beer’ • maybe the better term is: ‘libre’. General Purpose Statistical Software • proprietary (the big ones): SPSS, SAS/JMP, Stata, Statistica, Minitab, MATLAB, Excel, … • FOSS (a selection): R, Python (NumPy/SciPy, statsmodels, pandas, …), PSPP, SOFA, Octave, LibreOffice Calc, Julia, … Popularity of Statistical Software • difficult to define/measure (job ads, articles, books, blogs/posts, surveys, forum activity, …) • maybe the most comprehensive comparison: http://r4stats.com/articles/popularity/ • for programming languages in general: TIOBE Index, PYPL, GitHut, Language Popularity Index, RedMonk Rankings, IEEE Spectrum, … • note that users of certain software may be heavily biased in their opinion. What is R? • R is a system for data manipulation, statistical and numerical analysis, and graphical display • simply put: a statistical programming language • freely available under the GNU General Public License (GPL) → open-source. History of S and R • … it began May 5, 1976 at:

Writing Reproducible Reports Knitr with R Markdown

Writing reproducible reports: knitr with R Markdown. Karl Broman, Biostatistics & Medical Informatics, UW–Madison. kbroman.org, github.com/kbroman, @kwbroman. Course web: kbroman.org/AdvData. How many simulation replicates? • To estimate a p-value? • To estimate some other quantity? • To estimate power? Data analysis reports: • Figures/tables + email • Static Word document • LaTeX + R → PDF • R Markdown = knitr + Markdown → Web page. What if the data change? What if you used the wrong version of the data? rmarkdown.rstudio.com. knitr in a knutshell: kbroman.org/knitr_knutshell. knitr code chunks. Input to knitr: We see that this is an intercross with `r nind(sug)` individuals. There are `r nphe(sug)` phenotypes, and genotype data at `r totmar(sug)` markers across the `r nchr(sug)` autosomes. The genotype data is quite complete. ```{r summary_plot, fig.height=8} plot(sug) ``` Output from knitr: We see that this is an intercross with 163 individuals. There are 6 phenotypes, and genotype data at 93 markers across the 19 autosomes. The genotype data is quite complete. ```r plot(sug) ``` html: <!DOCTYPE html> <html> <head> <meta charset="utf-8"/> <title>Example html file</title> </head> <body> <h1>Markdown example</h1> <p>Use a bit of <strong>bold</strong> or <em>italics</em>.

Knitr with R Markdown

Writing reproducible reports: knitr with R Markdown. Karl Broman, Biostatistics & Medical Informatics, UW–Madison. kbroman.org, github.com/kbroman, @kwbroman. Course web: kbroman.org/Tools4RR. knitr in a knutshell: kbroman.org/knitr_knutshell. Data analysis reports: • Figures/tables + email • Static LaTeX or Word document • knitr/Sweave + LaTeX → PDF • knitr + Markdown → Web page. What if the data change? What if you used the wrong version of the data? knitr code chunks. Input to knitr: We see that this is an intercross with `r nind(sug)` individuals. There are `r nphe(sug)` phenotypes, and genotype data at `r totmar(sug)` markers across the `r nchr(sug)` autosomes. The genotype data is quite complete. ```{r summary_plot, fig.height=8} plot(sug) ``` Output from knitr: We see that this is an intercross with 163 individuals. There are 6 phenotypes, and genotype data at 93 markers across the 19 autosomes. The genotype data is quite complete. ```r plot(sug) ``` html: <!DOCTYPE html> <html> <head> <meta charset="utf-8"/> <title>Example html file</title> </head> <body> <h1>Markdown example</h1> <p>Use a bit of <strong>bold</strong> or <em>italics</em>. Use backticks to indicate <code>code</code> that will be rendered in monospace.</p> <ul> <li>This is part of a list</li> <li>another item</li> </ul> </body> </html> CSS: ul,ol { margin: 0 0 0 35px; } a { color: purple; text-decoration: none; background-color: transparent; } a:hover { color: purple; background: #CAFFFF; } Markdown: # Markdown example Use a bit of **bold** or _italics_.

R Programming for Data Science

R Programming for Data Science. Roger D. Peng. This book is for sale at http://leanpub.com/rprogramming. This version was published on 2015-07-20. This is a Leanpub book. Leanpub empowers authors and publishers with the Lean Publishing process. Lean Publishing is the act of publishing an in-progress ebook using lightweight tools and many iterations to get reader feedback, pivot until you have the right book and build traction once you do. ©2014 - 2015 Roger D. Peng. Also by Roger D. Peng: Exploratory Data Analysis with R. Contents: Preface; History and Overview of R (What is R?; What is S?; The S Philosophy; Back to R; Basic Features of R; Free Software; Design of the R System; Limitations of R; R Resources); Getting Started with R (Installation; Getting started with the R interface); R Nuts and Bolts (Entering Input; Evaluation; ...