Audio Oriented UI Components for the Web Platform Victor Saiz, Benjamin Matuszewski, Samuel Goldszmidt

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Bloomberg Professional Services

Software Compatibility Matrix

English. 16 July 2020. Version: 24.0

This document should be used in conjunction with the Bloomberg® Workstation Requirements and Bloomberg® Transport and Security document. Please see our website or BBPC<GO> on the Terminal for more information.

The information provided in this document outlines the Microsoft® platforms that are compatible with the Bloomberg Terminal® and its associated software. These requirements should be taken into consideration when determining the overall suitability of the users’ workstation to successfully utilize all available functionality. Please keep the latest Bloomberg specifications in mind when planning workstation upgrades.

Table columns: Product; Minimum Requirements; Comments; Extended support end date* (Microsoft, Bloomberg).

Operating Systems

Windows 7 (Home Premium, Ultimate, Professional, Enterprise)
- Minimum requirements: Service Pack 1, 64-Bit only
- Comments: *Only 64-Bit versions are supported. *Windows 7 Starter Edition is not supported. *Customers installing Bloomberg Terminal® software newly must install Microsoft .NET Framework 4.0 or higher.
- Extended support end date: Microsoft 14-Jan-20, Bloomberg 31-Jan-22

Windows 8/8.1 (Pro, Enterprise)
- Comments: *Only 64-Bit versions are supported. *Customers must install Microsoft Silverlight in order to run multimedia functions and the BMAP function.
- Extended support end date: Microsoft 10-Jan-23, Bloomberg *TBA*

Windows 10 (Home, Pro, Education, Enterprise)
- Comments: *Only 64-Bit versions are supported. *Windows 10 Mobile / Mobile Enterprise are not supported. *Windows 10 in S mode is not supported. *Customers must install Microsoft Silverlight in order to run multimedia functions and the BMAP function.
- Extended support end date: Microsoft *TBA*, Bloomberg *TBA*

Microsoft Office Versions

Office 2010
- Minimum requirements: Service Pack 2, Version 14.0.x
- Comments: *Office Starter 2010 is not supported.
- Extended support end date: Microsoft 13-Oct-20, Bloomberg 30-Apr-21

Office Professional 2013
- Minimum requirements: Service Pack 1, Version 15.0.x
- Extended support end date: Microsoft 11-Apr-23, Bloomberg *TBA*

Office 365
- Minimum requirements: Service Pack 1
- Comments: *Office 365 ProPlus must be installed on the local computer.

HTML5 and the Open Web Platform

HTML5 and the Open Web Platform

Stuttgart, 28 May 2013

Dave Raggett <[email protected]>

The Open Web Platform

What is the W3C?
● International community where Members, a full-time staff and the public collaborate to develop Web standards
● Led by Web inventor Tim Berners-Lee and CEO Jeff Jaffe
● Hosted by MIT, ERCIM, Keio and Beihang
● Community Groups open to all at no fee
● Business Groups get more staff support
● Technical Working Groups
● Develop specs into W3C Recommendations
● Participants from W3C Members and invited experts
● W3C Patent process for royalty free specifications

Who's involved
● W3C has 377 Members as of 11 May 2013
● To name just a few
● ACCESS, Adobe, Akamai, Apple, Baidu, BBC, Blackberry (RIM), BT, Canon, Deutsche Telekom, eBay, Facebook, France Telecom, Fujitsu, Google, Hitachi, HP, Huawei, IBM, Intel, LG, Microsoft, Mozilla, NASA, NEC, NTT DoCoMo, Nuance, Opera Software, Oracle, Panasonic, Samsung, Siemens, Sony, Telefonica, Tencent, Vodafone, Yandex, …
● Full list at http://www.w3.org/Consortium/Member/List

Open Web Platform
● Communicate with HTTP, Web Sockets, XML and JSON
● Markup with HTML5
● Style sheets with CSS
● Rich graphics
● JPEG, PNG, GIF
● Canvas and SVG
● Audio and Video
● Scripting with JavaScript
● Expanding range of APIs
● Designed for the World's languages
● Accessibility with support for assistive technology

Hosted and Packaged Apps
● Hosted Web apps can be directly loaded from a website
● Packaged Web apps can be locally installed on a device and run without the need for access to a web server
● Zipped file containing all the necessary resources
● Manifest file with app meta-data
– Old work on XML based manifests (Web Widgets)
– New work on JSON based manifests
● http://w3c.github.io/manifest/
● Pointer to app's cache manifest
● List of required features and permissions needed to run correctly
● Runtime and security model for web apps
● Privileged apps must be signed by installation origin's private key

HTML5 Markup
● Extensive range of features
● Structural, e.g.

Platform Independent Web Application Modeling and Development with Netsilon Pierre-Alain Muller, Philippe Studer, Frédéric Fondement, Jean Bézivin

Platform independent Web application modeling and development with Netsilon

Pierre-Alain Muller, Philippe Studer, Frédéric Fondement, Jean Bézivin

To cite this version: Pierre-Alain Muller, Philippe Studer, Frédéric Fondement, Jean Bézivin. Platform independent Web application modeling and development with Netsilon. Software and Systems Modeling, Springer Verlag, 2005, 4 (4), pp.424-442. 10.1007/s10270-005-0091-4. inria-00120216

HAL Id: inria-00120216

https://hal.inria.fr/inria-00120216

Submitted on 21 Feb 2007

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

PIERRE-ALAIN MULLER, INRIA Rennes, Campus de Beaulieu, Avenue du Général Leclerc, 35042 Rennes, France, pa.muller@uha.fr

PHILIPPE STUDER, ESSAIM/MIPS, Université de Haute-Alsace, 12 rue des Frères Lumière, 68093 Mulhouse, France, ph.studer@uha.fr

FREDERIC FONDEMENT, EPFL / IC / UP-LGL, INJ - Station 14, CH-1015 Lausanne EPFL, Switzerland, frederic.fondement@epfl.ch

JEAN BEZIVIN, ATLAS Group, INRIA & LINA, Université de Nantes, 2, rue de la Houssinière, BP 92208, 44322 Nantes, France, jean.bezivin@lina.univ-nantes.fr

Abstract. This paper discusses platform independent Web application modeling and development in the context of model-driven engineering.

Seamless Offloading of Web App Computations from Mobile Device to Edge Clouds Via HTML5 Web Worker Migration

Seamless Offloading of Web App Computations From Mobile Device to Edge Clouds via HTML5 Web Worker Migration

Hyuk Jin Jeong, Seoul National University

SoCC 2019

Virtual Machine & Optimization Laboratory, Department of Electrical and Computer Engineering, Seoul National University

Computation Offloading
• Mobile clients have limited hardware resources
• Require computation offloading to servers
• E.g., cloud gaming or cloud ML services for mobile
• Traditional cloud servers are located far from clients
• Suffer from high latency
• 60~70 ms (RTT from our lab to the closest Google Cloud DC)
• Latency<50 ms is preferred for time-critical games [Kjetil Raaen, NIK 2014]

[Figure: cloud data center and end device]

Edge Cloud
• Edge servers are located at the edge of the network
• Provide ultra low (~a few ms) latency
• What if a user moves?

[Figure: mobile device, WiFi APs, small cells, edge clouds, central clouds]

A Major Issue: User Mobility
• How to seamlessly provide a service when a user moves to a different server?
• Resume the service at the new server
• What if execution state (e.g., game data) remains on the previous server?
• This is a challenging problem
• Edge computing community has struggled to solve it
• VM Handoff [Ha et al. SEC’ 17], Container Migration [Lele Ma et al. SEC’ 17], Serverless Edge Computing [Claudio Cicconetti et al. PerCom’ 19]
• We propose a new approach for web apps based on app migration techniques

Outline
• Motivation
• Proposed system
• WebAssembly

RIA Development with Silverlight &

RIA Development with Silverlight & WPF

James Chittenden, UX Evangelist, Public Sector

Agenda: Why RIA? WPF. Silverlight.

“A line-of-business application is one of the set of critical computer applications that are vital to running an enterprise” – Wikipedia, http://en.wikipedia.org/wiki/Line_of_business

• Deployable & Maintainable
• We don’t have a designer
• Employees don’t care what it looks like
• If it ain’t broke…
• Return on investment
• Don’t have the budget

“Design matters. But design is not about decoration or about ornamentation. Design is about making communication as easy and clear for the viewer as possible.” – Garr Reynolds, http://www.presentationzen.com

1. Simplicity
2. Visibility
3. Metaphor
4. Natural Mappings
5. Constraints
6. Error Prevention
7. Consistency

Microsoft .NET Application Platform (WPF, Silverlight): Deliver applications across the UX Continuum. Consistent Tools & Application Model: Design, Develop, Deploy. User Experience Continuum: Browser, Client.

• Unify UI, media, graphics and documents
• Take full advantage of the graphical power of the PC
• Easy, low-impact deployment options
• Integration with Office and Windows
• Compatibility with Silverlight for web and devices

Key WPF Platform Concepts: Element Composition, Lookless Controls, XAML, Composited Visuals, Data Binding

XAML: Declarative Programming for Windows
• Markup – Build applications in simple declarative statements
• Code and content are separate – Streamline collaboration between designers and developers
• Easy for tools to consume and generate

<Button Width="100">OK

Button b1 = new Button();

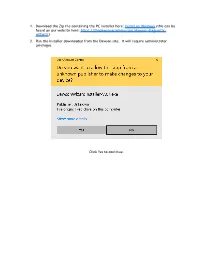

Dawson Install for Windows Users-V01

1. Download the Zip file containing the PC installer here: Install on Windows (this can be found on our website here: https://thedawsonacademy.com/dawson-diagnostic-wizard/)

2. Run the installer downloaded from the Dawson site. It will require administrator privileges. Click Yes to continue.

3. You will see the installer unpacking files and then a message box letting you know that the installation will proceed.

4. Next, Microsoft Silverlight will be installed. Silverlight is the engine that runs the Dawson Wizard App. Click OK. Uncheck the Bing and MSN boxes unless you want these features, and click “Install now”. Note that any box that is left checked will have that feature enabled for ALL the browsers installed on your computer. Silverlight will proceed with the install and you will see this window. Click “Close” to continue. If Silverlight requires any additional configuration, you will see this window, which will automatically close and take you to the next step when completed.

5. The next message window lets you know that the Dawson Wizard App installation step is about to proceed. After you click OK, a browser window will open where you will log into your account, and the Dawson Wizard App will be downloaded and installed on your computer. Enter your credentials and wait for the download to finish. Click “Install Application” and you will get another Install window. Click “Install”.

6. Once the install finishes, you’ll see the Dawson Wizard App running and a message box saying that the install was successful. Click OK on the message box and log into the Dawson Wizard App with your credentials.

The Viability of the Web Browser As a Computer Music Platform

The Viability of the Web Browser as a Computer Music Platform

Lonce Wyse and Srikumar Subramanian

Communications and New Media Department, National University of Singapore, Blk AS6, #03-41, 11 Computing Drive, Singapore 117416

[email protected] [email protected]

Abstract: The computer music community has historically pushed the boundaries of technologies for music-making, using and developing cutting-edge computing, communication, and interfaces in a wide variety of creative practices to meet exacting standards of quality. Several separate systems and protocols have been developed to serve this community, such as Max/MSP and Pd for synthesis and teaching, JackTrip for networked audio, MIDI/OSC for communication, as well as Max/MSP and TouchOSC for interface design, to name a few. With the still-nascent Web Audio API standard and related technologies, we are now, more than ever, seeing an increase in these capabilities and their integration in a single ubiquitous platform: the Web browser. In this article, we examine the suitability of the Web browser as a computer music platform in critical aspects of audio synthesis, timing, I/O, and communication. We focus on the new Web Audio API and situate it in the context of associated technologies to understand how well they together can be expected to meet the musical, computational, and development needs of the computer music community. We identify timing and extensibility as two key areas that still need work in order to meet those needs.

To date, despite the work of a few intrepid musical explorers, the Web browser platform has not been widely considered as a viable platform for the development of computer music.

Why would musicians care about working in the browser, a platform not specifically designed for computer music? Max/MSP is an example of a

Internet Explorer Users Are Required to Add the Portal URL to Trusted Sites

CLA Client Portal Browser and Silverlight FAQs

1. Question: I am receiving an “Error 500” when clicking the link to access the CLA Document Portal.

Resolution: Verify with your IT department that the portal is not blocked by any internal monitoring or protection applications.

2. Question: How do I know if my computer has Microsoft Silverlight installed?

Resolution: The first time you try to log in to the portal, you will be prompted to install Silverlight from Microsoft’s website if you don’t already have it installed. The installation typically takes less than one minute and is completely safe. http://www.microsoft.com/getsilverlight/Get-Started/Install/Default.aspx

If you cannot, or prefer not to, install Silverlight on your machine, a simplified version of the document portal that does not require Silverlight is available. Click “Take me to the non-Silverlight login” on the CLA Document Portal page (www.claconnect.com/docportal).

3. Question: I cannot access the CLA Document Portal. (Server error/Page not found)

Resolution: Check that you are using a Microsoft Silverlight 4 compatible browser on your PC or Mac. A complete list of browsers and operating systems that support Silverlight 4 can be found at http://www.microsoft.com/getsilverlight/locale/en-us/html/installation-win-SL4.html

Please note: Internet Explorer users are required to add the portal URL to Trusted Sites.

Adding to Trusted Sites (Internet Explorer settings)

1. Open Internet Explorer and browse to https://portal.cchaxcess.com/Portal/.

2. In Internet Explorer, select Tools / Internet Options; then select the Security tab and click Trusted Sites and then Sites.

Xactimate 28 Network Installation Guide

Network Installation Guide

© 2011-2013 by Xactware. All rights reserved. Xactware, Xactimate, Xactimate Online, XactNet, and/or other Xactware products referenced herein are either trademarks or registered trademarks of Xactware Solutions, Inc. Other product and company names mentioned herein may be the trademarks of their respective owners. (031213) www.xactware.com

Xactimate version 28 Network Installation Guide

INTRODUCTION

Like all networked software applications, Xactimate must be installed on a workstation connected to a network that has been properly set up and mapped to a shared drive (this document uses the X drive as an example). Xactware recommends that a certified network technician set up and administer the network. Using the physical disc is recommended for a network installation of Xactimate. For instructions about how to set up a network installation of Xactimate via the downloadable version, visit the eService Center at https://eservice.xactware.com/apps/esc/

SYSTEM REQUIREMENTS - XACTIMATE V28.0 NETWORK INSTALLATION

Table columns: Specifications; Minimum Requirements; Recommended Requirements.

- Processor: minimum Single Core Processor 1.5GHz; recommended Dual Core Processors
- Operating System: Windows 8 (32 bit, 64 bit); Windows 7 (32 bit, 64 bit); Windows Vista (32 bit, 64 bit Business, 64 bit Ultimate); Windows XP Service Pack 3. *Windows RT is not supported.
- Video Card: minimum Open GL 2.0 Compatible with 128 MB of VRAM and Latest Drivers; recommended Open GL 2.0 Compatible with 512 MB of VRAM and Latest Drivers

In the United States District Court for the District of Delaware: ViaTech Technologies, Inc., Plaintiff, Case No. 1:17Cv5

Case 1:17-cv-00570-RGA Document 11 Filed 10/01/17 Page 1 of 37 PageID #: 145

IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE

VIATECH TECHNOLOGIES, INC., Plaintiff, v. MICROSOFT CORPORATION, Defendant.

Case No. 1:17cv570

DEMAND FOR JURY TRIAL

AMENDED COMPLAINT FOR PATENT INFRINGEMENT

Plaintiff ViaTech Technologies, Inc. (“plaintiff” or “ViaTech”), through its attorneys, for its complaint against defendant Microsoft Corporation (“defendant” or “Microsoft”), alleges as follows:

THE PARTIES

1. Plaintiff is a corporation organized and existing under the laws of the State of Delaware having a place of business at 1136 Ashbourne Circle, Trinity, FL 34655-7103.

2. Defendant Microsoft is a corporation organized and existing under the laws of the State of Washington having its principal place of business at One Microsoft Way, Redmond, WA 98052.

JURISDICTION AND VENUE

3. This action arises under the patent laws of the United States, Title 35 of the United States Code. Subject matter jurisdiction is proper in this Court pursuant to 28 U.S.C. §§ 1331 and 1338(a).

4. Defendant Microsoft is subject to this Court’s specific and general personal jurisdiction consistent with due process and the Delaware Long Arm Statute, 10 Del. C. § 3104.

5. Venue in this Judicial District is proper under 28 U.S.C. § 1400(b).

6. Microsoft is registered to do business in Delaware, and has appointed Corporation Service Company, 2711 Centerville Rd., Suite 400, Wilmington, DE 19808, as its registered agent, and either directly, or indirectly through its distribution network, has transacted and/or continues to transact business in Delaware, and has regularly solicited and continues to regularly solicit business in Delaware.

Microsoft Silverlight Photography Framework: Comparing Component Based Designs in Adobe Flex and Microsoft Silverlight

Grand Valley State University, ScholarWorks@GVSU

Masters Projects, Graduate Research and Creative Practice

8-3-2009

Microsoft Silverlight Photography Framework: Comparing Component Based Designs in Adobe Flex and Microsoft Silverlight

David Roossien, Grand Valley State University, [email protected]

Follow this and additional works at: https://scholarworks.gvsu.edu/gradprojects

Part of the Computer and Systems Architecture Commons

ScholarWorks Citation: Roossien, David, "Microsoft Silverlight Photography Framework: Comparing Component Based Designs in Adobe Flex and Microsoft Silverlight" (2009). Masters Projects. 1. https://scholarworks.gvsu.edu/gradprojects/1

This Dissertation is brought to you for free and open access by the Graduate Research and Creative Practice at ScholarWorks@GVSU. It has been accepted for inclusion in Masters Projects by an authorized administrator of ScholarWorks@GVSU. For more information, please contact [email protected].

Microsoft Silverlight Photography Framework: Comparing Component Based Designs in Adobe Flex and Microsoft Silverlight

David Roossien, August 3, 2009

Grand Valley State University, CS693 Masters Project, for Professor Robert Adams

Table of Contents
Introduction
Rich Internet Applications
Goals of Adobe Flex

Document Object Model

Document Object Model

Copyright © 1999 - 2020 Ellis Horowitz

What is DOM
• The Document Object Model (DOM) is a programming interface for XML documents.
– It defines the way an XML document can be accessed and manipulated
– this includes HTML documents
• The XML DOM is designed to be used with any programming language and any operating system.
• The DOM represents an XML file as a tree
– The documentElement is the top-level of the tree. This element has one or many childNodes that represent the branches of the tree.

Version History
• DOM Level 1 concentrates on HTML and XML document models. It contains functionality for document navigation and manipulation. See:
– http://www.w3.org/DOM/
• DOM Level 2 adds a stylesheet object model to DOM Level 1, defines functionality for manipulating the style information attached to a document, and defines an event model and provides support for XML namespaces. The DOM Level 2 specification is a set of 6 released W3C Recommendations, see:
– https://www.w3.org/DOM/DOMTR#dom2
• DOM Level 3 consists of 3 different specifications (Recommendations)
– DOM Level 3 Core, Load and Save, Validation, http://www.w3.org/TR/DOM-Level-3/
• DOM Level 4 (aka DOM4) consists of 1 specification (Recommendation)
– W3C DOM4, http://www.w3.org/TR/domcore/
• Consolidates previous specifications, and moves some to HTML5
• See All DOM Technical Reports at:
– https://www.w3.org/DOM/DOMTR
• Now DOM specification is DOM Living Standard (WHATWG), see:
– https://dom.spec.whatwg.org
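As a rough illustration of the tree view described in this excerpt (a top-level documentElement whose childNodes form the branches), the following sketch uses Python's standard-library `xml.dom.minidom`. The sample XML string and the helper name `list_children` are invented for this example and do not come from the slides.

```python
from xml.dom import minidom

# Hypothetical sample document, made up for illustration only.
XML_SOURCE = (
    "<library>"
    "<book id='b1'>DOM Basics</book>"
    "<book id='b2'>XML in Practice</book>"
    "</library>"
)


def list_children(xml_text):
    """Parse XML text and return the root tag name plus its element children.

    Each child is reported as (tag name, value of its 'id' attribute, text).
    """
    doc = minidom.parseString(xml_text)
    root = doc.documentElement  # top level of the DOM tree
    children = []
    for node in root.childNodes:  # branches directly under the root
        if node.nodeType == node.ELEMENT_NODE:
            text = node.firstChild.data if node.firstChild else ""
            children.append((node.tagName, node.getAttribute("id"), text))
    return root.tagName, children


root_name, books = list_children(XML_SOURCE)
print(root_name)
for tag, book_id, title in books:
    print(tag, book_id, title)
```

The same navigation pattern (start at `documentElement`, walk `childNodes`) is what DOM Level 1 calls document navigation; other DOM implementations expose equivalent properties, though names and details may differ by language binding.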