Report on the Project PN-III-P4-ID-PCE-2016-0842 ATCO, "Tehnici Avansate în Optimizarea Combinatorie și Complexitatea Computațională" (Advanced Techniques in Combinatorial Optimization and Computational Complexity)

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Sorting Algorithms in the C++ Programming Language (Algoritmi za sortiranje u programskom jeziku C++), Final Thesis

University of Rijeka (Sveučilište u Rijeci), Faculty of Humanities and Social Sciences in Rijeka (Filozofski fakultet u Rijeci), Department of Polytechnics. Sorting Algorithms in the C++ Programming Language, final thesis. Thesis mentor: doc. dr. sc. Marko Maliković. Student: Alen Jakus. Rijeka, 2016.

University of Rijeka, Faculty of Humanities and Social Sciences, Department of Polytechnics, Rijeka, Sveučilišna avenija 4. Committee for Final and Graduate Examinations. Rijeka, 7 April 2016.

FINAL THESIS ASSIGNMENT (university undergraduate study programme in polytechnics). Candidate: Alen Jakus. Assignment: Sorting Algorithms in the C++ Programming Language. The solution must cover the following:
1. Give an overview of sorting algorithms.
2. Describe the selected sorting algorithms.
3. Illustrate the operation of the selected sorting algorithms with diagrams.
4. Describe the basic properties of the C++ programming language.
5. Describe in detail the data types, derived data forms, statements, and other elements of C++ that are used in the solutions of the selected problems.
6. Describe the solutions obtained from the written programs.
7. Provide the complete code in C++.

The final thesis must comply with the Regulations on the Graduate Thesis and the Guidelines for Preparing the Final Thesis of the university undergraduate programme. Assignment handed to the candidate: 7 April 2016. Deadline for submitting the final thesis: ____________________ Date of submission of the final thesis: ____________________ Assigned by: Doc. dr. sc. Marko Maliković.

Faculty of Humanities and Social Sciences in Rijeka, Department of Polytechnics. Rijeka, 7 April 2016. ASSIGNMENT FOR THE FINAL THESIS (university undergraduate study programme in polytechnics). Candidate: Alen Jakus. Title of the final thesis: Sorting Algorithms in the C++ Programming Language. Short description of the assignment: Give an overview of sorting algorithms. Describe the selected sorting algorithms.

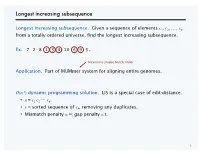

Longest Increasing Subsequence

Longest increasing subsequence. Given a sequence of elements c1, c2, …, cn from a totally ordered universe, find the longest increasing subsequence. Ex. 7 2 8 1 3 4 10 6 9 5.

Application. Maximum Unique Match finder: part of the MUMmer system for aligning entire genomes.

O(n²) dynamic programming solution. LIS is a special case of edit distance.
・x = c1 c2 ⋯ cn.
・y = sorted sequence of ck, removing any duplicates.
・Mismatch penalty = ∞; gap penalty = 1.

Patience solitaire. Deal cards c1, c2, …, cn into piles according to two rules:
・Can't place a higher-valued card onto a lower-valued card.
・Can form a new pile and put a card onto it.
Goal. Form as few piles as possible.

Patience: greedy algorithm. Place each card on the leftmost pile that fits. Observation. At any stage during the greedy algorithm, the top cards of the piles increase from left to right.

Patience-LIS: weak duality. In any legal game of patience, the number of piles ≥ length of any increasing subsequence. Pf.
・Cards within a pile form a decreasing subsequence.
・Any increasing sequence can use at most one card from each pile. ▪

Patience-LIS: strong duality. Theorem. [Hammersley 1972] Min number of piles = max length of an IS; moreover, the greedy algorithm finds both. Pf. Each card maintains a pointer to the top card in the previous pile at time of insertion.
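The greedy rule and the back-pointer idea from the proof can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the function name `patience_lis` and the variable names are made up here, and binary search over the pile tops is used as one convenient way to find the leftmost pile that fits.

```python
from bisect import bisect_left

def patience_lis(cards):
    """Greedy patience: return (number of piles, one longest increasing subsequence)."""
    tops = []      # value of the top card of each pile; increases left to right
    top_idx = []   # position in `cards` of the top card of each pile
    prev = [None] * len(cards)  # pointer to the top of the previous pile at insertion time
    for i, c in enumerate(cards):
        # Leftmost pile whose top card is >= c (a card never goes on a lower card).
        p = bisect_left(tops, c)
        prev[i] = top_idx[p - 1] if p > 0 else None
        if p == len(tops):
            tops.append(c)       # no pile fits: start a new pile
            top_idx.append(i)
        else:
            tops[p] = c          # c becomes the new top of pile p
            top_idx[p] = i
    # Follow the pointers back from the top card of the rightmost pile.
    lis = []
    i = top_idx[-1] if top_idx else None
    while i is not None:
        lis.append(cards[i])
        i = prev[i]
    lis.reverse()
    return len(tops), lis

print(patience_lis([7, 2, 8, 1, 3, 4, 10, 6, 9, 5]))  # (5, [1, 3, 4, 6, 9])
```

On the example sequence the number of piles equals the length of the recovered subsequence, which is what strong duality predicts.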

Patience is a Virtue: Revisiting Merge and Sort on Modern Processors

Patience is a Virtue: Revisiting Merge and Sort on Modern Processors. Badrish Chandramouli and Jonathan Goldstein, Microsoft Research, {badrishc, jongold}@microsoft.com

ABSTRACT. The vast quantities of log-based data appearing in data centers has generated an interest in sorting almost-sorted datasets. We revisit the problem of sorting and merging data in main memory, and show that a long-forgotten technique called Patience Sort can, with some key modifications, be made competitive with today's best comparison-based sorting techniques for both random and almost sorted data. Patience sort consists of two phases: the creation of sorted runs, and the merging of these runs. Through a combination of algorithmic and architectural innovations, we dramatically improve Patience sort for both random and almost-ordered data. Of particular interest is a new technique called ping-pong merge for merging sorted runs in main memory.

In particular, the vast quantities of almost sorted log-based data appearing in data centers has generated this interest. In these scenarios, data is collected from many servers, and brought together either immediately, or periodically (e.g. every minute), and stored in a log. The log is then typically sorted, sometimes in multiple ways, according to the types of questions being asked. If those questions are temporal in nature [7][17][18], it is required that the log be sorted on time. A widely-used technique for sorting almost sorted data is Timsort [8], which works by finding contiguous runs of increasing or decreasing value in the dataset. Our investigation has resulted in some surprising discoveries about a mostly-ignored 50-year-old sorting technique called Patience
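The two phases named in the abstract (creating sorted runs, then merging them) can be illustrated with a plain textbook Patience sort. The sketch below is an assumption-laden illustration, not the paper's optimized variant: it does not implement ping-pong merge, the function name `patience_sort` is hypothetical, and the merge phase simply uses `heapq.merge` from the Python standard library.

```python
import heapq

def patience_sort(items):
    """Plain Patience sort: build ascending runs, then merge them."""
    runs = []  # each run is an ascending list; run tails strictly decrease left to right
    for x in items:
        # Phase 1: binary search for the leftmost run whose last element is <= x.
        lo, hi = 0, len(runs)
        while lo < hi:
            mid = (lo + hi) // 2
            if runs[mid][-1] <= x:
                hi = mid
            else:
                lo = mid + 1
        if lo == len(runs):
            runs.append([x])    # x is smaller than every tail: start a new run
        else:
            runs[lo].append(x)  # extend the run while keeping it ascending
    # Phase 2: k-way merge of the sorted runs.
    return list(heapq.merge(*runs))

print(patience_sort([7, 2, 8, 1, 3, 4, 10, 6, 9, 5]))
```

On almost sorted input most elements extend the first run, so few runs are created and the merge phase has little work to do.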

Anagnostou Msc2009.Pdf

Christos Anagnostou, Analysis, Processing and Presentation of the Sorting Algorithms Heapsort and WeakHeapsort. Christos Anagnostou, postgraduate student. Master's thesis. Supervisor: Konstantinos Paparrizos, Professor. Department of Applied Informatics, Postgraduate Specialization Programme, University of Macedonia, Thessaloniki, January 2009.

I would like to warmly thank my supervisor, Professor Konstantinos Paparrizos of the Department of Applied Informatics at the University of Macedonia, both for his valuable help during the preparation of this thesis and for his general guidance. I would also like to thank my family and friends, who helped me throughout this effort.

Contents: 1 Abstract. 2 Introduction. 2.1 Subject of the thesis. 2.2 Structure of the thesis.

Comparison Sorts

Comparison sorts

| Name | Stable | Method | Other notes |
|---|---|---|---|
| Quicksort | Typical in-place sort is not stable; stable versions exist | Partitioning | Quicksort is usually done in place with O(log n) stack space. Most implementations are unstable, as stable in-place partitioning is more complex. Naïve variants use an O(n) space array to store the partition. Quicksort variant using three-way (fat) partitioning takes O(n) comparisons when sorting an array of equal keys. |
| Merge sort | Yes | Merging | Highly parallelizable (up to O(log n) using the Three Hungarians' Algorithm or, more practically, Cole's parallel merge sort) for processing large amounts of data. |
| In-place merge sort | Yes | Merging | Can be implemented as a stable sort based on stable in-place merging. |
| Heapsort | No | Selection | |
| Insertion sort | Yes | Insertion | O(n + d), where d is the number of inversions. |
| Introsort | No | Partitioning & Selection | Used in several STL implementations. |
| Selection sort | No | Selection | Stable with O(n) extra space, for example using lists. |
| Timsort | Yes | Insertion & Merging | Makes n comparisons when the data is already sorted or reverse sorted. |
| Cubesort | Yes | Insertion | Makes n comparisons when the data is already sorted or reverse sorted. |
| Shell sort | No | Insertion | Running time depends on gap sequence. Small code size, no use of call stack, reasonably fast, useful where memory is at a premium such as embedded and older mainframe applications. |
| Bubble sort | Yes | Exchanging | Tiny code size. |

On the Heapability of Finite Partial Orders, arXiv:1706.01230v5 [math

Discrete Mathematics and Theoretical Computer Science, DMTCS vol. 22:1, 2020, #17. On the Heapability of Finite Partial Orders. János Balogh (1), Cosmin Bonchiş (2,3), Diana Diniş (2,3), Gabriel Istrate (2,3), Ioan Todinca (4). (1) Institute of Informatics, University of Szeged, Hungary. (2) Department of Computer Science, West University of Timişoara, Timişoara, Romania. (3) e-Austria Research Institute, Timişoara, Romania. (4) LIFO, Université d'Orléans and INSA Centre-Val de Loire, France. Received 16th May 2018, revised 25th Mar. 2020, accepted 12th May 2020.

Define a sequence of elements from a partially ordered set to be heapable if it can be successively inserted as the leaves of a binary tree, not necessarily complete, such that children nodes are always greater than or equal to parent nodes. This is a natural binary analog of the notion of a chain in a poset and an easy extension of a definition for integers due to Byers et al. (2011). A set of elements is called heapable if some permutation of its elements is. We investigate the partitioning of sequences from a poset into a minimal number of heapable subsequences. We give an extension of Fulkerson's proof of Dilworth's theorem to decomposition into heapable subsequences which yields as a byproduct a flow-based algorithm for computing such a minimal decomposition. On the other hand, for sets and sequences of intervals and for trapezoid partial orders we prove that such minimal decompositions can be computed via simple greedy-type algorithms. Second, while the complexity of computing a maximal heapable subsequence of integers is still open, we show that this problem has a polynomial time algorithm for sequences of intervals.

Strategies for Stable Merge Sorting

Strategies for Stable Merge Sorting. Sam Buss, Alexander Knop.

Abstract. We introduce new stable natural merge sort algorithms, called 2-merge sort and α-merge sort. We prove upper and lower bounds for several merge sort algorithms, including Timsort, Shivers' sort, α-stack sorts, and our new 2-merge and α-merge sorts. The upper and lower bounds have the forms c · n log m and c · n log n for inputs of length n comprising m runs. For Timsort, we prove a lower bound of (1.5 − o(1)) n log n. For 2-merge sort, we prove optimal upper and lower bounds of approximately (1.089 ± o(1)) n log m. We state similar asymptotically matching upper and lower bounds for α-merge sort, when φ < α < 2, where φ is the golden ratio. Our bounds are in terms of merge cost; this upper bounds the number of comparisons and accurately models

relative order of pairs of entries in the input list. Information-theoretic considerations imply that any comparison-based sorting algorithm must make at least log2(n!) ≈ n log2 n comparisons in the worst case. However, in many practical applications, the input is frequently already partially sorted. There are many adaptive sort algorithms which will detect this and run faster on inputs which are already partially sorted. Natural merge sorts are adaptive in this sense: they detect sorted sublists (called "runs") in the input, and thereby reduce the cost of merging sublists. One very popular stable natural merge sort is the eponymous Timsort of Tim Peters [25]. Timsort is extensively used, as it is included in Python, in the Java standard library, in GNU Octave, and in the Android operating system.
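To make the notions of runs and merge cost concrete, here is a minimal Python sketch of a naive natural merge sort. It is not Timsort, 2-merge sort, or α-merge sort: it simply merges adjacent runs pairwise, and it assumes that the merge cost of one merge is the total length of the two runs involved. All function names are hypothetical.

```python
def find_runs(a):
    """Split a into maximal non-decreasing runs."""
    runs, start = [], 0
    for i in range(1, len(a) + 1):
        if i == len(a) or a[i] < a[i - 1]:
            runs.append(a[start:i])
            start = i
    return runs

def merge(left, right):
    """Stable merge: on ties the element from the left run comes first."""
    out, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if right[j] < left[i]:
            out.append(right[j])
            j += 1
        else:
            out.append(left[i])
            i += 1
    out.extend(left[i:])
    out.extend(right[j:])
    return out

def natural_merge_sort(a):
    """Return (sorted list, total merge cost) using pairwise merging of adjacent runs."""
    runs = find_runs(list(a))
    cost = 0
    while len(runs) > 1:
        merged = []
        for k in range(0, len(runs) - 1, 2):
            merged.append(merge(runs[k], runs[k + 1]))
            cost += len(runs[k]) + len(runs[k + 1])  # assumed cost model: sum of run lengths
        if len(runs) % 2:
            merged.append(runs[-1])  # odd run out is carried to the next round
        runs = merged
    return (runs[0] if runs else []), cost

print(natural_merge_sort([3, 4, 1, 2]))     # ([1, 2, 3, 4], 4)
print(natural_merge_sort([1, 2, 3, 4, 5]))  # ([1, 2, 3, 4, 5], 0)
```

An already sorted input forms a single run and incurs no merge cost, which is the adaptivity the excerpt describes.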

Definition of Sorting Algorithms (Pengertian Algoritma Pengurutan)

SORTING

Definition of a sorting algorithm
• In computer science, a sorting algorithm is an algorithm that places the elements of a data collection in a particular order. Alternatively, it is the process of arranging data that was previously in random order so that it becomes ordered according to some rule.
• In practice, the "particular order" commonly used is numerical order or lexicographic order (alphabetical order, as in a dictionary).
• There are two kinds of sorting: ascending and descending.

Classification of sorting algorithms
• Exchange sort: compares data items with each other and swaps them when the order found is not yet correct. Examples: Bubble sort, Cocktail sort, Comb sort, Gnome sort, Quicksort.
• Selection sort: finds the right element to place at a known position, and places it there once it has been found. Examples: Selection sort, Heapsort, Smoothsort, Strand sort.
• Insertion sort: finds the right place for a known data element within an already sorted subcollection, then inserts the element at that place. Examples: Insertion sort, Shell sort, Tree sort, Library sort, Patience sorting.
• Merge sort: the data is divided into subcollections, which are sorted separately and then combined again by merging. The algorithm also uses merge sort to sort those subcollections; in other words, the sorting is done recursively. Example: Merge sort.
• Non-comparison sort: the sorting process involves no comparisons between data items; the data is ordered according to the pigeonhole principle. Examples: Radix sort, Bucket sort, Counting sort, Pigeonhole sort, Tally sort.

Impatience Is a Virtue: Revisiting Disorder in High-Performance Log Analytics

Impatience is a Virtue: Revisiting Disorder in High-Performance Log Analytics. Badrish Chandramouli, Jonathan Goldstein, Yinan Li, Microsoft Research. Email: {badrishc, jongold, [email protected]

Abstract. There is a growing interest in processing real-time queries over out-of-order streams in this big data era. This paper presents a comprehensive solution to meet this requirement. Our solution is based on Impatience sort, an online sorting technique that is based on an old technique called Patience sort. Impatience sort is tailored for incrementally sorting streaming datasets that present themselves as almost sorted, usually due to network delays and machine failures. With several optimizations, our solution can adapt to both input streams and query logic. Further, we develop a new Impatience framework that leverages Impatience sort to reduce the latency and memory usage of query execution, and supports a range of user latency requirements, without compromising on query completeness and throughput, while leveraging existing efficient in-order streaming engines and operators. We evaluate our proposed solution in Trill, a high-performance streaming engine, and demonstrate that our techniques significantly improve sorting performance and reduce memory usage, in some cases by over an order of magnitude.

Fig. 1: Buffer-and-sort: Latency vs. completeness tradeoff. (Marked points: (1h, 99%), (1ms, 90%), and the ideal solution.)

A. Challenges. Latency and Completeness. A fundamental pitfall of the buffer-and-sort mechanism is that users are forced to make a tradeoff between completeness and latency by setting reorder latency. Note that events that arrive after the specified reorder latency have to be either discarded or adjusted (on timestamps), thus reducing the accuracy of query results on the input

In Search of the Fastest Sorting Algorithm

In Search of the Fastest Sorting Algorithm. Emmanuel Attard Cassar, [email protected]

Abstract: This paper explores in a chronological way the concepts, structures, and algorithms that programmers and computer scientists have tried out in their attempt to produce and improve the process of sorting. The measure of 'fastness' in this paper is mainly given in terms of the Big O notation. Keywords: sorting, algorithms

Sorting is an extremely useful procedure in our information-laden society. Robert Sedgewick and Kevin Wayne have this to say: 'In the early days of computing, the common wisdom was that up to 30 per cent of all computing cycles was spent sorting. If that fraction is lower today, one likely reason is that sorting algorithms are relatively efficient, not that sorting has diminished in relative importance.' [1] Many computer scientists consider sorting to be the most fundamental problem in the study of algorithms. [2] In the literature, sorting is mentioned as far back as the seventh century BCE where the king of Assyria sorted clay tablets for the royal library according to their shape. [3]

[1] R. Sedgewick, K. Wayne, Algorithms, 4th edn. (USA, 2011), Kindle Edition, Locations 4890-4892.
[2] T.H. Cormen, C.E. Leiserson, R.L. Rivest, C. Stein, Introduction to Algorithms, 3rd edn. (USA, 2009), 148.
[3] N. Akhter, M. Idrees, Furqan-ur-Rehman, 'Sorting Algorithms – A Comparative Study', International Journal of Computer Science and Information Security, Vol. 14, No. 12, (2016), 930.

Symposia Melitensia Number 14 (2018)

Premise. My considerations will be limited to algorithms on the standard von Neumann computer.

Sorting (Sắp xếp)

Chapter 5: Sorting. Heap Sort, Quick Sort.

William A. Martin, Sorting. ACM Computing Surveys, Vol. 3, No. 4, Dec 1971, pp. 147-174. "...The bibliography appearing at the end of this article lists 37 sorting algorithms and 100 books and papers on sorting published in the last 20 years... Suggestions are made for choosing the algorithm best suited to a given situation." D. Knuth: 40% of the running time of computers is spent on sorting! NGUYỄN ĐỨC NGHĨA, Department of Computer Science (Bộ môn KHMT), ĐHBKHN.

CONTENTS
5.1. The sorting problem
5.2. Three basic sorting algorithms
5.3. Merge Sort
5.4. Quick Sort
5.5. Heap Sort
5.6. Lower bound on the computational complexity of the sorting problem
5.7. Special sorting methods

5.1. The sorting problem
• 5.1.1. The sorting problem
• 5.1.2. A brief introduction to sorting algorithms

5.1.1. The sorting problem
• Sorting is the process of reorganizing a collection of data in descending or ascending order.
• The data to be sorted can be integers, character strings, or objects.
• Sort key: the part of a record that determines the position of the record in the ordering of the collection of records.

Longest Increasing Subsequence

LONGEST INCREASING SUBSEQUENCE. Lecture slides by Kevin Wayne. Copyright © 2005 Pearson-Addison Wesley. http://www.cs.princeton.edu/~wayne/kleinberg-tardos Last updated on 4/11/18 8:47 AM

Longest increasing subsequence. Given a sequence of elements c1, c2, …, cn from a totally ordered universe, find the longest increasing subsequence. Ex. 7 2 8 1 3 4 10 6 9 5. Elements must be in order (but not necessarily contiguous). Application. Part of MUMmer system for aligning whole genomes.

Patience solitaire. Rules. Deal cards c1, c2, …, cn into piles according to two rules:
・Can put next card into a new singleton pile.
・Can put next card on a pile if it's smaller than the top card of pile.
Goal. Form as few piles as possible.

Patience-LIS: weak duality. Length of any increasing subsequence ≤ number of piles. Pf.
・Cards within a pile form a decreasing subsequence.
・Any increasing sequence can use at most one card per pile. ▪
Corollary. If length of an increasing subsequence = number of piles, then both are optimal. In the example, length of increasing subsequence = # piles = 5.

Patience: greedy algorithm. Place each card on leftmost pile that fits. Observation. At any stage during greedy algorithm, top cards of piles increase from left to right. The top cards are in increasing order (but not necessarily a subsequence).

Patience-LIS: strong duality. Theorem.