DASISH-D5.2 AB Final__25Nov-R

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Wikipedia, the Free Encyclopedia 03-11-09 12:04

Tea

From Wikipedia, the free encyclopedia

Tea is the agricultural product of the leaves, leaf buds, and internodes of the Camellia sinensis plant, prepared and cured by various methods. "Tea" also refers to the aromatic beverage prepared from the cured leaves by combination with hot or boiling water,[1] and is the common name for the Camellia sinensis plant itself. After water, tea is the most widely-consumed beverage in the world.[2] It has a cooling, slightly bitter, astringent flavour which many enjoy.[3] The four types of tea most commonly found on the market are black tea, oolong tea, green tea and white tea,[4] all of which can be made from the same bushes, processed differently, and in the case of fine white tea grown differently. Pu-erh tea, a post-fermented tea, is also often classified as amongst the most popular types of tea.[5]

The term "herbal tea" usually refers to an infusion or tisane of leaves, flowers, fruit, herbs or other plant material that contains no Camellia sinensis.[6] The term "red tea" either refers to an infusion made from the South African rooibos plant, also containing no Camellia sinensis, or, in Chinese, Korean, Japanese and other East Asian languages, refers to black tea.

Image captions: Green Tea leaves in a Chinese gaiwan. A tea bush. Plantation workers picking tea in Tanzania.

Contents
1 Traditional Chinese Tea Cultivation and Technologies
2 Processing and classification
3 Blending and additives
4 Content
5 Origin and history
5.1 Origin myths
5.2 China
5.3 Japan
5.4 Korea
5.5 Taiwan
5.6 Thailand
5.7 Vietnam
5.8 Tea spreads to the world
5.9 United Kingdom
5.10 United States of America

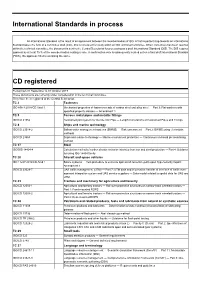

ISO Update Supplement to Isofocus

ISO Update Supplement to ISOfocus October 2019

International Standards in process

An International Standard is the result of an agreement between the member bodies of ISO. A first important step towards an International Standard takes the form of a committee draft (CD) - this is circulated for study within an ISO technical committee. When consensus has been reached within the technical committee, the document is sent to the Central Secretariat for processing as a draft International Standard (DIS). The DIS requires approval by at least 75 % of the member bodies casting a vote. A confirmation vote is subsequently carried out on a final draft International Standard (FDIS), the approval criteria remaining the same.

CD registered

Period from 01 September to 01 October 2019

These documents are currently under consideration in the technical committee.

ISO/CD 12003-2 Agricultural and forestry tractors — Roll-over protective structures on narrow-track wheeled tractors — Part 2: Rear-mounted ROPS

TC 28 Petroleum and related products, fuels and lubricants from natural or synthetic sources
ISO/CD 11009 Petroleum products and lubricants — Determination of water washout characteristics of lubricating greases
ISO/CD 13736 Determination of flash point — Abel closed-cup method
ISO/CD TR 29662 Petroleum products and other liquids — Guidance for flash point testing
ISO/CD 12925-2 Lubricants, industrial oils and related products (class L) — Family C (Gears) — Part 2: Specifications of categories CKH, CKJ and CKM (lubricants open and semi-enclosed gear systems)

TC 29 Small tools
ISO/CD 525 Bonded abrasive products — General requirements
ISO/CD 5743 Pliers and nippers — General technical requirements

Aportes De La Academia Al Desarrollo Local Y Regional

APORTES DE LA ACADEMIA AL DESARROLLO LOCAL Y REGIONAL: Experiencias en América Latina (Contributions of Academia to Local and Regional Development: Experiences in Latin America)

Mario José Mantulak Stachuk (Comp.), Juan Carlos Michalus Jusczysczyn (Comp.), Juan Esteban Miño Valdés (Comp.)

This work is the result of a joint, cooperative effort among universities that work in a committed and sustained way for local and regional development. It brings together the diverse contributions of academia to different productive activities linked to the management of technological resources, flexible cooperation networks, agricultural value chains, knowledge and technology management, partnerships among SMEs (PyMEs), and business networks for innovation.

EDITORIAL UNIVERSITARIA, UNIVERSIDAD NACIONAL DE MISIONES, Coronel José Félix Bogado 2160, Posadas - Misiones - Tel-Fax: (0376) 4428601. E-mail: [email protected]

Edition coordination: Claudio O. Zalazar. Interior layout: Francisco A. Sánchez. Cover: Martín D. Villalba.

Mario José Mantulak Stachuk; Juan Carlos Michalus Jusczysczyn; Juan Esteban Miño Valdés (compilers). Aporte de la Academia al desarrollo local y regional: experiencias en América Latina. - 1st ed. - Posadas: EdUNaM - Editorial Universitaria de la Universidad Nacional de Misiones, 2014. 168 p.; 22x15 cm. ISBN 978-950-579-366-2. 1. Regional Development. -

ISO Standards for Tea

ISO Standards for Tea
Intergovernmental Group on Tea, 10th Session, New Delhi, 12-14 May 2010
Andrew Scott, Chairman ISO TC/34 SC 8 Tea

Tea
Over 3 million tonnes produced
In over 30 countries
Providing over 1000 billion cups of tea

International Standards for Tea
International standards are vital to facilitate international trade
To ensure consumer expectations are met
To provide guidance and common understanding of Good Manufacturing practices via compositional specifications
To provide validated methods of analysis

International Standards for Tea
1970s - Tea Committee became ISO Working Group 8 - ISO Technical Committee 34 Sub-committee 8 – Tea
1977 - ISO 3720 Black tea standard; 8 testing methods to measure basic tea parameters
1980 - ISO 1839 Sampling tea
1980 - ISO 3103 Preparation of liquor for sensory analysis
1982 - Glossary of terms
1990 - Instant tea standard; 4 supporting test methods

…. why measure polyphenols in tea?

ISO BLACK TEA SPECIFICATION - ISO 3720 1977 (First edition)
“INTRODUCTION The content of CAFFEINE and the content of POLYPHENOLIC constituents are important chemical characteristics of black tea, but it has not been possible to include limits for either of them in the specification. In the case of CAFFEINE, agreement has not yet been reached on a standard method for the determination. In the case of POLYPHENOLIC CONSTITUENTS, knowledge about test methods is not sufficiently developed to justify the standardization of any one of the methods in existence; moreover, information on the content of these -

List of Standards Approved for Confirmations Withdrawals June 2018

LIST FOR CONFIRMATIONS AND WITHDRAWALS APPROVED BY THE 119TH STANDARDS APPROVAL COMMITTEE MEETING ON 29TH JUNE 2018

CHEMICAL DEPARTMENT

CONFIRMATIONS
1. KS ISO 16000-18:2011 Kenya Standard — Indoor air — Part 18: Detection and enumeration of moulds — Sampling by impaction
2. KS 1998:2007 Kenya Standard — Marking pens for writing and drawing — Specification
3. KS 2031:2007 Kenya Standard — Radioactive waste — Disposal by the user — Code of practice
4. KS 619:1985 Kenya Standard — Methods of test for printing inks
5. KS EAS 751:2010 Kenya Standard — Air quality — Specification
6. KS EAS 752:2010 Kenya Standard — Air quality — Tolerance limits of emissions discharged to the air by factories
7. KS ISO 12040:1997 Kenya Standard — Graphic technology — Prints and printing inks assessment of light fastness using filtered xenon arc light
8. KS ISO 12636:1998 Kenya Standard — Graphic technology — Blankets for offset printing
9. KS ISO 13655:1996 Kenya Standard — Graphic technology — Spectral measurement and colorimetric computation for graphic arts images
10. KS ISO 16000-17:2008 Kenya Standard — Indoor air — Part 17: Detection and enumeration of moulds — Culture-based method
11. KS ISO 16702:2007 Kenya Standard — Workplace air quality — Determination of total organic isocyanate groups in air using 1-(2-methoxyphenyl) piperazine and liquid chromatography
12. KS ISO 24095:2009 Kenya Standard — Workplace air — Guidance for the measurement of respirable crystalline silica
13. KS ISO 2846-2:2007 Kenya Standard — Graphic technology — Colour and transparency of printing ink sets for four-colour printing — Part 2: Coldset offset lithographic printing
14. KS ISO 2846-3:2002 Kenya Standard — Graphic technology — Colour and transparency of ink sets for four-colour-printing — Part 3: Publication gravure printing

GOOD MANUFACTURING PRACTICE TEA PRIMARY PROCESSING (Part B - Suppliers Use)

Part B Suppliers Use
Unilever Bestfoods Beverages
GOOD MANUFACTURING PRACTICE TEA PRIMARY PROCESSING (Part B - Suppliers Use)
April 2003

INDEX
Introduction
Product & Process Design
Raw Materials
Packing Materials
Processing
Finished Products
Cleaning & Disinfection
Factory Design & Layout
Personnel & Management Systems
Environmental

ISO Update February 2019

ISO Update Supplement to ISOfocus February 2019

International Standards in process

An International Standard is the result of an agreement between the member bodies of ISO. A first important step towards an International Standard takes the form of a committee draft (CD) - this is circulated for study within an ISO technical committee. When consensus has been reached within the technical committee, the document is sent to the Central Secretariat for processing as a draft International Standard (DIS). The DIS requires approval by at least 75 % of the member bodies casting a vote. A confirmation vote is subsequently carried out on a final draft International Standard (FDIS), the approval criteria remaining the same.

CD registered

Period from 01 January to 31 January 2019

These documents are currently under consideration in the technical committee. They have been registered at the Central Secretariat.

TC 27 Solid mineral fuels
ISO/CD 1928 Solid mineral fuels — Determination of gross calorific value
ISO/CD 20360 Brown coals and lignites — Determination of the volatile matter in the analysis sample: one furnace method

TC 28 Petroleum and related products, fuels and lubricants from natural or synthetic sources
ISO/CD 23572 Petroleum products — Lubricating greases — Sampling of greases

TC 33 Refractories
ISO/CD 22605 Refractories — Determination of Young’s modulus (MOE) at elevated temperatures by impulse excitation of vibration

TC 34 Food products
ISO/CD 21983 Guidelines for harvesting, transportation, separation the stigma, drying and storage of saffron before packing
ISO/DTS 22002-5 Prerequisite programmes on food safety — Part 5: Transport and storage

TC 35 Paints and varnishes
ISO/CD 4625-1 Binders for paints and varnishes — Determina-

Did You Know

Volume 3, No. 10, November - December 2012, ISSN 2226-1095

Did you know...
• Petrobras CEO on the strategic importance of International Standards
• 35th ISO General Assembly

Contents
Comment: Did you know… ?
World Scene: International events and international standardization
Guest Interview: Maria das Graças Silva Foster, Petrobras CEO
Special Report: From spaghetti to symphonies
Pasta-stic ! How to cook the perfect pasta
Drink up ! How to make the perfect cuppa, and other thirst-quenching drinks

ISO Focus+ is published 10 times a year (single issues : July-August, November-December). It is available in English and French. www.iso.org/isofocus+ ISO Update : www.iso.org/isoupdate The electronic edition (PDF file) of ISO Focus+ is accessible free of charge on the ISO Website www.iso.org/isofocus+ An annual subscription to the paper edition costs 38 Swiss francs.

Comment
Did you know… ?
… that ISO has standards for pasta, lawn mowers and even toothbrushes ? Most people recognize the “ ISO brand ” due to the success of ISO 9001 or even ISO 14001, and would be surprised to discover that’s not all we do. But since its founding 65 years ago, ISO’s goal has been to put together international experience and wisdom to develop solutions based on state-of-the-art technology and good practice. The standardized dimensions of freight containers, for example, make trade and transport cheaper and faster. Standardized format and security protocols [...] and beyond, we must stop and ask : Are we doing enough ? How can we improve standards knowledge even further ? ISO Focus+ highlights important issues, -

International Standards in Process CD Registered

International Standards in process

An International Standard is the result of an agreement between the member bodies of ISO. A first important step towards an International Standard takes the form of a committee draft (CD) - this is circulated for study within an ISO technical committee. When consensus has been reached within the technical committee, the document is sent to the Central Secretariat for processing as a draft International Standard (DIS). The DIS requires approval by at least 75 % of the member bodies casting a vote. A confirmation vote is subsequently carried out on a final draft International Standard (FDIS), the approval criteria remaining the same.

CD registered

Period from 01 September to 01 October 2019

These documents are currently under consideration in the technical committee. They have been registered at the Central Secretariat.

TC 2 Fasteners
ISO 898-3:2018/CD Amd 1 Mechanical properties of fasteners made of carbon steel and alloy steel — Part 3: Flat washers with specified property classes — Amendment 1

TC 5 Ferrous metal pipes and metallic fittings
ISO/CD 21052 Restrained joint system for Ductile Iron Pipe — Length Calculations of Restrained Pipes and Fittings

TC 8 Ships and marine technology
ISO/CD 23314-2 Ballast water management systems (BWMS) — Risk assessment — Part 2: BWMS using electrolytic methods
ISO/CD 23668 Ships and marine technology — Marine environment protection — Continuous on-board pH monitoring method

TC 17 Steel
ISO/DIS 14404-4 Calculation method of carbon dioxide emission intensity from iron -

Té Negro. Definiciones Y Requerimientos Básicos

Quito - Ecuador
NORMA TÉCNICA ECUATORIANA (Ecuadorian Technical Standard) NTE INEN-ISO 3720:2013
Reference number ISO 3720:2011 (E)
BLACK TEA. DEFINITION AND BASIC REQUIREMENTS (IDT)
First Edition
EXTRACT
DESCRIPTORS: Food technology, tea, definitions, basic requirements.
ICS: 67.140.10

Foreword

ISO (the International Organization for Standardization) is a worldwide federation of national standards bodies (ISO member bodies). The work of preparing International Standards is normally carried out through ISO technical committees. Each member body interested in a subject for which a technical committee has been established has the right to be represented on that committee. International organizations, governmental and non-governmental, in liaison with ISO, also take part in the work. ISO collaborates closely with the International Electrotechnical Commission (IEC) on all matters of electrotechnical standardization.

International Standards are drafted in accordance with the rules given in Part 2 of the ISO/IEC Directives.

The main task of technical committees is to prepare International Standards. Draft International Standards adopted by the technical committees are circulated to the member bodies for voting. Publication as an International Standard requires approval by at least 75% of the member bodies casting a vote.

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights. ISO does not assume responsibility for identifying any or all such patent rights.

ISO 3720 was prepared by Technical Committee ISO/TC 34, Food products, Subcommittee SC 8, Tea. This fourth edition cancels and replaces the third edition (ISO 3720:1986), which has been technically revised. -

Isoupdate January 2020

ISO Update Supplement to ISOfocus January 2020

International Standards in process

An International Standard is the result of an agreement between the member bodies of ISO. A first important step towards an International Standard takes the form of a committee draft (CD) - this is circulated for study within an ISO technical committee. When consensus has been reached within the technical committee, the document is sent to the Central Secretariat for processing as a draft International Standard (DIS). The DIS requires approval by at least 75 % of the member bodies casting a vote. A confirmation vote is subsequently carried out on a final draft International Standard (FDIS), the approval criteria remaining the same.

CD registered

Period from 01 December 2019 to 01 January 2020

These documents are currently under consideration in the technical committee.

ISO/CD 15886-2 Irrigation equipment — Sprinklers — Part 2: Requirements for design (structure) and testing

TC 34 Food products
ISO 10272-1:2017/CD Amd 1 Microbiology of the food chain — Horizontal method for detection and enumeration of Campylobacter spp. — Part 1: Detection method — Amendment 1
ISO 10272-2:2017/CD Amd 1 Microbiology of the food chain — Horizontal method for detection and enumeration of Campylobacter spp. — Part 2: Colony-count technique — Amendment 1
ISO/CD 24363 Determination of fatty acid methyl esters and squalene by gas chromatography
ISO/CD 29822-2 Vegetable fats and oils — Determination of relative amounts of 1,2- and 1,3-diacylglycerols — Part 2: Isolation by solid phase extraction (SPE)
ISO/CD TS 20224-2.2 Molecular biomarker analysis — Detection of animal derived materials in foodstuffs and feedstuffs by real-time PCR — Part 2: Ovine DNA Detection Method
ISO/CD TS 20224-3.2 Molecular biomarker analysis — Detection of animal derived materials in foodstuffs and feedstuffs by real-time PCR — Part 3: Porcine -

UNIVERSITY of VAASA FACULTY of TECHNOLOGY INDUSTRIAL MANAGEMENT Camilla Granholm IMPLEMENTING ISO/FSSC 22000 Food Safety Managem

UNIVERSITY OF VAASA
FACULTY OF TECHNOLOGY
INDUSTRIAL MANAGEMENT

Camilla Granholm
IMPLEMENTING ISO/FSSC 22000 Food Safety Management System in a SME
Master’s Thesis in Industrial management
VAASA 2017

TABLE OF CONTENTS
ABBREVIATIONS
ABSTRACT
LIST OF FIGURES
LIST OF TABLES
1 INTRODUCTION
1.1 Background
1.2 Purpose
1.3 Delimitation
1.4 The company
1.5 Disposition
2 THEORY
2.1 Quality management
2.1.1 Commitment to quality
2.1.2 Internal assessment, audits and reviews
2.1.3 Benchmarking
2.2 Quality management system
2.2.1 FSSC 22000
2.2.2 ISO 22000
2.2.3 Continuous improvement
2.2.4 Road to certification
2.2.5 Implementing an ISO standard in a SME
2.2.6 Implementing ISO 9001 and 14001 in retrospect of ISO/FSSC 22000
2.2.7 Domestic and EU regulations
2.3 Document management
3 RESEARCH METHODOLOGY
3.1 Literature review
3.1.1 Acknowledging the requirements to qualify for the certificate
3.1.2 Acknowledging the benefits and challenges
3.2 Case study – Arctic Birch
3.3 Benchmarking
3.3.1 Focus areas
3.3.2 Benchmarking companies
4 RESULTS
4.1 Benchmarking results
4.2 ISO/FSSC 22000 implementation process
4.3 The documentation for FSMS – Quality handbook
4.4 Benefits and challenges for the company implementing a QMS
4.5 Discussion
5 CONCLUSIONS
5.1 Use of method
5.2 Benefits for the company
5.3 Further research
5.4 Final thoughts
5.5 Thanks
6 LIST OF REFERENCES
7 APPENDICES

ABBREVIATIONS
CB: Certification body
FSMS: Food safety management system
FSSC 22000: Food safety system certification based on GFSI and ISO standards
FST: Food safety team
GFSI: Global food safety initiative
GVA: Gross Value Add - productivity metric that measures the contribution to an economy, producer, sector or region.