Numerical Methods for Time Dependent Phenomena William Layton

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

MA-302 Advanced Calculus 8

Prof. D. P. Patil, Department of Mathematics, Indian Institute of Science, Bangalore. Aug-Dec 2002. MA-302 Advanced Calculus. 8. Inverse function theorem. Carl Gustav Jacob Jacobi (1804-1851). Exercises 8.1 and 8.2 are for practice only; their solutions need not be submitted. 8.1. Determine at which points $a$ of the domain of definition $G$ the following maps $F$ have a differentiable inverse, and give the biggest possible open subset on which $F$ defines a diffeomorphism. a) $F : \mathbb{R}^2 \to \mathbb{R}^2$ with $F(x,y) := (x^2 - y^2,\ 2xy)$. (In the complex case this is the function $z \mapsto z^2$.) b) $F : \mathbb{R}^2 \to \mathbb{R}^2$ with $F(x,y) := (\sin x \cosh y,\ \cos x \sinh y)$. (In the complex case this is the function $z \mapsto \sin z$.) c) $F : \mathbb{R}^2 \to \mathbb{R}^2$ with $F(x,y) := (x + y,\ x^2 + y^2)$. d) $F : \mathbb{R}^3 \to \mathbb{R}^3$ with $F(r,\varphi,h) := (r\cos\varphi,\ r\sin\varphi,\ h)$ (cylindrical coordinates). e) $F : (\mathbb{R}_+^{\times})^2 \to \mathbb{R}^2$ with $F(x,y) := (x^3/y,\ y^3/x)$. f) $F : \mathbb{R}^2 \to \mathbb{R}^2$ with $F(x,y) := (x^2 + y^2,\ e^{xy})$. 8.2. Show that the following maps $F$ are locally invertible at the given point $a$, and find the Taylor expansion of the inverse map at the point $F(a)$ up to order 2. a) $F : \mathbb{R}^3 \to \mathbb{R}^3$ with $F(x,y,z) := (-(x+y+z),\ xy+xz+yz,\ -xyz)$ at $a = (0, 1, 2)$ resp. $a = (-1, 2, 1)$. (Hint: The problem consists in approximating the three zeros of the monic polynomial of degree 3 whose coefficients are close to those of $X(X-1)(X-2)$ resp. -
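
As an illustration of how the inverse function theorem applies to exercise 8.1 a), the following is a short worked sketch, not part of the original exercise sheet. The choice of the half-plane $x > 0$ is one possible maximal domain, picked here as an assumption; other half-planes work equally well.

```latex
% Worked sketch for 8.1 a): F(x,y) = (x^2 - y^2, 2xy).
\[
DF(x,y) =
\begin{pmatrix}
2x & -2y \\
2y & 2x
\end{pmatrix},
\qquad
\det DF(x,y) = 4x^2 + 4y^2 = 4\,(x^2 + y^2).
\]
% The determinant vanishes only at the origin, so by the inverse function
% theorem F is locally invertible with differentiable inverse at every
% a \neq (0,0).
%
% F is not injective on R^2 \setminus \{(0,0)\}, because F(-x,-y) = F(x,y).
% Restricted to the open half-plane \{ x > 0 \} it is injective, and it maps
% this half-plane diffeomorphically onto
% R^2 \setminus \{ (u,0) : u \le 0 \}   (the map z \mapsto z^2 on \Re z > 0).
```

The open set cannot be enlarged beyond such a half-plane without containing a pair of points $(x,y)$ and $(-x,-y)$, which have the same image.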

Carl Gustav Jacob Jacobi

CARL GUSTAV JACOB JACOBI. Along with the Norwegian Niels Abel, German mathematician Carl Gustav Jacob Jacobi (December 10, 1804 – February 18, 1851) was one of the founders of the theory of elliptic functions, which he described in his 1829 work Fundamenta Nova Theoriae Functionum Ellipticarum (New Foundations of the Theory of Elliptic Functions). His achievements in this area drew praise from Adrien-Marie Legendre, who had spent some 40 years studying elliptic integrals. Jacobi also investigated number theory, mathematical analysis, geometry and differential equations. His work on determinants and on Hamilton-Jacobi theory, a technique for solving a system of partial differential equations by transforming coordinates, is important in the presentation of dynamics and quantum mechanics. V.I. Arnold wrote of the Hamilton-Jacobi method, “… this is the most powerful method known for exact integration.” Born in Potsdam, Jacobi was the son of a prosperous Jewish banker. His older brother Moritz Hermann Jacobi was a well-known physicist and engineer who worked as a leading researcher at the Academy of Sciences in St. Petersburg. During their lifetimes Moritz was the better known of the two, for his work on the practical applications of electricity and especially for his discovery in 1838 of galvanoplastics. Also called electrotyping, it is a process something like electroplating for making duplicate plates of relief, or letterpress, printing. Carl was constantly mistaken for his brother or, even worse, congratulated for having such a distinguished and accomplished brother. To one such compliment he responded with annoyance, “I am not his brother, he is mine.” Carl Jacobi demonstrated great talent for both languages and mathematics from an early age. -

Mathematical Genealogy of the Union College Department of Mathematics

Gemma (Jemme Reinerszoon) Frisius Mathematical Genealogy of the Union College Department of Mathematics Université Catholique de Louvain 1529, 1536 The Mathematics Genealogy Project is a service of North Dakota State University and the American Mathematical Society. Johannes (Jan van Ostaeyen) Stadius http://www.genealogy.math.ndsu.nodak.edu/ Université Paris IX - Dauphine / Université Catholique de Louvain Justus (Joost Lips) Lipsius Martinus Antonius del Rio Adam Haslmayr Université Catholique de Louvain 1569 Collège de France / Université Catholique de Louvain / Universidad de Salamanca 1572, 1574 Erycius (Henrick van den Putte) Puteanus Jean Baptiste Van Helmont Jacobus Stupaeus Primary Advisor Secondary Advisor Universität zu Köln / Université Catholique de Louvain 1595 Université Catholique de Louvain Erhard Weigel Arnold Geulincx Franciscus de le Boë Sylvius Universität Leipzig 1650 Université Catholique de Louvain / Universiteit Leiden 1646, 1658 Universität Basel 1637 Union College Faculty in Mathematics Otto Mencke Gottfried Wilhelm Leibniz Ehrenfried Walter von Tschirnhaus Key Universität Leipzig 1665, 1666 Universität Altdorf 1666 Universiteit Leiden 1669, 1674 Johann Christoph Wichmannshausen Jacob Bernoulli Christian M. von Wolff Universität Leipzig 1685 Universität Basel 1684 Universität Leipzig 1704 Christian August Hausen Johann Bernoulli Martin Knutzen Marcus Herz Martin-Luther-Universität Halle-Wittenberg 1713 Universität Basel 1694 Leonhard Euler Abraham Gotthelf Kästner Franz Josef Ritter von Gerstner Immanuel Kant -

Mathematical Genealogy of the Wellesley College Department Of

Nilos Kabasilas Mathematical Genealogy of the Wellesley College Department of Mathematics Elissaeus Judaeus Demetrios Kydones The Mathematics Genealogy Project is a service of North Dakota State University and the American Mathematical Society. http://www.genealogy.math.ndsu.nodak.edu/ Georgios Plethon Gemistos Manuel Chrysoloras 1380, 1393 Basilios Bessarion 1436 Mystras Johannes Argyropoulos Guarino da Verona 1444 Università di Padova 1408 Cristoforo Landino Marsilio Ficino Vittorino da Feltre 1462 Università di Firenze 1416 Università di Padova Angelo Poliziano Theodoros Gazes Ognibene (Omnibonus Leonicenus) Bonisoli da Lonigo 1477 Università di Firenze 1433 Constantinople / Università di Mantova Università di Mantova Leo Outers Moses Perez Scipione Fortiguerra Demetrios Chalcocondyles Jacob ben Jehiel Loans Thomas à Kempis Rudolf Agricola Alessandro Sermoneta Gaetano da Thiene Heinrich von Langenstein 1485 Université Catholique de Louvain 1493 Università di Firenze 1452 Mystras / Accademia Romana 1478 Università degli Studi di Ferrara 1363, 1375 Université de Paris Maarten (Martinus Dorpius) van Dorp Girolamo (Hieronymus Aleander) Aleandro François Dubois Jean Tagault Janus Lascaris Matthaeus Adrianus Pelope Johann (Johannes Kapnion) Reuchlin Jan Standonck Alexander Hegius Pietro Roccabonella Nicoletto Vernia Johannes von Gmunden 1504, 1515 Université Catholique de Louvain 1499, 1508 Università di Padova 1516 Université de Paris 1472 Università di Padova 1477, 1481 Universität Basel / Université de Poitiers 1474, 1490 Collège Sainte-Barbe -

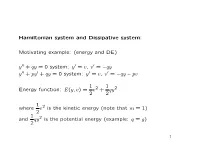

Hamiltonian System and Dissipative System

Hamiltonian system and Dissipative system. Motivating example (energy and DE): the equation $y'' + qy = 0$ is equivalent to the system $y' = v$, $v' = -qy$; the equation $y'' + py' + qy = 0$ is equivalent to the system $y' = v$, $v' = -qy - pv$. Energy function: $E(y, v) = \frac{1}{2}v^2 + \frac{1}{2}qy^2$, where $\frac{1}{2}v^2$ is the kinetic energy (note that $m = 1$) and $\frac{1}{2}qy^2$ is the potential energy (example: $q = g$). For $y'' + qy = 0$: $\frac{d}{dt}E(y(t), v(t)) = \frac{d}{dt}\left[\frac{1}{2}v^2(t) + \frac{1}{2}qy^2(t)\right] = v(t)v'(t) + qy(t)y'(t) = v(t)(-qy(t)) + qy(t) \cdot v(t) = 0$ (energy is conserved). For $y'' + py' + qy = 0$: $\frac{d}{dt}E(y(t), v(t)) = \frac{d}{dt}\left[\frac{1}{2}v^2(t) + \frac{1}{2}qy^2(t)\right] = v(t)v'(t) + qy(t)y'(t) = v(t)(-qy(t) - pv(t)) + qy(t) \cdot v(t) = -p[v(t)]^2 \le 0$ (energy is dissipated). Definition: consider $\frac{dx}{dt} = f(x, y)$, $\frac{dy}{dt} = g(x, y)$. If there is a function $H(x, y)$ such that for each solution orbit $(x(t), y(t))$ we have $\frac{d}{dt}H(x(t), y(t)) = 0$, then the system is a Hamiltonian system, and $H(x, y)$ is called a conserved quantity (or energy function, Hamiltonian). If there is a function $H(x, y)$ such that for each solution orbit $(x(t), y(t))$ we have $\frac{d}{dt}H(x(t), y(t)) \le 0$, then the system is a dissipative system, and $H(x, y)$ is called a Lyapunov function (or energy function). Example: If a satellite is circling around the earth, it is a Hamiltonian system; but if it drops to the earth, it is a dissipative system. -
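
The energy argument in this excerpt can be checked numerically. The sketch below is not from the excerpt: it integrates the first-order system $y' = v$, $v' = -qy - pv$ with a classical fourth-order Runge-Kutta step and records $E(y, v) = \frac{1}{2}v^2 + \frac{1}{2}qy^2$ along the orbit. The function names, the step size, the initial data and the values $q = 4$, $p = 0.5$ are illustrative assumptions.

```python
# Minimal sketch (assumed parameters): energy along numerical solutions of
#   y' = v,  v' = -q*y - p*v
# p = 0 gives the conservative case, p > 0 the dissipative case.

def energy(y, v, q):
    """E(y, v) = v^2/2 + q*y^2/2 (unit mass)."""
    return 0.5 * v * v + 0.5 * q * y * y

def rhs(y, v, p, q):
    """Right-hand side of the first-order system."""
    return v, -q * y - p * v

def rk4_step(y, v, h, p, q):
    """One classical Runge-Kutta (RK4) step of size h."""
    k1y, k1v = rhs(y, v, p, q)
    k2y, k2v = rhs(y + 0.5 * h * k1y, v + 0.5 * h * k1v, p, q)
    k3y, k3v = rhs(y + 0.5 * h * k2y, v + 0.5 * h * k2v, p, q)
    k4y, k4v = rhs(y + h * k3y, v + h * k3v, p, q)
    y_new = y + h / 6.0 * (k1y + 2 * k2y + 2 * k3y + k4y)
    v_new = v + h / 6.0 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return y_new, v_new

def energy_history(p, q, y0=1.0, v0=0.0, h=0.01, steps=1000):
    """Return the list of energies E(y_n, v_n) for n = 0..steps."""
    y, v = y0, v0
    history = [energy(y, v, q)]
    for _ in range(steps):
        y, v = rk4_step(y, v, h, p, q)
        history.append(energy(y, v, q))
    return history

conserved = energy_history(p=0.0, q=4.0)   # y'' + q*y = 0
dissipated = energy_history(p=0.5, q=4.0)  # y'' + p*y' + q*y = 0

# Largest deviation from the initial energy in the undamped case.
drift = max(abs(e - conserved[0]) for e in conserved)
print("undamped: max energy drift =", drift)
print("damped:   E(0) =", dissipated[0], " E(T) =", dissipated[-1])
```

In the undamped run the energy stays constant up to the integrator's own small error, while in the damped run it decreases, matching $\frac{d}{dt}E = -p\,v^2 \le 0$ for $p \ge 0$. RK4 is not an exactly energy-preserving method, so the drift is small rather than zero.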

THE JACOBIAN MATRIX a Thesis Presented to the Department Of

THE JACOBIAN MATRIX. A Thesis Presented to the Department of Mathematics, The Kansas State Teachers College of Emporia, In Partial Fulfillment of the Requirements for the Degree Master of Arts, by Sheryl A. Cole, June 1970. PREFACE. The purpose of this paper is to investigate the Jacobian matrix in greater depth than this topic is dealt with in any individual calculus text. The history of Carl Jacobi, the man who discovered the determinant, is reviewed in chapter one. The several illustrations in chapter two demonstrate the mechanics of finding the Jacobian matrix. In chapter three the magnification of area under a transformation is related to the Jacobian. The amount of magnification under coordinate changes is also discussed in this chapter. In chapter four a definition of smooth surface area is arrived at by application of the Jacobian. It is my pleasure to express appreciation to Dr. John Burger for all of his assistance in preparing this paper. An extra special thanks to Stan for his help and patience. C.G.J. JACOBI (1804-1851) "Man muss immer umkehren" (Man must always invert.) TABLE OF CONTENTS: I. A HISTORY OF JACOBI; II. THE JACOBIAN AND ITS RELATED THEOREMS; III. TRANSFORMATIONS OF AREA; IV. SURFACE AREA; CONCLUSION; FOOTNOTES; BIBLIOGRAPHY. CHAPTER I. A HISTORY OF JACOBI. Carl Gustav Jacob Jacobi was a prominent mathematician noted chiefly for his pioneering work in the field of elliptic functions. Jacobi was born December 10, 1804, in Potsdam, Prussia. He was the second of four children born to a very prosperous banker. -

Assimilation and Profession - the 'Jewish' Mathematician C

Helmut Pulte. Assimilation and Profession - The 'Jewish' Mathematician C. G. J. Jacobi (1804-1851). Biographical Introduction. Jacques Simon Jacobi, nowadays better known as Carl Gustav Jacob Jacobi, lived from 1804 to 1851. He attended the Victoria-Gymnasium in Potsdam, as did later Hermann von Helmholtz. He was an excellent pupil, especially in mathematics and ancient languages. In the report on his final examination, the director of his school certified that Jacobi had extraordinary mental abilities, and he dared to predict that "in any case, he will be a famous man some day." That was in April 1821, the same month Jacobi registered at the University of Berlin. He studied mathematics, but from the very beginning he was bored by the curriculum offered to him; so he also studied classics and philosophy. One of his teachers was the famous philologist August Böckh, who found only one fault with him: "that he comes from Potsdam, because a famous man has never ever come from there." Young Jacobi's hero in philosophy was Hegel, who later was a member of his examining committee. Jacobi took the examination in 1824, and only one year later became a Privatdozent. After another year, at the age of 23, he was appointed assistant professor in Königsberg and then full professor at the age of 28. Jacobi remained eighteen years at the Albertina in Königsberg, "where his tireless activity produced amazing results in both research and academic instruction." His theory of elliptic functions, developed in competition with Niels Henrik Abel, was received by the mathematical community as a first-rate sensation. -

On Lie Algebras of Generalized Jacobi Matrices

BANACH CENTER PUBLICATIONS, VOLUME **, INSTITUTE OF MATHEMATICS, POLISH ACADEMY OF SCIENCES, WARSZAWA 2020. ON LIE ALGEBRAS OF GENERALIZED JACOBI MATRICES. ALICE FIALOWSKI, University of Pécs and Eötvös Loránd University, Budapest, Hungary. KENJI IOHARA, Univ Lyon, Université Claude Bernard Lyon 1, CNRS UMR 5208, Institut Camille Jordan, F-69622 Villeurbanne, France. Abstract. In these lecture notes, we consider infinite dimensional Lie algebras of generalized Jacobi matrices $\mathfrak{g}J(k)$ and $\mathfrak{gl}_\infty(k)$, which are important in soliton theory, and their orthogonal and symplectic subalgebras. In particular, we construct the homology ring of the Lie algebra $\mathfrak{g}J(k)$ and of the orthogonal and symplectic subalgebras. 1. Introduction. In this expository article, we consider a special type of general linear Lie algebras of infinite rank over a field $k$ of characteristic zero, and compute their homology with trivial coefficients. Our interest in this topic came from an old short note (2 pages long) of Boris Feigin and Boris Tsygan (1983, [FT]). They stated some results on the homology with trivial coefficients of the Lie algebra of generalized Jacobi matrices over a field of characteristic 0. It was a real effort to piece out the precise statements in that densely encoded note, not to speak about the few line proofs. In the process of understanding the statements and proofs, we got involved in the topic, found different generalizations and figured out correct approaches. We should also mention that their old results generated much interest during these 36 years. -

CAMBRIDGE LIBRARY COLLECTION Books of Enduring Scholarly Value

Cambridge University Press, 978-1-108-05200-9, Fundamenta Nova Theoriae Functionum Ellipticarum, Carl Gustav Jacob Jacobi. CAMBRIDGE LIBRARY COLLECTION: Books of enduring scholarly value. Mathematics. From its pre-historic roots in simple counting to the algorithms powering modern desktop computers, from the genius of Archimedes to the genius of Einstein, advances in mathematical understanding and numerical techniques have been directly responsible for creating the modern world as we know it. This series will provide a library of the most influential publications and writers on mathematics in its broadest sense. As such, it will show not only the deep roots from which modern science and technology have grown, but also the astonishing breadth of application of mathematical techniques in the humanities and social sciences, and in everyday life. Fundamenta nova theoriae functionum ellipticarum. Carl Gustav Jacob Jacobi (1804–51) was one of the nineteenth century’s greatest mathematicians, as attested by the diversity of mathematical objects named after him. His early work on number theory had already attracted the attention of Carl Friedrich Gauss, but his reputation was made by his work on elliptic functions. Elliptic integrals had been studied for a long time, but in 1827 Jacobi and Niels Henrik Abel realised independently that the correct way to view them was by means of their inverse functions – what we now call the elliptic functions. The next few years witnessed a flowering of the subject as the two mathematicians pushed ahead. Adrien-Marie Legendre, an expert on the old theory, wrote: ‘I congratulate myself that I have lived long enough to witness these magnanimous conflicts between two equally strong young athletes’. -

The Princeton Companion to Mathematics

© Copyright, Princeton University Press. No part of this book may be distributed, posted, or reproduced in any form by digital or mechanical means without prior written permission of the publisher. Contents: Preface; Contributors. Part I Introduction: I.1 What Is Mathematics About?; I.2 The Language and Grammar of Mathematics; I.3 Some Fundamental Mathematical Definitions; I.4 The General Goals of Mathematical Research. Part II The Origins of Modern Mathematics: II.1 From Numbers to Number Systems; II.2 Geometry; II.3 The Development of Abstract Algebra; II.4 Algorithms; II.5 The Development of Rigor in Mathematical Analysis; II.6 The Development of the Idea of Proof; II.7 The Crisis in the Foundations of Mathematics. Part III Mathematical Concepts: III.15 Determinants; III.16 Differential Forms and Integration; III.17 Dimension; III.18 Distributions; III.19 Duality; III.20 Dynamical Systems and Chaos; III.21 Elliptic Curves; III.22 The Euclidean Algorithm and Continued Fractions; III.23 The Euler and Navier–Stokes Equations; III.24 Expanders; III.25 The Exponential and Logarithmic Functions; III.26 The Fast Fourier Transform; III.27 The Fourier Transform; III.28 Fuchsian Groups; III.29 Function Spaces; III.30 Galois Groups; III.31 The Gamma Function; III.32 Generating Functions; III.33 Genus; III.34 Graphs; III.35 Hamiltonians; III.36 The Heat Equation; III.37 Hilbert Spaces; III.38 Homology and Cohomology; III.39 Homotopy Groups; III.40 -

Last Multipliers As Autonomous Solutions of the Liouville Equation of Transport

Houston Journal of Mathematics, © 2008 University of Houston, Volume 34, No. 2, 2008. LAST MULTIPLIERS AS AUTONOMOUS SOLUTIONS OF THE LIOUVILLE EQUATION OF TRANSPORT. MIRCEA CRASMAREANU. Communicated by David Bao. Abstract. Using the characterization of last multipliers as solutions of the Liouville's transport equation, new results are given in this approach of ODEs by providing several new characterizations, e.g. in terms of Witten and Marsden differentials or adjoint vector field. Applications to Hamiltonian vector fields on Poisson manifolds and vector fields on Riemannian manifolds are presented. In the Poisson case, the unimodular bracket considerably simplifies computations while, in the Riemannian framework, a Helmholtz type decomposition yields remarkable examples: One is the quadratic porous medium equation, the second (the autonomous version of the previous) produces harmonic square functions, while the third refers to the gradient of the distance function with respect to a two dimensional rotationally symmetric metric. A final example relates the solutions of Helmholtz (particularly Laplace) equation to provide a last multiplier for a gradient vector field. A connection of our subject with gas dynamics in Riemannian setting is pointed out at the end. Introduction. In January 1838, Joseph Liouville (1809-1882) published a note ([10]) on the time-dependence of the Jacobian of the "transformation" exerted by the solution of an ODE on its initial condition. In modern language, if $A = A(x)$ is the vector field corresponding to the given ODE and $m = m(t; x)$ is a smooth function. 2000 Mathematics Subject Classification. 58A15; 58A30; 34A26; 34C40. Key words and phrases. -

Timeline of Mathematicians 1700 BCE Ahmes

Timeline of Mathematicians 1700 B.C.E. Ahmes (c. 1650 B.C.E.) 700 B.C.E. 600 B.C.E. Thales of Miletus (c. 630-c. 550) Pythagoras of Samos (c. 570-c. 490) 500 B.C.E. Socrates (469-399) 400 B.C.E. Plato (427-347) Eudoxus of Cnidos (c. 400-c. 347) Aristotle (384-322) 300 B.C.E. Euclid (fl. c. 295) Archimedes of Syracuse (287-212) Eratosthenes of Cyrene (c. 276-c. 195) 100 B.C.E. Zeno of Sidon (c. 79 B.C.E.) 100 C.E. Ptolemy (Claudius Ptolemaeus) (c. 100-c. 170) 200 C.E. Diophantus of Alexandria (c. 250?) 300 C.E. Pappus of Alexandria (fl. c. 300-c. 350) Hypatia of Alexandria (c. 370-415) 900 Abu l-Quasim Maslama ibn Ahmad al-Faradi al-Majriti (fl. 980-1000) 1000 `Umar al-Khayyami (Omar Khayyam) (c. 1048-c. 1131) 1100 Leonardo Fibonacci of Pisa (c. 1170-post 1240) 1300 William of Ockham (c. 1285-c. 1349) 1400 Piero della Francesca (c. 1410-1492) Leonardo da Vinci (1452-1519) Scipione del Ferro (1465-1526) Nicolas Copernicus (1473-1543) 1500 Girolamo Cardano (1501-1576) Robert Recorde (1510-1558) Gerardus Mercator (Kremer) (1512-1594) Ludovico Ferrari (1522-1565) 1550 François Viète (Vieta) (1540-1603) Ludolph van Ceulen (1540-1610) John Napier (1550-1617) Francis Bacon (1561-1626) Henry Briggs (1561-1631) Galileo Galilei (1564-1642) 1600 René du Perron Descartes (1596-1650) Pierre de Fermat (1601-1665) John Wallis (1616-1703) Nicolas Mercator (Kaufman) (1620-1687) Blaise Pascal (1623-1662) 1650 Isaac Barrow (1630-1677) James Gregory (1638-1675) Isaac Newton (1642-1727) Gottfried Wilhelm Leibniz (1646-1716) 1675 Jacques Bernoulli (James, Jakob)