The Myth of the Superuser: Fear, Risk, and Harm Online

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Cross-Platform Analysis of Indirect File Leaks in Android and iOS Applications

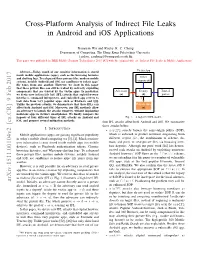

Cross-Platform Analysis of Indirect File Leaks in Android and iOS Applications. Daoyuan Wu and Rocky K. C. Chang, Department of Computing, The Hong Kong Polytechnic University. fcsdwu, [email protected] This paper was published in IEEE Mobile Security Technologies 2015 [47] with the original title of “Indirect File Leaks in Mobile Applications”.

Abstract: Today, much of our sensitive information is stored inside mobile applications (apps), such as the browsing histories and chatting logs. To safeguard these privacy files, modern mobile systems, notably Android and iOS, use sandboxes to isolate apps’ file zones from one another. However, we show in this paper that these private files can still be leaked by indirectly exploiting components that are trusted by the victim apps. In particular, we devise new indirect file leak (IFL) attacks that exploit browser interfaces, command interpreters, and embedded app servers to leak data from very popular apps, such as Evernote and QQ. Unlike the previous attacks, we demonstrate that these IFLs can affect both Android and iOS. Moreover, our IFL methods allow an adversary to launch the attacks remotely, without implanting malicious apps in victim’s smartphones. We finally compare the impacts of four different types of IFL attacks on Android and iOS, and propose several mitigation methods.

[Fig. 1. A high-level IFL model. Labels: Victim App, Other components, Private files, Adversary (a), Deputy (d), Trusted parties.]

I. INTRODUCTION. Mobile applications (apps) are gaining significant popularity in today’s mobile cloud computing era [3], [4]. [...] four IFL attacks affect both Android and iOS. We summarize these attacks below. • sopIFL attacks bypass the same-origin policy (SOP), which is enforced to protect resources originating from

Constantinos Georgiou Athanasopoulos

Constantinos Georgiou Athanasopoulos. Supervisor: Mary Haight. Title: The Metaphysics of Intentionality: A Study of Intentionality focused on Sartre's and Wittgenstein's Philosophy of Mind and Language. Submission for PhD, Department of Philosophy, University of Glasgow, July 1995. ProQuest Number: 13818785. All rights reserved. INFORMATION TO ALL USERS: The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. Published by ProQuest LLC (2018). Copyright of the Dissertation is held by the Author. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code. Microform Edition © ProQuest LLC. ProQuest LLC, 789 East Eisenhower Parkway, P.O. Box 1346, Ann Arbor, MI 48106-1346. Glasgow University Library.

ABSTRACT. With this Thesis an attempt is made at charting the area of the Metaphysics of Intentionality, based mainly on the Philosophy of Jean-Paul Sartre. A Philosophical Analysis and an Evaluation of Sartre’s Arguments are provided, and Sartre’s Theory of Intentionality is supported by recent commentaries on the work of Ludwig Wittgenstein. Sartre’s Theory of Intentionality is proposed, with few improvements by the author, as the only modern theory of the mind that can oppose effectively the advance of AI and physicalist reductivist attempts in Philosophy of Mind and Language. Discussion includes Sartre’s critique of Husserl, the relation of Sartre’s Theory of Intentionality to Realism, its applicability in the Theory of the Emotions, and recent theories of Intentionality such as Mohanty’s, Aquilla’s, Searle’s, and Harney’s.

UPLC™ Universal Power-Line Carrier

UPLC™ Universal Power-Line Carrier CU4I-VER02 Installation Guide. AMETEK Power Instruments, 4050 N.W. 121st Avenue, Coral Springs, FL 33065. 1–800–785–7274. www.pulsartech.com THE BRIGHT STAR IN UTILITY COMMUNICATIONS. March 2006.

Trademarks: All terms mentioned in this book that are known to be trademarks or service marks are listed below. In addition, terms suspected of being trademarks or service marks have been appropriately capitalized. Ametek cannot attest to the accuracy of this information. Use of a term in this book should not be regarded as affecting the validity of any trademark or service mark. This publication includes fonts and/or images from CorelDRAW which are protected by the copyright laws of the U.S., Canada and elsewhere. Used under license. IBM and PC are registered trademarks of the International Business Machines Corporation. ST is a registered trademark of AT&T. Windows is a registered trademark of Microsoft Corp.

ESD WARNING! YOU MUST BE PROPERLY GROUNDED, TO PREVENT DAMAGE FROM STATIC ELECTRICITY, BEFORE HANDLING ANY AND ALL MODULES OR EQUIPMENT FROM AMETEK. All semiconductor components used are sensitive to and can be damaged by the discharge of static electricity. Be sure to observe all Electrostatic Discharge (ESD) precautions when handling modules or individual components.

Important Change Notification: This document supersedes the preliminary version of the UPLC Installation Guide. The following list shows the most recent publication date for the new information. A publication date in bold type indicates changes to that information since the previous publication.

35 Fallacies

THIRTY-TWO COMMON FALLACIES EXPLAINED. L. VAN WARREN.

Introduction. If you watch TV, engage in debate, logic, or politics, you have encountered the fallacies of: Bandwagon – "Everybody is doing it". Ad Hominem – "Attack the person instead of the argument". Celebrity – "The person is famous, it must be true". If you have studied how magicians ply their trade, you may be familiar with: Sleight - The use of dexterity or cunning, esp. to deceive. Feint - Make a deceptive or distracting movement. Misdirection - To direct wrongly. Deception - To cause to believe what is not true; mislead. Fallacious systems of reasoning pervade marketing, advertising and sales. "Get Rich Quick", phone card & real estate scams, pyramid schemes, chain letters, the list goes on. Because fallacies are common, you might want to recognize them. There is no world as vulnerable to fallacy as the religious world. Because there is no direct measure of whether a statement is factual, best practices of reasoning are replaced by "logical drift". Those who are political or religious should be aware of their vulnerability to, and exportation of, fallacy. The film "Rashomon", by the Japanese director Akira Kurosawa, is an excellent study in fallacy.

List of Fallacies

BLACK-AND-WHITE: Classifying a middle point between extremes as one of the extremes. Example: "You are either a conservative or a liberal"

AD BACULUM: Using force to gain acceptance of the argument. Example: "Convert or Perish"

AD HOMINEM: Attacking the person instead of their argument. Example: "John is inferior, he has blue eyes"

AD IGNORANTIAM: Arguing something is true because it hasn't been proven false.

Ironic Feminism: Rhetorical Critique in Satirical News Kathy Elrick Clemson University, [email protected]

Clemson University TigerPrints. All Dissertations, 12-2016. Ironic Feminism: Rhetorical Critique in Satirical News. Kathy Elrick, Clemson University, [email protected] Follow this and additional works at: https://tigerprints.clemson.edu/all_dissertations Recommended Citation: Elrick, Kathy, "Ironic Feminism: Rhetorical Critique in Satirical News" (2016). All Dissertations. 1847. https://tigerprints.clemson.edu/all_dissertations/1847 This Dissertation is brought to you for free and open access by the Dissertations at TigerPrints. It has been accepted for inclusion in All Dissertations by an authorized administrator of TigerPrints. For more information, please contact [email protected].

IRONIC FEMINISM: RHETORICAL CRITIQUE IN SATIRICAL NEWS. A Dissertation Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy, Rhetorics, Communication, and Information Design, by Kathy Elrick, December 2016. Accepted by Dr. David Blakesley, Committee Chair; Dr. Jeff Love; Dr. Brandon Turner; Dr. Victor J. Vitanza.

ABSTRACT. Ironic Feminism: Rhetorical Critique in Satirical News aims to offer another perspective and style toward feminist theories of public discourse through satire. This study develops a model of ironist feminism to approach limitations of hegemonic language for women and minorities in U.S. public discourse. The model is built upon irony as a mode of perspective, and as a function in language, to ferret out and address political norms in dominant language. In comedy and satire, irony subverts dominant language for a laugh; concepts of irony and its relation to comedy situate the study’s focus on rhetorical contributions in joke telling. How are jokes crafted? Who crafts them? What is the motivation behind crafting them? To expand upon these questions, the study analyzes examples of a select group of popular U.S.

SMM Rootkits

SMM Rootkits: A New Breed of OS Independent Malware. Shawn Embleton, Sherri Sparks, and Cliff Zou, University of Central Florida. [email protected] [email protected] [email protected]

ABSTRACT. The emergence of hardware virtualization technology has led to the development of OS independent malware such as the Virtual Machine based rootkits (VMBRs). In this paper, we draw attention to a different but related threat that exists on many commodity systems in operation today: The System Management Mode based rootkit (SMBR). System Management Mode (SMM) is a relatively obscure mode on Intel processors used for low-level hardware control. It has its own private memory space and execution environment which is generally invisible to code running outside (e.g., the Operating System). Furthermore, SMM code is completely non-preemptible, lacks any concept of privilege level, and is immune to memory protection mechanisms.

1. INTRODUCTION. A rootkit consists of a set of programs that work to subvert control of an Operating System from its legitimate users [16]. If one were asked to classify viruses and worms by a single defining characteristic, the first word to come to mind would probably be replication. In contrast, the single defining characteristic of a rootkit is stealth. Viruses reproduce, but rootkits hide. They hide by compromising the communication conduit between an Operating System and its users. Secondary to hiding themselves, rootkits are generally capable of gathering and manipulating information on the target machine. They may, for example, log a victim user’s keystrokes to obtain passwords or manipulate the

Fitting Words

FITTING WORDS Answer Key. James B. Nance, Fitting Words: Classical Rhetoric for the Christian Student: Answer Key. Copyright ©2016 by James B. Nance. Version 1.0.0. Published by Roman Roads Media, 739 S Hayes Street, Moscow, Idaho 83843. 509-592-4548 | www.romanroadsmedia.com Cover design concept by Mark Beauchamp; adapted by Daniel Foucachon and Valerie Anne Bost. Cover illustration by Mark Beauchamp; adapted by George Harrell. Interior illustration by George Harrell. Interior design by Valerie Anne Bost. Printed in the United States of America. Unless otherwise indicated, Scripture quotations are from the New King James Version of the Bible, ©1979, 1980, 1982, 1984, 1988 by Thomas Nelson, Inc., Nashville, Tennessee. Scripture quotations marked NIV are from the Holy Bible, New International Version®, NIV® Copyright © 1973, 1978, 1984, 2011 by Biblica, Inc.® Used by permission. All rights reserved worldwide. Scripture quotations marked ESV are from the English Standard Version copyright ©2001 by Crossway Bibles, a division of Good News Publishers. Used by permission. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form by any means, electronic, mechanical, photocopy, recording, or otherwise, without prior permission of the author, except as provided by USA copyright law. Library of Congress Cataloging-in-Publication Data available on www.romanroadsmedia.com. ISBN-13: 978-1-944482-03-9. ISBN-10: 1-944482-03-2. 16 17 18 19 20 21 22 23 10 9 8 7 6 5 4 3 2 1. FITTING WORDS: Classical Rhetoric for the Christian Student. Answer Key. JAMES B.

Hacks, Leaks and Disruptions | Russian Cyber Strategies

CHAILLOT PAPER Nº 148, October 2018. Hacks, leaks and disruptions: Russian cyber strategies. EDITED BY Nicu Popescu and Stanislav Secrieru. WITH CONTRIBUTIONS FROM Siim Alatalu, Irina Borogan, Elena Chernenko, Sven Herpig, Oscar Jonsson, Xymena Kurowska, Jarno Limnell, Patryk Pawlak, Piret Pernik, Thomas Reinhold, Anatoly Reshetnikov, Andrei Soldatov and Jean-Baptiste Jeangène Vilmer.

Disclaimer: The views expressed in this Chaillot Paper are solely those of the authors and do not necessarily reflect the views of the Institute or of the European Union. European Union Institute for Security Studies, Paris. Director: Gustav Lindstrom. © EU Institute for Security Studies, 2018. Reproduction is authorised, provided prior permission is sought from the Institute and the source is acknowledged, save where otherwise stated.

Contents

Executive summary

Introduction: Russia’s cyber prowess – where, how and what for? (Nicu Popescu and Stanislav Secrieru)

Russia’s cyber posture

1. Russia’s approach to cyber: the best defence is a good offence (Andrei Soldatov and Irina Borogan)

2. Russia’s trolling complex at home and abroad (Xymena Kurowska and Anatoly Reshetnikov)

3. Spotting the bear: credible attribution and Russian operations in cyberspace (Sven Herpig and Thomas Reinhold)

4. Russia’s cyber diplomacy (Elena Chernenko)

Case studies of Russian cyberattacks

5. The early days of cyberattacks: the cases of Estonia,

Nujj University of California, Berkeley School of Information Karen Hsu

Nujj. University of California, Berkeley, School of Information. Karen Hsu, Kesava Mallela, Alana Pechon.

Table of Contents: Abstract; Introduction; Problem Statement; Objective; Literature Review; Competitive Analysis; Comparison matrix; Competition; Hypothesis; Use Case Scenarios; Overall System Design; Server-side; Design; Implementation; Twitter and Nujj; Client-side; Design; Implementation; Future Work; Extended Functionality in Future Implementations; Acknowledgements.

Abstract. Nujj is a location based service for mobile device users that enables users to tie electronic notes to physical locations. It is intended as an initial exploration into some of the many scenarios made possible by the rapidly increasing ubiquity of location-aware mobile devices. It should be noted that this does not limit the user to a device with a GPS embedded; location data can now also be gleaned through methods such as cell tower triangulation and WiFi IP address lookup. Within this report, both social and technical considerations associated with exposing a user’s location are discussed. The system envisioned addresses privacy concerns, as well as attempts to overcome the poor rate of adoption of current location based services already competing in the marketplace. Although several possible use cases are offered, it is the authors’ firm belief that a well-designed location-based service should not attempt to anticipate all, or even most, of the potential ways that users will find to take advantage of it. Rather, the service should be focused on building a system that is sufficiently robust yet flexible to allow users to pursue their own ideas.

11 Gamero Cyber Conflicts

Explorations in Cyberspace and International Relations: Challenges, Investigations, Analysis, Results. Editors: Nazli Choucri, David D. Clark, and Stuart Madnick. This work is funded by the Office of Naval Research under award number N00014-09-1-0597. Any opinions, findings, and conclusions or recommendations expressed in this publication are those of the author(s) and do not necessarily reflect the views of the Office of Naval Research. December 2015.

Chapter 11. Cyber Conflicts in International Relations: Framework and Case Studies. ECIR Working Paper, March 2014. Alexander Gamero-Garrido.

11.0 Overview. Executive Summary. Although cyber conflict is no longer considered particularly unusual, significant uncertainties remain about the nature, scale, scope and other critical features of it. This study addresses a subset of these issues by developing an internally consistent framework and applying it to a series of 17 case studies. We present each case in terms of (a) its socio-political context, (b) technical features, (c) the outcome and inferences drawn in the sources examined. The profile of each case includes the actors, their actions, tools they used and power relationships, and the outcomes with inferences or observations. Our findings include: • Cyberspace has brought in a number of new players – activists, shady government contractors – to international conflict, and traditional actors (notably states) have increasingly recognized the importance of the domain.

The 13th Annual ISNA-CISNA Education Forum Welcomes You!

13th Annual ISNA Education Forum, April 6th-8th, 2011. The 13th Annual ISNA-CISNA Education Forum Welcomes You! The ISNA-CISNA Education Forum, which has fostered professional growth and development and provided support to many Islamic schools, is celebrating its 13-year milestone this April. We have seen accredited schools sprout from grassroots efforts across North America; and we credit Allah, subhanna wa ta‘alla, for empowering the many men and women who have made the dreams for our schools a reality. Today the United States is home to over one thousand weekend Islamic schools and several hundred full-time Islamic schools. Having survived the initial challenge of galvanizing community support to form a school, Islamic schools are now attempting to find the most effective means to build curriculum and programs that will strengthen the Islamic faith and academic excellence of their students. These schools continue to build quality on every level to enable their students to succeed in a competitive and increasingly multicultural and interdependent world. The ISNA Education Forum has striven to be a major platform for this critical endeavor from its inception. The Annual Education Forum has been influential in supporting Islamic schools and Muslim communities to carry out various activities such as developing weekend schools; refining Qur’anic/Arabic/Islamic Studies instruction; attaining accreditation; improving board structures and policies; and implementing training programs for principals, administrators, and teachers. Thus, the significance of the forum lies in uniting our community in working towards a common goal for our youth.

Specific Goals: 1. Provide sessions based on attendees’ needs, determined by surveys.

35. Logic: Common Fallacies Steve Miller Kennesaw State University, [email protected]

Kennesaw State University, DigitalCommons@Kennesaw State University. Sexy Technical Communications, Open Educational Resources, 3-1-2016. 35. Logic: Common Fallacies. Steve Miller, Kennesaw State University, [email protected] Cherie Miller, Kennesaw State University, [email protected] Follow this and additional works at: http://digitalcommons.kennesaw.edu/oertechcomm Part of the Technical and Professional Writing Commons. Recommended Citation: Miller, Steve and Miller, Cherie, "35. Logic: Common Fallacies" (2016). Sexy Technical Communications. 35. http://digitalcommons.kennesaw.edu/oertechcomm/35 This Article is brought to you for free and open access by the Open Educational Resources at DigitalCommons@Kennesaw State University. It has been accepted for inclusion in Sexy Technical Communications by an authorized administrator of DigitalCommons@Kennesaw State University. For more information, please contact [email protected].

Logic: Common Fallacies. Steve and Cherie Miller. Logic and Logical Fallacies. Taken with kind permission from the book Why Brilliant People Believe Nonsense by J. Steve Miller and Cherie K. Miller.

Brilliant People Believe Nonsense [because]... They Fall for Common Fallacies

"The dull mind, once arriving at an inference that flatters the desire, is rarely able to retain the impression that the notion from which the inference started was purely problematic." (George Eliot, in Silas Marner)

In the last chapter we discussed passages where bright individuals with PhDs committed common fallacies. Even the brightest among us fall for them. As a result, we should be ever vigilant to keep our critical guard up, looking for fallacious reasoning in lectures, reading, viewing, and especially in our own writing. None of us are immune to falling for fallacies.