This Is Water and Religious Self-Deception Kevin Timpe in The

Recommended publications

How Do Fish See Water? Building Public Will to Advance Inclusive Communities

How Do Fish See Water? Building Public Will to Advance Inclusive Communities Tiffany Manuel, TheCaseMade “There are these two young fish swimming along, and they happen to meet an older fish swimming the other way, who nods at them and says, ‘Morning, boys. How’s the water?’ And the two young fish swim on for a bit, and then eventually one of them looks over at the other and goes, ‘What the hell is water?’” —David Foster Wallace Cultivating more equitable and inclusive communities is challenging work. In addition to the technical challenges of fostering such communities, there also is the added conundrum of how we build public support for policies and investments that make equitable and inclusive development possible. On the public will-building front, this work is made exponentially tougher because it generally means asking people to problematize an issue—racial and economic segregation—that they do not see as a problem that threatens the values and vitality of the communities in which they live. Unlike climate change, health care, education, or other social “issues” that are well-understood as requiring public intervention, racial and economic segregation operates so ubiquitously that it is often ignored as a “thing” to be solved. It just is. And, when people are asked explicitly to reflect on the high level of concentrated segregation that characterizes their communities and to consider the well-documented negative consequences of us living so separately, many struggle to “see” this as a compelling policy problem with the same shaping force of other issues requiring national attention. Perhaps most importantly, they struggle to see their stake in shaping solutions and supporting policies that cultivate more equitable and inclusive places.

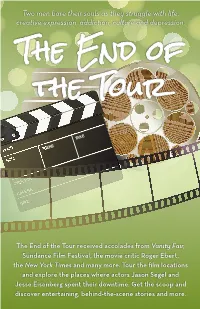

The End of the Tour

Two men bare their souls as they struggle with life, creative expression, addiction, culture and depression. The End of the Tour received accolades from Vanity Fair, Sundance Film Festival, the movie critic Roger Ebert, the New York Times and many more. Tour the film locations and explore the places where actors Jason Segel and Jesse Eisenberg spent their downtime. Get the scoop and discover entertaining, behind-the-scenes stories and more. The End of the Tour follows true events and the relationship between acclaimed author David Foster Wallace and Rolling Stone reporter David Lipsky. Jason Segel plays David Foster Wallace, who committed suicide in 2008, while Jesse Eisenberg plays the Rolling Stone reporter who followed Wallace around the country for five days as he promoted his book, Infinite Jest. right before the bookstore opened up again. All the books on the shelves had to come down and were replaced by books that were best sellers and popular at the time the story line took place. Schuler Books has a fireplace against one wall which was covered up with shelving and books and used as the backdrop for the scene. Schuler Books & Music is one of the nation’s largest independent bookstores. The bookstore boasts a large selection of music, DVDs, gift items, and a gourmet café. PHOTO: EMILY STAVROU-SCHAEFER, SCHULER BOOKS. PHOTO: JANET KASIC. DAVID FOSTER WALLACE’S HOUSE: 5910 72nd Avenue, Hudsonville. Head over to the house that served as the “home” of David Foster Wallace. This home (15 miles from Grand Rapids) is where all house scenes were filmed.

The Lobster Considered

6 The Lobster Considered Robert C. Jones The day may come, when the rest of the animal creation may acquire those rights which never could have been withholden from them but by the hand of tyranny. — Jeremy Bentham Is it not possible that future generations will regard our present agribusiness and eating practices in much the same way we now view Nero’s entertainments or Mengele’s experiments? — David Foster Wallace The arguments to prove man’s superiority cannot shatter this hard fact: in suffering the animals are our equals. — Peter Singer In 1941 M. F. K. Fisher first asked us to consider the oyster,1 not as a moral but as a culinary exploration. Sixty-three years later when David Foster Wallace asked us to consider the lobster2 for ostensibly similar reasons, the investigation quickly abandoned the gustatory and took a turn toward the philosophical and ethical. In that essay, originally published in Gourmet magazine, Wallace challenges us to think deeply about the troubling ethical questions raised by the issue of lobster pain and our moral (mis)treatment of these friendly crustaceans. Since the publication of that essay, research on nonhuman animal sentience has exploded. News reports of the findings of research into animal behavior and cognition are common; 2010 saw the publication of a popular book of the title Do Fish Feel Pain?3 In this essay, I accept Wallace’s challenge and argue not only that according to our best 1 M. F. K. Fisher, Consider the Oyster (New York: Still Point Press, 2001).

David Foster Wallace: Media, Metafiction, and Miscommunication

Bard College Bard Digital Commons Senior Projects Spring 2018 Bard Undergraduate Senior Projects Spring 2018 "Becoming" David Foster Wallace: Media, Metafiction, and Miscommunication Gordon Hugh Willis IV Bard College, [email protected] Follow this and additional works at: https://digitalcommons.bard.edu/senproj_s2018 Part of the American Literature Commons, and the American Popular Culture Commons This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. Recommended Citation Willis, Gordon Hugh IV, ""Becoming" David Foster Wallace: Media, Metafiction, and Miscommunication" (2018). Senior Projects Spring 2018. 133. https://digitalcommons.bard.edu/senproj_s2018/133 This Open Access work is protected by copyright and/or related rights. It has been provided to you by Bard College's Stevenson Library with permission from the rights-holder(s). You are free to use this work in any way that is permitted by the copyright and related rights. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. For more information, please contact [email protected]. “Becoming” David Foster Wallace: Media, Metafiction, and Miscommunication Senior Project submitted to The Division of Languages and Literature of Bard College by Gordon Hugh Willis IV Annandale-on-Hudson, New York May 2018 Dedicated to Nathan Shockey for keeping me on track, something with which I’ve always

Of Postmodernism in David Foster Wallace by Shannon

Reading Beyond Irony: Exploring the Post-secular “End” of Postmodernism in David Foster Wallace By Shannon Marie Minifie A thesis submitted to the Graduate Program in English Language and Literature in conformity with the requirements for the Degree of Doctor of Philosophy Queen's University Kingston, Ontario, Canada August 2019 Copyright © Shannon Marie Minifie, 2019 Dedicated to the memory of Tyler William Minifie (1996-2016) Abstract David Foster Wallace’s self-described attempt to move past the “ends” of postmodernism has made for much scholarly fodder, but the criticism that has resulted focuses on Wallace’s supposed attempts to eschew irony while neglecting what else is at stake in thinking past these “ends.” Looking at various texts across his oeuvre, I think about Wallace’s way “past” postmodern irony through his engagement with what has come to be variously known as the “postsecular.” Putting some of his work in conversation with postsecular thought and criticism, then, I aim to provide a new context for thinking about the nature of Wallace’s relationship to religion as well as to late postmodernism. My work builds on that of critics like John McClure, who have challenged the established secular theoretical frameworks for postmodernism, and argue that such post-secular interventions provide paradigmatic examples of the relationship between postmodernism and post-secularism, where the latter, as an evolution or perhaps a mutation of the former, signals postmodernism’s lateness—or at least its decline as a cultural-historical dominant. I also follow other Wallace critics in noting his spiritual and religious preoccupations, building on some of the great work that has already been done to explore his religious and post-secular leanings.

"One Never Knew": David Foster Wallace and the Aesthetics of Consumption

Bowdoin College Bowdoin Digital Commons Honors Projects Student Scholarship and Creative Work 2016 "One Never Knew": David Foster Wallace and the Aesthetics of Consumption Jesse Ortiz Bowdoin College, [email protected] Follow this and additional works at: https://digitalcommons.bowdoin.edu/honorsprojects Part of the Literature in English, North America Commons Recommended Citation Ortiz, Jesse, ""One Never Knew": David Foster Wallace and the Aesthetics of Consumption" (2016). Honors Projects. 44. https://digitalcommons.bowdoin.edu/honorsprojects/44 This Open Access Thesis is brought to you for free and open access by the Student Scholarship and Creative Work at Bowdoin Digital Commons. It has been accepted for inclusion in Honors Projects by an authorized administrator of Bowdoin Digital Commons. For more information, please contact [email protected]. “One Never Knew”: David Foster Wallace and the Aesthetics of Consumption An Honors Paper for the Department of English By Jesse Ortiz Bowdoin College, 2016 ©2016 Jesse Ortiz Table of Contents: Acknowledgements; 0: Isn’t it Ironic?; 1: Guilty Pleasure: Consumption in the Essays; 2: Who’s There? (0. The Belly of the Beast: Entering Infinite Jest; 1. De-formed: Undoing Aesthetic Pleasure; 2. Avril is the Cruellest Moms; 3. “Epiphanyish”: Against the Aesthetics of the Buzz); ∞: “I Do Have a Thesis”; Works Cited. Acknowledgements This project, of course, could not exist without the guidance of Professor Marilyn Reizbaum, who gave me no higher compliment than when she claimed I have a “modernist mind.” Thank you. I’d also like to thank my other readers, Morten Hansen, Brock Clarke and Hilary Thompson, for their insightful feedback.

The Work of David Foster Wallace and Post-Postmodernism Charles Reginald Nixon Submitted in Accordance with the Requirements

The work of David Foster Wallace and post-postmodernism Charles Reginald Nixon Submitted in accordance with the requirements for the degree of Doctor of Philosophy The University of Leeds School of English September 2013 The candidate confirms that the work submitted is his own and that appropriate credit has been given where reference has been made to the work of others. This copy has been supplied on the understanding that it is copyright material and that no quotation from the thesis may be published without proper acknowledgement. © 2013 The University of Leeds and Charles Reginald Nixon The right of Charles Reginald Nixon to be identified as Author of this work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988. Acknowledgements (With apologies to anyone I have failed to name): Many thanks to Hamilton Carroll for guiding this thesis from its earliest stages. Anything good here has been encouraged into existence by him, anything bad is the result of my stubborn refusal to listen to his advice. Thanks, too, to Andrew Warnes for additional guidance and help along the way, and to the many friends and colleagues at the University of Leeds and beyond who have provided assistance, advice and encouragement. Stephen Burn, in particular, and the large and growing number of fellow Wallace scholars I have met around the world have contributed much to this work's intellectual value; our conversations have been amongst my most treasured, from a scholarly perspective and just because they have been so enjoyable.

Defending Literary Culture in the Fiction of David Foster

NOVEL AFFIRMATIONS: DEFENDING LITERARY CULTURE IN THE FICTION OF DAVID FOSTER WALLACE, JONATHAN FRANZEN, AND RICHARD POWERS A Dissertation by MICHAEL LITTLE Submitted to the Office of Graduate Studies of Texas A&M University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2004 Major Subject: English NOVEL AFFIRMATIONS: DEFENDING LITERARY CULTURE IN THE FICTION OF DAVID FOSTER WALLACE, JONATHAN FRANZEN, AND RICHARD POWERS A Dissertation by MICHAEL LITTLE Submitted to Texas A&M University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY Approved as to style and content by: David McWhirter (Chair of Committee), Mary Ann O’Farrell (Member), Sally Robinson (Member), Stephen Daniel (Member), Paul Parrish (Head of Department) May 2004 Major Subject: English ABSTRACT Novel Affirmations: Defending Literary Culture in the Fiction of David Foster Wallace, Jonathan Franzen, and Richard Powers. (May 2004) Michael Little, B.A., University of Houston; M.A., University of Houston Chair of Advisory Committee: Dr. David McWhirter This dissertation studies the fictional and non-fictional responses of David Foster Wallace, Jonathan Franzen, and Richard Powers to their felt anxieties about the vitality of literature in contemporary culture. The intangible nature of literature’s social value marks the literary as an uneasy, contested, and defensive cultural site. At the same time, the significance of any given cultural artifact or medium, such as television, film, radio, or fiction, is in a continual state of flux.

The Shaping of Storied Selves in David Foster Wallace's the Pale King

Critique, 55:508–521, 2014 Copyright © Taylor & Francis Group, LLC ISSN: 0011-1619 print/1939-9138 online DOI: 10.1080/00111619.2013.809329 SHANNON ELDERON University of Cincinnati The Shaping of Storied Selves in David Foster Wallace’s The Pale King Postmortem retrospectives of David Foster Wallace have too often painted him in broad strokes as either a postmodern trickster, enthralled with literary gamesmanship and irony, or, perhaps more commonly, as an apostle of the earnest and the straightforward. This essay attempts to arrive at a more nuanced understanding of Wallace’s relationship to sincerity and irony through a reading of his final work, The Pale King, alongside key statements from interviews and published essays. The most well-developed sections of The Pale King portray characters for whom a commitment to sincerity can be just as much a danger as a commitment to irony. For these characters, moving toward adulthood means leaving childish fixations on sincerity behind and calling upon new parts of themselves that may be accessible only through performance, pretense, and artifice. Keywords: David Foster Wallace, The Pale King, sincerity, performance, narrative The relationship between sincerity and performance in David Foster Wallace’s fiction is a vexed one, and it has been much commented on. In the popular imagination, he is often misread in one of two (diametrically opposed) ways. The first is as a supreme ironist, a writer who even in his unfinished final novel, The Pale King, was enthralled with postmodern games in a way that a reader looking for something more straightforward

New Sincerity in American Literature

University of Rhode Island DigitalCommons@URI Open Access Dissertations 2018 THE NEW SINCERITY IN AMERICAN LITERATURE Matthew J. Balliro University of Rhode Island, [email protected] Follow this and additional works at: https://digitalcommons.uri.edu/oa_diss Recommended Citation Balliro, Matthew J., "THE NEW SINCERITY IN AMERICAN LITERATURE" (2018). Open Access Dissertations. Paper 771. https://digitalcommons.uri.edu/oa_diss/771 This Dissertation is brought to you for free and open access by DigitalCommons@URI. It has been accepted for inclusion in Open Access Dissertations by an authorized administrator of DigitalCommons@URI. For more information, please contact [email protected]. THE NEW SINCERITY IN AMERICAN LITERATURE BY MATTHEW J. BALLIRO A DISSERTATION SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY IN ENGLISH UNIVERSITY OF RHODE ISLAND 2018 DOCTOR OF PHILOSOPHY DISSERTATION OF Matthew J. Balliro APPROVED: Dissertation Committee: Major Professor Naomi Mandel Ryan Trimm Robert Widdell Nasser H. Zawia DEAN OF THE GRADUATE SCHOOL UNIVERSITY OF RHODE ISLAND 2018 Abstract The New Sincerity is a provocative mode of literary interpretation that focuses intensely on coherent connections that texts can build with readers who are primed to seek out narratives and literary works that rest on clear and stable relationships between dialectics of interior/exterior, self/others, and meaning/expression. Studies on The New Sincerity so far have focused on how it should be situated against dominant literary movements such as postmodernism.
My dissertation aims for a more positive definition, unfolding the most essential details of The New Sincerity in three parts: by exploring the intellectual history of the term “sincerity” and related ideas (such as authenticity); by establishing the historical context that created the conditions that led to The New Sincerity’s genesis in the 1990s; and by tracing the different forms reading with The New Sincerity can take by analyzing a diverse body of literary texts.

"Goo-Prone and Generally Pathetic": Empathy and Irony in David Foster Wallace's Infinite Jest

Georgia Southern University Digital Commons@Georgia Southern Electronic Theses and Dissertations Graduate Studies, Jack N. Averitt College of Spring 2017 "Goo-prone and generally pathetic": Empathy and Irony in David Foster Wallace's Infinite Jest Benjamin L. Peyton Follow this and additional works at: https://digitalcommons.georgiasouthern.edu/etd Part of the American Literature Commons, and the Literature in English, North America Commons Recommended Citation Peyton, Benjamin L., ""Goo-prone and generally pathetic": Empathy and Irony in David Foster Wallace's Infinite Jest" (2017). Electronic Theses and Dissertations. 1584. https://digitalcommons.georgiasouthern.edu/etd/1584 This thesis (open access) is brought to you for free and open access by the Graduate Studies, Jack N. Averitt College of at Digital Commons@Georgia Southern. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons@Georgia Southern. For more information, please contact [email protected]. “GOO-PRONE AND GENERALLY PATHETIC”: EMPATHY AND IRONY IN DAVID FOSTER WALLACE’S INFINITE JEST by BENJAMIN PEYTON (Under the Direction of Joe Pellegrino) ABSTRACT Critical considerations of David Foster Wallace’s work have tended, on the whole, to use the framework that the author himself established in his essay “E Unibus Pluram” and in his interview with Larry McCaffery. Following his own lead, the critical consensus is that Wallace succeeds in overcoming the limits of postmodern irony. If we examine the formal trappings of his writing, however, we find that the critical assertion that Wallace manages to transcend the paralytic irony of his postmodern predecessors is made in the face of his frequent employment of postmodern techniques and devices.

David Foster Wallace, “This Is Water” Commencement Speech at Kenyon College 1

David Foster Wallace, “This is Water.” Commencement Speech Delivered at Kenyon College to the Class of 2005. In his commencement speech to the Kenyon College graduating class of 2005, David Foster Wallace asks the graduates to pay attention to the world around them. He challenges them to examine the real value of an education, which, as he claims, has very little to do with knowledge and a lot to do with awareness of what surrounds us. His example of a white-collar worker shopping for groceries in a crowded supermarket after a long work day drives home the point that unless graduates really “learn how to think,” they will be, as he puts it, “pissed and miserable” when they confront the daily challenges of life. Towards the end of the speech, Wallace makes a bold claim: that in the daily grind of life, “there is no such thing as atheism; we all worship.” He suggests to the graduates that a compelling reason for us to worship some transcendent being or some other abstract ideal, instead of material goods, beauty, power, or personal intelligence, is that worshiping these things will “eat you alive.” Vocabulary Below are 40 terms that some students may need to know in order to understand David Foster Wallace’s commencement speech, “This is Water.” Define each word as succinctly as possible; define each word as it is used in the speech.