The Computer Nonsense Guide Or the Influence of Chaos on Reason! 14.05.2018 Contents

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The Evolution of Lisp

The Evolution of Lisp

Guy L. Steele Jr., Thinking Machines Corporation, 245 First Street, Cambridge, Massachusetts 02142. Phone: (617) 234-2860. FAX: (617) 243-4444. E-mail: [email protected]

Richard P. Gabriel, Lucid, Inc., 707 Laurel Street, Menlo Park, California 94025. Phone: (415) 329-8400. FAX: (415) 329-8480. E-mail: [email protected]

Abstract. Lisp is the world’s greatest programming language, or so its proponents think. The structure of Lisp makes it easy to extend the language or even to implement entirely new dialects without starting from scratch. Overall, the evolution of Lisp has been guided more by institutional rivalry, one-upsmanship, and the glee born of technical cleverness that is characteristic of the “hacker culture” than by sober assessments of technical requirements. Nevertheless this process has eventually produced both an industrial-strength programming language, messy but powerful, and a technically pure dialect, small but powerful, that is suitable for use by programming-language theoreticians. We pick up where McCarthy’s paper in the first HOPL conference left off. We trace the development chronologically from the era of the PDP-6, through the heyday of Interlisp and MacLisp, past the ascension and decline of special purpose Lisp machines, to the present era of standardization activities. We then examine the technical evolution of a few representative language features, including both some notable successes and some notable failures, that illuminate design issues that distinguish Lisp from other programming languages. We also discuss the use of Lisp as a laboratory for designing other programming languages. We conclude with some reflections on the forces that have driven the evolution of Lisp.

Multiprocessing Contents

Contents

1 Multiprocessing
  1.1 Pre-history
  1.2 Key topics
    1.2.1 Processor symmetry
    1.2.2 Instruction and data streams
    1.2.3 Processor coupling
    1.2.4 Multiprocessor Communication Architecture
  1.3 Flynn’s taxonomy
    1.3.1 SISD multiprocessing
    1.3.2 SIMD multiprocessing
    1.3.3 MISD multiprocessing
    1.3.4 MIMD multiprocessing
  1.4 See also
  1.5 References
2 Computer multitasking
  2.1 Multiprogramming
  2.2 Cooperative multitasking
  2.3 Preemptive multitasking
  2.4 Real time
  2.5 Multithreading
  2.6 Memory protection
  2.7 Memory swapping
  2.8 Programming
  2.9 See also
  2.10 References

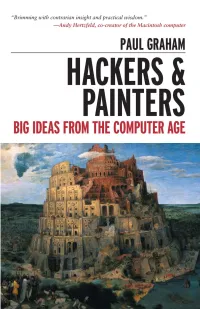

Hackers and Painters: Big Ideas from the Computer

HACKERS & PAINTERS: Big Ideas from the Computer Age. PAUL GRAHAM.

Beijing, Cambridge, Farnham, Köln, Paris, Sebastopol, Taipei, Tokyo.

Copyright © 2004 Paul Graham. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly & Associates books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (safari.oreilly.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected].

Editor: Allen Noren. Production Editor: Matt Hutchinson. Printing History: May 2004: First Edition.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. The cover design and related trade dress are trademarks of O’Reilly Media, Inc. The cover image is Pieter Bruegel’s Tower of Babel in the Kunsthistorisches Museum, Vienna. This reproduction is copyright © Corbis. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein.

ISBN-13: 978-0-596-00662-4 [C]

For mom

Note to readers: The chapters are all independent of one another, so you don’t have to read them in order, and you can skip any that bore you.

1. with Examples of Different Programming Languages Show How Programming Languages Are Organized Along the Given Rubrics: I

AGBOOLA ABIOLA, CSC302, 17/SCI01/007

COMPUTER SCIENCE ASSIGNMENT

1. With examples of different programming languages, show how programming languages are organized along the given rubrics: i. Unstructured, structured, modular, object oriented, aspect oriented, activity oriented and event oriented programming requirement. ii. Based on domain requirements. iii. Based on requirements i and ii above.
2. Give a brief preview of the evolution of programming languages in chronological order.
3. Vividly distinguish between the modular programming paradigm and the object oriented programming paradigm.

Answer

1i). UNSTRUCTURED

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| Assembly Language | | 1949 |
| FORTRAN | John Backus | 1957 |
| COBOL | CODASYL, ANSI, ISO | 1959 |
| JOSS | Cliff Shaw, RAND | 1963 |
| BASIC | John G. Kemeny, Thomas E. Kurtz | 1964 |
| TELCOMP | BBN | 1965 |
| MUMPS | Neil Pappalardo | 1966 |
| FOCAL | Richard Merrill, DEC | 1968 |

STRUCTURED

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| ALGOL 58 | Friedrich L. Bauer and co. | 1958 |
| ALGOL 60 | Backus, Bauer and co. | 1960 |
| ABC | CWI | 1980 |
| Ada | United States Department of Defence | 1980 |
| Accent R | NIS | 1980 |
| Action! | Optimized Systems Software | 1983 |
| Alef | Phil Winterbottom | 1992 |
| DASL | Sun Microsystems Laboratories | 1999-2003 |

MODULAR

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| ALGOL W | Niklaus Wirth, Tony Hoare | 1966 |
| APL | Larry Breed, Dick Lathwell and co. | 1966 |
| ALGOL 68 | A. van Wijngaarden and co. | 1968 |
| AMOS BASIC | François Lionet and Constantin Sotiropoulos | 1990 |
| Alice ML | Saarland University | 2000 |
| Agda | Ulf Norell; Catarina Coquand (1.0) | 2007 |
| Arc | Paul Graham, Robert Morris and co. | 2008 |
| Bosque | Mark Marron | 2019 |

OBJECT-ORIENTED

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| C* | Thinking Machines | 1987 |
| Actor | Charles Duff | 1988 |
| Aldor | Thomas J. Watson Research Center | 1990 |
| Amiga E | Wouter van Oortmerssen | 1993 |
| ActionScript | Macromedia | 1998 |
| BeanShell | JCP | 1999 |
| AngelScript | Andreas Jönsson | 2003 |
| Boo | Rodrigo B. | |

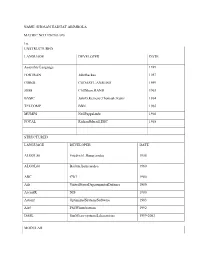

NAME: SHOSAN HADIJAT ABIMBOLA MATRIC NO:17/SCI01/076 1I). UNSTRUCTURED LANGUAGE DEVELOPER DATE Assembly Language 1949 FORTRAN

NAME: SHOSAN HADIJAT ABIMBOLA

MATRIC NO: 17/SCI01/076

1i). UNSTRUCTURED

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| Assembly Language | | 1949 |
| FORTRAN | John Backus | 1957 |
| COBOL | CODASYL, ANSI, ISO | 1959 |
| JOSS | Cliff Shaw, RAND | 1963 |
| BASIC | John G. Kemeny, Thomas E. Kurtz | 1964 |
| TELCOMP | BBN | 1965 |
| MUMPS | Neil Pappalardo | 1966 |
| FOCAL | Richard Merrill, DEC | 1968 |

STRUCTURED

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| ALGOL 58 | Friedrich L. Bauer and co. | 1958 |
| ALGOL 60 | Backus, Bauer and co. | 1960 |
| ABC | CWI | 1980 |
| Ada | United States Department of Defence | 1980 |
| Accent R | NIS | 1980 |
| Action! | Optimized Systems Software | 1983 |
| Alef | Phil Winterbottom | 1992 |
| DASL | Sun Microsystems Laboratories | 1999-2003 |

MODULAR

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| ALGOL W | Niklaus Wirth, Tony Hoare | 1966 |
| APL | Larry Breed, Dick Lathwell and co. | 1966 |
| ALGOL 68 | A. van Wijngaarden and co. | 1968 |
| AMOS BASIC | François Lionet and Constantin Sotiropoulos | 1990 |
| Alice ML | Saarland University | 2000 |
| Agda | Ulf Norell; Catarina Coquand (1.0) | 2007 |
| Arc | Paul Graham, Robert Morris and co. | 2008 |
| Bosque | Mark Marron | 2019 |

OBJECT-ORIENTED

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| C* | Thinking Machines | 1987 |
| Actor | Charles Duff | 1988 |
| Aldor | Thomas J. Watson Research Center | 1990 |
| Amiga E | Wouter van Oortmerssen | 1993 |
| ActionScript | Macromedia | 1998 |
| BeanShell | JCP | 1999 |
| AngelScript | Andreas Jönsson | 2003 |
| Boo | Rodrigo B. de Oliveira | 2003 |
| AmbientTalk | Software Languages Lab, University of Brussels | 2006 |
| Axum | Microsoft | 2009 |

1ii) a. Scientific Domain

| LANGUAGE | DEVELOPER | DATE |
|---|---|---|
| FORTRAN | John Backus and IBM | 1950 |
| APT | Douglas T. Ross | 1956 |
| PL/I | IBM | 1964 |
| AMPL | AMPL Optimization, Inc. | 1966 |
| MATLAB | MathWorks | 1984 |
| J | Kenneth E. Iverson, Roger Hui | 1990 |
| Ch | Harry H. Cheng | 2001 |
| Julia | Jeff Bezanson, Alan Edelman and co. | 2012 |
| Cuneiform | Jörgen Brandt | |

A Lecture Note on Csc 322 Operating System I by Dr

A LECTURE NOTE ON CSC 322 OPERATING SYSTEM I BY DR. S. A. SODIYA

SECTION ONE

1.0 INTRODUCTION TO OPERATING SYSTEMS

1.1 DEFINITIONS OF OPERATING SYSTEMS

An operating system (commonly abbreviated OS or O/S) is the infrastructure software component of a computer system; it is responsible for the management and coordination of activities and the sharing of the limited resources of the computer. An operating system is the set of programs that controls a computer. The operating system acts as a host for applications that are run on the machine. As a host, one of the purposes of an operating system is to handle the details of the operation of the hardware. This relieves application programs from having to manage these details and makes it easier to write applications. Operating systems can be viewed from two points of view: as a resource manager and as an extended machine. From the resource manager point of view, operating systems manage the different parts of the system efficiently; from the extended machine point of view, operating systems provide users with a virtual machine that is more convenient to use.

1.2 HISTORICAL DEVELOPMENT OF OPERATING SYSTEMS

Historically, operating systems have been tightly related to computer architecture, so it is a good idea to study the history of operating systems through the architecture of the computers on which they run. Operating systems have evolved through a number of distinct phases or generations, which correspond roughly to the decades.

The 1940's - First Generation

The earliest electronic digital computers had no operating systems. Machines of the time were so primitive that programs were often entered one bit at a time on rows of mechanical switches (plug boards).

Computer History a Look Back Contents

Computer History: A look back

Contents

1 Computer
  1.1 Etymology
  1.2 History
    1.2.1 Pre-twentieth century
    1.2.2 First general-purpose computing device
    1.2.3 Later analog computers
    1.2.4 Digital computer development
    1.2.5 Mobile computers become dominant
  1.3 Programs
    1.3.1 Stored program architecture
    1.3.2 Machine code
    1.3.3 Programming language
    1.3.4 Fourth Generation Languages
    1.3.5 Program design
    1.3.6 Bugs
  1.4 Components
    1.4.1 Control unit
    1.4.2 Central processing unit (CPU)
    1.4.3 Arithmetic logic unit (ALU)
    1.4.4 Memory
    1.4.5 Input/output (I/O)
    1.4.6 Multitasking
    1.4.7 Multiprocessing

COMPUTER ORGANIZATION Subject Code: 10CS46

COMPUTER ORGANIZATION

Subject Code: 10CS46

Dept of CSE, SJBIT (www.vtucs.com)

PART – A

UNIT - 1 (6 Hours)
Basic Structure of Computers: Computer Types, Functional Units, Basic Operational Concepts, Bus Structures, Performance – Processor Clock, Basic Performance Equation, Clock Rate, Performance Measurement, Historical Perspective.
Machine Instructions and Programs: Numbers, Arithmetic Operations and Characters, Memory Location and Addresses, Memory Operations, Instructions and Instruction Sequencing.

UNIT - 2 (7 Hours)
Machine Instructions and Programs contd.: Addressing Modes, Assembly Language, Basic Input and Output Operations, Stacks and Queues, Subroutines, Additional Instructions, Encoding of Machine Instructions.

UNIT - 3 (6 Hours)
Input/Output Organization: Accessing I/O Devices, Interrupts – Interrupt Hardware, Enabling and Disabling Interrupts, Handling Multiple Devices, Controlling Device Requests, Exceptions, Direct Memory Access, Buses.

UNIT - 4 (7 Hours)
Input/Output Organization contd.: Interface Circuits, Standard I/O Interfaces – PCI Bus, SCSI Bus, USB.

PART – B

UNIT - 5 (7 Hours)
Memory System: Basic Concepts, Semiconductor RAM Memories, Read Only Memories, Speed, Size, and Cost, Cache Memories – Mapping Functions, Replacement Algorithms, Performance Considerations, Virtual Memories, Secondary Storage.

UNIT - 6 (7 Hours)
Arithmetic: Addition and Subtraction of Signed Numbers, Design of Fast Adders, Multiplication of Positive Numbers, Signed Operand Multiplication, Fast Multiplication, Integer Division, Floating-point Numbers and Operations.

UNIT - 7 (6 Hours)
Basic Processing Unit: Some Fundamental Concepts, Execution of a Complete Instruction, Multiple Bus Organization, Hard-wired Control, Microprogrammed Control.

UNIT - 8 (6 Hours)
Multicores, Multiprocessors, and Clusters: Performance, The Power Wall, The Switch from Uniprocessors to Multiprocessors, Amdahl’s Law, Shared Memory Multiprocessors, Clusters and other Message Passing Multiprocessors, Hardware Multithreading, SISD, MIMD, SIMD, SPMD, and Vector.

Redalyc.Optimization of Operating Systems Towards Green Computing

International Journal of Combinatorial Optimization Problems and Informatics. E-ISSN: 2007-1558. [email protected] México.

Appasami, G; Suresh Joseph, K. Optimization of Operating Systems towards Green Computing. International Journal of Combinatorial Optimization Problems and Informatics, vol. 2, no. 3, September-December, 2011, pp. 39-51. Morelos, México.

Available in: http://www.redalyc.org/articulo.oa?id=265219635005

Scientific Information System. Network of Scientific Journals from Latin America, the Caribbean, Spain and Portugal. Non-profit academic project, developed under the open access initiative.

© International Journal of Combinatorial Optimization Problems and Informatics, Vol. 2, No. 3, Sep-Dec 2011, pp. 39-51, ISSN: 2007-1558.

Appasami.G, Assistant Professor, Department of CSE, Dr. Pauls Engineering College, affiliated to Anna University – Chennai, Villupuram, Tamilnadu, India. E-mail: [email protected]

Suresh Joseph.K, Assistant Professor, Department of Computer Science, Pondicherry University, Pondicherry, India. E-mail: [email protected]

Abstract. Green Computing is one of the emerging computing technologies in the field of computer science engineering and technology to provide Green Information Technology (Green IT / GC). It is mainly used to protect the environment, optimize energy consumption, and keep the environment green. Green computing also refers to environmentally sustainable computing. In recent years, companies in the computer industry have come to realize that going green is in their best interest, both in terms of public relations and reduced costs.

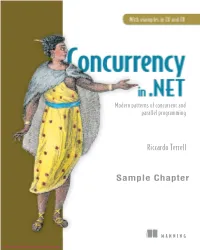

Concurrency in .NET: Modern Patterns of Concurrent and Parallel

Modern patterns of concurrent and parallel programming. Riccardo Terrell. Sample Chapter. MANNING. www.itbook.store/books/9781617292996

Chapter dependency graph

Chapter 1: Why concurrency and definitions?; Pitfalls of concurrent programming
Chapter 2: Solving problems by composing simple solutions; Simplifying programming with closures
Chapter 3: Immutable data structures; Lock-free concurrent code
Chapter 4: Big data parallelism; The Fork/Join pattern
Chapter 5: Isolating and controlling side effects
Chapter 6: Functional reactive programming; Querying real-time event streams
Chapter 7: Composing parallel operations; Querying real-time event streams
Chapter 8: Parallel asynchronous computations; Composing asynchronous executions
Chapter 9: Cooperating asynchronous computations; Extending asynchronous F# computational expressions
Chapter 10: Task combinators; Async combinators and conditional operators
Chapter 11: Agent (message-passing) model
Chapter 12: Composing asynchronous TPL Dataflow blocks; Concurrent Producer/Consumer pattern
Chapter 13: Reducing memory consumption; Parallelizing dependent tasks
Chapter 14: Scalable applications using CQRS pattern

Concurrency in .NET: Modern patterns of concurrent and parallel programming, by Riccardo Terrell. Chapter 1. Copyright 2018 Manning Publications.

brief contents

Part 1 Benefits of functional programming applicable to concurrent programs
1 ■ Functional

1. Declarative Programming

1. DECLARATIVE PROGRAMMING
  1.1 NEW LANGUAGES, OLD LOGIC
    1.1.1 Church's Lambda Calculus and Gentzen's Natural Deduction
    1.1.2 The impact of logic
    1.1.3 Theorem provers
    1.1.4 Trust and security
  1.2 INTRODUCTION TO FUNCTIONAL PROGRAMMING
    1.2.1 What is functional programming?
      1.2.1.1 Functional model
      1.2.1.2 Higher-order functions
      1.2.1.3 Type inference systems and polymorphism
      1.2.1.4 Lazy evaluation
    1.2.2 What is good about functional languages?
    1.2.3 Functional versus imperative
    1.2.4 Questions about functional programming
    1.2.5 Another aspect: the software crisis
2. HASKELL (based on an article by Simon Peyton Jones)
  2.1 INTRODUCTION

Are Central to Operating Systems As They Provide an Efficient Way for the Operating System to Interact and React to Its Environment

www.onlineeducation.bharatsevaksamaj.net www.bssskillmission.in

OPERATING SYSTEMS DESIGN

Topic Objective: At the end of this topic the student will be able to understand:
the operating system
program execution
interrupts
supervisor mode
memory management
virtual memory
multitasking

Definition/Overview:

An operating system: An operating system (commonly abbreviated to either OS or O/S) is an interface between hardware and applications; it is responsible for the management and coordination of activities and the sharing of the limited resources of the computer. The operating system acts as a host for applications that are run on the machine.

Program execution: The operating system acts as an interface between an application and the hardware.

Interrupts: Interrupts are central to operating systems as they provide an efficient way for the operating system to interact and react to its environment.

Supervisor mode: Modern CPUs support something called dual mode operation. CPUs with this capability use two modes: protected mode and supervisor mode, which allow certain CPU functions to be controlled and affected only by the operating system kernel. Here, protected mode does not refer specifically to the 80286 (Intel's x86 16-bit microprocessor) CPU feature, although its protected mode is very similar to it.

Memory management: Among other things, a multiprogramming operating system kernel must be responsible for managing all system memory which is currently in use by programs.

www.bsscommunitycollege.in www.bssnewgeneration.in www.bsslifeskillscollege.in

Key Points: 1.