The Impact of Video Compression on Remote Cardiac Pulse Measurement Using Imaging Photoplethysmography

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Wen-Mei William Hwu

Wen-mei William Hwu PERSONAL INFORMATION Office: Home: Coordinated Science Laboratory 2709 Bayhill Drive 1308 West Main Street, Champaign, Illinois, 61822-7988 Urbana, Illinois, 61801-2307 (217) 359-8984 (217) 244-8270 (217) 333-5579 (FAX) Email: [email protected] EDUCATION Ph.D., Computer Science, 1987, University of California, Berkeley B.S., Electrical Engineering, 1983, National Taiwan University, Taiwan CURRENT POSITION Professor and Sanders III Advanced Micro Devices, Inc., Endowed Chair, Electrical and Computer Engineering; Research Professor of Coordinated Science Laboratory, University of Illinois, Urbana-Champaign (UIUC). Chief Technology Officer and Co-Founder, MulticoreWare, Sunnyvale, California, St. Louis, Missouri, Champaign, Illinois, Chennai, India, Chang-Chun and Beijing, China. Chief Scientist, Parallel Computing Institute, University of Illinois at Urbana-Champaign Board Member, Personify, Inc., Champaign, IL PROFESSIONAL EXPERIENCE September 2016 to present Co-Director (with Jinjun Xiong of IBM) of the IBM-Illinois Center for Cognitive Computing Systems Research, funded by IBM at a total of $8M for five years. The center funds a total of 30+ researchers working on hardware, software, and algorithms for building cognitive computing systems for innovative AI applications. June 2010 to present Co-Director (with Mateo Valero) of the PUMPS Summer School in Barcelona jointly offered by UIUC and the Universitat Politècnica de Catalunya. The summer school has been attended by about 100 faculty and graduate students worldwide every year to study the advanced parallel algorithm techniques for manycore computing systems. June 2008 to present Principal Investigator of the UIUC CUDA Center of Excellence, funded by NVIDIA at over $2.0 M in cash and equipment.

OpenCL BOF Aug14

Neil Trevett, Vice President Mobile Ecosystem at NVIDIA, President of Khronos and Chair of the OpenCL Working Group. SIGGRAPH, Vancouver 2014 © Copyright Khronos Group 2014 - Page 1

Speakers
Neil Trevett, OpenCL Chair, VP NVIDIA (NVIDIA): Introduction to Khronos and OpenCL Ecosystem
Ralph Potter, Research Engineer (Codeplay): SPIR
Luke Iwanski, Games Technology Programmer (Codeplay): SYCL
Laszlo Kishonti, CEO (Kishonti): Compute Benchmarking
Neil Trevett, OpenCL Chair, VP NVIDIA (NVIDIA): Wrap-up and Questions

OpenCL – Portable Heterogeneous Computing
• Portable Heterogeneous programming of diverse compute resources
- Targeting supercomputers -> embedded systems -> mobile devices
• One code tree can be executed on CPUs, GPUs, DSPs and hardware
- Dynamically interrogate system load and balance work across available processors
• OpenCL = Two APIs and C-based Kernel language
- Platform Layer API to query, select and initialize compute devices
- Kernel language - Subset of ISO C99 + language extensions
- C Runtime API to build and execute kernels across multiple devices
[Figure: OpenCL kernel code dispatched to GPU, DSP, CPU, CPU and HW]

OpenCL Roadmap
• What markets has OpenCL been aimed at?
• What problems is OpenCL solving?
• How will OpenCL need to adapt in the future?
[Figure: market columns listing HPC, Desktop, Mobile, Web, FPGA, Embedded and Safety Critical, labelled "Discussion Focus for New Capabilities"; the feature list begins "3-component vectors", "Shared Virtual" and is cut off]

Parallel Performance and Energy Efficiency of Modern Video Encoders on Multithreaded Architectures

PARALLEL PERFORMANCE AND ENERGY EFFICIENCY OF MODERN VIDEO ENCODERS ON MULTITHREADED ARCHITECTURES R. Rodríguez-Sánchez1, F. D. Igual2, J. L. Martínez3, R. Mayo1, E. S. Quintana-Ortí1 1Depto. de Ingeniería y Ciencia de Computadores, Universidad Jaume I (UJI), Castellón, Spain e-mails: frarodrig,mayo,[email protected] 2Depto. de Arquitectura de Computadores y Automática, Universidad Complutense de Madrid, Madrid, Spain e-mail: fi[email protected] 3Albacete Research Institute of Informatics, University of Castilla-La Mancha, Albacete, Spain e-mail: [email protected]

ABSTRACT In this paper we evaluate four mainstream video encoders: H.264/MPEG-4 Advanced Video Coding, Google’s VP8, High Efficiency Video Coding, and Google’s VP9, studying conventional figures-of-merit such as performance in terms of encoded frames per second, and encoding efficiency in both PSNR and bit-rate of the encoded video sequences. Additionally, two platforms equipped with a large number of cores, representative of current multicore architectures for high-end servers, and equipped with a wattmeter allow us to assess the quality of these video encoders in terms of parallel scalability and energy consumption, which is well-founded given the significant levels of thread concurrency and the impact of the

[Introduction, excerpt begins mid-sentence] improve the encoding efficiency by introducing new tools or by improving the existing ones. Previous comparative analyses of video encoders mainly focus on execution time and encoding efficiency. However, the considerable levels of hardware parallelism in current multicore servers, combined with the support for significant thread-level concurrency in these architectures, clearly ask for a detailed evaluation of the parallelism of modern video encoders. In particular, the number of threads/cores employed in video encoders in general affects the execution time, but may also influence the encoding efficiency more subtly, as some encoding dependencies can be broken in a parallel execution.
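
The PSNR figure of merit named in the abstract above can be illustrated with a minimal sketch. It assumes 8-bit samples (peak value 255) passed as flat lists; the function name `psnr` and its parameters are illustrative and do not come from the paper, which may compute PSNR per plane or per frame in a different way.

```python
import math


def psnr(reference, decoded, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length sample lists.

    Hypothetical helper for illustration: assumes 8-bit samples by default.
    """
    if len(reference) != len(decoded) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between the original and the decoded samples.
    mse = sum((a - b) ** 2 for a, b in zip(reference, decoded)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: PSNR is unbounded
    return 10 * math.log10(max_value ** 2 / mse)
```

A higher value means the decoded samples are closer to the reference, so encoders are usually compared by PSNR at a matched bit-rate rather than by PSNR alone.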

Efficient Multi-Bitrate HEVC Encoding for Adaptive Streaming

EFFICIENT MULTI-BITRATE HEVC ENCODING FOR ADAPTIVE STREAMING Deepthi Nandakumar, Sagar Kotecha, Kavitha Sampath, Pradeep Ramachandran, Tom Vaughan MulticoreWare Inc.

ABSTRACT Adaptive bitrate streaming is a critical feature in internet video that significantly improves the viewer experience by customizing video stream quality to the viewer device's capability and connectivity. Encoding the source content at multiple quality tiers or bitrates is extremely demanding for post-production houses, studios, and content delivery networks. This paper describes an intelligent multi-bitrate encoder, based on the High Efficiency Video Coding (HEVC)/H.265 standard that encodes a single title to multiple bitrates at significant performance gains and no compression efficiency loss, as compared to standalone single bitrate encoder instances. We first describe the threading infrastructure of x265, and demonstrate its ability to dynamically adapt to varying degrees of parallelism in hardware. We then describe the key architectural design of a multi-bitrate encoder, including thread synchronization challenges across encoder instances. We also discuss the analysis data shared across different quality tiers, that is carefully chosen to eliminate loss of compression efficiency compared to a single bitrate encoder instance. Finally, we show the high performance gains achieved by the multi-encoder, and demonstrate the feasibility of simultaneous encoding to multiple bitrates with negligible loss of compression efficiency.

INTRODUCTION Over-The-Top (OTT) content streaming is poised to grow exponentially in the coming years, driven by the consumer’s need for a rich and high quality viewing experience. Recent projections indicate that Internet delivery of video will consume 80 to 90% of all Internet bandwidth by the year 2019 [1] [2].

SOC Programming Tutorial Hot Chips 2012 Neil Trevett Khronos President

SOC Programming Tutorial Hot Chips 2012 Neil Trevett Khronos President © Copyright Khronos Group 2012 | Page 1

Welcome!
• An exploration of SOC capabilities from the programmer’s perspective
- How is mobile silicon interfacing to mobile apps?
• Overview of acceleration APIs on today’s mobile OS
- And how they can be used to optimize performance and power
• Focus on mobile innovation hotspots
- Vision and gesture processing, Augmented Reality, Sensor Fusion, Computational Photography, 3D Graphics
• Highlight silicon-level opportunities and challenges still to be solved
- While exploring the state of the art in mobile programming
SOC = ‘System On Chip’, minus memory and some peripherals

Speakers (Session; Speaker; Company; Title)
Connecting Mobile SOC Hardware to Apps; Neil Trevett; Khronos; Khronos President and NVIDIA VP of Mobile Content
Break
Camera and Video; Sean Mao; ArcSoft; VP Marketing, Advanced Imaging Technologies
Vision and Gesture Processing; Itay Katz; Eyesight; Co-Founder & CTO
Augmented Reality; Ben Blachnitzky; Metaio; Director of R&D
Sensor Fusion; Jim Steele; Sensor Platforms; VP Engineering
3D Gaming; Daniel Wexler; the11ers; CXO
Panel Session; All Speakers

Khronos Connects Software to Silicon
• Khronos APIs define processor acceleration capabilities
- Graphics, video, audio, compute, vision and sensor processing
APIs developed today define the functionality of platforms and devices tomorrow

APIs BY the Industry FOR the

Heterogeneous Computing with OpenCL 2.0, Third Edition

Heterogeneous Computing with OpenCL 2.0 Third Edition David Kaeli Perhaad Mistry Dana Schaa Dong Ping Zhang AMSTERDAM • BOSTON • HEIDELBERG • LONDON NEW YORK • OXFORD • PARIS • SAN DIEGO SAN FRANCISCO • SINGAPORE • SYDNEY • TOKYO Morgan Kaufmann is an imprint of Elsevier Acquiring Editor: Todd Green Editorial Project Manager: Charlie Kent Project Manager: Priya Kumaraguruparan Cover Designer: Matthew Limbert 225 Wyman Street, Waltham, MA 02451, USA Copyright © 2015, 2013, 2012 Advanced Micro Devices, Inc. Published by Elsevier Inc. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from the publisher. Details on how to seek permission, further information about the Publisher’s permissions policies and our arrangements with organizations such as the Copyright Clearance Center and the Copyright Licensing Agency, can be found at our website: www.elsevier.com/permissions. This book and the individual contributions contained in it are protected under copyright by the Publisher (other than as may be noted herein). Notices Knowledge and best practice in this field are constantly changing. As new research and experience broaden our understanding, changes in research methods, professional practices, or medical treatment may become necessary. Practitioners and researchers must always rely on their own experience and knowledge in evaluating and using any information, methods, compounds, or experiments described herein. In using such information or methods they should be mindful of their own safety and the safety of others, including parties for whom they have a professional responsibility.

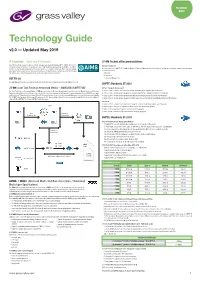

Technology Guide V2.0 — Updated May 2019

TECHNICAL BRIEF Technology Guide v2.0 (Updated May 2019)

IP Standards – Video over IP Networks
The AIMS Roadmap is based on open standards and specifications established by SMPTE, AMWA, AES, the IETF and other recognized bodies. It is aligned with the JT-NM Roadmap, developed by the AMWA, EBU, SMPTE and VSF in order to provide guidelines for broadcast operations to adopt IP technology. AIMS’ own membership participates in these organizations. It is precisely because our industry enjoys a robust set of standards bodies that AIMS seeks to foster the adoption of this work as best practice for the industry.

JT-NM Technical Recommendations
TR-1001-1:2018 v1.0: Recommendations for SMPTE ST 2110 Media Nodes in Engineered Networks that easily integrate equipment from multiple vendors with a minimum of human interaction.
- Networks
- Registration
- Connection Management

VSF TR-03
An early standard for audio, video and metadata over IP, TR-03 has been largely superseded by SMPTE ST 2110.

SMPTE Standards
ST 2022: MPEG-2 Transport stream over IP
ST 2022-1: 2007 - Forward Error Correction for Real-Time Video/Audio Transport Over IP Networks
ST 2022-2: 2007 - Unidirectional Transport of Constant Bit Rate MPEG-2 Transport Streams on IP Networks

JT-NM (Joint Task Force on Networked Media) – AMWA/EBU/SMPTE/VSF
The Joint Task Force on Networked Media (JT-NM) was formed by the European Broadcasting Union, the Society of Motion Picture and Television Engineers and the Video Services Forum in the context of the transition from purpose-built broadcast equipment and interfaces (SDI, AES, cross point switcher, etc.) to IT-based packet networks (Ethernet, IP, servers, storage, cloud, etc.) that is currently taking place in the professional media industry.

Khronos Overview Dec14

Khronos Overview December 2014 Neil Trevett Khronos President NVIDIA Vice President Mobile Ecosystem @neilt3d © Copyright Khronos Group 2014 - Page 1

Why Do We Need Standards?
• Standards are interoperability interfaces
- They enable communities to communicate and independently innovate
• Compelling user experiences can be created inexpensively to build mass markets
- Don’t slow growth with functionality fragmentation that adds no value
• E.g. Wireless and IO standards
- GSM/EDGE, UMTS/HSPA, LTE, IEEE 802.11, Bluetooth, USB …
Standards drive mobile market growth by expanding device capabilities

Khronos Connects Software to Silicon
• Open Consortium creating OPEN STANDARD APIs for hardware acceleration
- Any company is welcome – many international members
• Defining the low-level silicon interfaces needed on every platform
- Graphics, compute, rich media, vision, sensor and camera processing
• Commitment to ROYALTY-FREE specifications for use by the whole industry
- State-of-the art IP framework to protect members AND the standards
• Non-profit organization
- Membership fees cover operating and engineering expenses
• Create and publish API Specifications AND Conformance Tests
- For cross-vendor portability
• Strong industry momentum
- 100s of man years invested by industry experts
Well over a BILLION people use Khronos APIs Every Day…

BOARD OF PROMOTERS Over 100 members worldwide Any company or university welcome to join

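Khronos Overview, December 2014 (Chinese edition), Neil Trevett, Khronos President, NVIDIA Vice President Mobile Ecosystem, @neilt3d

Khronos Overview December 2014 Neil Trevett Khronos President NVIDIA Vice President Mobile Ecosystem @neilt3d © Copyright Khronos Group 2014 - Page 1

Why do we need standards?
• Standards are interoperability interfaces
- They enable communities to communicate and innovate independently
• Build stronger user experiences at low cost to create huge markets
- Don't slow growth with functionality fragmentation that adds no value
• E.g. wireless and IO standards
- GSM/EDGE, UMTS/HSPA, LTE, IEEE 802.11, Bluetooth, USB …
Standards drive market growth by expanding device capabilities

Khronos connects software to silicon
• Open consortium creating open standard APIs for hardware acceleration
- All companies are welcome to join – many international member companies
• Defining the low-level silicon interfaces needed on every platform
- Graphics, compute, multimedia, vision, sensor and camera processing
• Commitment to royalty-free specifications for the whole industry
- A continually updated IP framework protects member interests and the standards
• Non-profit organization
- Membership fees are used only to cover operating and engineering expenses
• Create and publish API specifications and conformance tests
- For cross-vendor portability
• Strong industry commitment
- Hundreds of person-years invested by industry experts
Billions of people use Khronos APIs every day

BOARD OF PROMOTERS Over 100 members worldwide Any company or university welcome to join

http://accelerateyourworld.org/

Standards in the real world: when is the best time to standardize?
Too early: a Darwinian industry is still experimenting with what works and what does not. Designed by the industry: experimentation and design are slow and unfocused. A bad standard stifles innovation and leads to commoditization.
Too late: differentiation between vendors adds no value and fragmentation keeps growing, while the goals of standardization become steadily clearer. Defined by the industry: the industry agrees on what to standardize and jointly defines an efficient solution across viewpoints. A good standard enables implementation innovation.
A proven process accelerates the growth of an efficient ecosystem. A proven IP framework protects member IP and the specifications themselves in the market. An industry forum brings all companies together to innovate efficiently in silicon technology.

The value of participating in Khronos
• Learn about future industry technology trends before product development begins
• Have a voice in the creation of industry standards so that they meet your own business needs
• Develop products while the specification is being drafted and reach the market first
• Keep products in step with global market needs and trends
Member companies ship products earlier than non-member companies.
Timeline: gather industry requirements for future silicon acceleration; draft specifications are open only to Khronos members; specifications and conformance tests are released publicly; non-member companies ship products.
Practice has shown that the Khronos standardization process quickly builds consensus on future hardware acceleration capabilities and effectively creates new market opportunities.

IP policy, adoption & conformance, working group process
Note: the following is for reference only. For legal details, refer to the Khronos Membership Agreement, the Khronos Adopters Agreement and the Khronos trademark guidelines (Khronos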

HEVC: Rating the Contenders

HEVC: RATING THE CONTENDERS Jan Ozer www.streaminglearningcenter.com [email protected]/ 276-235-8542 @janozer

Agenda
• Who are the competitors?
• Setting the ground rules
• Results
• Conclusions

Who Was Invited
• Companies asked to participate
• Intel – distributing through OEMs only
• Elemental – declined to participate
• Ittiam – declined to participate – no bandwidth
• Beamr/Vanguard – declined – bad timing
• Vantrix – f.265 – no real effort behind technology
• NTT – declined, primarily sell custom products to specific OEMs
• MainConcept – yes!
• X265 – publicly available – yes!

What I tested
• Codecs
- x264 as baseline
- Main Concept HEVC
- x265
- VP9 (Google)
- Bitmovin AV1 (more later)
• Focus
- VOD
- Encoding time specific
- 720p, 1080p, 4K
- Five profiles each
- Four video files: Netflix Meridian, Blender TOS, Blender Sintel, New test clip

Basic Encoding Parameters
Data Rate to +/- 5%

Encoding Details – x265
• Encoded in x265 (not FFmpeg)
• 8-bit version (Main)
• Simple command string created by x265 MulticoreWare
• PSNR/SSIM tuning enabled for all tests
x265_8bit.exe --input TOSN_720p.yuv --input-res 1280x720 --fps 24 --keyint 48 --min-keyint 48 --bframes 3 --ref 6 --bitrate 2000 --vbv-maxrate 2200 --vbv-bufsize 2000 --preset medium --output TOSN_720p_2M.hevc --no-scenecut --tune psnr --psnr --ssim --pass 1 --slow-firstpass
x265_8bit.exe --input TOSN_720p.YUV --input-res 1280x720 --fps 24 --keyint 48 --min-keyint 48 --bframes 3 --ref 6 --bitrate 2000 --vbv-maxrate 2200 --vbv-bufsize 2000 --preset medium --output TOSN_720p_2M.hevc --no-scenecut --tune psnr --psnr --ssim --pass 2

A Note About Tuning
• Tuning doesn’t “tune” the codec to produce better PSNR/SSIM scores.
• Rather, “They simply turn off quality enhancement algorithms that are known to degrade objective measurements, although we also know that they improve subjective visual quality.
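
The two x265 command lines in the excerpt above share every option and differ only in the pass number and the extra `--slow-firstpass` flag on the first pass. A minimal sketch that makes this explicit by building both argument lists from one shared list follows. Every flag and value is copied from the slide; the names `COMMON_ARGS` and `two_pass_commands` are hypothetical, the input file name uses the lowercase `.yuv` spelling for both passes, and the sketch only builds the lists without running the encoder.

```python
# Options shared by both passes, copied from the slide's command lines.
COMMON_ARGS = [
    "--input", "TOSN_720p.yuv",
    "--input-res", "1280x720",
    "--fps", "24",
    "--keyint", "48",
    "--min-keyint", "48",
    "--bframes", "3",
    "--ref", "6",
    "--bitrate", "2000",
    "--vbv-maxrate", "2200",
    "--vbv-bufsize", "2000",
    "--preset", "medium",
    "--output", "TOSN_720p_2M.hevc",
    "--no-scenecut",
    "--tune", "psnr",
    "--psnr",
    "--ssim",
]


def two_pass_commands(encoder="x265_8bit.exe"):
    """Return (first_pass, second_pass) argument lists for a two-pass encode."""
    first = [encoder, *COMMON_ARGS, "--pass", "1", "--slow-firstpass"]
    second = [encoder, *COMMON_ARGS, "--pass", "2"]
    return first, second


if __name__ == "__main__":
    for command in two_pass_commands():
        print(" ".join(command))
```

Keeping the shared options in one place avoids the two passes drifting apart, which matters because the second pass relies on statistics gathered by the first with the same settings.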

Performance of HEVC Discrete Cosine and Sine Transforms GPU

Vol. 9(33), Jul. 2019, PP. 4302-4309 Performance of HEVC Discrete Cosine and Sine Transforms GPU using CUDA Moustafa Masoumi and HamidReza Ahmadifar University of Guilan, Engineering department, Rasht, Iran Phone Number: +98-935-9301109 *Corresponding Author's E-mail: [email protected]

Abstract High Efficiency Video Coding (HEVC) is the most recent video encoding standard that achieves much higher compression while maintaining the same quality as its predecessor x264. This is done by a variety of upgrades, for example a more diverse transform block size, while some fundamental methods remain unchanged. Discrete Cosine Transform (DCT) along with an alternate Discrete Sine Transform (DST) is used by HEVC before quantization to achieve a lossless encoding of transform blocks. Both of these transforms are implemented in HEVC encoder using CPU. In this paper we implement these transforms on GPU using CUDA and analyze their performance compared to the default implementation. Using a batched kernel call, 48% and 13% improvement is achieved on Inverse 32x32 and 16x16 DCT transform respectively compared to SIMD, while our GPU implementation outperforms other CPU implementations on every transform. We also present a benchmark software for this very purpose which we will use to measure their performance in real time.

Keywords: video coding; High Efficiency Video Coding (HEVC); Discrete Sine Transform; Discrete Cosine Transform; Graphics Processing Unit (GPU); Compute Unified Device Architecture (CUDA)

1. Introduction As popularity of streaming services increases at an exponential rate while providers struggle to deliver higher resolution videos over limited bandwidth, better encoding software that can achieve a good compression, without sacrificing quality becomes a necessity.
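
As a rough illustration of the transform the abstract above refers to, the following sketch implements a one-dimensional orthonormal DCT-II and its inverse in floating point. This is an assumption-laden teaching example: HEVC itself specifies integer approximations applied to two-dimensional blocks, and the paper's CPU and CUDA implementations are not reproduced here. The function names `dct` and `idct` are hypothetical.

```python
import math


def _scale(k, n):
    # Orthonormal scaling: the DC term uses sqrt(1/n), all others sqrt(2/n).
    return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)


def dct(samples):
    """Orthonormal 1-D DCT-II of a list of numbers (floating point)."""
    n = len(samples)
    return [
        _scale(k, n)
        * sum(
            samples[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
            for i in range(n)
        )
        for k in range(n)
    ]


def idct(coeffs):
    """Inverse of dct(): reconstructs the samples from the coefficients."""
    n = len(coeffs)
    return [
        sum(
            _scale(k, n) * coeffs[k] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
            for k in range(n)
        )
        for i in range(n)
    ]
```

A flat input concentrates all of its energy in the first (DC) coefficient, which is why smooth blocks compress well once the remaining coefficients are quantized.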